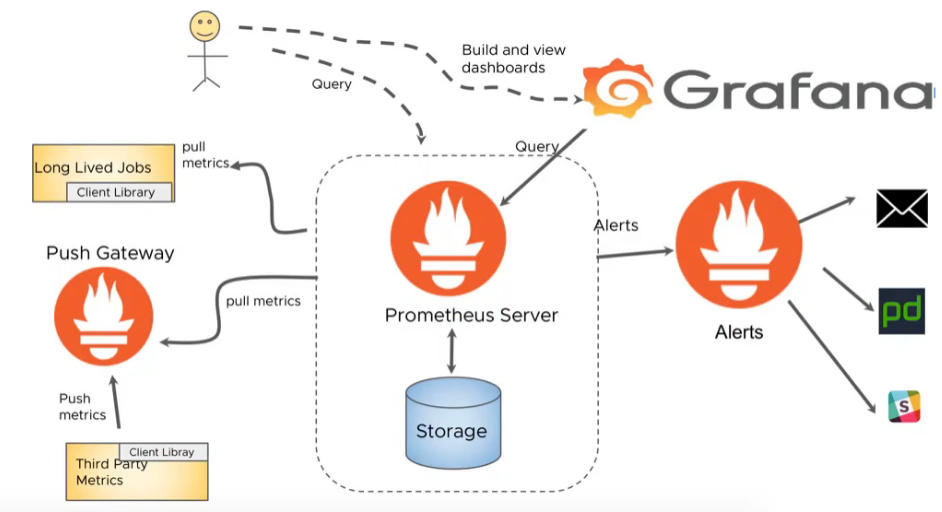

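Deploying a Prometheus environment with the Operator and from binaries

The Operator-based deployment relies on ready-made YAML manifests and deploys Prometheus server, Alertmanager, Grafana, node-exporter, and the other components into a Kubernetes cluster in one batch.

GitHub project: https://github.com/prometheus-operator/kube-prometheus

Choose the release branch that matches the Kubernetes version you run:

Clone the project

root@master1:~# git clone -b release-0.11 https://github.com/prometheus-operator/kube-prometheus.git

Replace the Google registry images with Alibaba Cloud mirrors

root@master1:~# cd kube-prometheus/

root@master1:~/kube-prometheus# grep "k8s.gcr.io" ./manifests/ -R   # find the Google registry images that need replacing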

./manifests/kubeStateMetrics-deployment.yaml: image: k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.5.0

./manifests/prometheusAdapter-deployment.yaml: image: k8s.gcr.io/prometheus-adapter/prometheus-adapter:v0.9.1

Point the kube-state-metrics image at the Alibaba Cloud mirror

root@master1:~/kube-prometheus# sed -i 's#k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.5.0#registry.cn-hangzhou.aliyuncs.com/liangxiaohui/kube-state-metrics:v2.5.0#g' ./manifests/kubeStateMetrics-deployment.yaml

Point the prometheus-adapter image at the Alibaba Cloud mirror

root@master1:~/kube-prometheus# sed -i 's#k8s.gcr.io/prometheus-adapter/prometheus-adapter:v0.9.1#registry.cn-hangzhou.aliyuncs.com/liangxiaohui/prometheus-adapter:v0.9.1#g' ./manifests/prometheusAdapter-deployment.yaml
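Both sed calls follow the same pattern, so they could be folded into one helper. The following is only a sketch: the function name rewrite_image is hypothetical, and it assumes that every affected image has the form k8s.gcr.io/<project>/<name>:<tag> and that the mirror namespace used above hosts an image with the same name and tag.

```shell
# Mirror namespace taken from the sed commands above.
MIRROR="registry.cn-hangzhou.aliyuncs.com/liangxiaohui"

# Hypothetical helper: reads manifest text on stdin and rewrites
# k8s.gcr.io/<project>/<name>:<tag> to $MIRROR/<name>:<tag>.
rewrite_image() {
  sed -E "s#k8s\.gcr\.io/[^/]+/([^/:]+:[^[:space:]]+)#${MIRROR}/\1#g"
}

# Dry run on one line of the grep output before touching any file.
echo "image: k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.5.0" | rewrite_image
```

Piping a manifest through the function into a new file and diffing it against the original is a cautious way to review the change before overwriting anything.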

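Remove the NetworkPolicy manifests of the Prometheus components

v0.11.0 adds a NetworkPolicy for every component. Because the whole directory is created with a single kubectl apply -f, these files have to be moved out by hand first; otherwise the policies are created along with everything else and restrict which clients can reach the services.

root@master1:~/kube-prometheus/manifests# mv *networkPolicy.yaml /tmp

Edit the Prometheus Service so that Prometheus server is reachable through a NodePort

root@master1:~/kube-prometheus# vim manifests/prometheus-service.yaml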

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.36.1
  name: prometheus-k8s
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: web
    port: 9090
    targetPort: web
    nodePort: 39090
  - name: reloader-web
    port: 8080
    targetPort: reloader-web
  selector:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
  sessionAffinity: ClientIP

Edit the Grafana Service so that Grafana is reachable through a NodePort

root@master1:~/kube-prometheus/manifests# cat grafana-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: grafana
    app.kubernetes.io/name: grafana
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 8.5.5
  name: grafana
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: http
    port: 3000
    targetPort: http
    nodePort: 40000
  selector:
    app.kubernetes.io/component: grafana
    app.kubernetes.io/name: grafana
    app.kubernetes.io/part-of: kube-prometheus
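One thing worth checking before applying these Services: kube-apiserver only accepts NodePort values inside its configured range, which defaults to 30000-32767 unless --service-node-port-range widens it. The sketch below tests the ports used in this article against that default; the helper name is hypothetical, and it assumes nothing about how the author's cluster was configured.

```shell
# Default NodePort range of kube-apiserver unless --service-node-port-range overrides it.
NODEPORT_MIN=30000
NODEPORT_MAX=32767

# Hypothetical helper: succeeds when the port lies inside the default range.
in_default_nodeport_range() {
  [ "$1" -ge "$NODEPORT_MIN" ] && [ "$1" -le "$NODEPORT_MAX" ]
}

# NodePort values that appear in the Services of this article.
for port in 39090 40000 30090; do
  if in_default_nodeport_range "$port"; then
    echo "$port: inside the default range"
  else
    echo "$port: outside the default range"
  fi
done
```

If a port falls outside the range the cluster accepts, the Service is rejected on apply, so either pick a port inside the range or widen the range on the API server.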

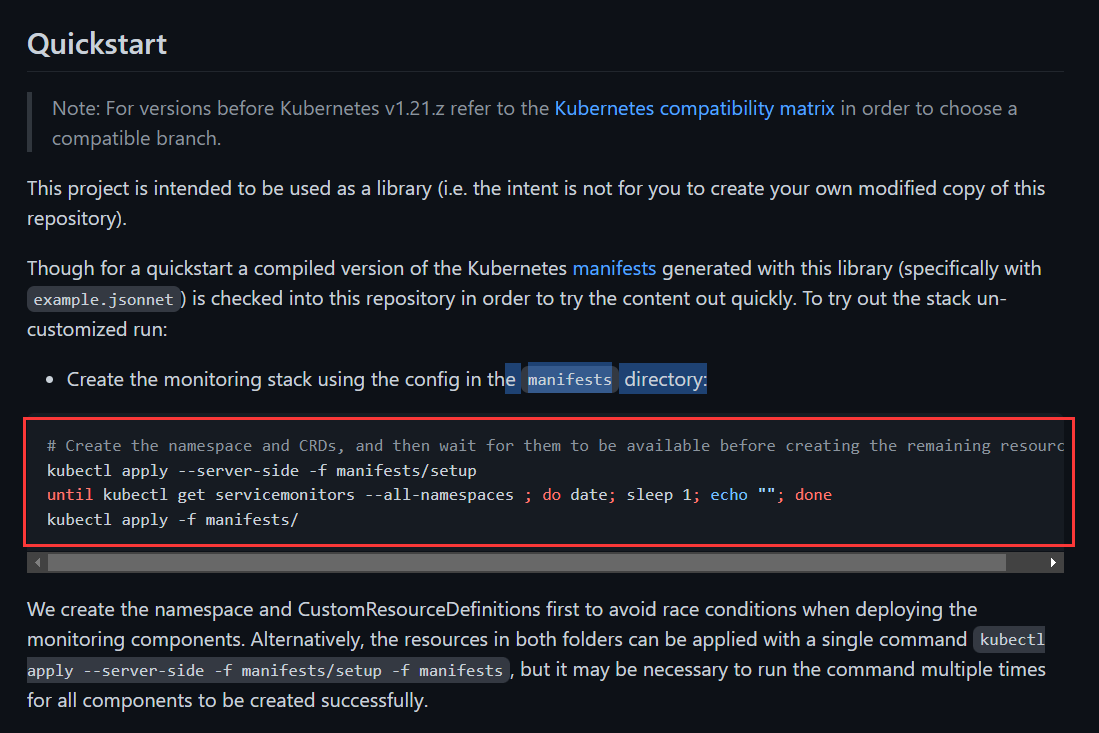

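Deployment reference from the GitHub project:

Deploy with server-side apply, or alternatively with kubectl create -f

root@master1:~/kube-prometheus# kubectl apply --server-side -f manifests/setup

Official reference for server-side apply:

https://kubernetes.io/zh-cn/docs/reference/using-api/server-side-apply/

Server-Side Apply helps users and controllers manage their resources through declarative configuration. Clients can create and/or modify objects declaratively by sending their fully specified intent. ... Server-Side Apply is meant both as a replacement for the original kubectl apply and as a simpler mechanism for controllers to enact their changes.

Then deploy the resources in the manifests directory

root@master1:~/kube-prometheus# kubectl apply -f manifests/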
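The second apply creates custom resources whose CRDs come from manifests/setup, so it can fail if it runs before those CRDs are established. A minimal sketch of the full sequence with a wait in between, as I understand the upstream quickstart to do it; the function name deploy_kube_prometheus is hypothetical.

```shell
# Hypothetical wrapper around the two apply steps above.
# $1 is the manifests directory (defaults to ./manifests).
deploy_kube_prometheus() {
  dir="${1:-manifests}"
  # 1. CRDs and namespace, applied server-side.
  kubectl apply --server-side -f "$dir/setup" &&
  # 2. Block until every CRD reports the Established condition.
  kubectl wait --for condition=Established --all CustomResourceDefinition --namespace=monitoring &&
  # 3. Everything that depends on those CRDs.
  kubectl apply -f "$dir/"
}
```

Run it from the kube-prometheus checkout as deploy_kube_prometheus manifests; the chain stops at the first step that fails.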

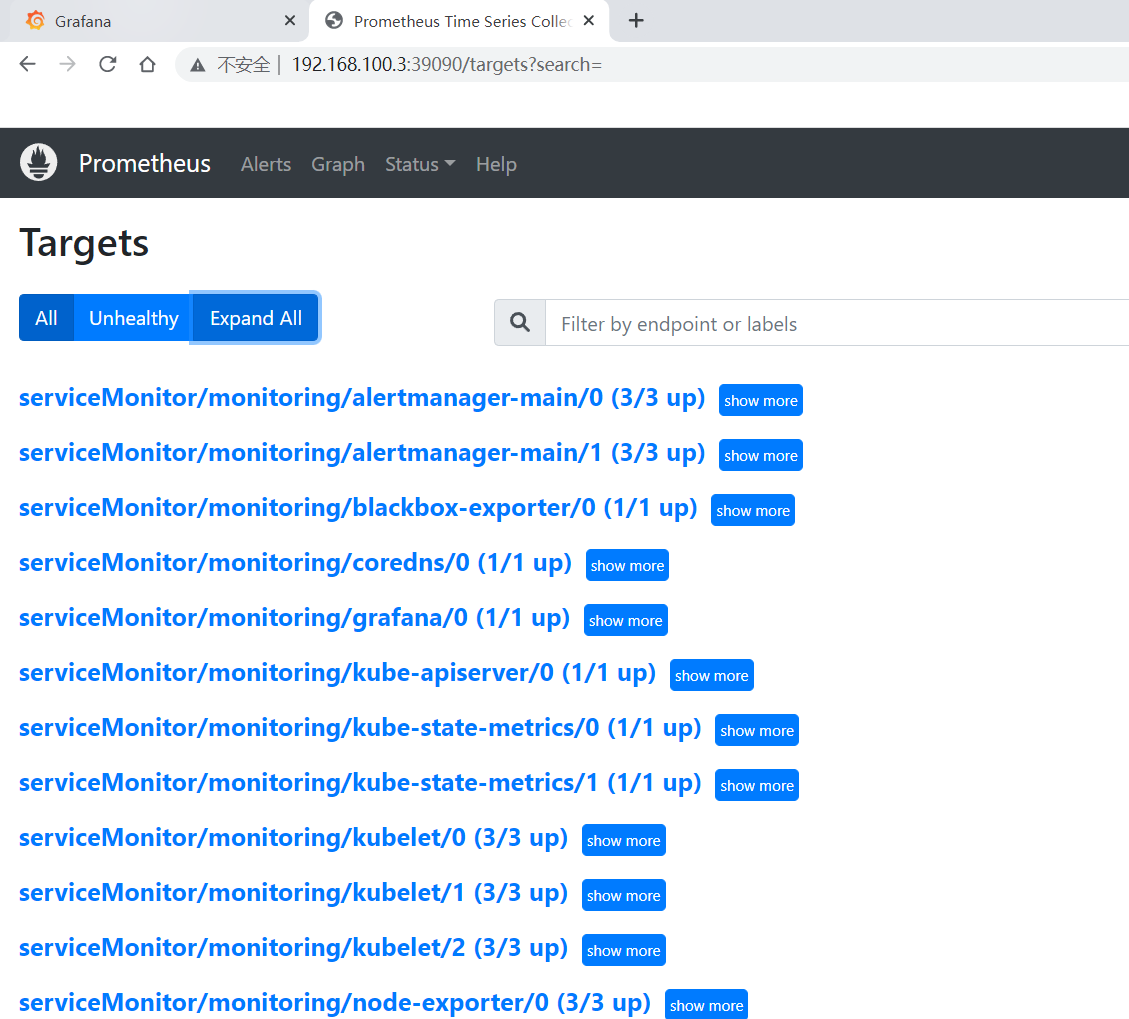

All Prometheus components are deployed in the monitoring namespace. Check that the pods are running

root@master1:~/kube-prometheus# kubectl get pods -n monitoring

NAME                                   READY   STATUS    RESTARTS   AGE
alertmanager-main-0                    2/2     Running   0          2m15s
alertmanager-main-1                    2/2     Running   0          2m15s
alertmanager-main-2                    2/2     Running   0          2m14s
blackbox-exporter-559db48fd-t85mt      3/3     Running   0          2m40s
grafana-546559f668-xvhk8               1/1     Running   0          2m39s
kube-state-metrics-5f74d45876-tzfqj    3/3     Running   0          2m38s
node-exporter-7gn52                    2/2     Running   0          2m38s
node-exporter-kd22j                    2/2     Running   0          2m38s
node-exporter-q499p                    2/2     Running   0          2m38s
prometheus-adapter-58b89c775f-sqkrd    1/1     Running   0          2m36s
prometheus-adapter-58b89c775f-whb4c    1/1     Running   0          2m36s
prometheus-k8s-0                       2/2     Running   0          2m14s
prometheus-k8s-1                       2/2     Running   0          2m14s
prometheus-operator-79c5847fd8-qsmlw   2/2     Running   0          2m35s

To uninstall all Prometheus components

kubectl delete --ignore-not-found=true -f manifests/ -f manifests/setup

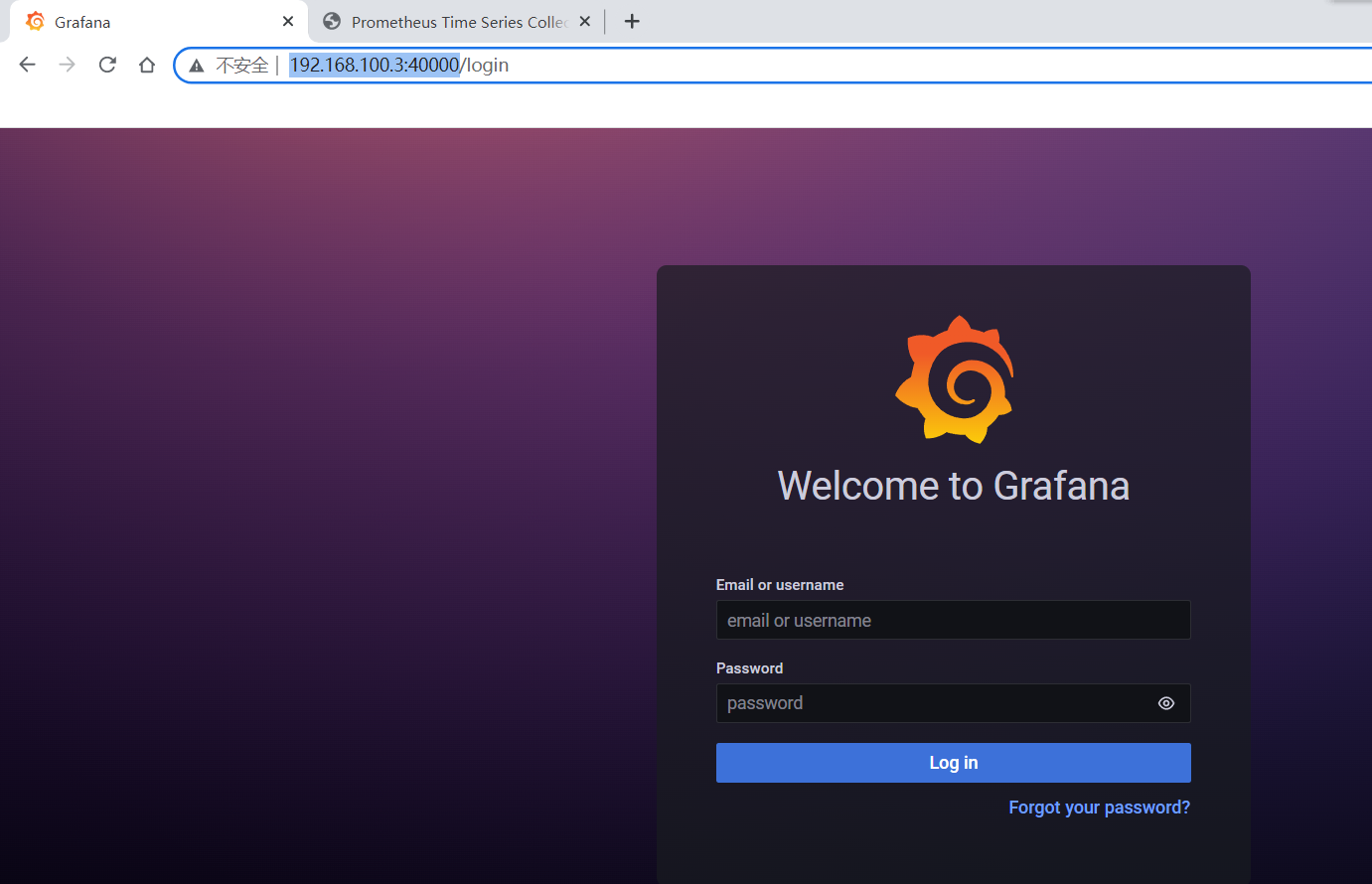

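Verify access:

The default Grafana login is admin/admin

The second approach deploys each Prometheus component separately as its own module: Prometheus server, Grafana, Alertmanager, node-exporter, and the other monitoring components are installed from binaries on dedicated servers or run in individual containers

Installing prometheus-server

Deploying prometheus-server from the binary release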

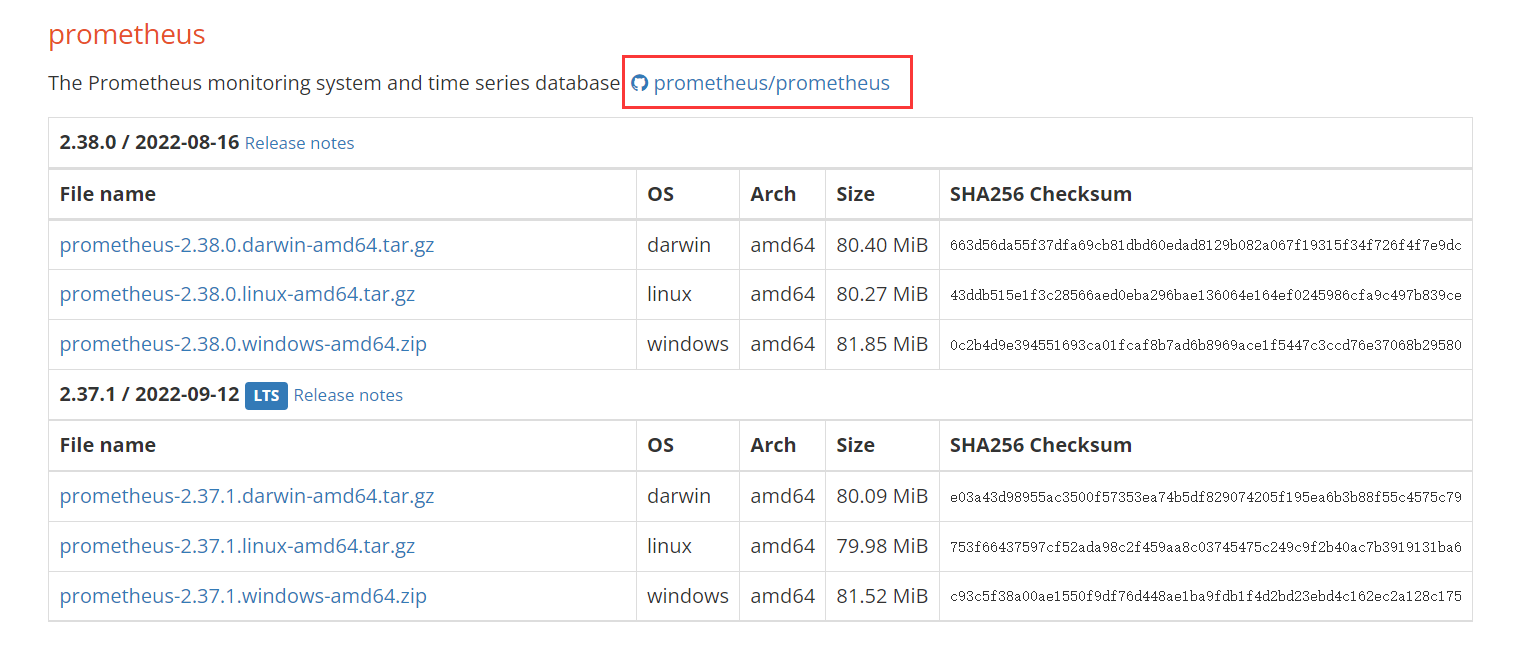

访问官网:

https://prometheus.io/download/

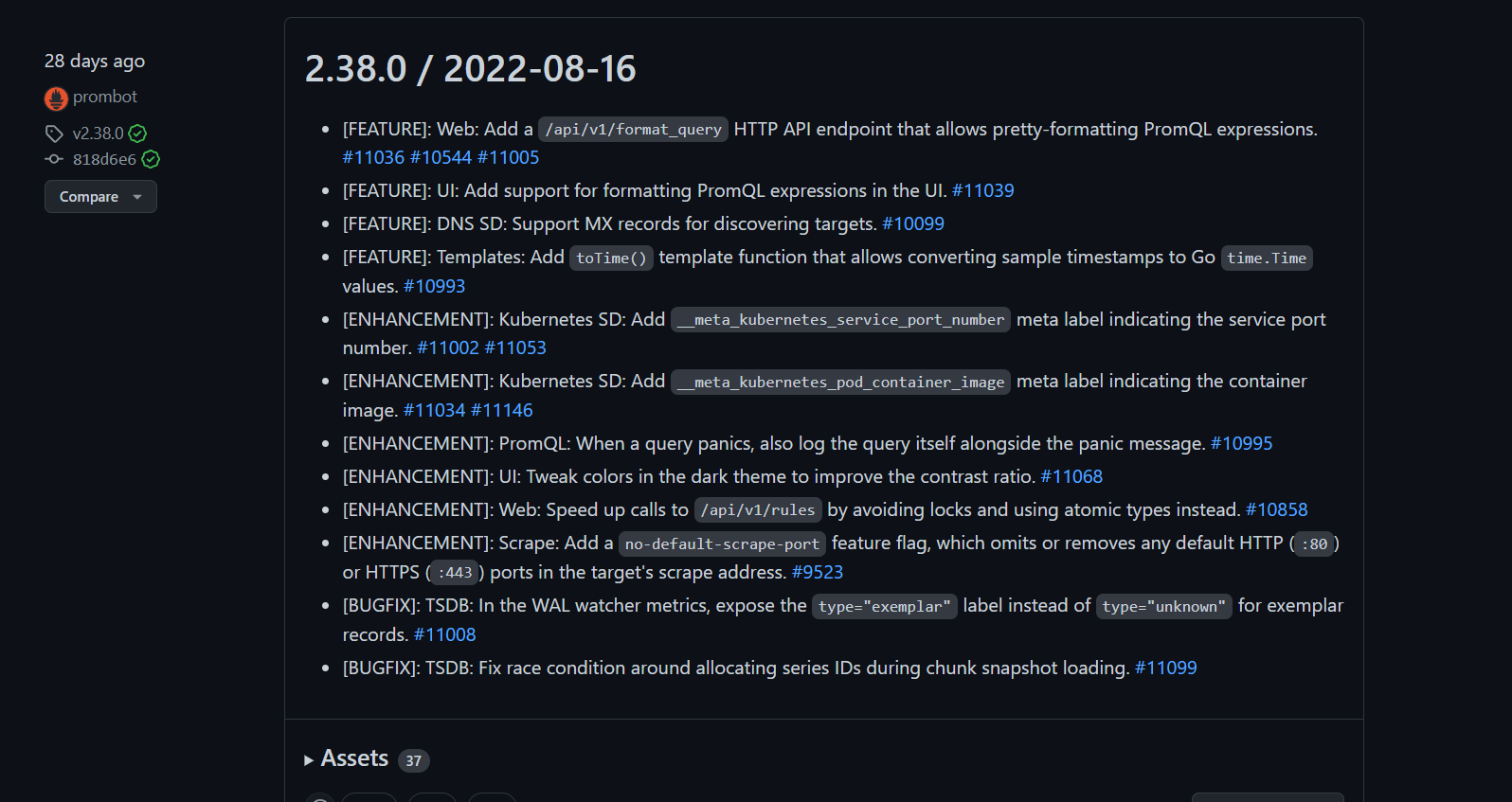

访问后,选择github的链接,也可以直接下载官网最新版本

选择稳定正式版本

https://github.com/prometheus/prometheus

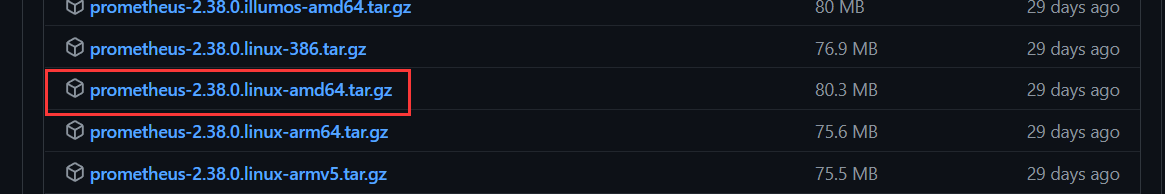

Unpack and install

root@prometheus:/usr/local/src# tar xf prometheus-2.38.0.linux-amd64.tar.gz -C /usr/local/

root@prometheus:/usr/local/src# cd /usr/local/

root@prometheus:/usr/local# ln -s prometheus-2.38.0.linux-amd64 prometheus

Show the built-in help

root@prometheus:/usr/local# cd prometheus

root@prometheus:/usr/local/prometheus# ./prometheus -h

Files in the release

root@prometheus:/usr/local/prometheus# ll

total 207312
drwxr-xr-x  4 3434 3434       132 Aug 16 13:45 ./
drwxr-xr-x 11 root root       169 Sep 14 03:00 ../
drwxr-xr-x  2 3434 3434        38 Aug 16 13:42 console_libraries/
drwxr-xr-x  2 3434 3434       173 Aug 16 13:42 consoles/
-rw-r--r--  1 3434 3434     11357 Aug 16 13:42 LICENSE
-rw-r--r--  1 3434 3434      3773 Aug 16 13:42 NOTICE
-rwxr-xr-x  1 3434 3434 110234973 Aug 16 13:26 prometheus       # the Prometheus server executable
-rw-r--r--  1 3434 3434       934 Aug 16 13:42 prometheus.yml   # the Prometheus server configuration file
-rwxr-xr-x  1 3434 3434 102028302 Aug 16 13:28 promtool         # test tool for checking Prometheus configuration files, metrics data, and so on

Check the syntax of the Prometheus configuration file

root@prometheus:/usr/local/prometheus# ./promtool check config prometheus.yml

Create the systemd unit file for Prometheus (it listens on port 9090 by default)

cat > /etc/systemd/system/prometheus.service << EOF

[Unit]
Description=Prometheus Server
Documentation=https://prometheus.io/docs/introduction/overview/
After=network.target

[Service]
Restart=on-failure
WorkingDirectory=/usr/local/prometheus/
ExecStart=/usr/local/prometheus/prometheus --config.file=/usr/local/prometheus/prometheus.yml --web.enable-lifecycle --storage.tsdb.retention.time=720h

[Install]
WantedBy=multi-user.target
EOF

Start Prometheus

systemctl daemon-reload && systemctl enable prometheus.service && systemctl restart prometheus.service

Access

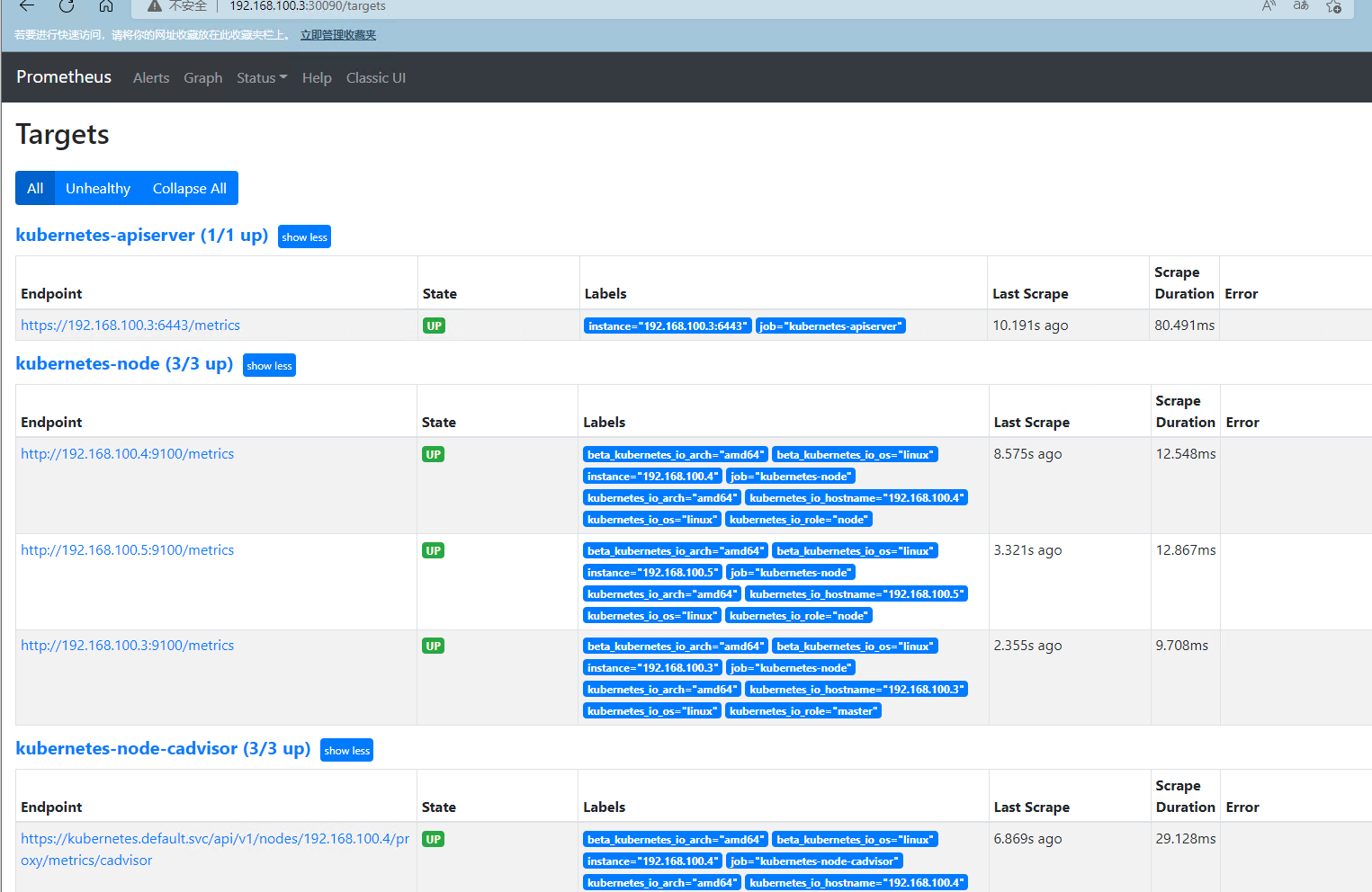

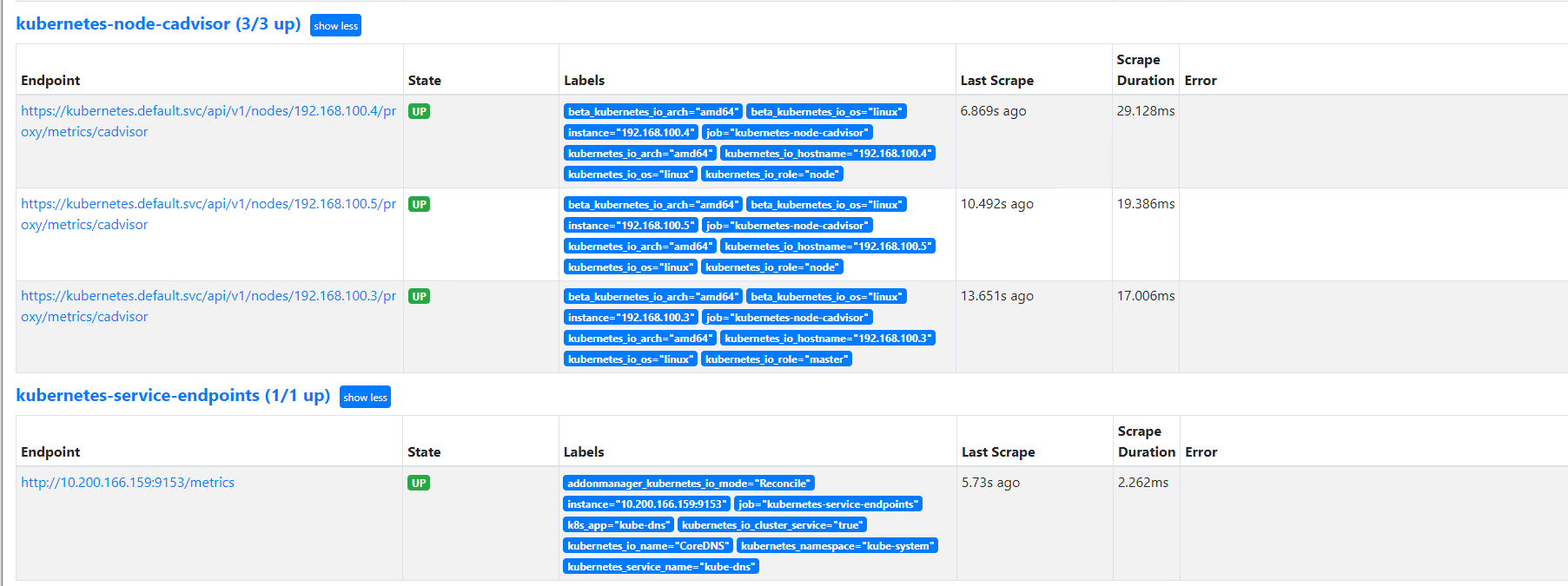

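Deploying prometheus-server as a Deployment

Run prometheus-server inside the Kubernetes cluster so that it can discover the cluster's pods dynamically

Create the ConfigMap that holds the Prometheus configuration, including the service discovery settings

root@master1:~/yaml# vim prometheus-cfg.yaml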

kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    app: prometheus
  name: prometheus-config
  namespace: monitoring
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 10s
      evaluation_interval: 1m
    scrape_configs:
    - job_name: 'kubelet'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
    - job_name: 'kubernetes-node'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - source_labels: [__address__]
        regex: '(.*):10250'
        replacement: '${1}:9100'
        target_label: __address__
        action: replace
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
    - job_name: 'kubernetes-node-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
    - job_name: 'kubernetes-apiserver'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_service_name
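The two address-rewriting relabel rules in this ConfigMap are easier to follow when run against sample input. The sketch below imitates them with sed; it is only an approximation of Prometheus's behaviour. The function names are hypothetical, the sample addresses and ports are made up for illustration, and because sed's extended regex has neither non-capturing groups nor \d, the second pattern uses a plain group and [0-9], which shifts the port to group 3.

```shell
# Prometheus anchors relabel regexes at both ends, hence the explicit ^ and $.

# kubernetes-node job: regex '(.*):10250', replacement '${1}:9100'.
# Moves the target from the kubelet port to the node-exporter port.
to_node_exporter() {
  sed -E 's#^(.*):10250$#\1:9100#'
}

# kubernetes-service-endpoints job: regex '([^:]+)(?::\d+)?;(\d+)', replacement '$1:$2'.
# The source labels __address__ and the prometheus.io/port annotation are joined
# with ';' before matching; the annotated port replaces the discovered one.
apply_port_annotation() {
  sed -E 's#^([^:]+)(:[0-9]+)?;([0-9]+)$#\1:\3#'
}

echo "192.168.100.3:10250" | to_node_exporter        # prints 192.168.100.3:9100
echo "10.200.1.7:8080;9153" | apply_port_annotation  # prints 10.200.1.7:9153
```

Input that does not match the pattern passes through unchanged, which mirrors how a replace action leaves the target label alone when its regex does not match.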

Prepare the data directory on the NFS server (the harbor host here) and export it

root@harbor:~# mkdir -p /data/k8sdata/prometheus

root@harbor:~# chown -R nobody:nogroup /data/k8sdata/prometheus   # or chown -R 65534, the numeric ID of nobody

root@harbor:/data/k8sdata/prometheus# cat /etc/exports

/data/k8sdata *(rw,sync,no_root_squash)

root@harbor:/data/k8sdata/prometheus# exportfs -r

Create the PV and PVC used by the Prometheus container

pv:

root@master1:~/yaml# cat prometheus-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: prometheus-nfs
  labels:
    type: nfs
spec:
  capacity:
    storage: 20Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    path: "/data/k8sdata/prometheus"
    server: 192.168.100.15

root@master1:~/yaml# vim prometheus-pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: prometheus-nfs
  namespace: monitoring
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  selector:
    matchLabels:
      type: nfs

Verify the storage

root@master1:~/yaml# kubectl get pvc -n monitoring

NAME             STATUS   VOLUME           CAPACITY   ACCESS MODES   STORAGECLASS   AGE
prometheus-nfs   Bound    prometheus-nfs   20Gi       RWO                           32s

root@master1:~/yaml# kubectl get pv

NAME             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                       STORAGECLASS   REASON   AGE
prometheus-nfs   20Gi       RWO            Retain           Bound    monitoring/prometheus-nfs                           3m22s

Grant prometheus-server cluster permissions so that it can discover pods dynamically

Create the service account

root@master1:~/yaml# kubectl create serviceaccount monitor -n monitoring

serviceaccount/monitor created

Bind the monitor service account to a ClusterRole

root@master1:~/yaml# kubectl create clusterrolebinding monitoring-clusterrolebinding -n monitoring --clusterrole cluster-admin --serviceaccount=monitoring:monitor

clusterrolebinding.rbac.authorization.k8s.io/monitoring-clusterrolebinding created
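cluster-admin grants far more than scraping requires. As a possible alternative (not what the article uses), the sketch below writes a read-only ClusterRole and binding for the same monitor service account. The names prometheus-read and prometheus-rbac.yaml are hypothetical, and the rule set is an assumption based on what the scrape jobs in the ConfigMap touch: nodes, the node proxy and metrics subresources, services, endpoints, pods, and the /metrics URL.

```shell
# Illustrative output path; adjust as needed.
RBAC_FILE="${TMPDIR:-/tmp}/prometheus-rbac.yaml"

# Quoted heredoc delimiter so nothing inside the YAML is expanded by the shell.
cat > "$RBAC_FILE" <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus-read
rules:
- apiGroups: [""]
  resources: ["nodes", "nodes/proxy", "nodes/metrics", "services", "endpoints", "pods"]
  verbs: ["get", "list", "watch"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus-read
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus-read
subjects:
- kind: ServiceAccount
  name: monitor
  namespace: monitoring
EOF

echo "wrote $RBAC_FILE"
# Review the file, then apply it with: kubectl apply -f "$RBAC_FILE"
```

If a scrape job later reports 403 errors, the missing resource can be added to the first rule rather than falling back to cluster-admin.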

Create prometheus-server

root@master1:~/yaml# cat prometheus-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus-server
  namespace: monitoring
  labels:
    app: prometheus
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
      component: server
  template:
    metadata:
      labels:
        app: prometheus
        component: server
      annotations:
        prometheus.io/scrape: 'false'
    spec:
      serviceAccountName: monitor   # service account that carries the cluster permissions
      containers:
      - name: prometheus
        image: prom/prometheus:v2.31.2
        imagePullPolicy: IfNotPresent
        command:
        - prometheus
        - --config.file=/etc/prometheus/prometheus.yml
        - --storage.tsdb.path=/prometheus
        - --storage.tsdb.retention.time=720h   # keep 30 days of data
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/prometheus/prometheus.yml
          name: prometheus-config
          subPath: prometheus.yml
        - mountPath: /prometheus/
          name: prometheus-storage-volume
      volumes:
      - name: prometheus-config
        configMap:
          name: prometheus-config
          items:
          - key: prometheus.yml
            path: prometheus.yml
            mode: 0644
      - name: prometheus-storage-volume
        persistentVolumeClaim:
          claimName: prometheus-nfs

Create the Service

root@master1:~/yaml# cat prometheus-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: monitoring
  labels:
    app: prometheus
spec:
  type: NodePort
  ports:
  - port: 9090
    targetPort: 9090
    nodePort: 30090
    protocol: TCP
  selector:
    app: prometheus
    component: server

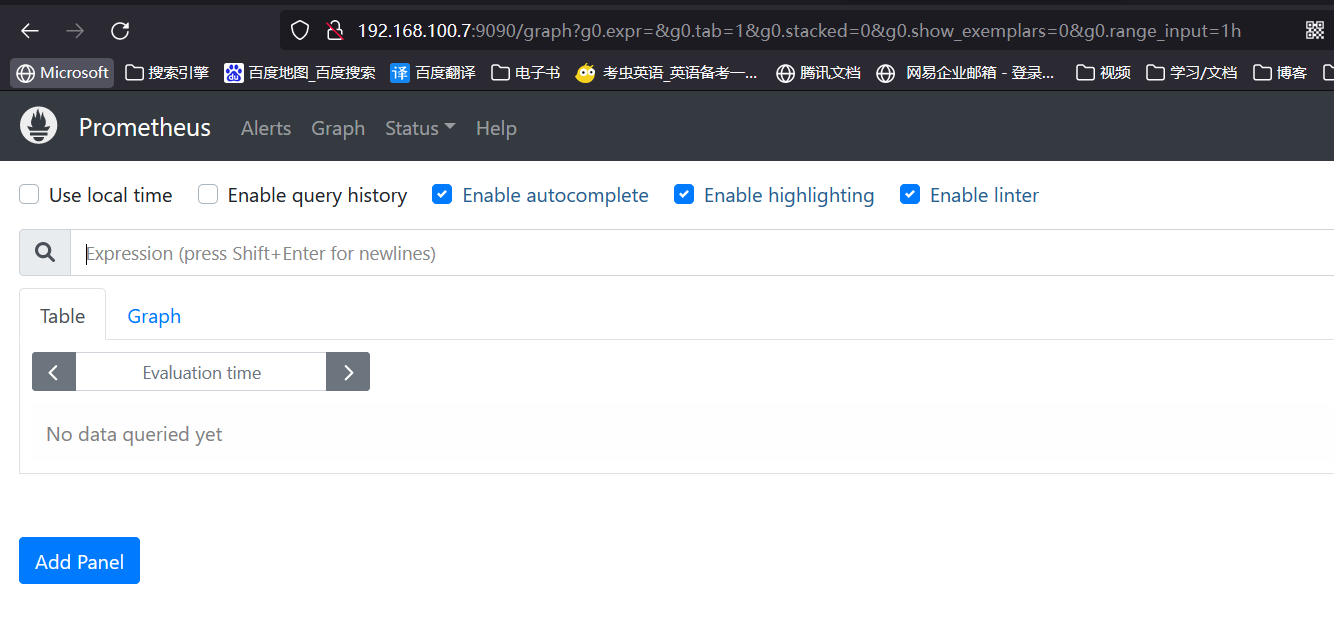

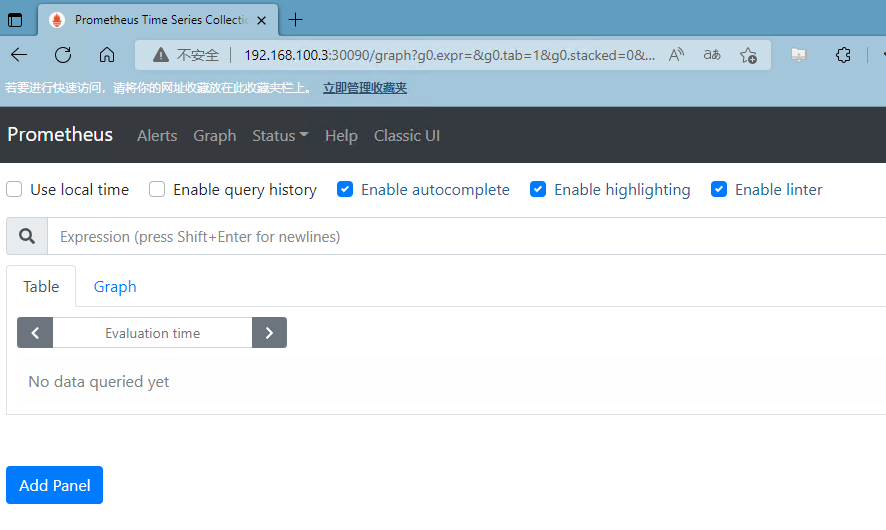

Open prometheus-server in a browser

To reload the configuration without restarting, edit prometheus.service and append the --web.enable-lifecycle flag to the Prometheus start command

root@prometheus:~# vim /etc/systemd/system/prometheus.service

ExecStart=/usr/local/prometheus/prometheus --config.file=/usr/local/prometheus/prometheus.yml --web.enable-lifecycle

systemctl daemon-reload && systemctl restart prometheus.service

curl -X POST http://192.168.100.7:9090/-/reload
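A reload with a broken configuration is rejected by Prometheus, but it is cheaper to catch the mistake before sending the request. A minimal sketch that chains the promtool check from earlier with the reload call; the function name safe_reload and the three variables are hypothetical, with defaults taken from the paths and address used in this article.

```shell
# Defaults follow the binary install above; override through the environment.
PROMTOOL="${PROMTOOL:-/usr/local/prometheus/promtool}"
PROM_CFG="${PROM_CFG:-/usr/local/prometheus/prometheus.yml}"
PROM_URL="${PROM_URL:-http://192.168.100.7:9090}"

# Hypothetical helper: request a reload only when the configuration validates.
safe_reload() {
  if ! "$PROMTOOL" check config "$PROM_CFG"; then
    echo "config check failed, not reloading" >&2
    return 1
  fi
  # -f makes curl return non-zero on an HTTP error status.
  curl -fsS -X POST "$PROM_URL/-/reload" && echo "reload requested"
}
```

Call safe_reload after editing prometheus.yml; it returns non-zero when either the check or the HTTP request fails.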

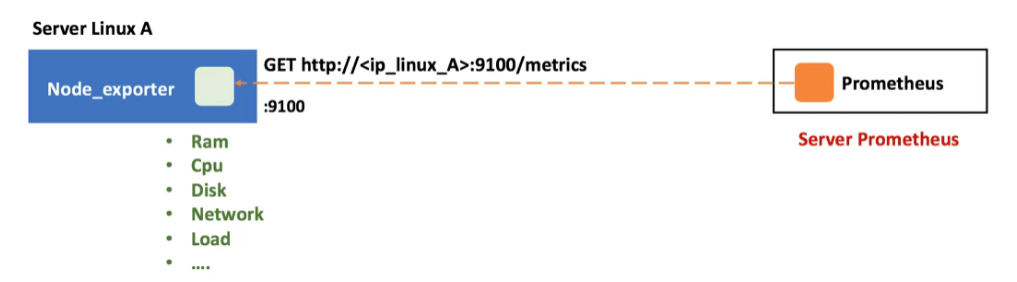

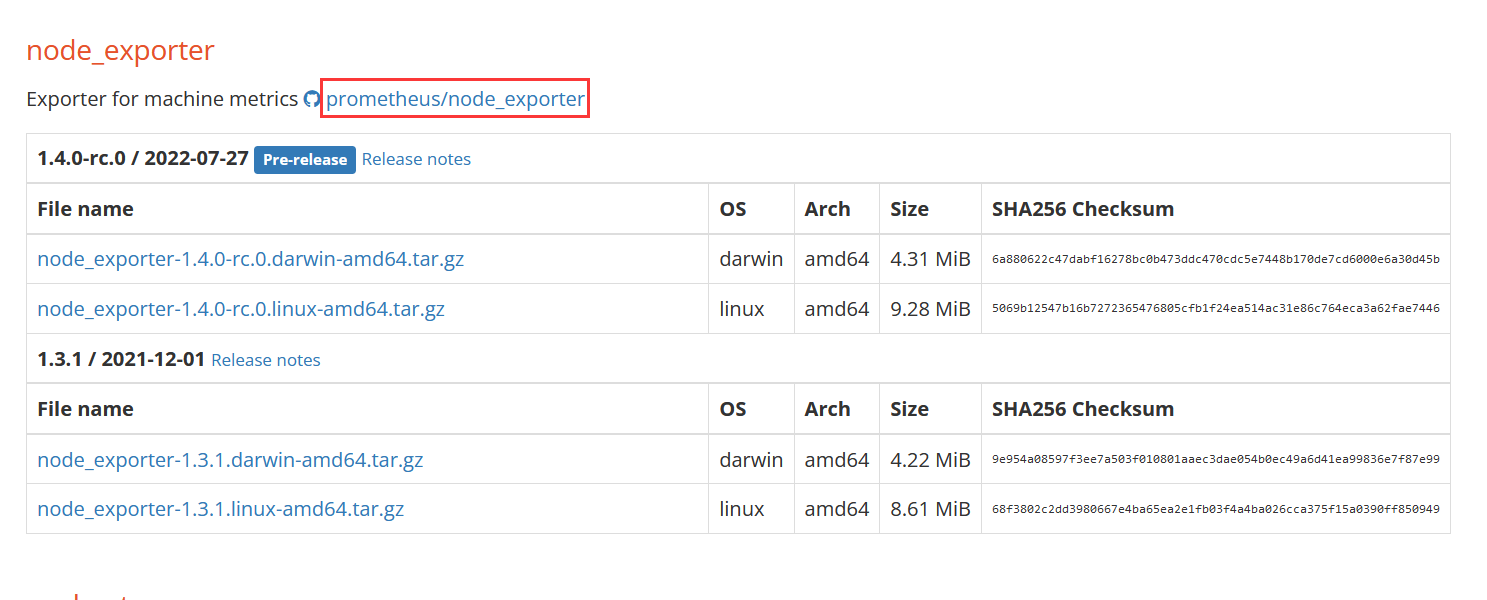

Install node_exporter on every Kubernetes node, either from the binary or as a DaemonSet, to collect host-level metrics from each node. It listens on port 9100 by default.

Deploying node-exporter from the binary release

Official site:

https://prometheus.io/download/

Download it from the official site, or pick a version on GitHub

Install node-exporter

root@node1:/usr/local/src# tar xf node_exporter-1.3.1.linux-amd64.tar.gz -C /usr/local/

root@node1:/usr/local# ln -s node_exporter-1.3.1.linux-amd64 node_exporter

Create the systemd unit file for node-exporter

root@node1:~# vim /etc/systemd/system/node-exporter.service

[Unit]
Description=Prometheus Node Exporter
After=network.target

[Service]
ExecStart=/usr/local/node_exporter/node_exporter

[Install]
WantedBy=multi-user.target

Start node-exporter

root@node1:~# systemctl daemon-reload && systemctl restart node-exporter && systemctl enable node-exporter.service

Show the built-in help

root@node1:/usr/local/node_exporter# ./node_exporter -h

Access

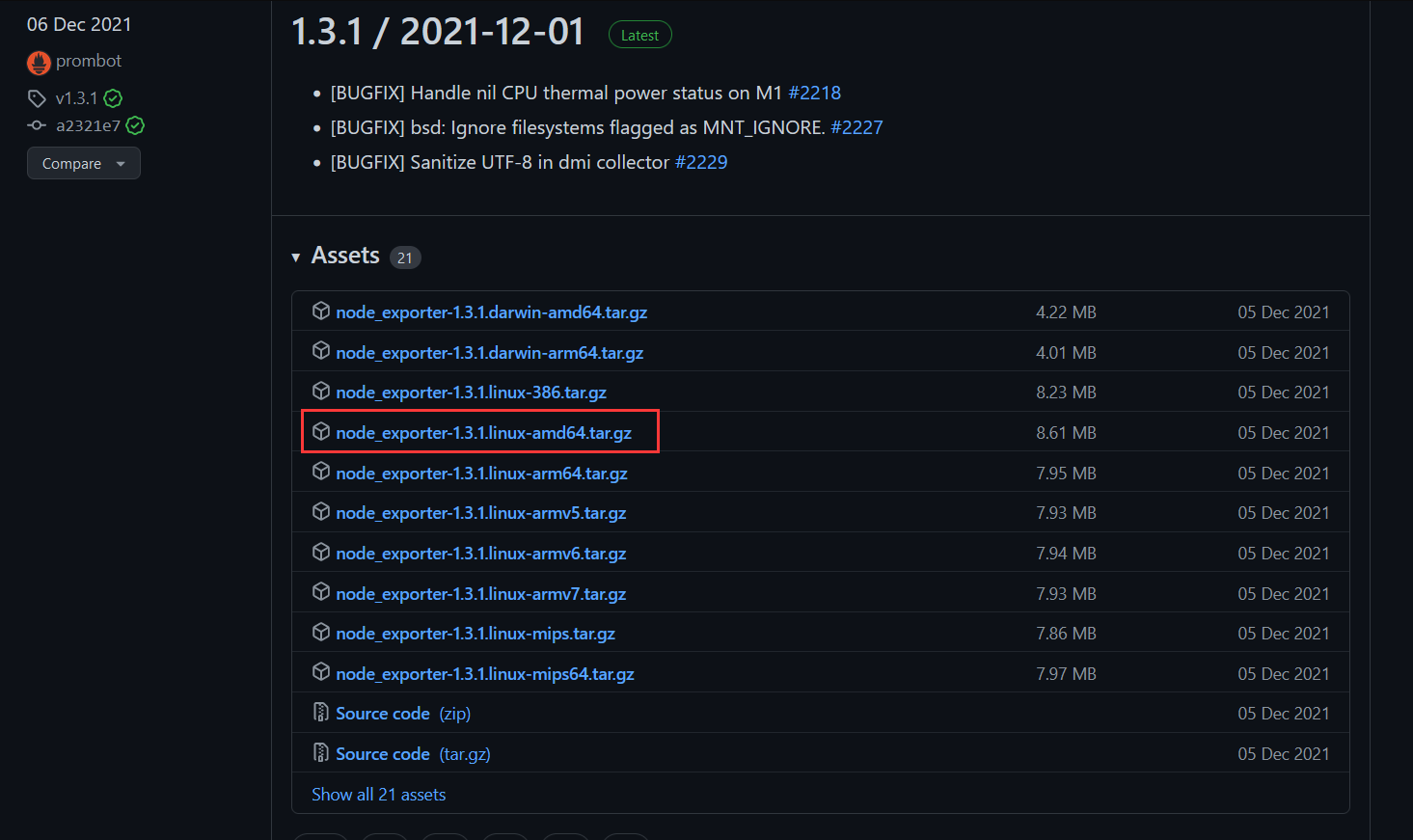
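The metrics page is plain text, one sample per line, so a quick command-line check is possible without a browser. The sketch below pulls one value out of that text format; the helper name metric_value is hypothetical, it only handles metrics without labels, and the sample numbers are made up for illustration.

```shell
# Hypothetical helper: print the value of one unlabelled metric from
# Prometheus text-format input on stdin.
metric_value() {
  awk -v name="$1" '$1 == name { print $2 }'
}

# Illustrative sample in the exposition format; the numbers are made up.
sample='# HELP node_load1 1m load average.
# TYPE node_load1 gauge
node_load1 0.42
node_load5 0.37'

printf '%s\n' "$sample" | metric_value node_load1   # prints 0.42
```

Against a live exporter the same helper can be fed from curl, for example curl -s http://<node-ip>:9100/metrics | metric_value node_load1, where <node-ip> stands for one of your nodes.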

Deploying node-exporter as a DaemonSet

GitHub project

https://github.com/prometheus/node_exporter

Create the namespace

root@master1:~/yaml# kubectl create ns monitoring

Create the Kubernetes manifest for node-exporter

root@master1:~/yaml# cat daemonset-deploy-node-exporter.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: monitoring
  labels:
    k8s-app: node-exporter
spec:
  selector:
    matchLabels:
      k8s-app: node-exporter
  template:
    metadata:
      labels:
        k8s-app: node-exporter
    spec:
      tolerations:
      - effect: NoSchedule   # tolerate the taint on master nodes
        key: node-role.kubernetes.io/master
      containers:
      - image: prom/node-exporter:v1.3.1
        imagePullPolicy: IfNotPresent
        name: prometheus-node-exporter
        ports:
        - containerPort: 9100   # exposed on port 9100
          hostPort: 9100
          protocol: TCP
          name: metrics
        volumeMounts:
        - mountPath: /host/proc
          name: proc
        - mountPath: /host/sys
          name: sys
        - mountPath: /host
          name: rootfs
        args:
        - --path.procfs=/host/proc
        - --path.sysfs=/host/sys
        - --path.rootfs=/host
      volumes:
      - name: proc
        hostPath:
          path: /proc
      - name: sys
        hostPath:
          path: /sys
      - name: rootfs
        hostPath:
          path: /
      hostNetwork: true   # use the host network
      hostPID: true   # share the host's PID namespace with the pod

Verify the deployment

root@master1:~/yaml# kubectl apply -f daemonset-deploy-node-exporter.yaml

root@master1:~/yaml# kubectl get pods -n monitoring -o wide

NAME                  READY   STATUS    RESTARTS   AGE   IP              NODE            NOMINATED NODE   READINESS GATES
node-exporter-8w9fp   1/1     Running   0          3s    192.168.100.4   192.168.100.4   <none>           <none>
node-exporter-lr8lx   1/1     Running   0          3s    192.168.100.3   192.168.100.3   <none>           <none>
node-exporter-rx48k   1/1     Running   0          3s    192.168.100.5   192.168.100.5   <none>           <none>

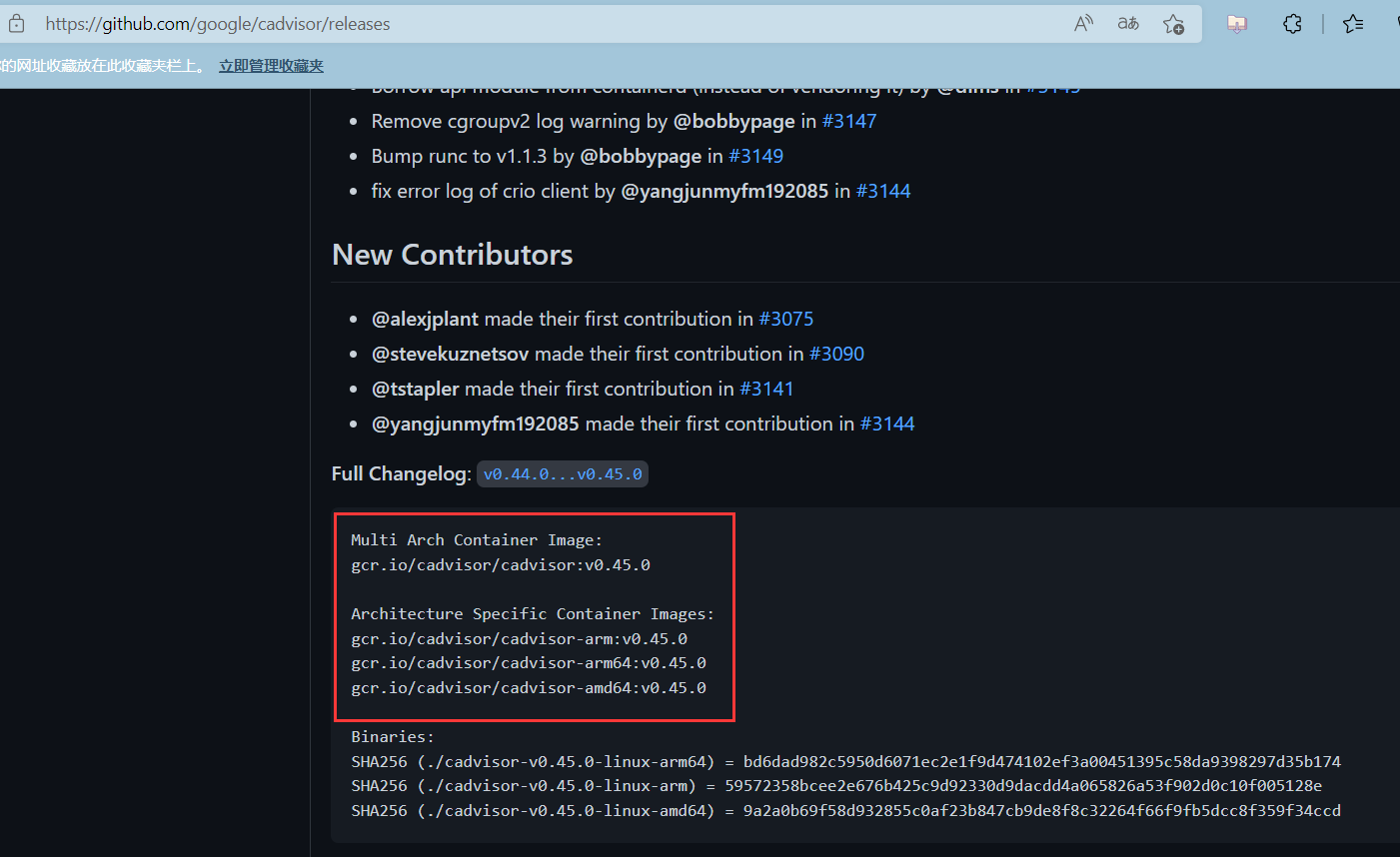

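Installing cAdvisor

Pod-level metrics come from cAdvisor, which was open-sourced by Google. Up to Kubernetes v1.11 the kubelet exposed its built-in cAdvisor on port 4194; that port was removed in v1.12 (https://github.com/kubernetes/kubernetes/pull/65707). The kubelet still embeds cAdvisor and serves its metrics at /metrics/cadvisor, the path scraped by the kubernetes-node-cadvisor job in the ConfigMap earlier in this article, but cAdvisor's own web UI and API now require a separate deployment, for example as a DaemonSet.

cAdvisor (Container Advisor) collects information about all containers running on a machine and provides a basic query UI and an HTTP interface that other components such as Prometheus can scrape. It monitors the containers on a node in real time and gathers performance data, including CPU usage, memory usage, network throughput, and filesystem usage.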

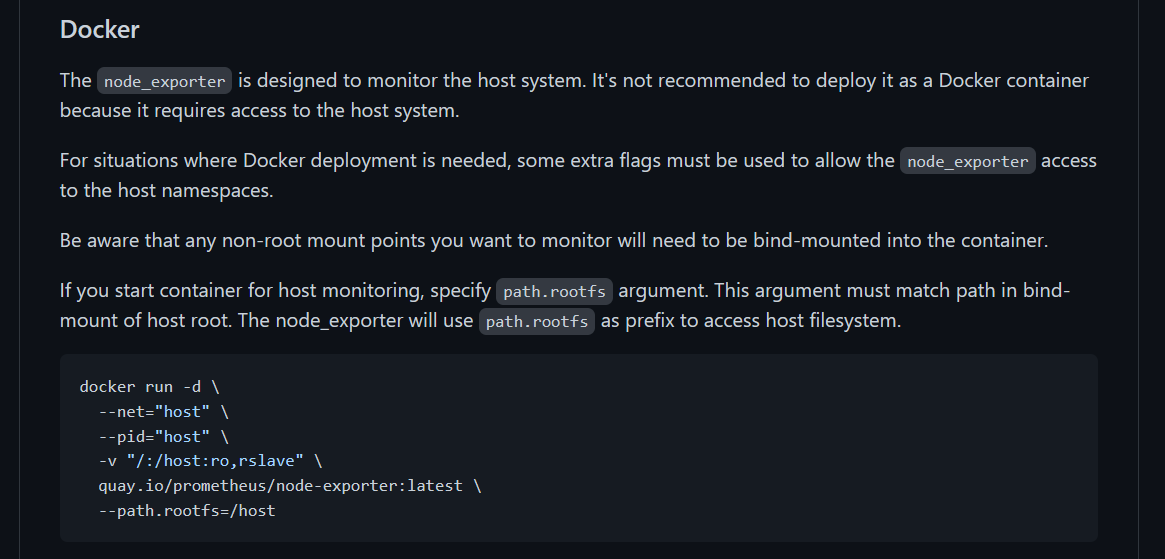

Deploying cAdvisor with Docker

Prepare the cAdvisor image:

cAdvisor releases on GitHub

https://github.com/google/cadvisor/releases

Pull the image from the Alibaba Cloud mirror instead

docker pull registry.cn-hangzhou.aliyuncs.com/liangxiaohui/cadvisor-amd64:v0.45.0

Run it on a host that uses Docker

docker run -it -d \
  --volume=/:/rootfs:ro \
  --volume=/var/run:/var/run:ro \
  --volume=/sys:/sys:ro \
  --volume=/var/lib/docker/:/var/lib/docker:ro \
  --volume=/dev/disk/:/dev/disk:ro \
  --publish=8080:8080 \
  --detach=true \
  --name=cadvisor \
  --privileged \
  --device=/dev/kmsg \
  registry.cn-hangzhou.aliyuncs.com/liangxiaohui/cadvisor-amd64:v0.45.0

Run it on a host that uses containerd

Change /var/lib/docker to /var/lib/containerd

docker run -it -d \
  --volume=/:/rootfs:ro \
  --volume=/var/run:/var/run:ro \
  --volume=/sys:/sys:ro \
  --volume=/var/lib/containerd/:/var/lib/containerd:ro \
  --volume=/dev/disk/:/dev/disk:ro \
  --publish=8080:8080 \
  --detach=true \
  --name=cadvisor \
  --privileged \
  --device=/dev/kmsg \
  registry.cn-hangzhou.aliyuncs.com/liangxiaohui/cadvisor-amd64:v0.45.0
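The two commands differ only in the data directory that is mounted read-only. A small sketch of that mapping, which could feed a single run script for both kinds of host; the helper name runtime_data_dir is hypothetical, and the paths are the default locations named above, which may differ on hosts with a customised data root.

```shell
# Hypothetical helper: map a runtime name to its default data directory on the host.
runtime_data_dir() {
  case "$1" in
    docker)     echo "/var/lib/docker" ;;
    containerd) echo "/var/lib/containerd" ;;
    *)          echo "unknown runtime: $1" >&2; return 1 ;;
  esac
}

# Print the one --volume flag that changes between the two commands.
for runtime in docker containerd; do
  dir="$(runtime_data_dir "$runtime")"
  echo "--volume=${dir}/:${dir}:ro"
done
```

The loop prints the flag for each runtime in the same form the commands above use, so the result can be dropped into the docker run invocation unchanged.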

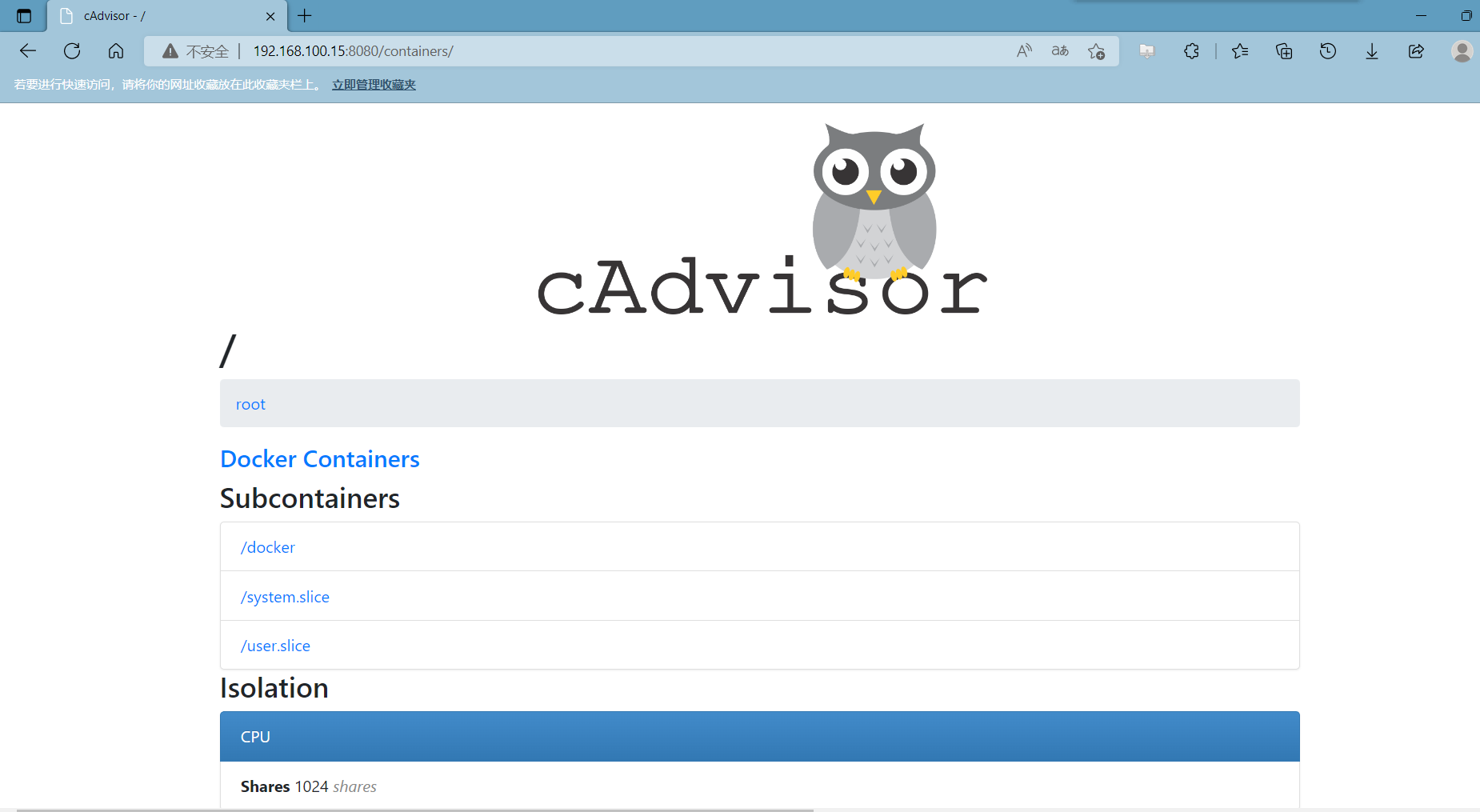

部署后浏览器访问 ip:8080端口

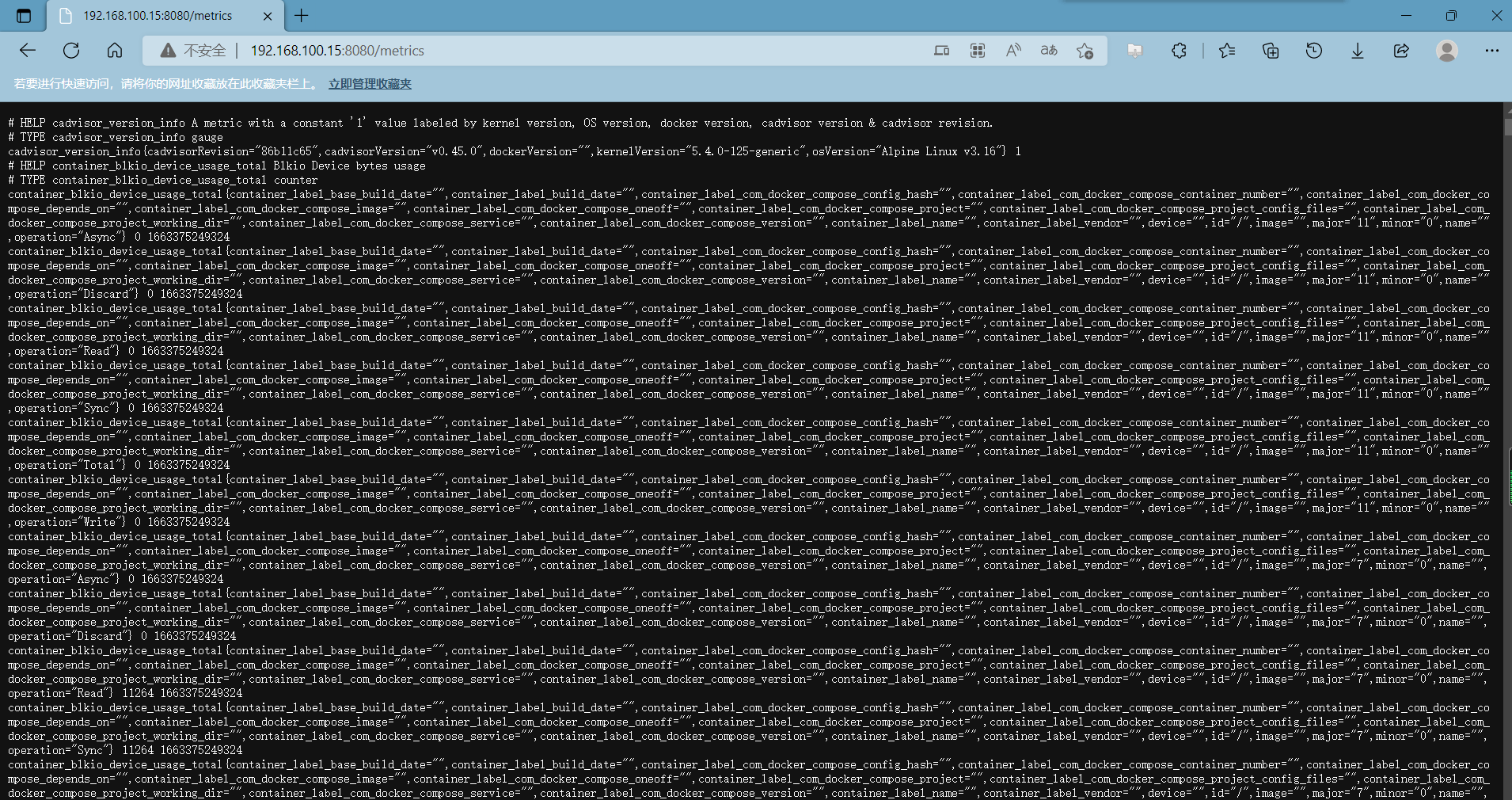

访问cadvisor的metrics页面,显示采集容器的指标数据

http://192.168.100.15:8080/metrics

root@master1:~/yaml\ kubectl create ns monitoring

创建daemonset cadvisor yaml文件

root@master1:/yaml/prometheus# cat daemonset-cadvisor.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: cadvisor
  namespace: monitoring
spec:
  selector:
    matchLabels:
      app: cAdvisor
  template:
    metadata:
      labels:
        app: cAdvisor
    spec:
      tolerations:   # tolerate the NoSchedule taint on master nodes
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
      hostNetwork: true   # use the host network
      restartPolicy: Always   # restart policy
      containers:
      - name: cadvisor
        image: registry.cn-hangzhou.aliyuncs.com/liangxiaohui/cadvisor-amd64:v0.45.0
        imagePullPolicy: IfNotPresent   # image pull policy
        ports:
        - containerPort: 8080
        volumeMounts:
        - name: root
          mountPath: /rootfs
        - name: run
          mountPath: /var/run
        - name: sys
          mountPath: /sys
        - name: containerd
          mountPath: /var/lib/containerd   # container storage directory on the host; use /var/lib/docker for Docker
        - name: disk
          mountPath: /dev/disk/
      volumes:
      - name: root
        hostPath:
          path: /
      - name: run
        hostPath:
          path: /var/run
      - name: sys
        hostPath:
          path: /sys
      - name: disk
        hostPath:
          path: /dev/disk/
      - name: containerd
        hostPath:
          path: /var/lib/containerd   # container storage directory on the host; use /var/lib/docker for Docker

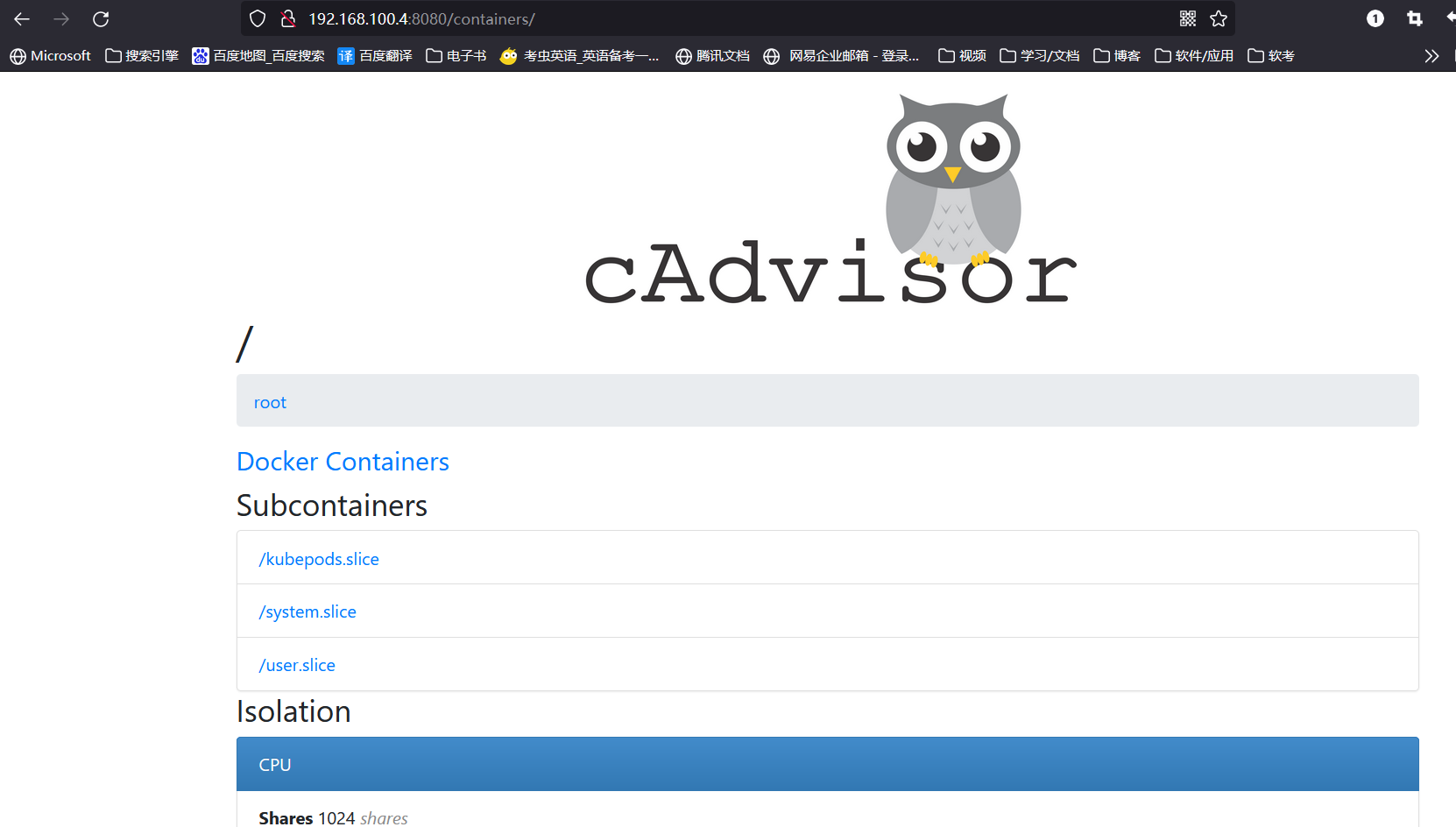

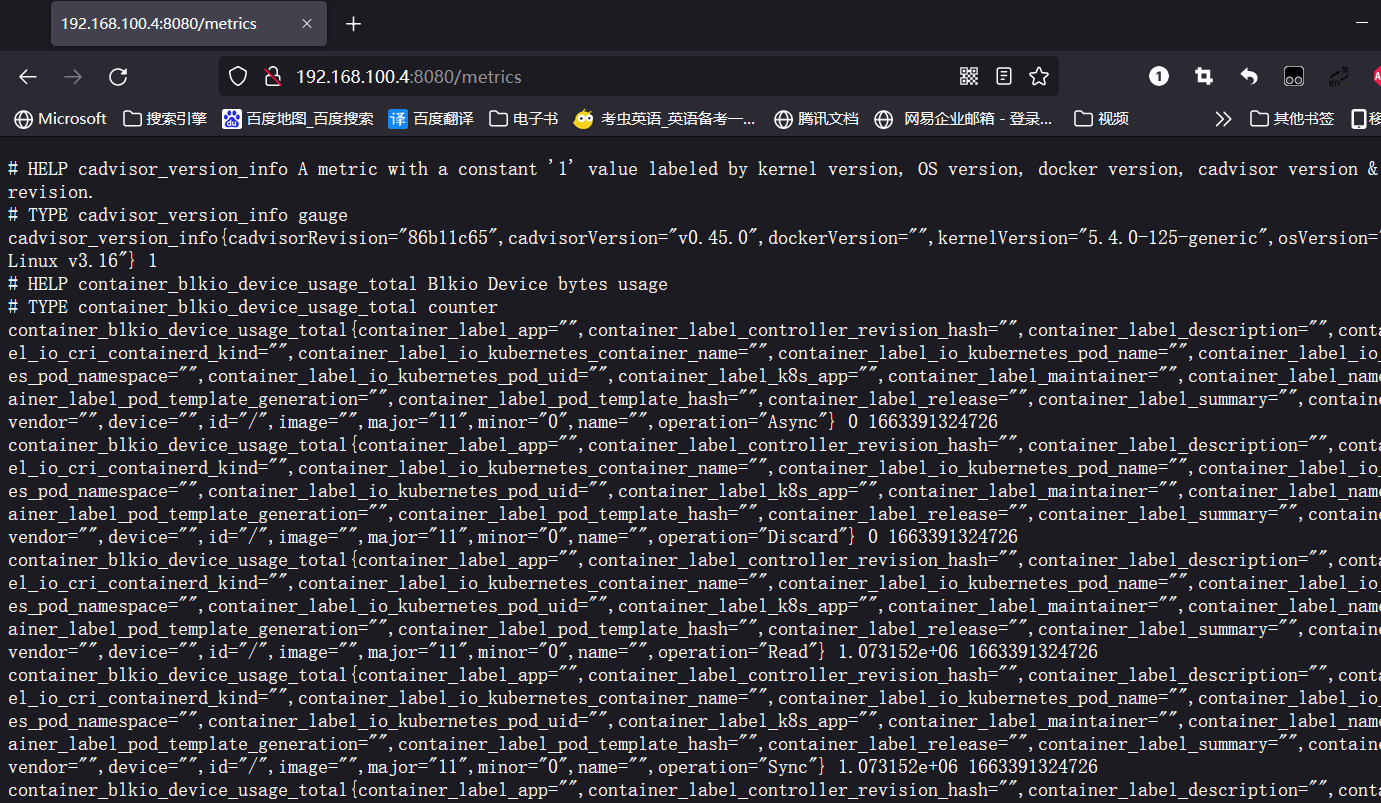

After deployment, check that a pod was created on every node. The pods use hostNetwork, so port 8080 is exposed directly on each host

root@master1:~/yaml# kubectl get pods -n monitoring -o wide

NAME             READY   STATUS    RESTARTS   AGE   IP              NODE            NOMINATED NODE   READINESS GATES
cadvisor-56p5x   1/1     Running   0          71m   192.168.100.4   192.168.100.4   <none>           <none>
cadvisor-shz7k   1/1     Running   0          71m   192.168.100.5   192.168.100.5   <none>           <none>
cadvisor-z2hct   1/1     Running   0          71m   192.168.100.3   192.168.100.3   <none>           <none>

Open ip:8080 on each node to verify the cAdvisor service

The metrics are served under the URI /metrics

This article comes from cnblogs (博客园), author: PunchLinux. When reposting, please cite the original link: https://www.cnblogs.com/punchlinux/p/16756410.html

