Kubernetes 1.24 high-availability cluster: binary deployment

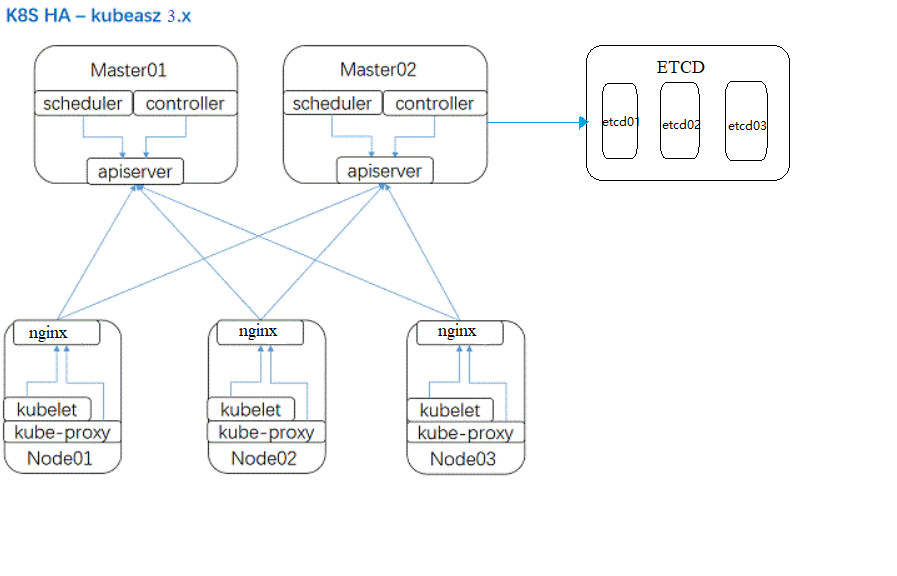

Introduction to kubeasz

kubeasz GitHub repository:

https://github.com/easzlab/kubeasz

Software architecture

Configure load balancing and high availability for the master cluster

Prepare two servers to run keepalived and haproxy.

1. Configure haproxy

root@haproxyA:~# cat /etc/haproxy/haproxy.cfg

global

log /dev/log local0

log /dev/log local1 notice

chroot /var/lib/haproxy

stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners

stats timeout 30s

user haproxy

group haproxy

daemon

# Default SSL material locations

ca-base /etc/ssl/certs

crt-base /etc/ssl/private

# See: https://ssl-config.mozilla.org/#server=haproxy&server-version=2.0.3&config=intermediate

ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384

ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256

ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

defaults

log global

mode http

option httplog

option dontlognull

timeout connect 5000

timeout client 50000

timeout server 50000

errorfile 400 /etc/haproxy/errors/400.http

errorfile 403 /etc/haproxy/errors/403.http

errorfile 408 /etc/haproxy/errors/408.http

errorfile 500 /etc/haproxy/errors/500.http

errorfile 502 /etc/haproxy/errors/502.http

errorfile 503 /etc/haproxy/errors/503.http

errorfile 504 /etc/haproxy/errors/504.http

listen k8s-6443

bind 192.168.100.188:6443

mode tcp

server master1 192.168.100.2:6443 check inter 3s fall 3 rise 3

server master2 192.168.100.3:6443 check inter 3s fall 3 rise 3

server master3 192.168.100.4:6443 check inter 3s fall 3 rise 3

2. Configure keepalived

Master:

root@haproxyA:~# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_20

}

vrrp_instance VI_1 {

state MASTER

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.100.188 dev eth0 label eth0:1

192.168.100.189 dev eth0 label eth0:2

192.168.100.190 dev eth0 label eth0:3

192.168.100.191 dev eth0 label eth0:4

192.168.100.192 dev eth0 label eth0:5

}

}

Backup:

root@haproxyB:~# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_21

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority 50

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.100.188 dev eth0 label eth0:1

192.168.100.189 dev eth0 label eth0:2

192.168.100.190 dev eth0 label eth0:3

192.168.100.191 dev eth0 label eth0:4

192.168.100.192 dev eth0 label eth0:5

}

}

Start haproxy and keepalived

root@haproxyA:~# systemctl restart haproxy keepalived

root@haproxyB:~# systemctl restart haproxy keepalived
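
Once both services are running, it helps to confirm which host currently holds the VIP. The sketch below is a minimal check under stated assumptions: `holds_vip` is a hypothetical helper (it is not part of keepalived or haproxy) that scans `ip -o -4 addr show` output for an address such as 192.168.100.188 from the configuration above. Note also that haproxy on the BACKUP host binds an address that host does not own while it is in standby; on Linux this generally requires `net.ipv4.ip_nonlocal_bind=1`, so if haproxy fails to start on haproxyB, that sysctl is a likely place to look.

```shell
#!/bin/bash
# Hypothetical helper, not part of keepalived or haproxy.
# Reads `ip -o -4 addr show` output on stdin and succeeds if the given
# IPv4 address is configured on this host.
holds_vip() {
  grep -Fq "inet $1/"
}

# Example (run on haproxyA and haproxyB; eth0 is the interface used above):
#   ip -o -4 addr show dev eth0 | holds_vip 192.168.100.188 && echo "VIP is on this host"
```

With the priorities shown above (100 on haproxyA, 50 on haproxyB), the check would be expected to succeed on haproxyA only while keepalived is healthy there.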

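Configure passwordless SSH login for the cluster

Enable passwordless login from the deploy node to every node, and create a python symlink on each node.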

root@deploy:~# apt install sshpass

root@deploy:~# ssh-keygen

Create the python symlink on the deploy node as well:

root@deploy:~# ln -sv /usr/bin/python3 /usr/bin/python

root@deploy:~# cat key.sh

#!/bin/bash

IP="

192.168.100.2

192.168.100.3

192.168.100.4

192.168.100.5

192.168.100.6

192.168.100.7

192.168.100.8

192.168.100.9

192.168.100.10

"

for n in ${IP};do

sshpass -p 123456 ssh-copy-id ${n} -o StrictHostKeyChecking=no

echo "${n} done"

ssh ${n} "ln -sv /usr/bin/python3 /usr/bin/python"

echo "${n} /usr/bin/python3 done"

done
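
After key.sh has run, a quick way to confirm that key-based login really works is to connect in batch mode, where ssh fails instead of prompting for a password. The following is a sketch only: `node_ips` and `check_ssh` are hypothetical helper names, and the address range is the 192.168.100.2 to 192.168.100.10 list used in the script above.

```shell
#!/bin/bash
# Hypothetical helpers for checking the result of key.sh.

# Print the node addresses 192.168.100.2 .. 192.168.100.10, one per line.
node_ips() {
  local n=2
  while [ "$n" -le 10 ]; do
    echo "192.168.100.${n}"
    n=$((n + 1))
  done
}

# Succeed only if key-based login works; BatchMode makes ssh fail
# instead of asking for a password.
check_ssh() {
  ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}

# Example:
#   for ip in $(node_ips); do check_ssh "$ip" && echo "$ip ok" || echo "$ip FAILED"; done
```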

Configure the hosts file

root@deploy:/etc/kubeasz# cat /etc/hosts

127.0.0.1 localhost

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

192.168.100.15 harbor.cncf.net

192.168.100.2 master1

192.168.100.3 master2

192.168.100.4 master3

192.168.100.5 node1

192.168.100.6 node2

192.168.100.7 node3

192.168.100.8 etcd1

192.168.100.9 etcd2

192.168.100.10 etcd3

Distribute the hosts file to all cluster nodes

root@deploy:~# for n in {2..10};do scp /etc/hosts 192.168.100.$n:/etc/;done

Install dependencies

Install ansible and git on the deploy node; you can also install docker yourself (optional).

root@deploy:~# apt install git ansible -y

Configure time synchronization

root@deploy:~# for n in {2..10};do ssh 192.168.100.$n "apt install chrony -y;systemctl enable chrony --now";done

Download kubeasz

A dedicated deploy server is needed to install kubeasz; this server then installs and manages the k8s nodes.

Download the kubeasz installation script

root@deploy:~# export release=3.3.1

root@deploy:~# wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

root@deploy:~# chmod a+x ezdown

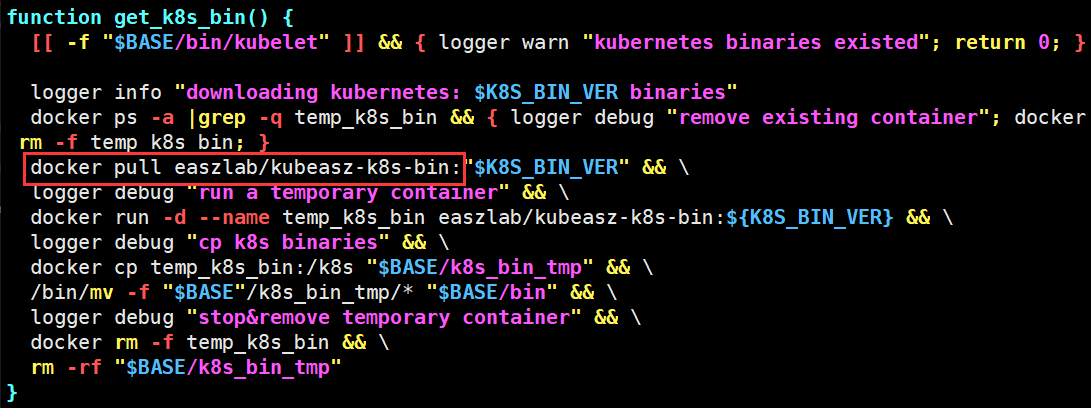

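Inspect the script: you can customize the Kubernetes component versions, and you can see which images it installs so that you can pull them to the local machine by hand. The script already includes a docker installation; if docker is already installed on this machine, it is used as-is.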

root@deploy:~# vim ezdown

Download the images

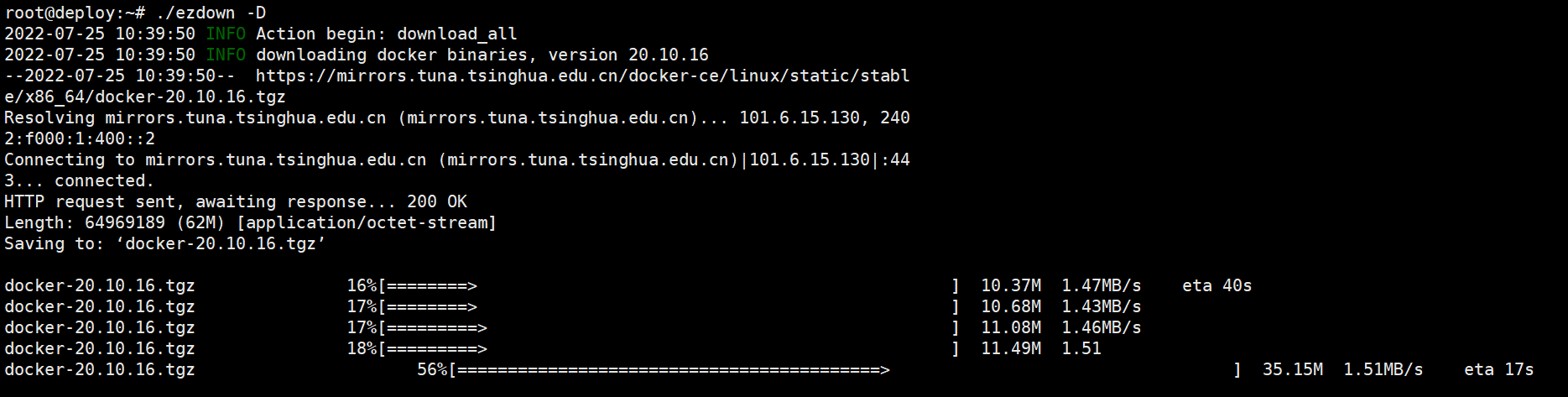

Download the kubeasz code, binaries, and default container images (run ./ezdown to see more ezdown options).

root@deploy:~# ./ezdown -D

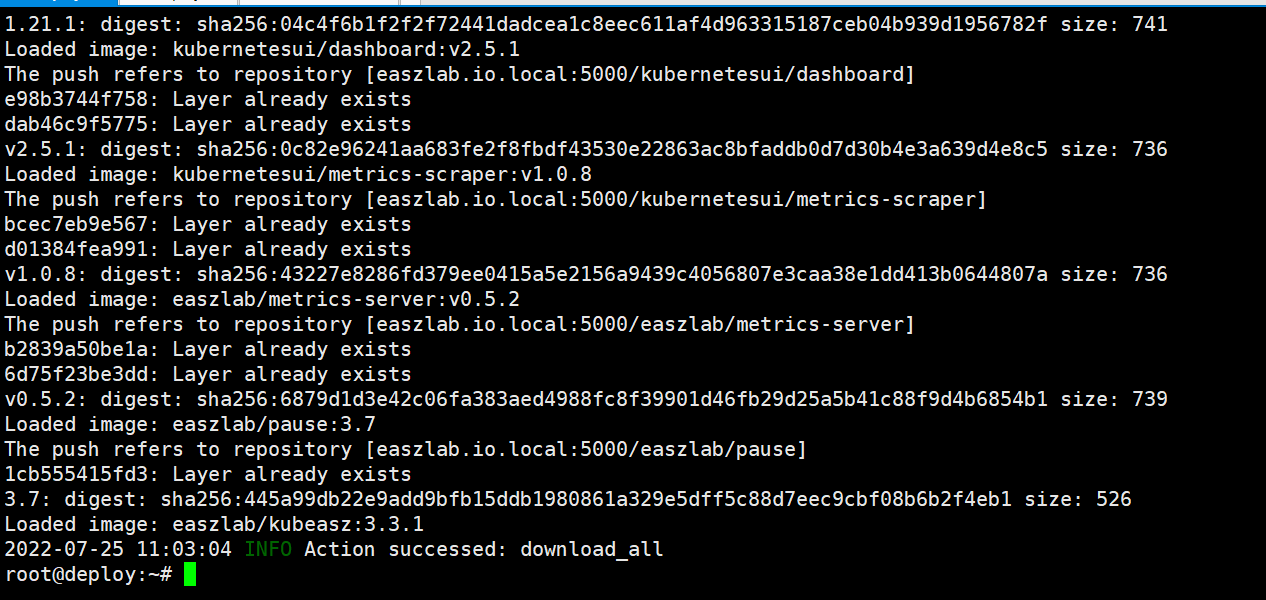

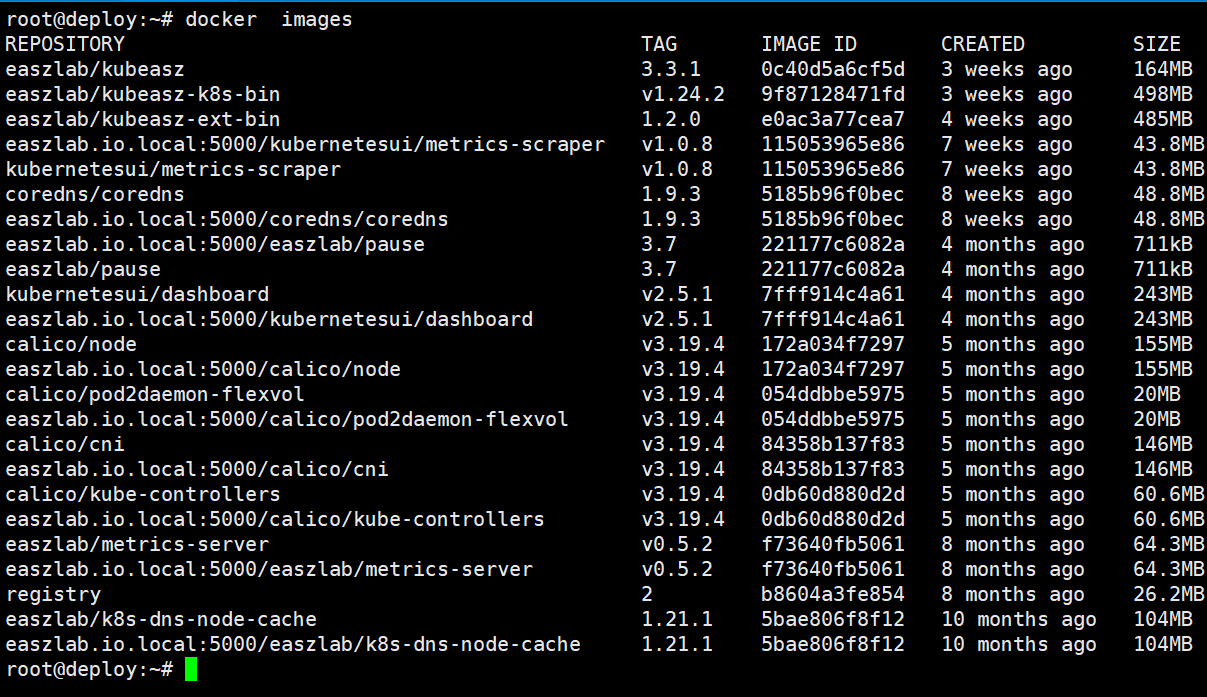

View the images

Upload all images downloaded on the deploy server to the local Harbor as an archive backup.

Add name resolution for the Harbor domain

root@deploy:/etc/kubeasz/clusters/k8s-cluster1# vim /etc/hosts

192.168.100.15 harbor.cncf.net

Distribute the hosts file to all cluster nodes

root@deploy:/etc/kubeasz/clusters/k8s-cluster1# for n in {2..10};do scp /etc/hosts 192.168.100.$n:/etc/;done

Create the certificate directory

root@deploy:/etc/kubeasz/clusters/k8s-cluster1# mkdir /etc/docker/certs.d/harbor.cncf.net -p

Copy the certificate from Harbor to the client

root@Harbor:/usr/local/harbor/certs# scp cncf.net.crt 192.168.100.16:/etc/docker/certs.d/harbor.cncf.net/

Log in to Harbor with docker

root@deploy:/etc/docker/certs.d# docker login harbor.cncf.net

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

Upload images to Harbor

Upload the pause image

root@deploy:/etc/docker/certs.d# docker tag easzlab/pause:3.7 harbor.cncf.net/baseimages/pause:3.7

root@deploy:/etc/docker/certs.d# docker push harbor.cncf.net/baseimages/pause:3.7

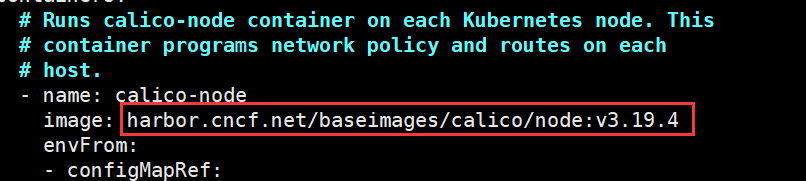

Upload the calico/node image

root@deploy:/etc/kubeasz# docker tag calico/node:v3.19.4 harbor.cncf.net/baseimages/calico/node:v3.19.4

root@deploy:/etc/kubeasz# docker push harbor.cncf.net/baseimages/calico/node:v3.19.4

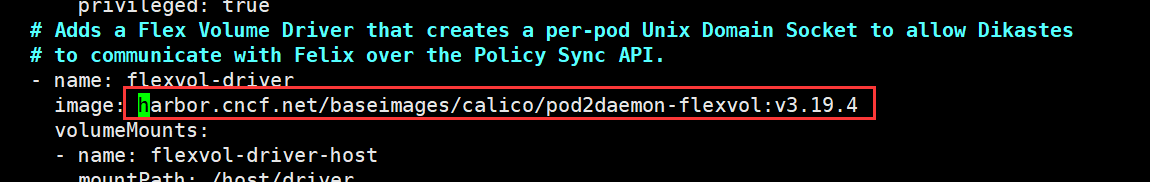

Upload the calico/pod2daemon-flexvol image

root@deploy:~# docker tag calico/pod2daemon-flexvol:v3.19.4 harbor.cncf.net/baseimages/calico/pod2daemon-flexvol:v3.19.4

root@deploy:~# docker push harbor.cncf.net/baseimages/calico/pod2daemon-flexvol:v3.19.4

Upload the calico/cni image

root@deploy:~# docker tag calico/cni:v3.19.4 harbor.cncf.net/baseimages/calico/cni:v3.19.4

root@deploy:~# docker push harbor.cncf.net/baseimages/calico/cni:v3.19.4

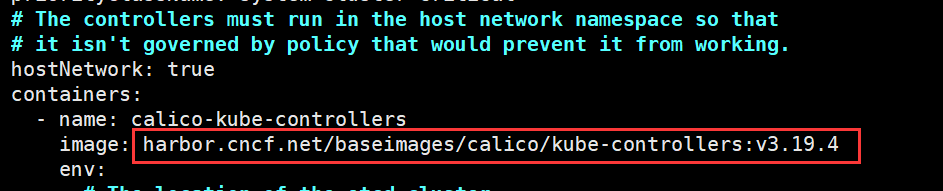

Upload the calico/kube-controllers image

root@deploy:~# docker tag calico/kube-controllers:v3.19.4 harbor.cncf.net/baseimages/calico/kube-controllers:v3.19.4

root@deploy:~# docker push harbor.cncf.net/baseimages/calico/kube-controllers:v3.19.4
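
The tag-and-push pairs above all follow the same pattern, so they can be expressed as one loop. The sketch below assumes the same registry and project (harbor.cncf.net/baseimages); `harbor_ref` and `push_to_harbor` are hypothetical helper names, and the `easzlab/` prefix is dropped to match how the pause image was retagged above.

```shell
#!/bin/bash
# Hypothetical helpers; registry and project are the ones used above.
HARBOR="harbor.cncf.net/baseimages"

# Map a local image name to its Harbor name, dropping the easzlab/ prefix
# the same way the pause image was retagged above.
harbor_ref() {
  echo "${HARBOR}/${1#easzlab/}"
}

# Tag and push every image given as an argument; stop at the first failure.
push_to_harbor() {
  local img
  for img in "$@"; do
    docker tag "$img" "$(harbor_ref "$img")" || return 1
    docker push "$(harbor_ref "$img")" || return 1
  done
}

# Example:
#   push_to_harbor easzlab/pause:3.7 calico/node:v3.19.4 calico/cni:v3.19.4
```

Stopping at the first failure keeps a failed login or a missing Harbor project from being buried under later output.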

Run the installation

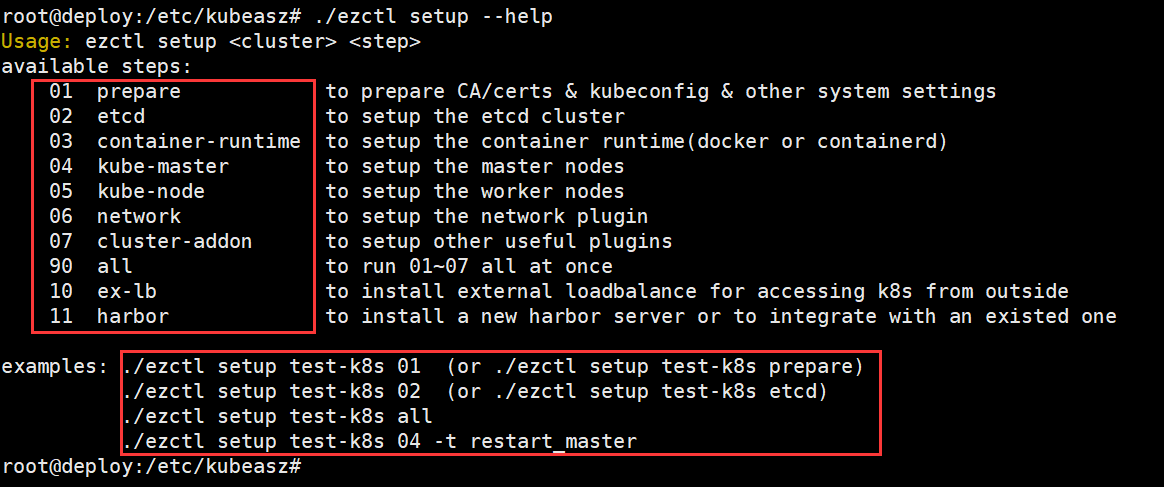

Using the ezctl script

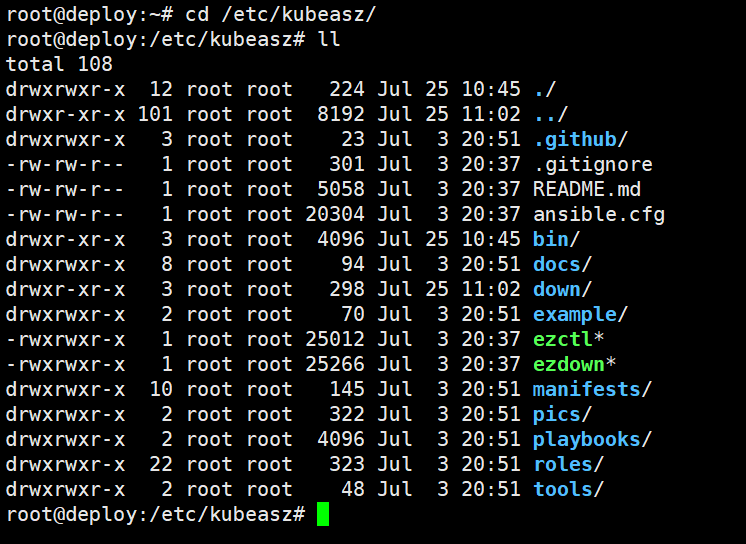

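After the script runs successfully, all files (kubeasz code, binaries, offline images) are organized under /etc/kubeasz.

Roles directory

/etc/kubeasz/roles/

Playbooks directory

/etc/kubeasz/playbooks/

Binaries for all components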

/etc/kubeasz/bin/

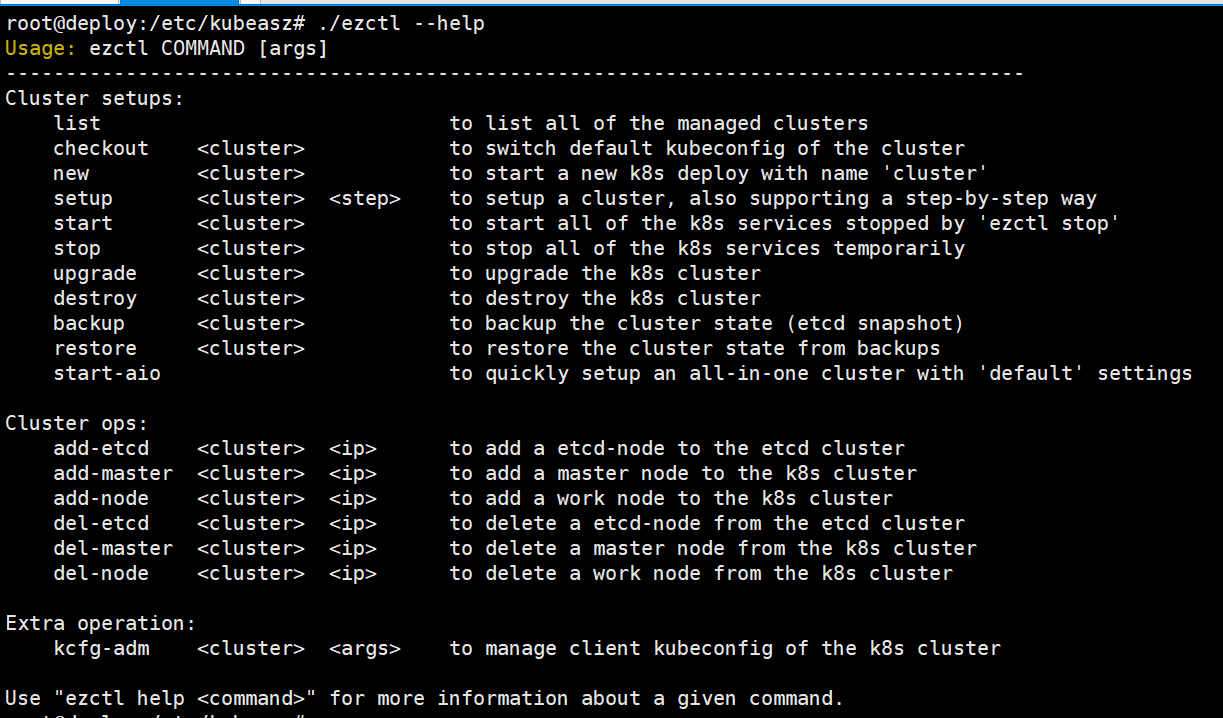

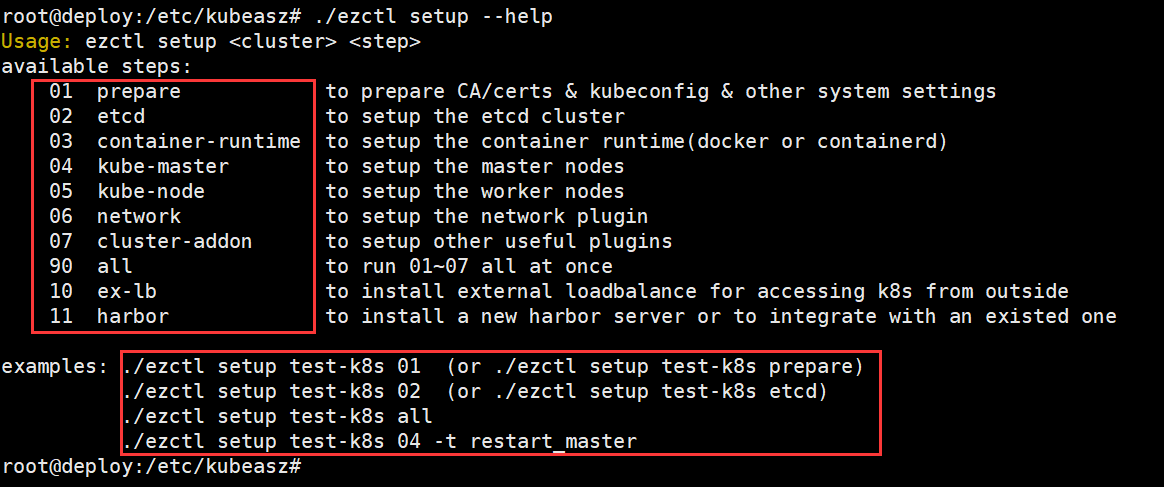

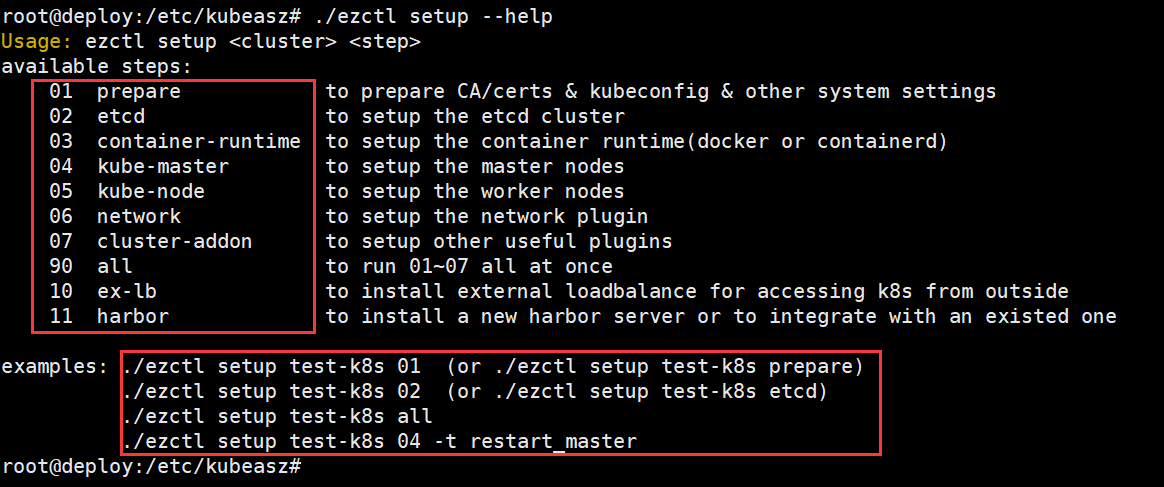

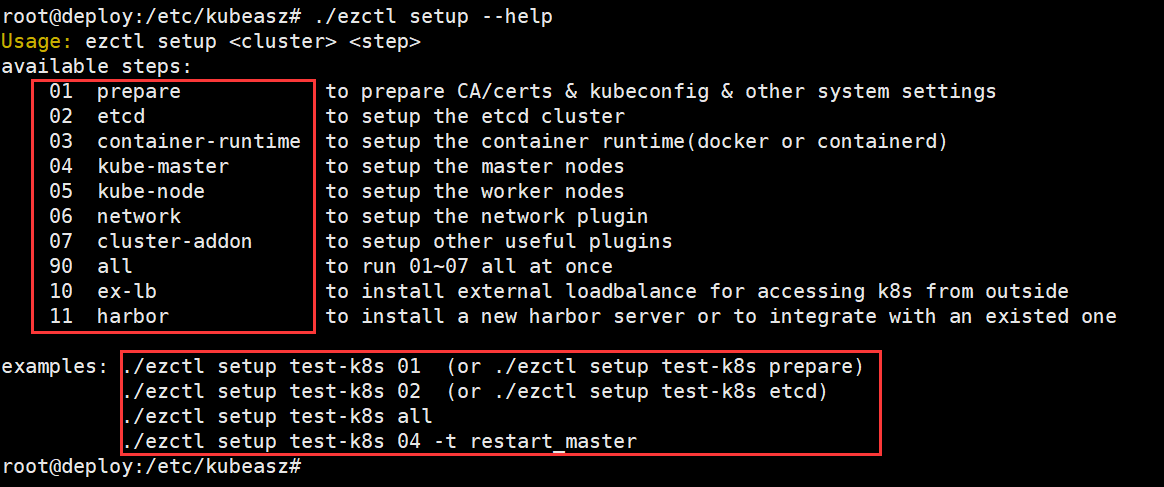

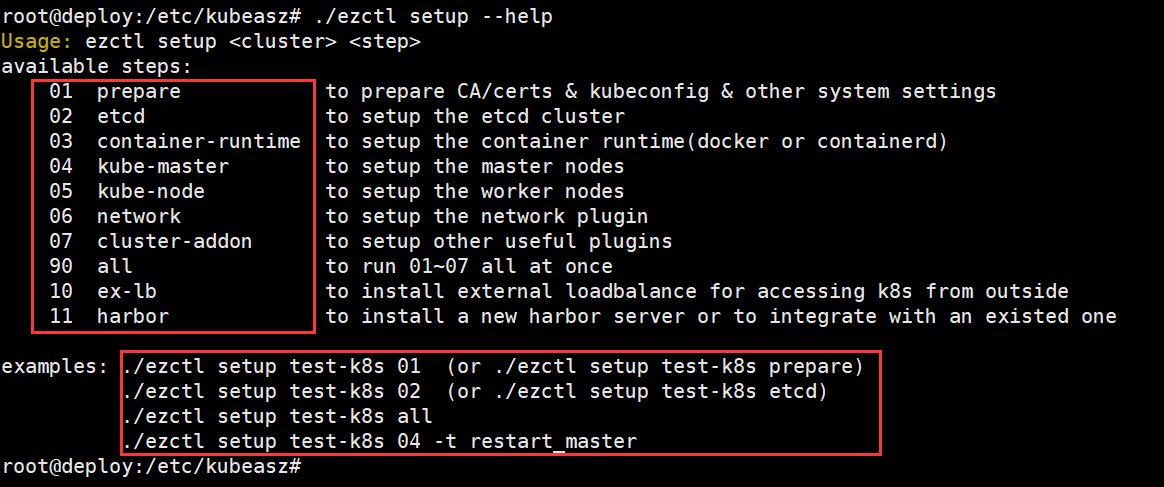

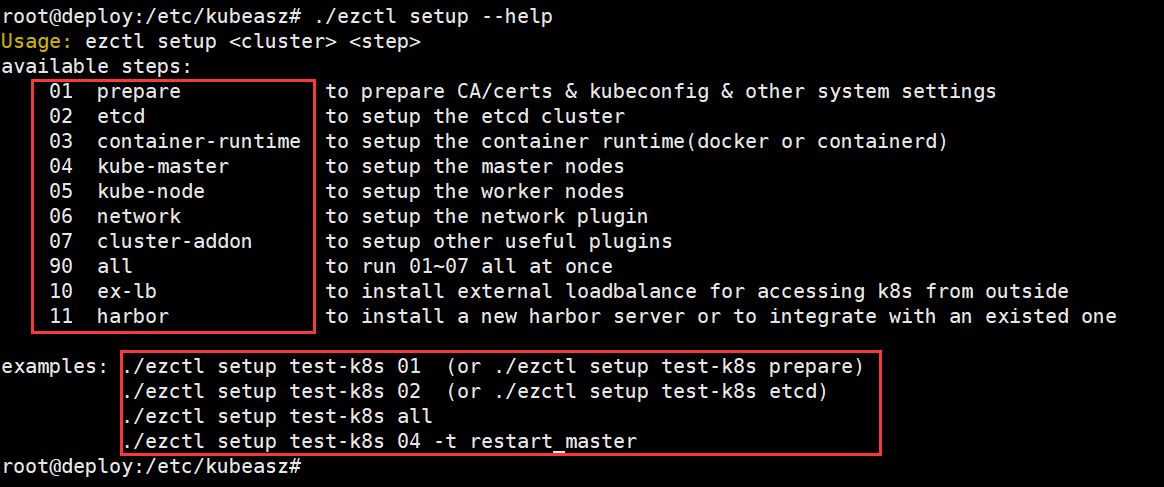

Look at the ezctl script under /etc/kubeasz; it wraps the ansible deployment playbooks. To see how it is used:

root@deploy:/etc/kubeasz# ./ezctl --help

Parameters:

new <cluster>: create a new k8s cluster configuration to deploy; <cluster> is the k8s cluster name

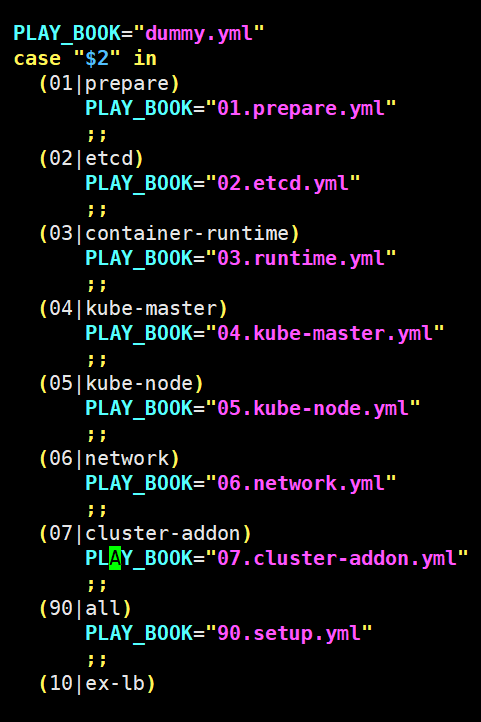

setup <cluster> <step>: install the k8s cluster step by step; <step> is the step name

root@deploy:/etc/kubeasz/clusters/k8s-cluster1# cd /etc/kubeasz/

root@deploy:/etc/kubeasz# ./ezctl setup --help

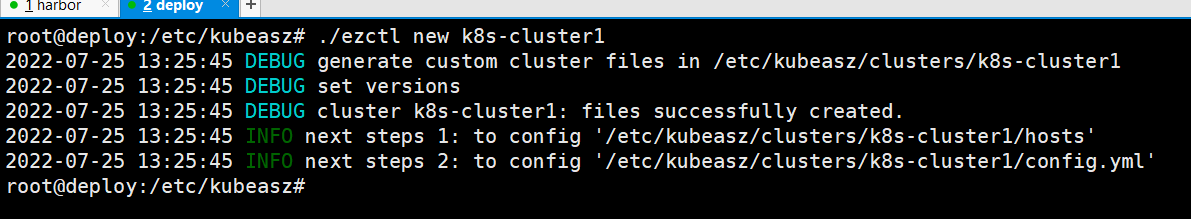

Create the cluster configuration

Create a cluster with the custom name k8s-cluster1

root@deploy:/etc/kubeasz# ./ezctl new k8s-cluster1

This creates a directory named k8s-cluster1 under /etc/kubeasz/clusters/ and places the ansible inventory (hosts) and the variables file (config.yml) in it.

Enter the cluster configuration directory

root@deploy:/etc/kubeasz# cd clusters/k8s-cluster1/

Edit the inventory file

root@deploy:/etc/kubeasz/clusters/k8s-cluster1# vim hosts

# 'etcd' cluster should have odd member(s) (1,3,5,...)

[etcd] # etcd cluster addresses

192.168.100.8

192.168.100.9

192.168.100.10

# master node(s) # k8s master addresses

[kube_master]

192.168.100.2

192.168.100.3

# work node(s) # k8s worker node addresses

[kube_node]

192.168.100.5

192.168.100.6

# [optional] harbor server, a private docker registry

# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one

[harbor]

#192.168.1.8 NEW_INSTALL=false

# [optional] loadbalance for accessing k8s from outside

[ex_lb]

#192.168.1.6 LB_ROLE=backup EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

#192.168.1.7 LB_ROLE=master EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

# [optional] ntp server for the cluster

[chrony]

#192.168.1.1

[all:vars]

# --------- Main Variables ---------------

# Secure port for apiservers

SECURE_PORT="6443" # apiserver port; the default is fine

# Cluster container-runtime supported: docker, containerd

# if k8s version >= 1.24, docker is not supported

CONTAINER_RUNTIME="containerd" # container runtime; the choice depends on the kubeasz and k8s versions

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn

CLUSTER_NETWORK="calico" # network plugin

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

PROXY_MODE="ipvs" # kube-proxy Service proxy mode

# K8S Service CIDR, not overlap with node(host) networking

SERVICE_CIDR="10.100.0.0/16" # Service network CIDR

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="10.200.0.0/16" # Pod network CIDR

# NodePort Range

NODE_PORT_RANGE="30000-62767" # NodePort range for Services

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="cluster.local" # cluster DNS domain suffix

# -------- Additional Variables (don't change the default value right now) ---

# Binaries Directory

bin_dir="/usr/local/bin" # where binaries are installed on cluster nodes

# Deploy Directory (kubeasz workspace)

base_dir="/etc/kubeasz" # kubeasz working directory

# Directory for a specific cluster

cluster_dir="{{ base_dir }}/clusters/k8s-cluster1" # configuration directory for this cluster

# CA and other components cert/key Directory

ca_dir="/etc/kubernetes/ssl" # directory for the CA and component certs and keys
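
The inventory itself notes that the etcd group should have an odd number of members. As a rough sanity check before running any setup step, the host lines under a group can be counted with awk. This is a sketch under assumptions: `count_group_hosts` is a hypothetical helper, and it only understands the simple layout shown above (one host per line, `#` for comments, `[group]` headers).

```shell
#!/bin/bash
# Hypothetical helper: count the hosts listed under one inventory group.
# Usage: count_group_hosts GROUP < hosts
count_group_hosts() {
  awk -v grp="[$1]" '
    /^\[/ { in_grp = ($1 == grp); next }   # a section header switches groups
    in_grp && NF && $1 !~ /^#/ { n++ }     # skip blank and commented lines
    END { print n + 0 }
  '
}

# Example:
#   n=$(count_group_hosts etcd < /etc/kubeasz/clusters/k8s-cluster1/hosts)
#   [ $((n % 2)) -eq 1 ] || echo "etcd has $n members; an odd count is expected"
```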

Edit config.yml to adjust the configuration of the k8s components to be installed

root@deploy:/etc/kubeasz/clusters/k8s-cluster1# vim config.yml

############################

# prepare

############################

# Optionally install system packages offline (offline|online)

INSTALL_SOURCE: "online"

# Optionally apply OS security hardening, see github.com/dev-sec/ansible-collection-hardening

OS_HARDEN: false

############################

# role:deploy

############################

# default: ca will expire in 100 years

# default: certs issued by the ca will expire in 50 years

CA_EXPIRY: "876000h"

CERT_EXPIRY: "438000h"

# kubeconfig parameters

CLUSTER_NAME: "cluster1"

CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"

# k8s version

K8S_VER: "1.24.2"

############################

# role:etcd

############################

# Using a separate WAL directory avoids disk I/O contention and improves performance

ETCD_DATA_DIR: "/var/lib/etcd"

ETCD_WAL_DIR: ""

############################

# role:runtime [containerd,docker]

############################

# ------------------------------------------- containerd

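# [.] Enable container registry mirrors

ENABLE_MIRROR_REGISTRY: true

# [containerd] Sandbox (pause) base image

SANDBOX_IMAGE: "harbor.cncf.net/baseimages/pause:3.7" # changed to the local Harbor registry address

# [containerd] Persistent storage directory for containers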

CONTAINERD_STORAGE_DIR: "/var/lib/containerd"

# ------------------------------------------- docker

# [docker] Container storage directory

DOCKER_STORAGE_DIR: "/var/lib/docker"

# [docker] Enable the RESTful API

ENABLE_REMOTE_API: false

# [docker] Trusted HTTP registries

INSECURE_REG: '["http://easzlab.io.local:5000"]'

############################

# role:kube-master

############################

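# Certificate hosts for the k8s master nodes; multiple IPs and domain names can be added (for example a public IP and domain) # add the VIP used for master high availability and load balancing here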

MASTER_CERT_HOSTS:

- "192.168.100.188"

- "k8s.easzlab.io"

#- "www.test.com"

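# Pod subnet mask length on each node (determines the maximum number of pod IPs a node can allocate)

# If flannel runs with --kube-subnet-mgr, it reads this setting to assign a pod subnet to each node # calico's address block length defaults to 26 bits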

# https://github.com/coreos/flannel/issues/847

NODE_CIDR_LEN: 24

############################

# role:kube-node

############################

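# Kubelet root directory

KUBELET_ROOT_DIR: "/var/lib/kubelet"

# Maximum number of pods per node # adjust the per-node pod limit here

MAX_PODS: 500

# Resources reserved for kube components (kubelet, kube-proxy, dockerd, etc.)

# See templates/kubelet-config.yaml.j2 for the values

KUBE_RESERVED_ENABLED: "no"

# Upstream k8s advises against enabling system-reserved casually, unless long-term monitoring has shown you the system's resource usage;

# the reservation also needs to grow as the system keeps running; see templates/kubelet-config.yaml.j2 for the values

# The system reservation values assume a 4c/8g VM with a minimal set of system services; on high-performance physical machines the reservation can be increased

# Also, apiserver and other components briefly use more resources during cluster installation; reserving at least 1 GB of memory is recommended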

SYS_RESERVED_ENABLED: "no"

############################

# role:network [flannel,calico,cilium,kube-ovn,kube-router]

############################

# ------------------------------------------- flannel

# [flannel] flannel backend: "host-gw", "vxlan", etc.

FLANNEL_BACKEND: "vxlan"

DIRECT_ROUTING: false

# [flannel] flanneld_image: "quay.io/coreos/flannel:v0.10.0-amd64"

flannelVer: "v0.15.1"

flanneld_image: "easzlab.io.local:5000/easzlab/flannel:{{ flannelVer }}"

# ------------------------------------------- calico

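# [calico] Setting CALICO_IPV4POOL_IPIP="off" can improve network performance; see docs/setup/calico.md for the constraints

CALICO_IPV4POOL_IPIP: "Always"

# [calico] Host IP used by calico-node; BGP peerings are established over this address. It can be set manually or auto-detected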

IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"

# [calico] calico networking backend: bird, vxlan, none

CALICO_NETWORKING_BACKEND: "bird"

# [calico] Whether calico uses route reflectors

# Recommended when the cluster has more than 50 nodes

CALICO_RR_ENABLED: false

# CALICO_RR_NODES lists the route reflector nodes; if unset, the cluster master nodes are used

# CALICO_RR_NODES: ["192.168.1.1", "192.168.1.2"]

CALICO_RR_NODES: []

# [calico] Supported calico versions: [v3.3.x] [v3.4.x] [v3.8.x] [v3.15.x]

calico_ver: "v3.19.4"

# [calico] calico major.minor version

calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"

# ------------------------------------------- cilium

# [cilium] Image version

cilium_ver: "1.11.6"

cilium_connectivity_check: true

cilium_hubble_enabled: false

cilium_hubble_ui_enabled: false

# ------------------------------------------- kube-ovn

# [kube-ovn] Node for the OVN DB and OVN Control Plane; defaults to the first master node

OVN_DB_NODE: "{{ groups['kube_master'][0] }}"

# [kube-ovn] Offline image tarball

kube_ovn_ver: "v1.5.3"

# ------------------------------------------- kube-router

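# [kube-router] Public clouds impose restrictions and usually need ipinip always enabled; in your own environment this can be set to "subnet"

OVERLAY_TYPE: "full"

# [kube-router] Toggle NetworkPolicy support

FIREWALL_ENABLE: true

# [kube-router] kube-router image version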

kube_router_ver: "v0.3.1"

busybox_ver: "1.28.4"

############################

# role:cluster-addon

############################

# Install coredns automatically

dns_install: "no" # disabled here

corednsVer: "1.9.3"

ENABLE_LOCAL_DNS_CACHE: false

dnsNodeCacheVer: "1.21.1"

# Address of the local DNS cache

LOCAL_DNS_CACHE: "169.254.20.10"

# Install metrics-server automatically

metricsserver_install: "no" # disabled here

metricsVer: "v0.5.2"

# Install dashboard automatically # disabled here

dashboard_install: "no"

dashboardVer: "v2.5.1"

dashboardMetricsScraperVer: "v1.0.8"

# Install prometheus automatically # disabled here

prom_install: "no"

prom_namespace: "monitor"

prom_chart_ver: "35.5.1"

# Install nfs-provisioner automatically # disabled here

nfs_provisioner_install: "no"

nfs_provisioner_namespace: "kube-system"

nfs_provisioner_ver: "v4.0.2"

nfs_storage_class: "managed-nfs-storage"

nfs_server: "192.168.1.10"

nfs_path: "/data/nfs"

# Install network-check automatically # disabled here

network_check_enabled: false

network_check_schedule: "*/5 * * * *"

############################

# role:harbor

############################

# harbor version, full version number

HARBOR_VER: "v2.1.3"

HARBOR_DOMAIN: "harbor.easzlab.io.local"

HARBOR_TLS_PORT: 8443

# if set 'false', you need to put certs named harbor.pem and harbor-key.pem in directory 'down'

HARBOR_SELF_SIGNED_CERT: true

# install extra component

HARBOR_WITH_NOTARY: false

HARBOR_WITH_TRIVY: false

HARBOR_WITH_CLAIR: false

HARBOR_WITH_CHARTMUSEUM: true

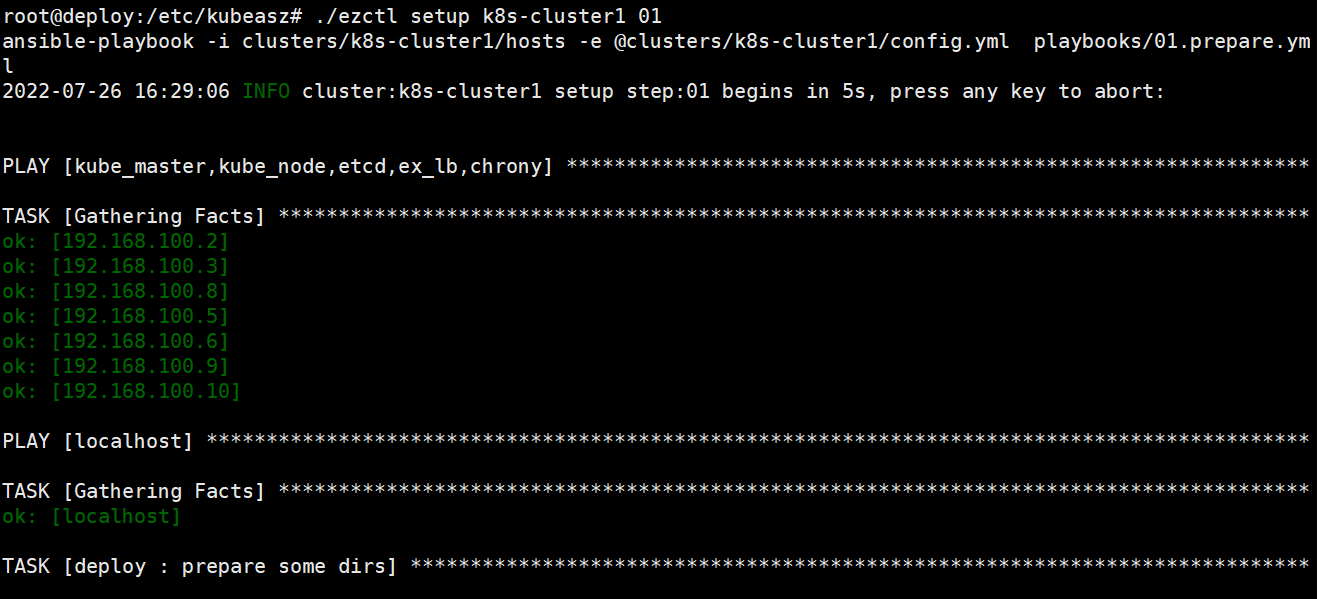

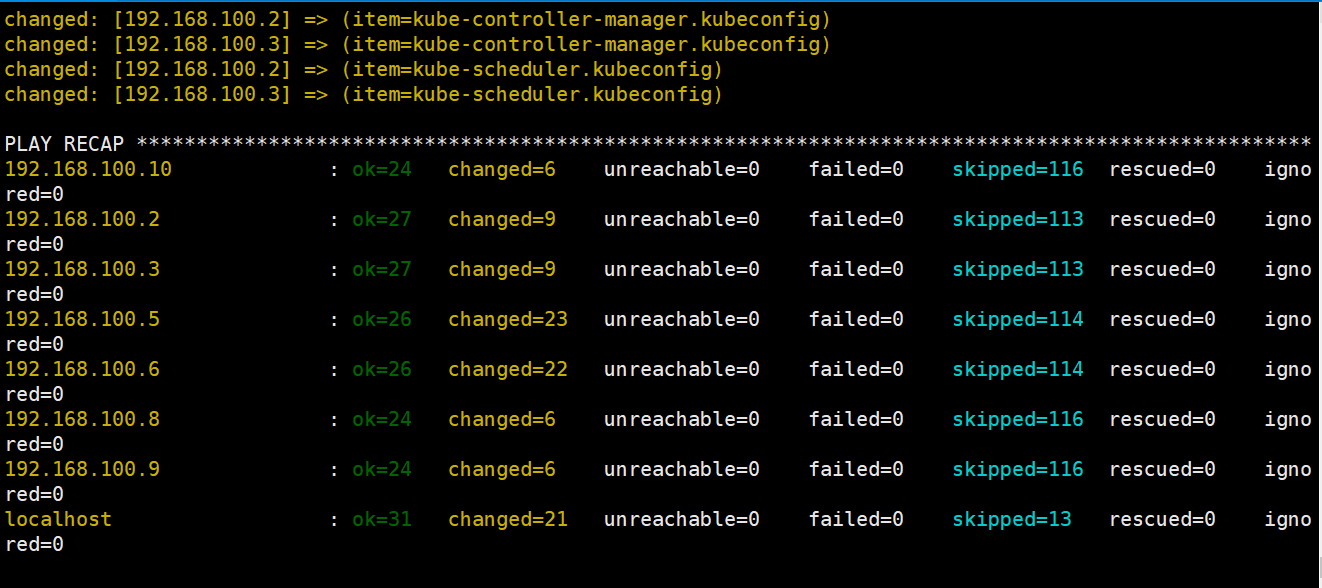
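
The relationship between NODE_CIDR_LEN, CLUSTER_CIDR, and MAX_PODS is plain prefix arithmetic, sketched below with two hypothetical helper functions. It applies most directly when the network plugin hands each node one fixed subnet (flannel with --kube-subnet-mgr, as the config comment says). Calico's own IPAM allocates address blocks (/26 by default) to nodes on demand, so the per-node limit works differently there; treat the numbers as an illustration rather than a statement about this cluster's actual capacity.

```shell
#!/bin/bash
# Hypothetical helpers for prefix arithmetic.

# Number of IPv4 addresses in a prefix of the given length.
addrs_in_prefix() {
  echo $(( 1 << (32 - $1) ))
}

# Number of per-node subnets of length $2 that fit into a cluster CIDR of length $1.
node_subnets() {
  echo $(( 1 << ($2 - $1) ))
}

# Examples:
#   addrs_in_prefix 24     # one /24 node subnet (NODE_CIDR_LEN: 24)
#   node_subnets 16 24     # /24 node subnets inside CLUSTER_CIDR 10.200.0.0/16
#   addrs_in_prefix 26     # one default-size calico block
```

`addrs_in_prefix 24` prints 256, which is below MAX_PODS: 500, and `node_subnets 16 24` prints 256, so with fixed /24 subnets the pod limit per node would be bounded by the subnet rather than by MAX_PODS.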

Initialize the cluster installation

root@deploy:/etc/kubeasz/clusters/k8s-cluster1# cd /etc/kubeasz/

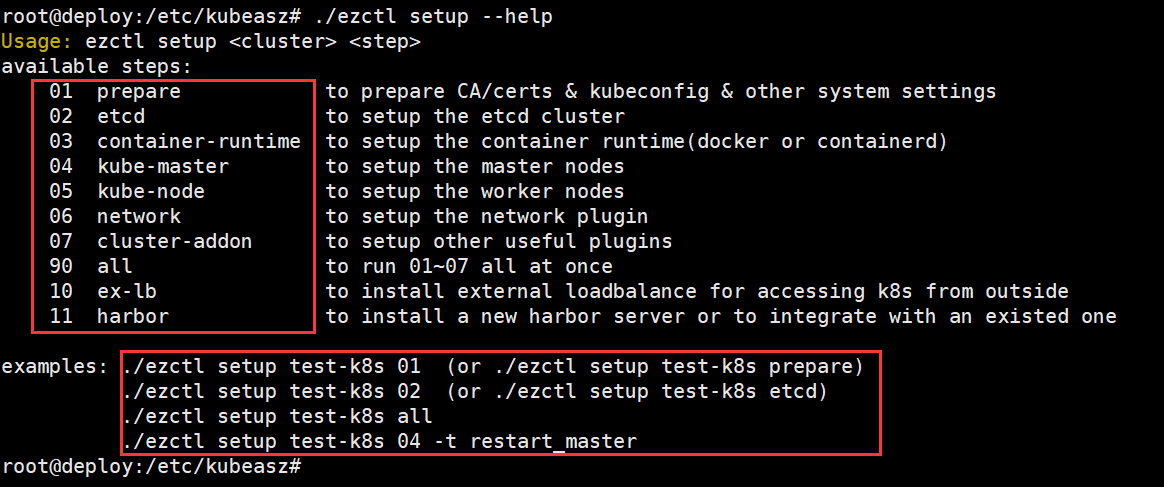

root@deploy:/etc/kubeasz# ./ezctl setup --help

root@deploy:/etc/kubeasz# ./ezctl setup k8s-cluster1 01

Playbook file: /etc/kubeasz/playbooks/01.prepare.yml

Role task files: /etc/kubeasz/roles/prepare/tasks

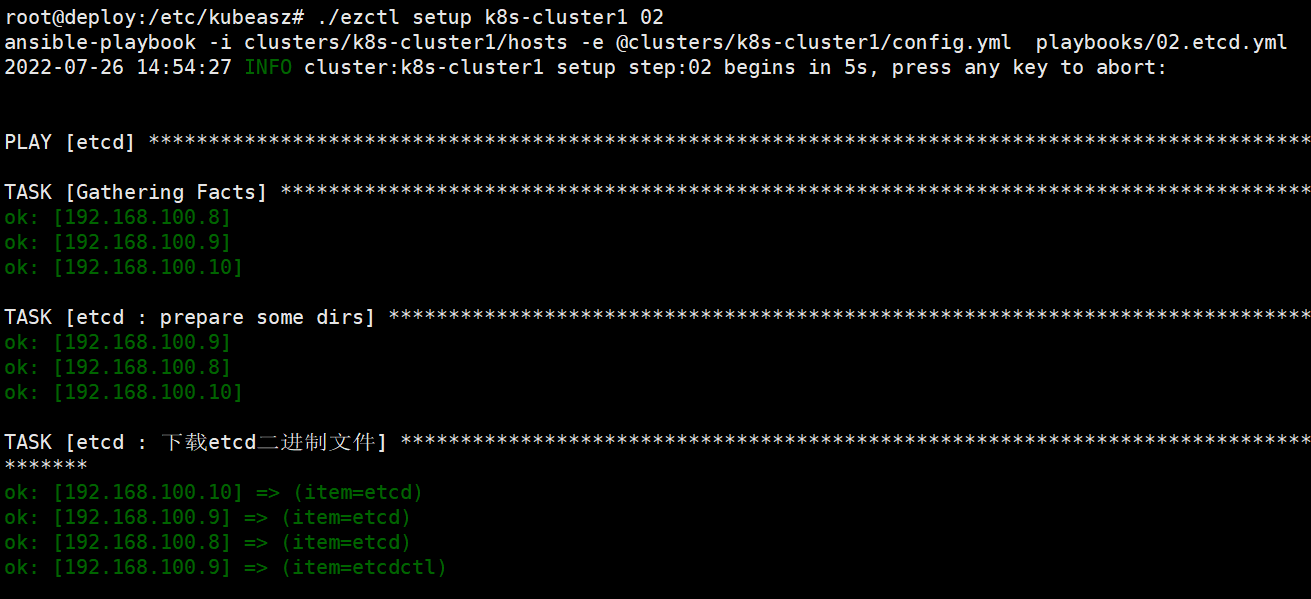

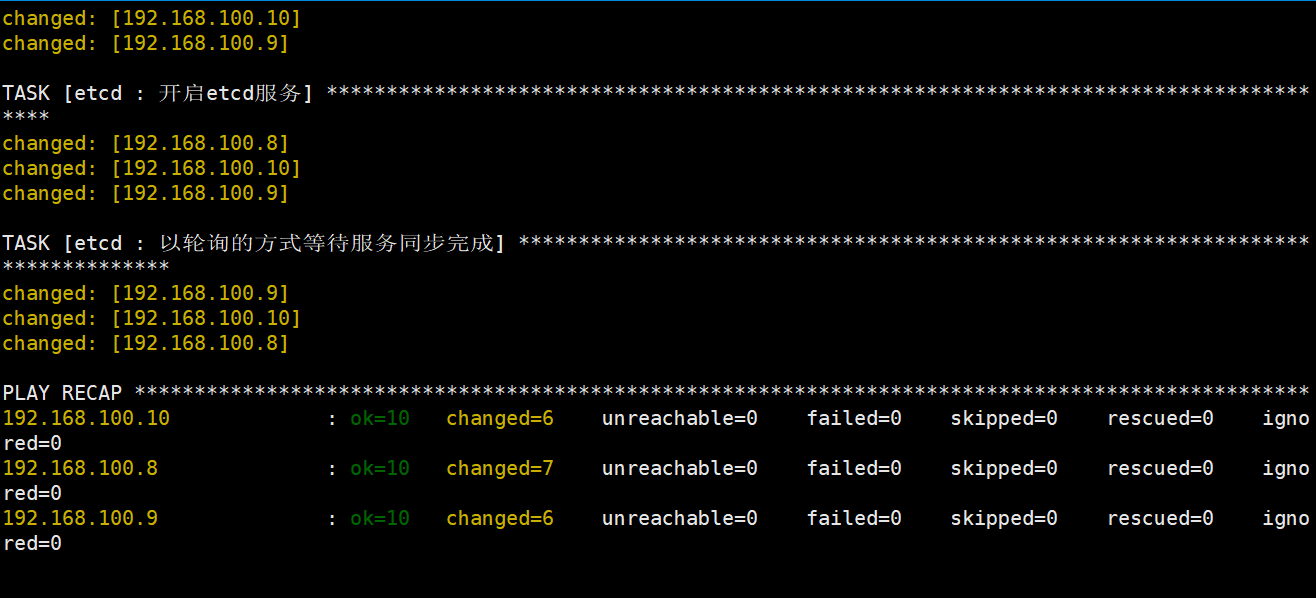

Deploy the etcd cluster

root@deploy:/etc/kubeasz/clusters/k8s-cluster1# cd /etc/kubeasz/

root@deploy:/etc/kubeasz# ./ezctl setup --help

root@deploy:/etc/kubeasz# ./ezctl setup k8s-cluster1 02

Playbook file: /etc/kubeasz/playbooks/02.etcd.yml

Role task files: /etc/kubeasz/roles/etcd/tasks

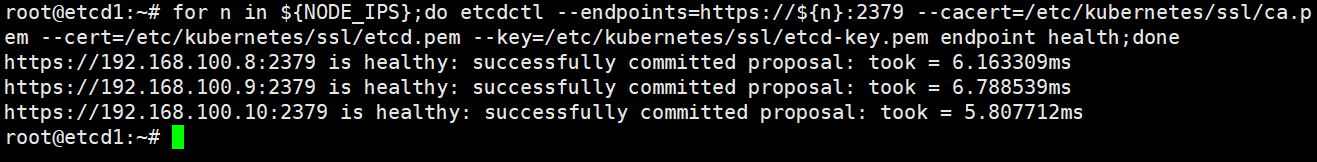

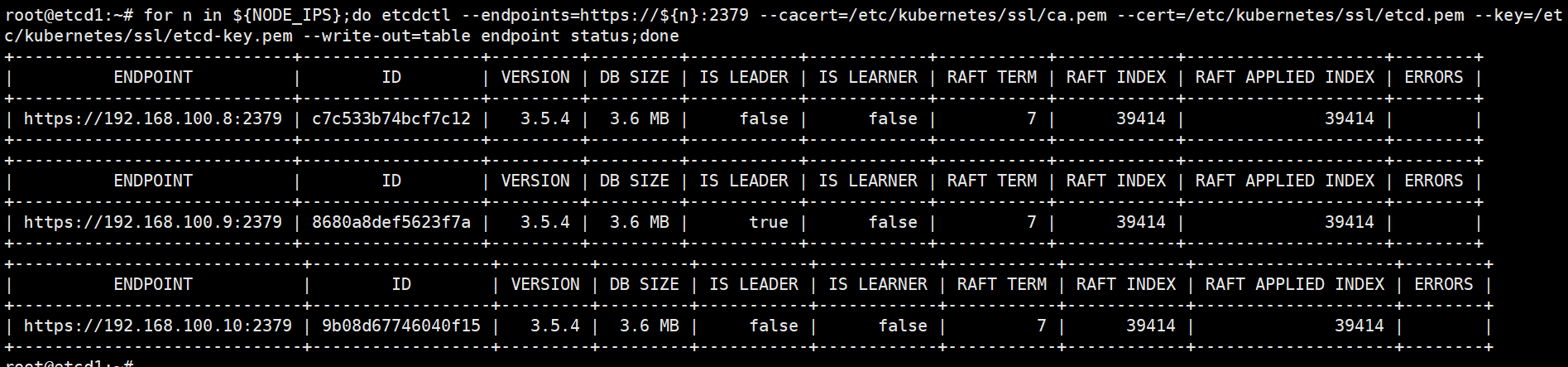

Log in to an etcd node and check the status of the members

root@etcd1:~# export NODE_IPS="192.168.100.8 192.168.100.9 192.168.100.10"

root@etcd1:~# for n in ${NODE_IPS};do etcdctl --endpoints=https://${n}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health;done

root@etcd1:~# for n in ${NODE_IPS};do etcdctl --endpoints=https://${n}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem --write-out=table endpoint status;done

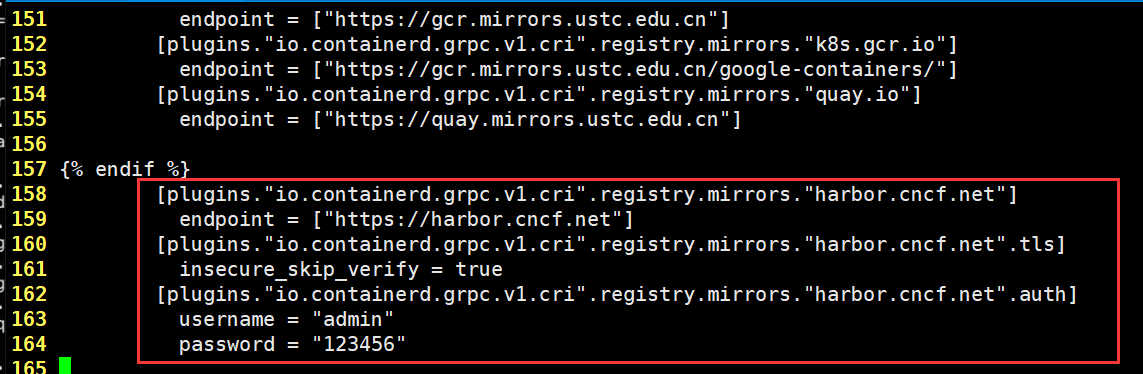

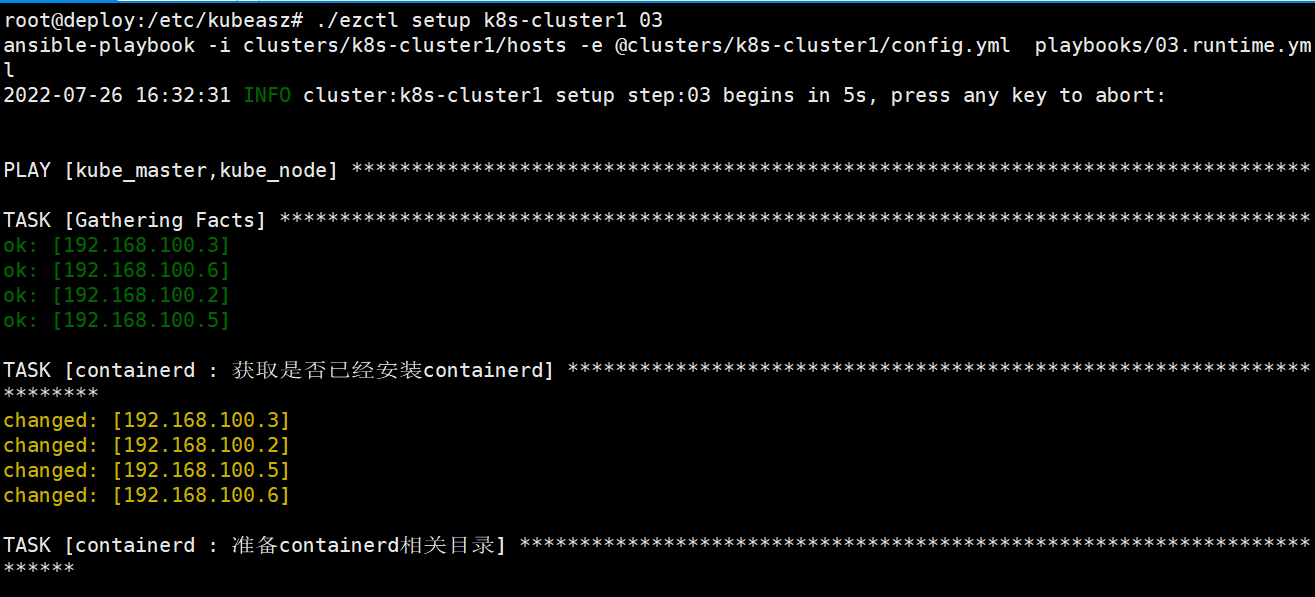

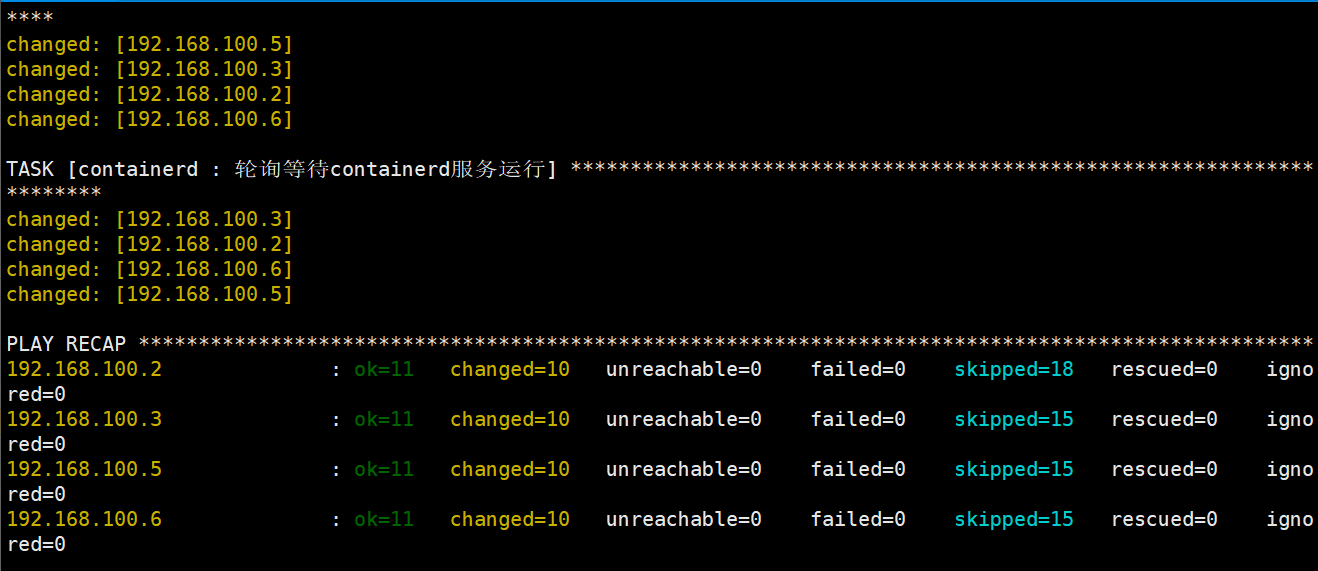
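
The loops above query one endpoint per etcdctl invocation. etcdctl also accepts a comma-separated list in `--endpoints`, which yields a single table for all members. The helper below is a sketch: `etcd_endpoints` is a hypothetical name, and the client port 2379 and certificate paths are the ones used in the commands above.

```shell
#!/bin/bash
# Hypothetical helper: join etcd member IPs into the comma-separated
# value etcdctl accepts for --endpoints (client port 2379, as used above).
etcd_endpoints() {
  local out="" ip
  for ip in "$@"; do
    out="${out:+${out},}https://${ip}:2379"
  done
  echo "$out"
}

# Example (on an etcd node, same certificate paths as above):
#   etcdctl --endpoints="$(etcd_endpoints ${NODE_IPS})" \
#     --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem \
#     --key=/etc/kubernetes/ssl/etcd-key.pem --write-out=table endpoint status
```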

Deploy the cluster container runtime

root@deploy:/etc/kubeasz/clusters/k8s-cluster1# cd /etc/kubeasz/

root@deploy:/etc/kubeasz# ./ezctl setup --help

Edit the containerd configuration template to add authentication for the local cluster's Harbor registry

root@deploy:/etc/kubeasz# cd roles/containerd/templates/

root@deploy:/etc/kubeasz/roles/containerd/templates# vim config.toml.j2

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor.cncf.net"]

endpoint = ["https://harbor.cncf.net"]

[plugins."io.containerd.grpc.v1.cri".registry.configs."harbor.cncf.net".tls]

insecure_skip_verify = true

[plugins."io.containerd.grpc.v1.cri".registry.configs."harbor.cncf.net".auth]

username = "admin"

password = "123456"

root@deploy:/etc/kubeasz# ./ezctl setup k8s-cluster1 03

Playbook file: /etc/kubeasz/playbooks/03.runtime.yml

Role task files: /etc/kubeasz/roles/containerd/tasks

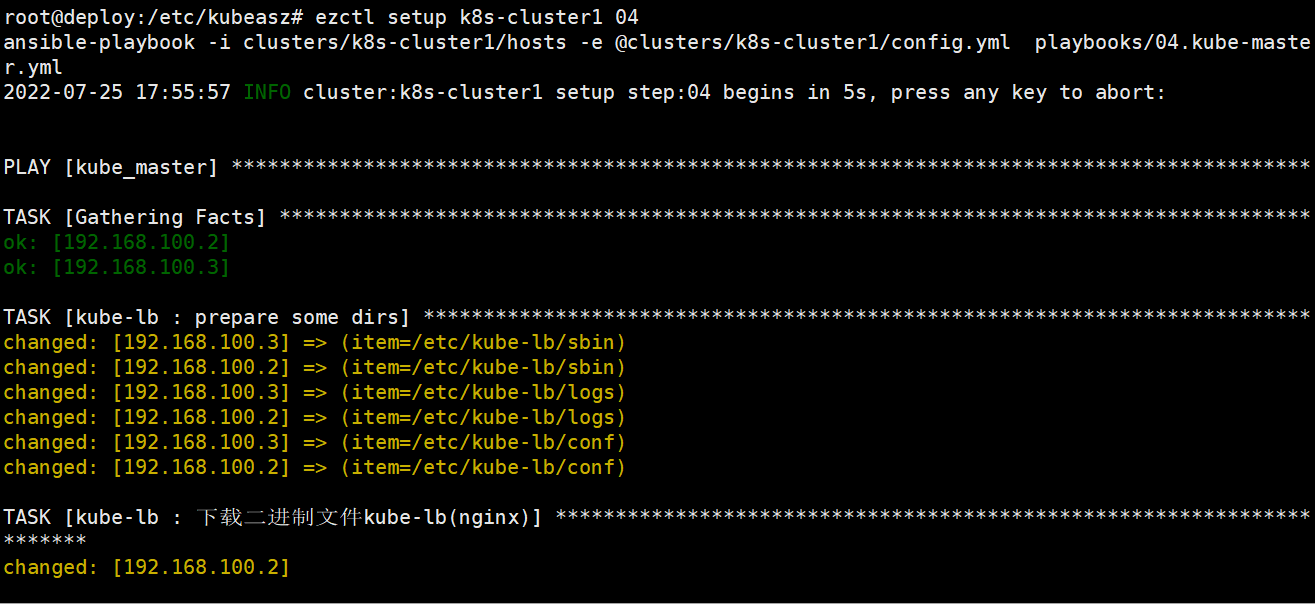

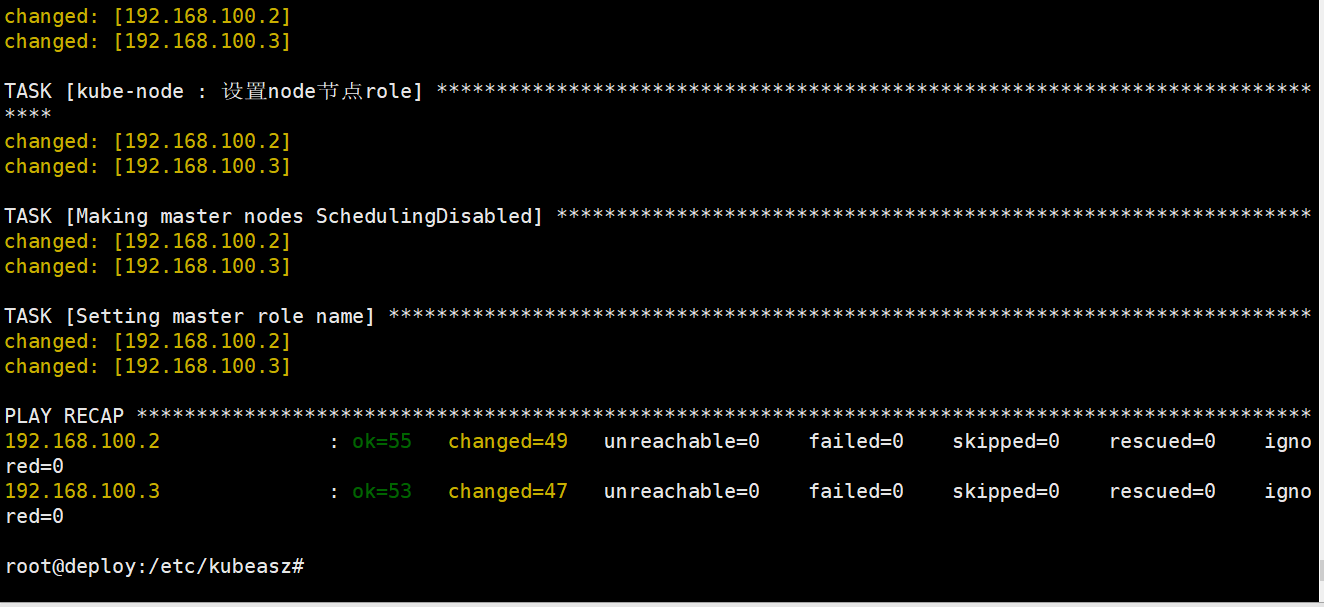

Deploy the k8s masters

root@deploy:/etc/kubeasz/clusters/k8s-cluster1# cd /etc/kubeasz/

root@deploy:/etc/kubeasz# ./ezctl setup --help

root@deploy:/etc/kubeasz# ./ezctl setup k8s-cluster1 04

Playbook file: /etc/kubeasz/playbooks/04.kube-master.yml

Role task files: /etc/kubeasz/roles/kube-master/tasks

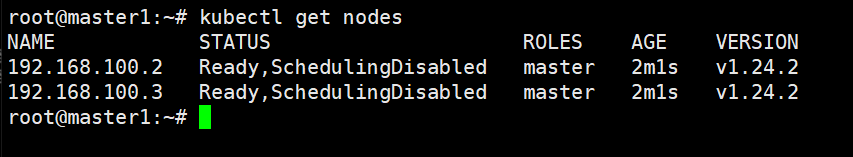

Verify the Kubernetes master nodes

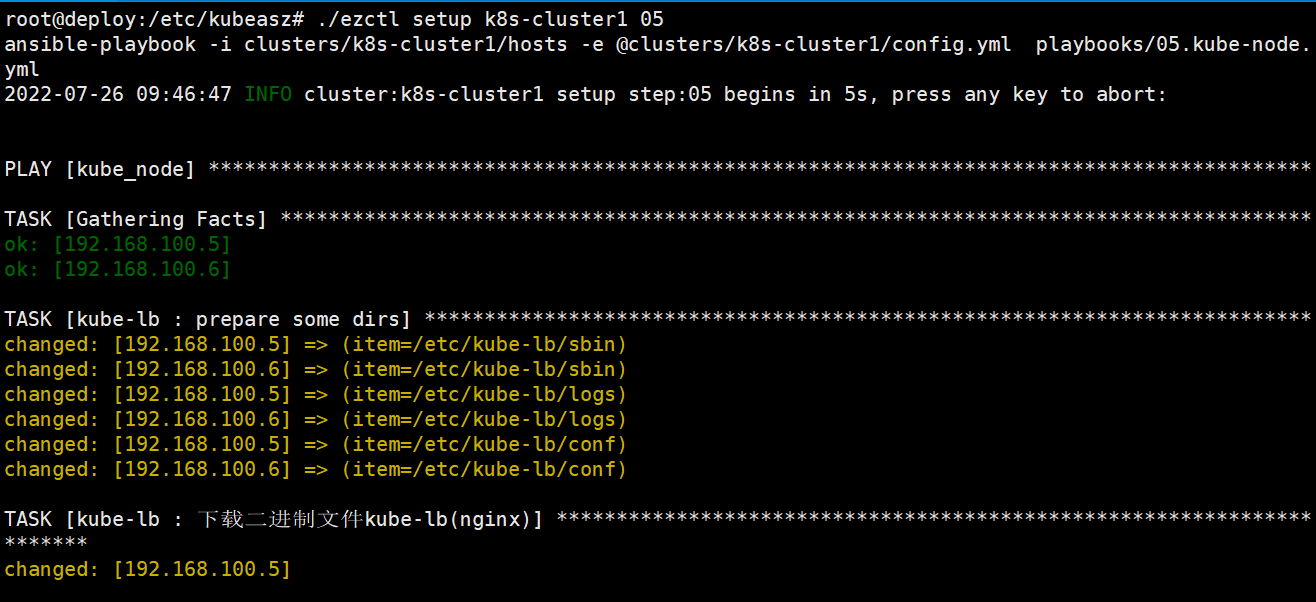

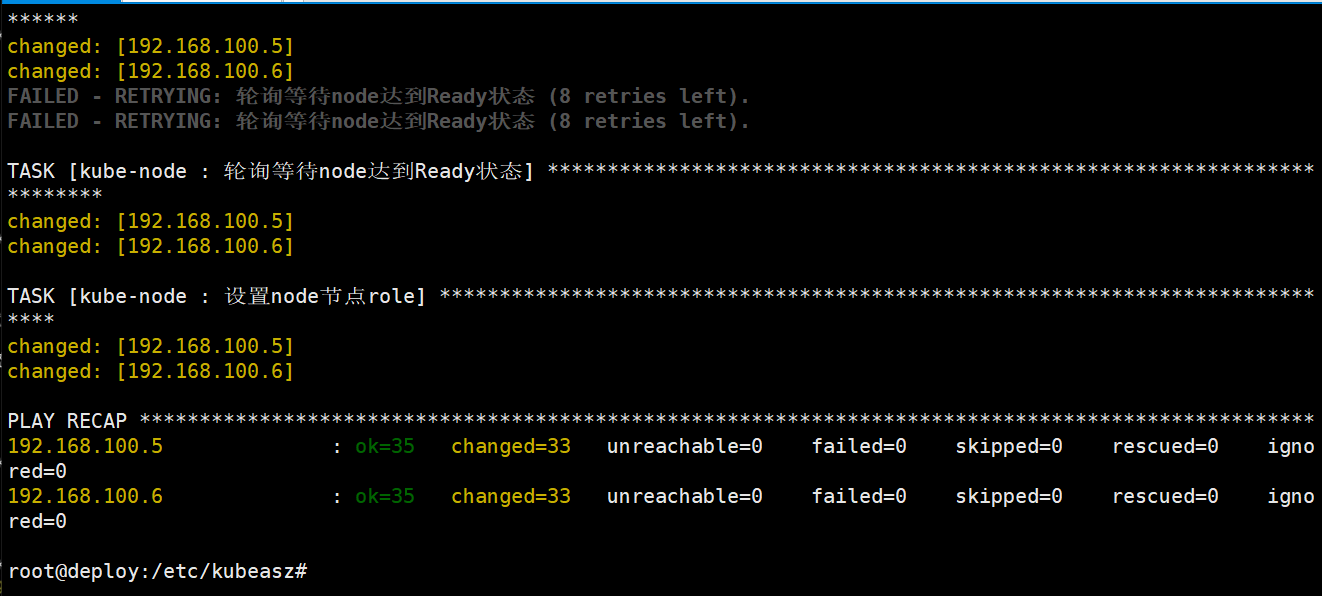

Deploy the k8s worker nodes

root@deploy:/etc/kubeasz/clusters/k8s-cluster1# cd /etc/kubeasz/

root@deploy:/etc/kubeasz# ./ezctl setup --help

root@deploy:/etc/kubeasz# ./ezctl setup k8s-cluster1 05

Playbook file: /etc/kubeasz/playbooks/05.kube-node.yml

Role task files: /etc/kubeasz/roles/kube-node/tasks/

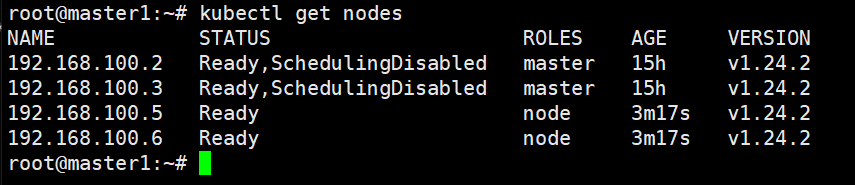

Verify the nodes

root@master1:~# kubectl get nodes

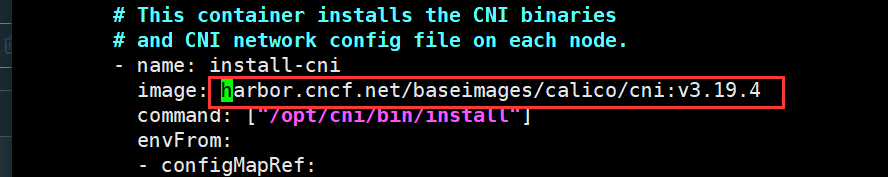

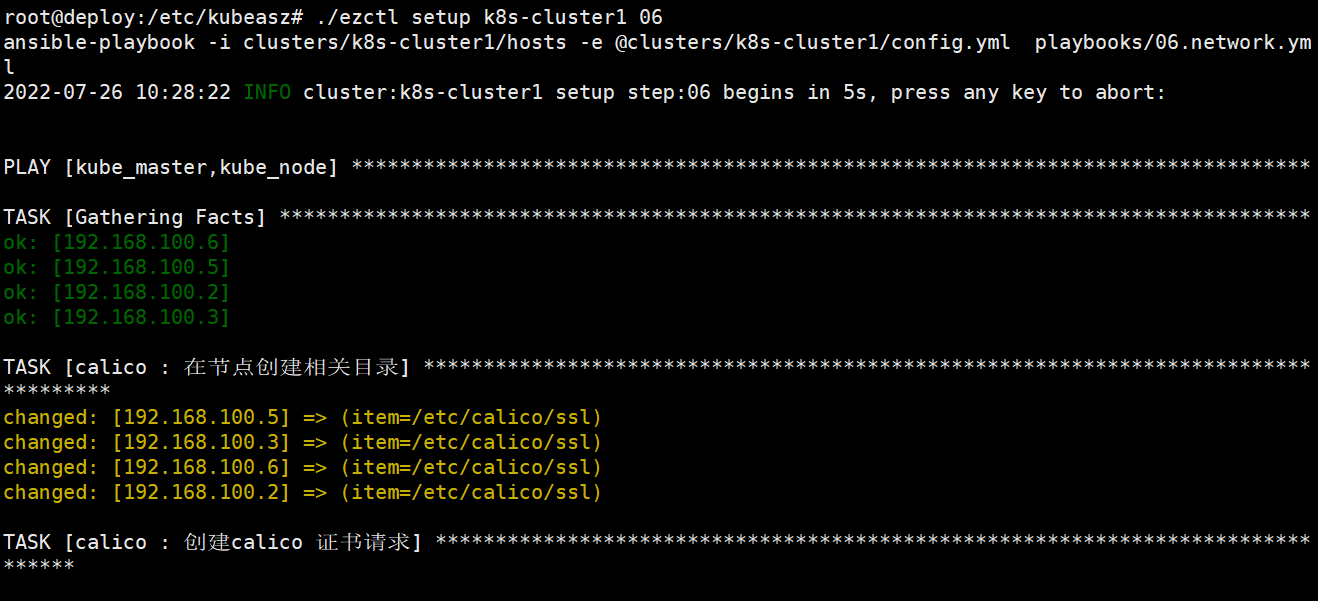

Deploy the calico network plugin

root@deploy:/etc/kubeasz/clusters/k8s-cluster1# cd /etc/kubeasz/

root@deploy:/etc/kubeasz# ./ezctl setup --help

Change all image references to the local Harbor registry address

root@deploy:/etc/kubeasz# cd roles/calico/templates/

root@deploy:/etc/kubeasz/roles/calico/templates# vim calico-v3.19.yaml.j2

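Change the calico/cni image registry address

Change the calico/pod2daemon-flexvol image registry address

Change the calico/node image registry address

Change the calico/kube-controllers image registry address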

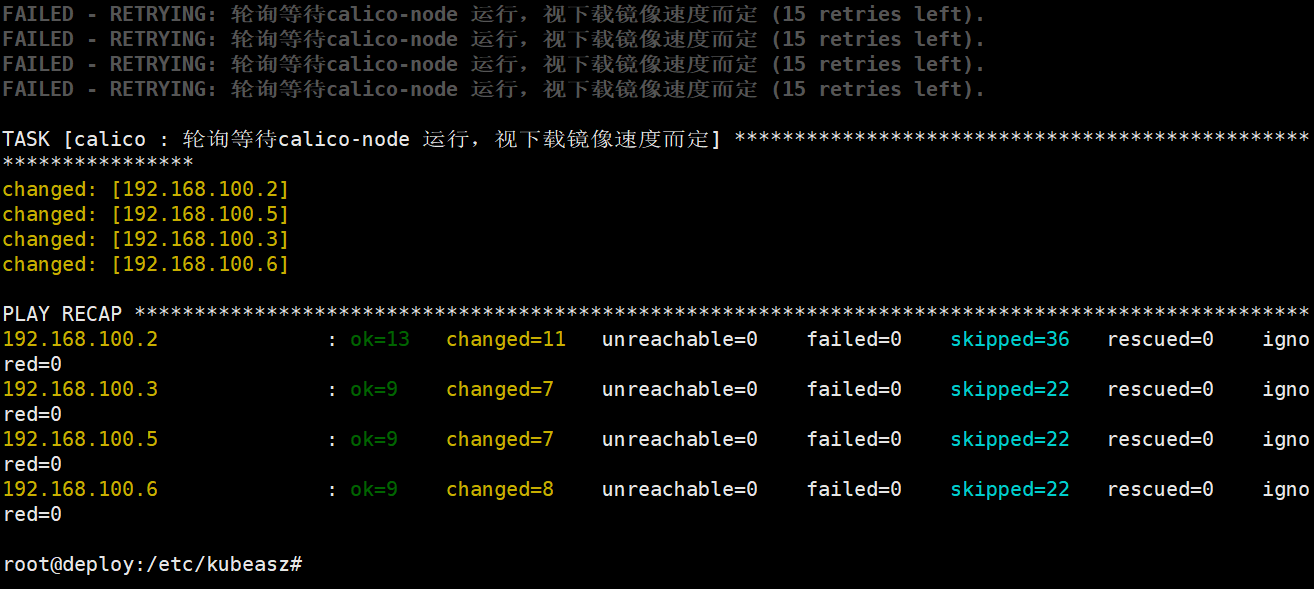

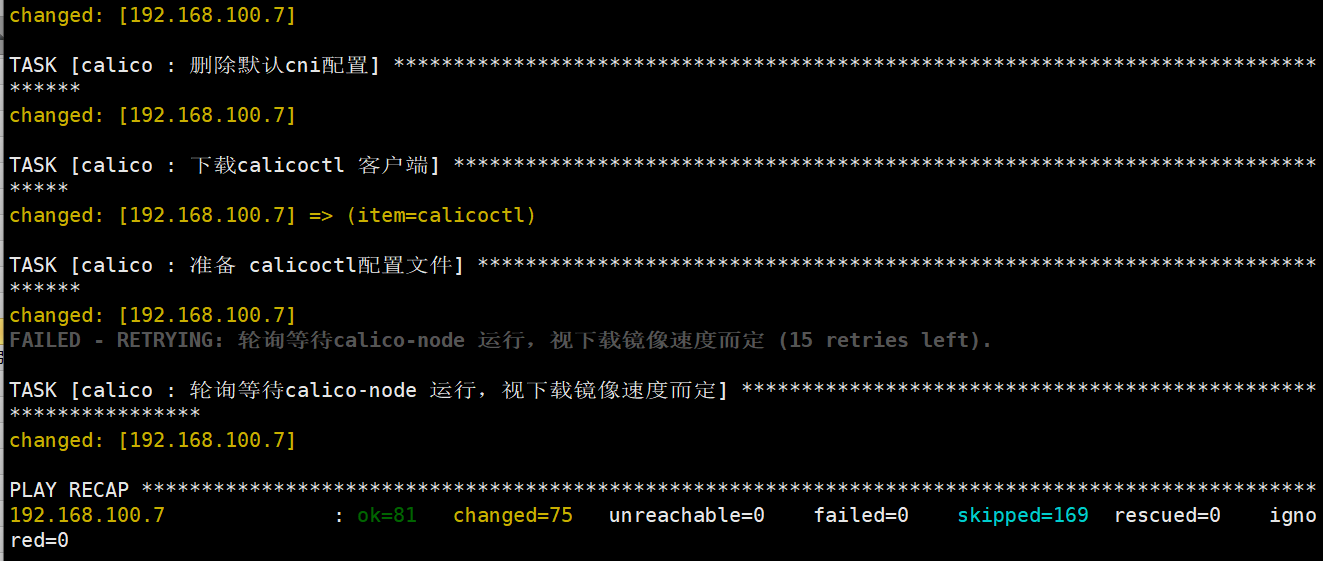
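
The four image edits can also be previewed with sed before touching the template. This is a sketch under a loud assumption: it presumes the image lines in calico-v3.19.yaml.j2 have the form `image: <registry>/calico/<name>:<tag>` (or `image: calico/<name>:<tag>`), which should be checked against the actual file. `calico_images_to_harbor` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: rewrite calico image references read on stdin so they
# point at the local Harbor project. Any registry prefix in front of
# "calico/" is replaced; the image name and tag are left untouched.
calico_images_to_harbor() {
  sed -E 's#(image:[[:space:]]*)([^[:space:]]*/)?(calico/)#\1harbor.cncf.net/baseimages/\3#'
}

# Example (preview only, the template is not modified):
#   calico_images_to_harbor < calico-v3.19.yaml.j2 | grep 'image:'
```

Because an existing harbor.cncf.net/baseimages/ prefix is replaced by itself, running the filter on an already edited template leaves it unchanged.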

root@deploy:/etc/kubeasz# ./ezctl setup k8s-cluster1 06

Playbook file: /etc/kubeasz/playbooks/06.network.yml

Role task files: /etc/kubeasz/roles/calico/tasks

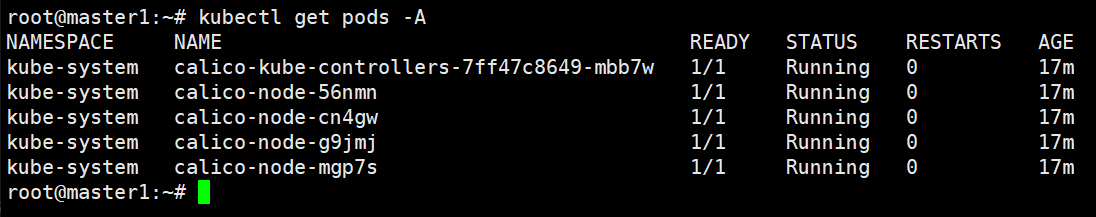
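
Steps 01 through 06 were run one at a time above. When rebuilding a cluster whose templates are already edited (the containerd registry settings before step 03 and the calico image addresses before step 06), the same sequence can be wrapped in a loop that stops at the first failing step. This is a sketch: `run_steps` and the `EZCTL` variable are hypothetical names, and only the `setup <cluster> <step>` form shown above is used.

```shell
#!/bin/bash
# Path to ezctl; override EZCTL to dry-run with another command.
EZCTL="${EZCTL:-/etc/kubeasz/ezctl}"

# Run the given setup steps in order for one cluster, stopping at the
# first failure. Usage: run_steps CLUSTER STEP...
run_steps() {
  local cluster="$1" step
  shift
  for step in "$@"; do
    echo "== setup ${cluster} ${step}"
    "$EZCTL" setup "$cluster" "$step" || { echo "step ${step} failed" >&2; return 1; }
  done
}

# Example (run from /etc/kubeasz, as in the commands above):
#   run_steps k8s-cluster1 01 02 03 04 05 06
```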

Verify the nodes:

A simple k8s test

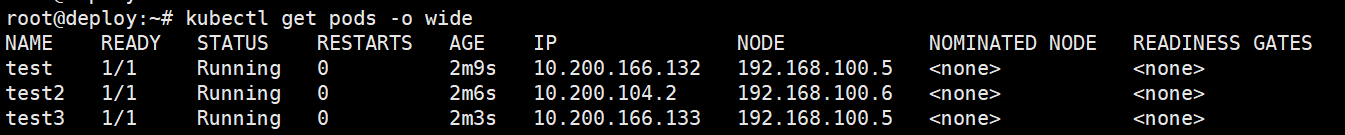

root@deploy:/etc/kubeasz# kubectl create ns test

root@deploy:~# kubectl config set-context --namespace test --current

root@deploy:~# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* context-cluster1 cluster1 admin test

root@deploy:~# kubectl run test --image=centos:7.9.2009 sleep 100000

root@deploy:~# kubectl run test2 --image=centos:7.9.2009 sleep 100000

root@deploy:~# kubectl run test3 --image=centos:7.9.2009 sleep 100000

root@deploy:~# kubectl get pods

NAME READY STATUS RESTARTS AGE

test 0/1 ContainerCreating 0 3s

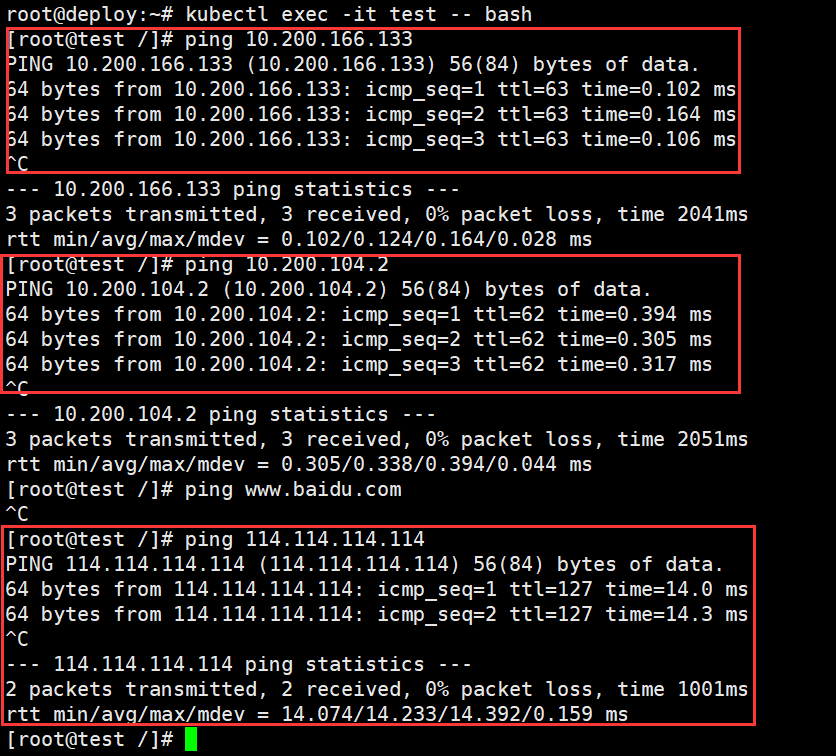

Test the pod network:

1. Test networking between pods on different nodes

2. Test access to external networks
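
The two checks can be scripted with kubectl against the test pods created above. The helpers below are a sketch: `pod_ip` and `ping_pod` are hypothetical names, the current context is assumed to point at the test namespace (as set earlier), and the container image is assumed to provide ping.

```shell
#!/bin/bash
# Hypothetical helpers for the two pod network checks.

# Print the IP of a pod in the current namespace.
pod_ip() {
  kubectl get pod "$1" -o jsonpath='{.status.podIP}'
}

# Ping pod $2 from inside pod $1 (assumes the image provides ping).
ping_pod() {
  local target
  target="$(pod_ip "$2")"
  [ -n "$target" ] || { echo "no IP found for pod $2" >&2; return 1; }
  kubectl exec "$1" -- ping -c 3 "$target"
}

# Examples:
#   kubectl get pods -o wide      # shows which node each pod landed on
#   ping_pod test test2           # pod-to-pod check; pick pods on different nodes
#   kubectl exec test -- ping -c 3 <external-address>   # external access check
```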

Kubernetes cluster maintenance

Node maintenance

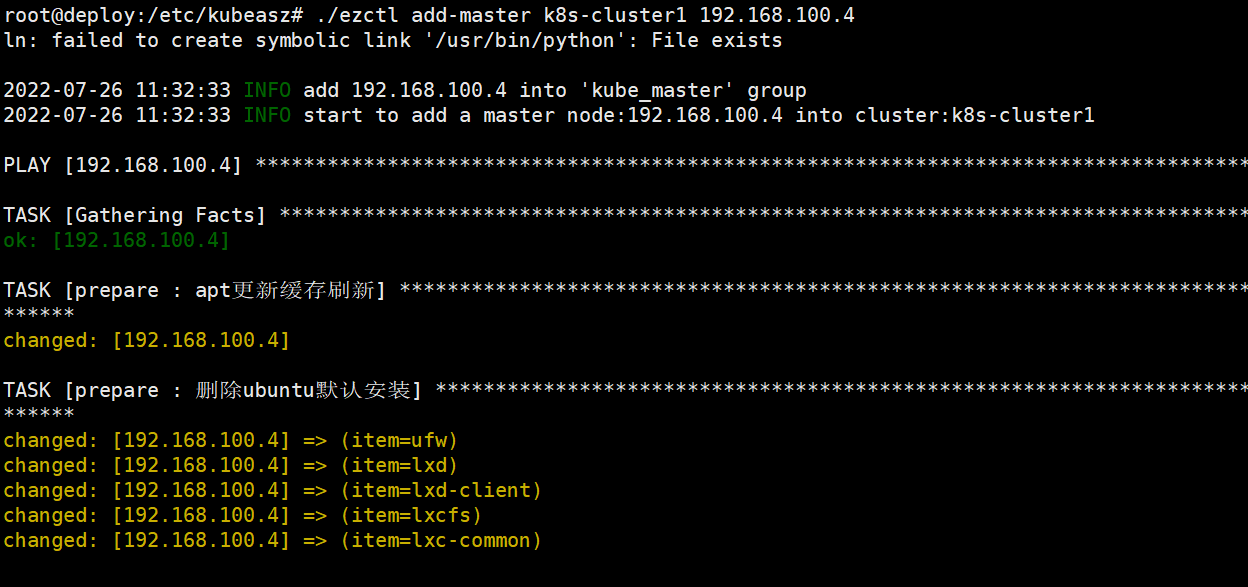

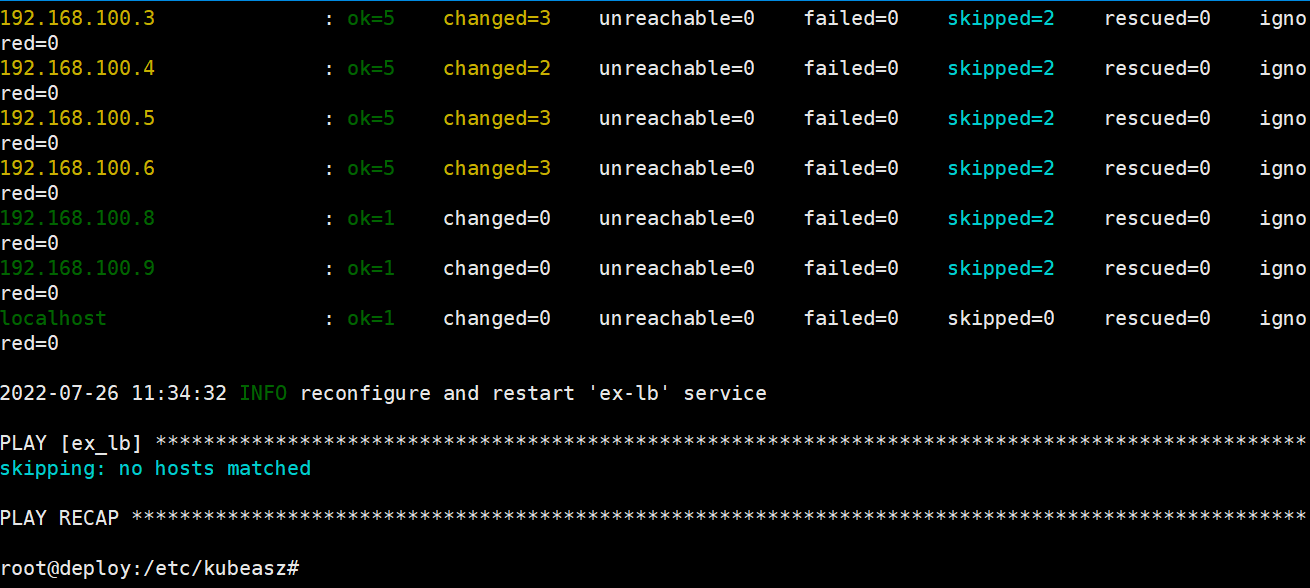

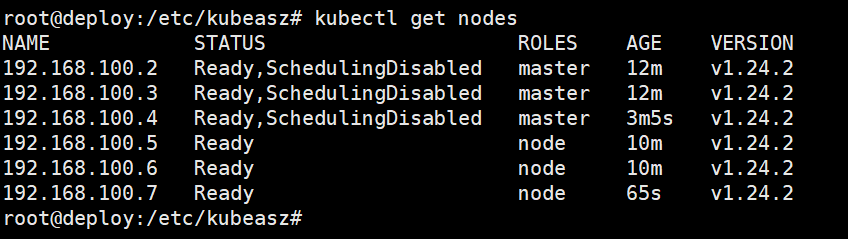

1. Add a master node

root@deploy:/etc/kubeasz# ./ezctl add-master k8s-cluster1 192.168.100.4

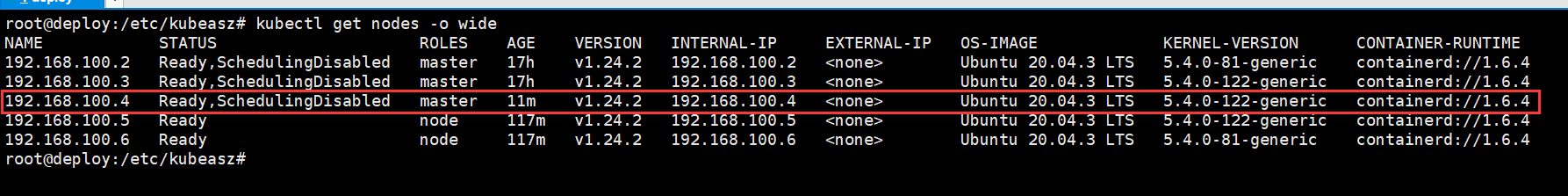

Verify the node

2. Add a worker node

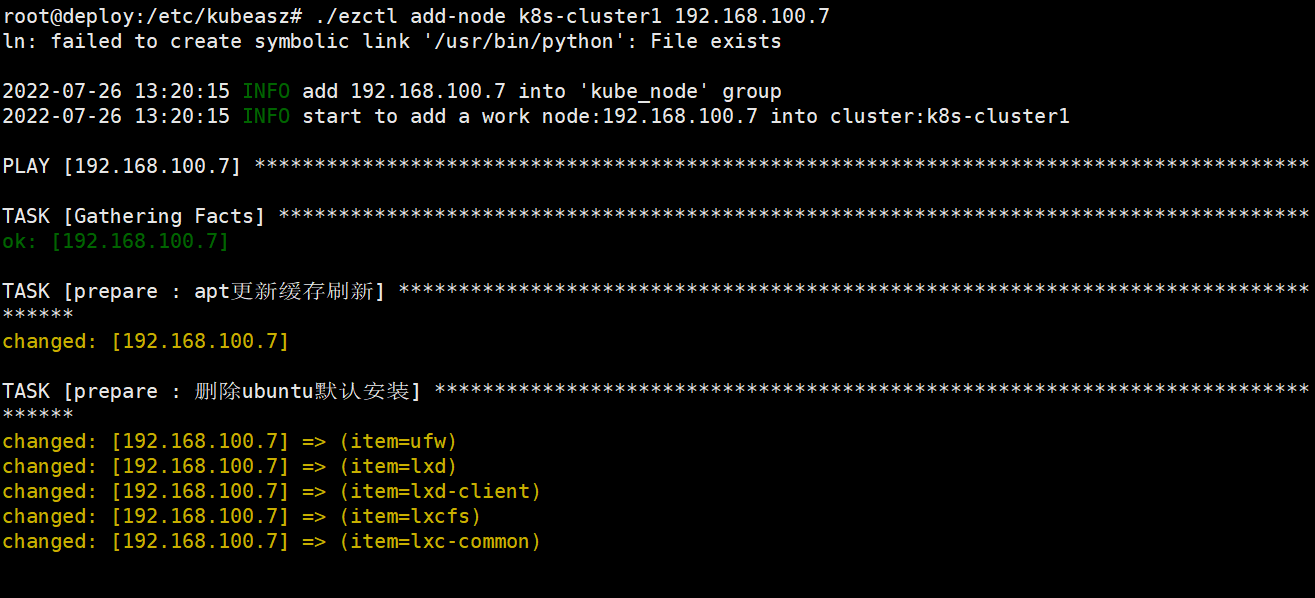

root@deploy:/etc/kubeasz# ./ezctl add-node k8s-cluster1 192.168.100.7

Verify the worker node
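
One way to verify a newly added node is to list any node whose status is not Ready. The filter below is a sketch: `not_ready_nodes` is a hypothetical helper that reads `kubectl get nodes --no-headers` output, and it treats statuses such as `Ready,SchedulingDisabled` (typical for masters) as ready.

```shell
#!/bin/bash
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the names of nodes whose STATUS does not start with "Ready".
not_ready_nodes() {
  awk 'NF && $2 !~ /^Ready/ { print $1 }'
}

# Example:
#   kubectl get nodes --no-headers | not_ready_nodes
```

An empty result means every listed node, including 192.168.100.4 and 192.168.100.7 once they have joined, reports Ready.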

This article is from cnblogs (博客园), author: PunchLinux. When reposting, please cite the original link: https://www.cnblogs.com/punchlinux/p/16524596.html

