[Repost] Part4 – OPENVSWITCH – Playing with Bonding on Openvswitch

Bonding is the aggregation of multiple links into a single logical link in order to increase throughput and provide redundancy in case one of the links fails. At least, the officials say so, so it must be true. Now, let me ask the question: how can we configure bonding?

From what I know about bonding, there are two ways to configure it.

The first option is to load the bonding module into the kernel and use the ifenslave utility to configure it. Configuration steps for Microcore are described here.

If you want to have as much fun with bonding configuration as I had, check this lab for reference.

http://brezular.com/2011/03/10/etherchannelvrrp-dhcp-ospf-configuration-cisco-vyatta-microcore/

As this tutorial is dedicated to bonding on Openvswitch, I should focus on that topic. It is the second option: configuring bonding without loading the bonding module into the Linux kernel.

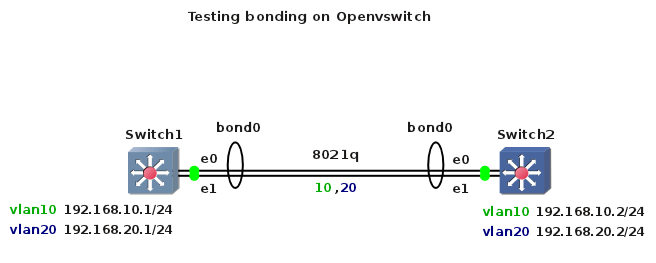

Let’s say we have two Microcore Linux boxes connected by two links. As usual, our topology is created using GNS3 and Qemu images of Microcore, with Openvswitch 1.2.2 and Quagga 0.99.20 installed on top of Microcore.

Nothing in the world can prevent you from downloading my Openvswitch Microcore Qemu image, available here.

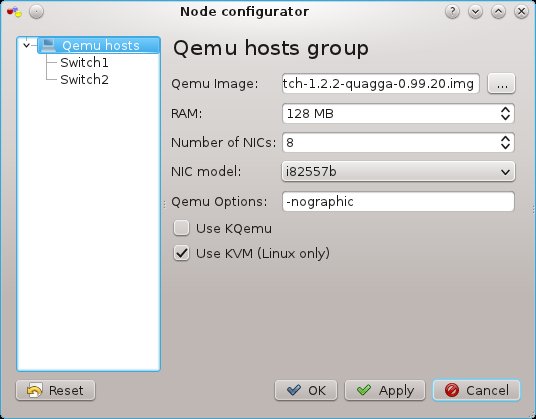

As for the Ethernet card, I suggest using the emulated i82557b card. Hint – check the GNS3 Qemu settings. According to Bill, 640 kB is enough for anyone, but it is better to assign at least 128 MB of RAM to Microcore. Here is a snapshot of the Qemu settings.

Part1 – Basic Microcore, Openvswitch and Quagga Configuration

Before we start to configure Openvswitch, we should be aware of the initial configuration requirements for Openvswitch and Quagga. Frankly, Openvswitch is not Cisco IOS, which you can configure right after the device has booted. First you need to create the database and configuration files, start the services and so on.

My Qemu Microcore image with Openvswitch and Quagga is preconfigured for such things, so feel free to use it.

1. Switch 1 configuration – hostname, trunks, vlan interfaces

tc@box:~$ echo "hostname Switch1" >> /opt/bootlocal.sh

tc@box:~$ sudo hostname Switch1

tc@Switch1:~$ sudo su

root@Switch1:~# ovs-vsctl add-br br0

root@Switch1:~# ovs-vsctl add-port br0 vlan10 tag=10 -- set interface vlan10 type=internal

root@Switch1:~# ovs-vsctl add-port br0 vlan20 tag=20 -- set interface vlan20 type=internal

Note: I usually specify the list of VLANs allowed on a trunk link, like this:

root@Switch1:~# ovs-vsctl add-port br0 eth0 trunks=10,20

root@Switch1:~# ovs-vsctl add-port br0 eth1 trunks=10,20

Unfortunately, if we run these two commands now, we cannot create the bond0 interface later in Part2: a warning message informs us that eth0 and eth1 already exist on bridge br0. Therefore we skip the trunk definition here and configure the trunk later, together with the bonding configuration.
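
If you are not sure whether eth0 or eth1 is already attached to the bridge, you can check before creating the bond. Below is a minimal sketch, assuming the output of ovs-vsctl list-ports br0 (one port name per line) is piped in. The function name ports_in_use is my own invention and not part of Openvswitch.

```shell
# Hypothetical helper: read a port list (one name per line) on stdin and
# print every name that also appears among the arguments.
ports_in_use() {
    awk -v want="$*" '
        BEGIN { n = split(want, w, " "); for (i = 1; i <= n; i++) need[w[i]] = 1 }
        ($1 in need) { print $1 }
    '
}

# Demo with a sample port list. On a real switch you would run:
#   ovs-vsctl list-ports br0 | ports_in_use eth0 eth1
printf 'vlan10\nvlan20\neth0\n' | ports_in_use eth0 eth1
```

Any name it prints would have to be removed from the bridge with ovs-vsctl del-port before it can become a member of a bond.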

Start Quagga vtysh shell.

root@Switch1:~# vtysh

Hello, this is Quagga (version 0.99.20).

Copyright 1996-2005 Kunihiro Ishiguro, et al.

Switch1# conf t

Switch1(config)# interface vlan10

Switch1(config-if)# ip address 192.168.10.1/24

Switch1(config-if)# no shutdown

Switch1(config-if)#

Switch1(config-if)# interface vlan20

Switch1(config-if)# ip address 192.168.20.1/24

Switch1(config-if)# no shutdown

Switch1(config-if)# do wr

Switch1(config-if)# exit

Switch1(config)# exit

Switch1# exit

Save Microcore configuration.

root@Switch1:~# /usr/bin/filetool.sh -b

Ctrl+S

2. Switch 2 configuration – hostname, trunks, vlan interfaces

tc@box:~$ echo "hostname Switch2" >> /opt/bootlocal.sh

tc@box:~$ sudo hostname Switch2

tc@Switch2:~$ sudo su

root@Switch2:~# ovs-vsctl add-br br0

root@Switch2:~# ovs-vsctl add-port br0 vlan10 tag=10 -- set interface vlan10 type=internal

root@Switch2:~# ovs-vsctl add-port br0 vlan20 tag=20 -- set interface vlan20 type=internal

Start Quagga vtysh shell.

root@Switch2:~# vtysh

Hello, this is Quagga (version 0.99.20).

Copyright 1996-2005 Kunihiro Ishiguro, et al.

Switch2# conf t

Switch2(config)# interface vlan10

Switch2(config-if)# ip address 192.168.10.2/24

Switch2(config-if)# no shutdown

Switch2(config-if)#

Switch2(config-if)# interface vlan20

Switch2(config-if)# ip address 192.168.20.2/24

Switch2(config-if)# no shutdown

Switch2(config-if)# do wr

Switch2(config-if)# exit

Switch2(config)# exit

Switch2# exit

root@Switch2:~#

Save Microcore configuration.

root@Switch2:~# /usr/bin/filetool.sh -b

Ctrl+S

Part2 – Bonding

Now it is time to start playing the game called bonding. There are no rules in our game, but it is not a bad idea to read the documentation first. Page ten could answer many future questions.

http://openvswitch.org/ovs-vswitchd.conf.db.5.pdf

As you can see, there are four bond modes, with balance-slb as the default. The modes are described on page 11.

1. Switch1 and Switch2 configuration for static bonding

root@Switch1:~# ovs-vsctl add-bond br0 bond0 eth0 eth1 trunks=10,20

root@Switch2:~# ovs-vsctl add-bond br0 bond0 eth0 eth1 trunks=10,20

2. Static Bonding Testing

Check bond interface bond0 on Switch1.

root@Switch1:~# ovs-appctl bond/show bond0

bond_mode: balance-slb

bond-hash-algorithm: balance-slb

bond-hash-basis: 0

updelay: 0 ms

downdelay: 0 ms

next rebalance: 9476 ms

lacp_negotiated: false

slave eth1: enabled

may_enable: true

slave eth0: enabled

active slave

may_enable: true

Now do the same for bond0 on Switch2.

root@Switch2:~# ovs-appctl bond/show bond0

bond_mode: balance-slb

bond-hash-algorithm: balance-slb

bond-hash-basis: 0

updelay: 0 ms

downdelay: 0 ms

next rebalance: 7420 ms

lacp_negotiated: false

slave eth1: enabled

may_enable: true

slave eth0: enabled

active slave

may_enable: true

Now start pinging IP address 192.168.10.2 from Switch1. The ping should work. While pinging, start tcpdump listening on interface eth1 of Switch2.

root@Switch2:~# tcpdump -i eth1

tcpdump: WARNING: eth1: no IPv4 address assigned

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth1, link-type EN10MB (Ethernet), capture size 68 bytes

11:47:07.214753 IP 192.168.10.2 > 192.168.10.1: ICMP echo reply, id 36360, seq 15, length 64

11:47:08.215483 IP 192.168.10.2 > 192.168.10.1: ICMP echo reply, id 36360, seq 16, length 64

11:47:09.215823 IP 192.168.10.2 > 192.168.10.1: ICMP echo reply, id 36360, seq 17, length 64

11:47:10.216846 IP 192.168.10.2 > 192.168.10.1: ICMP echo reply, id 36360, seq 18, length 64

11:47:11.217868 IP 192.168.10.2 > 192.168.10.1: ICMP echo reply, id 36360, seq 19, length 64

<output truncated>

15 packets captured

15 packets received by filter

0 packets dropped by kernel

Interface Ethernet1 of Switch2 sends ICMP echo replies back to Switch1. As there are no ICMP echo requests listed in the output, the requests coming from Switch1 must be arriving on interface Ethernet0.

My question is, what happens if we shut down Ethernet0 on Switch2? Will the ICMP requests be interrupted and possibly re-forwarded via interface Ethernet1 of Switch1? If yes, how long does it take?

root@Switch2:~# ifconfig eth0 down

Check the bond interface bond0 on Switch2 again. As soon as Switch2 detects the failure of its interface, Openvswitch puts slave eth0 into the disabled state.

root@Switch2:~# ovs-appctl bond/show bond0

bond_mode: balance-slb

bond-hash-algorithm: balance-slb

bond-hash-basis: 0

updelay: 0 ms

downdelay: 0 ms

next rebalance: 7612 ms

lacp_negotiated: false

slave eth1: enabled

active slave

may_enable: true

hash 52: 0 kB load

slave eth0: disabled

may_enable: false

Switch1 cannot detect the failure of the far-end interface Ethernet0. It continues to send ICMP requests to Switch2 via its own Ethernet0 interface. Stop the ping command and check bond interface bond0 on Switch1. As expected, Ethernet0 is still enabled in the bundle.

root@Switch1:~# ovs-appctl bond/show bond0

bond_mode: balance-slb

bond-hash-algorithm: balance-slb

bond-hash-basis: 0

updelay: 0 ms

downdelay: 0 ms

next rebalance: 4007 ms

lacp_negotiated: false

slave eth1: enabled

may_enable: true

slave eth0: enabled

active slave

may_enable: true

hash 133: 0 kB load
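
Comparing the two bond/show outputs by eye gets tedious, so here is a small sketch that reduces the output to one line per slave. It assumes the output format shown in the transcripts above; the function name bond_slave_states is hypothetical.

```shell
# Hypothetical helper: keep only the "slave <name>: <state>" lines of
# ovs-appctl bond/show and print them as "<name> <state>".
bond_slave_states() {
    awk '$1 == "slave" && NF >= 3 { name = $2; sub(/:$/, "", name); print name, $3 }'
}

# Demo with the slave lines Switch2 reported after eth0 was shut down.
# On a real switch you would run:
#   ovs-appctl bond/show bond0 | bond_slave_states
bond_slave_states <<'EOF'
slave eth1: enabled
active slave
may_enable: true
slave eth0: disabled
may_enable: false
EOF
```

Run on both switches, this makes the mismatch obvious: Switch2 reports eth0 as disabled while Switch1 still lists both slaves as enabled.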

We cannot tolerate this behaviour. We need a mechanism that helps to detect a far-end failure. Therefore LACP must be deployed in our configuration.

According to the wiki, LACP works by sending frames (LACPDUs) down all links that have the protocol enabled. It has two main advantages compared to static bonding.

1. Failover when a link fails but the peer does not see the link go down. With static link aggregation, the peer would continue sending traffic down the failed link, and that traffic would be lost.

2. The device can confirm that the configuration at the other end can handle link aggregation. With static link aggregation, a cabling or configuration mistake could go undetected and cause undesirable network behaviour.

Uhm, it looks like exactly what we want. So do not hesitate and let’s go configure bonding with LACP.

3. Switch1 and Switch2 configuration for bonding with LACP

Delete existing bond0 port.

root@Switch1:~# ovs-vsctl del-port br0 bond0

root@Switch2:~# ovs-vsctl del-port br0 bond0

Create a new bond interface.

root@Switch1:~# ovs-vsctl add-bond br0 bond0 eth0 eth1 lacp=active trunks=10,20

root@Switch2:~# ovs-vsctl add-bond br0 bond0 eth0 eth1 lacp=active trunks=10,20

Check bonding on Switch1.

root@Switch1:~# ovs-appctl bond/show bond0

bond_mode: balance-slb

bond-hash-algorithm: balance-slb

bond-hash-basis: 0

updelay: 0 ms

downdelay: 0 ms

next rebalance: 7763 ms

lacp_negotiated: true

slave eth0: disabled

may_enable: false

slave eth1: enabled

active slave

may_enable: true

Notice the state of the Ethernet0 interface. Remember, we shut down Ethernet0 on Switch2. Thanks to LACP, Ethernet0 is now disabled in the bundle on Switch1. It is awesome!

Note: even though interface Ethernet0 of Switch1 is disabled in the bundle, it remains in the up state.

root@Switch1:~# ifconfig eth0

eth0 Link encap:Ethernet HWaddr 00:AA:00:D8:E6:00

inet6 addr: fe80::2aa:ff:fed8:e600/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:112 errors:0 dropped:0 overruns:0 frame:0

TX packets:2030 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:36156 (35.3 KiB) TX bytes:336741 (328.8 KiB)

4. LACP bonding testing

The last point we want to test is how much time an interface in the bundle needs to move to the disabled state when the far end fails. Logically, it should depend on how often LACP messages are exchanged between the peers.

Start tcpdump listening on eth0 of Switch1. Then bring interface eth0 up on Switch2. Right after LACP messages are exchanged via eth0 between Switch1 and Switch2, the Ethernet0 interface is brought to the enabled state in the bundle. See the output below.

root@Switch1:~# tcpdump -i eth0 -v

tcpdump: WARNING: eth0: no IPv4 address assigned

tcpdump: listening on eth0, link-type EN10MB (Ethernet), capture size 68 bytes

<DHCP requests>

14:00:14.555758 IP (tos 0x0, ttl 64, id 0, offset 0, flags [none], proto UDP (17), length 317) 0.0.0.0.bootpc > 255.255.255.255.bootps: BOOTP/DHCP, Request [|bootp]

<IPv6 messages coming from Switch2>

14:00:18.457096 00:aa:00:85:34:00 (oui Unknown) > 33:33:00:00:00:16 (oui Unknown), ethertype IPv6 (0x86dd), length 110:

0x0000: 6000 0000 0038 0001 0000 0000 0000 0000 `....8..........

0x0010: 0000 0000 0000 0000 ff02 0000 0000 0000 ................

0x0020: 0000 0000 0000 0016 3a00 0502 ........:...

14:00:18.957535 00:aa:00:85:34:00 (oui Unknown) > 33:33:ff:85:34:00 (oui Unknown), ethertype IPv6 (0x86dd), length 78:

0x0000: 6000 0000 0018 3aff 0000 0000 0000 0000 `.....:.........

0x0010: 0000 0000 0000 0000 ff02 0000 0000 0000 ................

0x0020: 0000 0001 ff85 3400 8700 1173 ......4....s

<LACP message coming from Switch2. Notice the partner System 00:00:00:00:00:00. It means that Switch2 does not know its partner’s system ID yet>

14:00:19.217584 LACPv1, length: 110

Actor Information TLV (0x01), length: 20

System 00:aa:00:85:34:00 (oui Unknown), System Priority 65534, Key 5, Port 6, Port Priority 65535

State Flags [Activity, Aggregation, Default]

Partner Information TLV (0x02), length: 20

System 00:00:00:00:00:00 (oui Ethernet), System Priority 0, Key 0, Port 0, Port Priority 0

State Flags [none]

Collector Information TLV (0x03), length: 16

packet exceeded snapshot

<LACP message sent to Switch2. Switch1 already knows its partner’s ID 00:aa:00:85:34:00, the MAC address of interface eth0 of Switch2>

14:00:19.217914 LACPv1, length: 110

Actor Information TLV (0x01), length: 20

System 00:aa:00:d8:e6:00 (oui Unknown), System Priority 65534, Key 5, Port 6, Port Priority 65535

State Flags [Activity, Aggregation, Synchronization, Collecting, Distributing]

Partner Information TLV (0x02), length: 20

System 00:aa:00:85:34:00 (oui Unknown), System Priority 65534, Key 5, Port 6, Port Priority 65535

State Flags [Activity, Aggregation, Default]

Collector Information TLV (0x03), length: 16

packet exceeded snapshot

<LACP message sent to Switch1. Now Switch2 knows its partner’s ID 00:aa:00:d8:e6:00, the MAC address of interface eth0 of Switch1>

14:00:19.220189 LACPv1, length: 110

Actor Information TLV (0x01), length: 20

System 00:aa:00:85:34:00 (oui Unknown), System Priority 65534, Key 5, Port 6, Port Priority 65535

State Flags [Activity, Aggregation, Synchronization, Collecting, Distributing]

Partner Information TLV (0x02), length: 20

System 00:aa:00:d8:e6:00 (oui Unknown), System Priority 65534, Key 5, Port 6, Port Priority 65535

State Flags [Activity, Aggregation, Synchronization, Collecting, Distributing]

Collector Information TLV (0x03), length: 16

packet exceeded snapshot

Explanation

00:aa:00:85:34:00 – MAC address of eth0 interface of Switch2

00:aa:00:d8:e6:00 – MAC address of eth0 interface of Switch1
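
The State Flags lines are easier to read once you know they are decoded from a single state byte in the LACPDU. Here is a minimal sketch of that decoding, assuming the standard bit order of the LACP state field (bit 0 is Activity, bit 7 is Expired) and the flag names tcpdump prints. The capture shows only the decoded names, so the byte values 0x45 and 0x3d below are worked out by me from the flag lists. The function name lacp_flags is hypothetical.

```shell
# Hypothetical helper: decode an LACP state byte into flag names,
# lowest bit first, in the style of the tcpdump output.
lacp_flags() {
    state=$(($1))
    out=""
    bit=1
    for name in Activity Timeout Aggregation Synchronization \
                Collecting Distributing Default Expired; do
        if [ $((state & bit)) -ne 0 ]; then
            out="${out:+$out, }$name"
        fi
        bit=$((bit * 2))
    done
    echo "${out:-none}"
}

lacp_flags 0x45   # partner not learned yet
lacp_flags 0x3d   # link fully negotiated
lacp_flags 0      # empty partner information
```

The Default flag disappears and Synchronization, Collecting and Distributing appear once each side has learned its partner, which is the transition visible in the three LACP messages above.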

Nice! We can see the structure of the LACP messages, but we still do not know anything about the time required for the transition. It is time to check it.

Start pinging 192.168.10.2 from Switch1 and, with the help of tcpdump, find the interface where the packets enter Switch2. In my case it is Ethernet1. Shut down this interface and check the output of the ping command. You see, the ping is interrupted, and it took approximately 50 seconds for it to recover. It means that for 50 seconds interface Ethernet1 of Switch1 stayed in the enabled state. That is too long. There must be a way to shorten this time to an acceptable level.

Issue the command below on Switch1. It shows the LACP status and parameters.

root@Switch1:~# ovs-appctl lacp/show bond0

lacp: bond0

status: active negotiated

sys_id: 00:aa:00:d8:e6:00

sys_priority: 65534

aggregation key: 5

lacp_time: slow

slave: eth0: current attached

port_id: 6

port_priority: 65535

actor sys_id: 00:aa:00:d8:e6:00

actor sys_priority: 65534

actor port_id: 6

actor port_priority: 65535

actor key: 5

actor state: activity aggregation synchronized collecting distributing

partner sys_id: 00:aa:00:85:34:00

partner sys_priority: 65534

partner port_id: 6

partner port_priority: 65535

partner key: 5

partner state: activity aggregation synchronized collecting distributing

slave: eth1: current attached

port_id: 5

port_priority: 65535

actor sys_id: 00:aa:00:d8:e6:00

actor sys_priority: 65534

actor port_id: 5

actor port_priority: 65535

actor key: 5

actor state: activity aggregation synchronized collecting distributing

partner sys_id: 00:aa:00:85:34:00

partner sys_priority: 65534

partner port_id: 5

partner port_priority: 65535

partner key: 5

partner state: activity aggregation synchronized collecting distributing

Focus on the lacp_time parameter, which is set to slow. According to the documentation:

The LACP timing used on this Port. Possible values are fast, slow and a positive number of milliseconds. By default slow is used. When configured to be fast LACP heartbeats are requested at a rate of once per second causing connectivity problems to be detected more quickly. In slow mode, heartbeats are requested at a rate of once every 30 seconds.

That is exactly what we need. Changing lacp_time from slow to fast should help us to detect failure of peer’s interface more quickly.
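
The numbers behind this are simple. Here is a sketch, assuming the usual LACP rule that a partner is considered lost once three heartbeat intervals pass without an LACPDU. The factor of three is my assumption about Openvswitch 1.2.2; the documentation excerpt above states only the heartbeat rates. The function name lacp_timeout_s is hypothetical.

```shell
# Hypothetical helper: worst-case time in seconds before a silent partner
# is declared lost, for a given lacp-time setting.
lacp_timeout_s() {
    case "$1" in
        fast) interval=1 ;;    # one heartbeat per second
        slow) interval=30 ;;   # one heartbeat every 30 seconds
        *) echo "unknown lacp-time: $1" >&2; return 1 ;;
    esac
    # Assumption: the partner expires after three missed heartbeats.
    echo $((interval * 3))
}

lacp_timeout_s slow
lacp_timeout_s fast
```

Under that assumption the worst case is 90 seconds in slow mode, which is consistent with the roughly 50 seconds measured above, and about 3 seconds in fast mode.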

5. Switch1 and Switch2 configuration for bonding with LACP and lacp time set to fast

Delete existing bond interface bond0.

root@Switch1:~# ovs-vsctl del-port br0 bond0

root@Switch2:~# ovs-vsctl del-port br0 bond0

Create a new bond interface.

root@Switch1:~# ovs-vsctl add-bond br0 bond0 eth0 eth1 lacp=active other_config:lacp-time=fast trunks=10,20

root@Switch2:~# ovs-vsctl add-bond br0 bond0 eth0 eth1 lacp=active other_config:lacp-time=fast trunks=10,20

Save configuration.

root@Switch1:~# /usr/bin/filetool.sh -b

root@Switch2:~# /usr/bin/filetool.sh -b

Ctrl+S

If you want to check the interval at which LACP messages are sent, start tcpdump on Switch2 while pinging the IP address of the VLAN interface of Switch2 from Switch1. Now LACP messages are sent every second.

root@Switch2:~# tcpdump -i eth0

tcpdump: WARNING: eth0: no IPv4 address assigned

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 68 bytes

21:42:25.525007 LACPv1, length: 110

21:42:26.529846 LACPv1, length: 110

21:42:27.532092 LACPv1, length: 110

21:42:28.534532 LACPv1, length: 110

21:42:29.543080 LACPv1, length: 110

21:42:30.545620 LACPv1, length: 110

21:42:31.547848 LACPv1, length: 110

21:42:32.550717 LACPv1, length: 110

21:42:33.551271 LACPv1, length: 110

21:42:34.553382 LACPv1, length: 110

10 packets captured

10 packets received by filter

0 packets dropped by kernel
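
Instead of eyeballing the timestamps, you can compute the gaps between consecutive LACPDUs. Here is a minimal sketch, assuming tcpdump's default HH:MM:SS.microseconds timestamp in the first field and a capture that does not cross midnight. The function name lacp_intervals is hypothetical.

```shell
# Hypothetical helper: print the time in seconds between consecutive
# LACPv1 lines of tcpdump output read from stdin.
lacp_intervals() {
    awk '
        /LACPv1/ {
            split($1, t, ":")
            now = t[1] * 3600 + t[2] * 60 + t[3]
            if (seen) printf "%.3f\n", now - prev
            prev = now
            seen = 1
        }
    '
}

# Demo with the first three timestamps from the capture above.
# On a real switch, a filter on the Slow Protocols ethertype keeps only LACP:
#   tcpdump -l -i eth0 ether proto 0x8809 | lacp_intervals
lacp_intervals <<'EOF'
21:42:25.525007 LACPv1, length: 110
21:42:26.529846 LACPv1, length: 110
21:42:27.532092 LACPv1, length: 110
EOF
```

For the sample lines the gaps come out just above one second, matching the fast heartbeat rate.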

6. LACP bonding testing with enabled lacp_time=fast option

Start pinging 192.168.10.2 from Switch1 and find the interface receiving the ICMP echo requests on Switch2. In my case it is interface eth0. While pinging, shut down this interface and check the ping statistics.

root@Switch1:~# ping 192.168.10.2

PING 192.168.10.2 (192.168.10.2): 56 data bytes

64 bytes from 192.168.10.2: seq=0 ttl=64 time=1.810 ms

64 bytes from 192.168.10.2: seq=1 ttl=64 time=0.940 ms

64 bytes from 192.168.10.2: seq=2 ttl=64 time=1.610 ms

64 bytes from 192.168.10.2: seq=3 ttl=64 time=0.755 ms

<output truncated>

--- 192.168.10.2 ping statistics ---

27 packets transmitted, 24 packets received, 11% packet loss

round-trip min/avg/max = 0.755/3.378/48.484 ms

You see, only three packets are lost. That is what we can call link redundancy when one of the links fails.

The following commands are helpful during troubleshooting.
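
The loss figure can be checked from the summary line. Here is a small sketch, assuming the ping summary format shown above (other ping implementations word this line differently). The function name ping_loss is hypothetical.

```shell
# Hypothetical helper: read ping output on stdin and report how many
# packets were lost, based on the "packets transmitted" summary line.
ping_loss() {
    awk '/packets transmitted/ && $1 > 0 {
        lost = $1 - $4
        printf "%d lost, %.1f%% loss\n", lost, 100 * lost / $1
    }'
}

# Demo with the summary line from the test above.
echo "27 packets transmitted, 24 packets received, 11% packet loss" | ping_loss
```

With the default of one echo request per second, three lost packets mean roughly three seconds without connectivity, provided they were lost one after another.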

Show MAC address table.

root@Switch1:~# ovs-appctl fdb/show br0

List ports.

root@Switch1:~# ovs-vsctl list port

END.
