
import torch
import torch.nn as nn
import torch.nn.functional as F

def build_act_layer(act_type):
    """Build activation layer."""
    if act_type is None:
        return nn.Identity()
    assert act_type in ['GELU', 'ReLU', 'SiLU']
    if act_type == 'SiLU':
        return nn.SiLU()
    elif act_type == 'ReLU':
        return nn.ReLU()
    else:
        return nn.GELU()

class ElementScale(nn.Module):
    """A learnable element-wise scaler."""

    def __init__(self, embed_dims, init_value=0., requires_grad=True):
        super(ElementScale, self).__init__()
        self.scale = nn.Parameter(
            init_value * torch.ones((1, embed_dims, 1, 1)),
            requires_grad=requires_grad
        )

    def forward(self, x):
        return x * self.scale
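The scaler relies on broadcasting: a parameter shaped `(1, C, 1, 1)` multiplies every spatial position of the matching channel. The snippet below is a minimal sketch of that behaviour using a plain tensor as a stand-in for `ElementScale.scale`; the channel count and input size are illustrative assumptions, not values from the code above.

```python
import torch

# Stand-in for ElementScale.scale: one factor per channel, shaped
# (1, C, 1, 1) so it broadcasts over batch, height, and width.
channels = 4  # illustrative value
scale = 1e-5 * torch.ones((1, channels, 1, 1))

x = torch.randn(2, channels, 8, 8)  # B, C, H, W
y = x * scale                       # broadcast multiply, shape unchanged

print(y.shape)
```

Because the factor is per channel, the number of learnable values equals `embed_dims`, independent of the spatial resolution.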

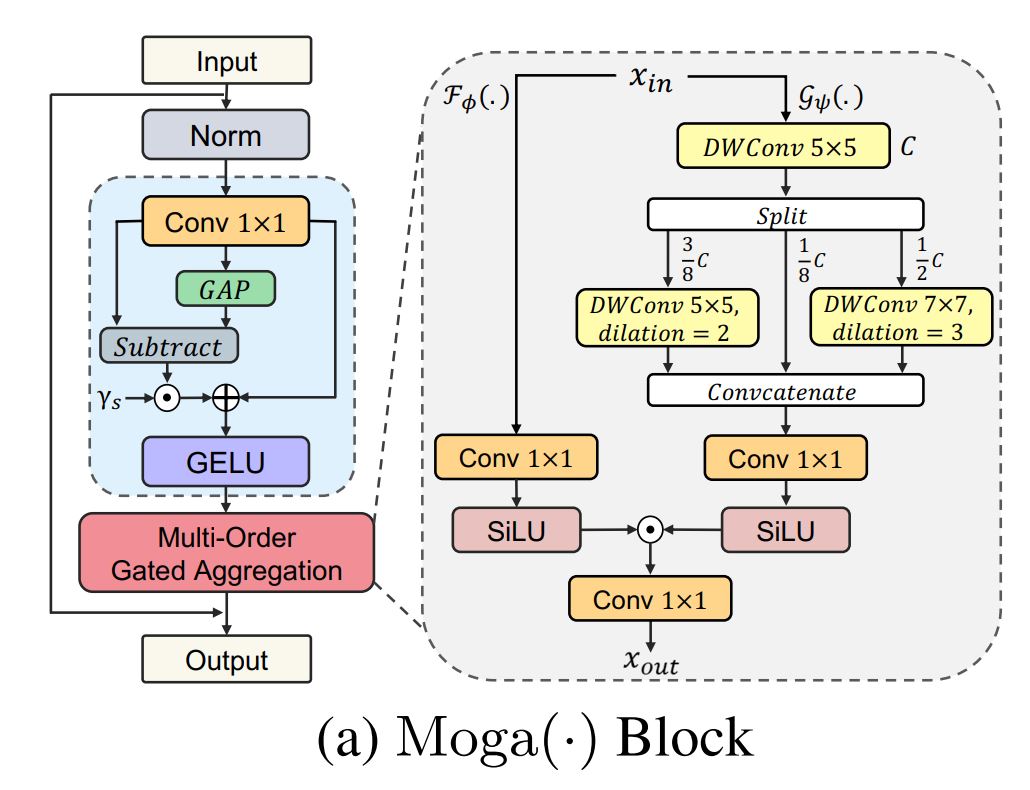

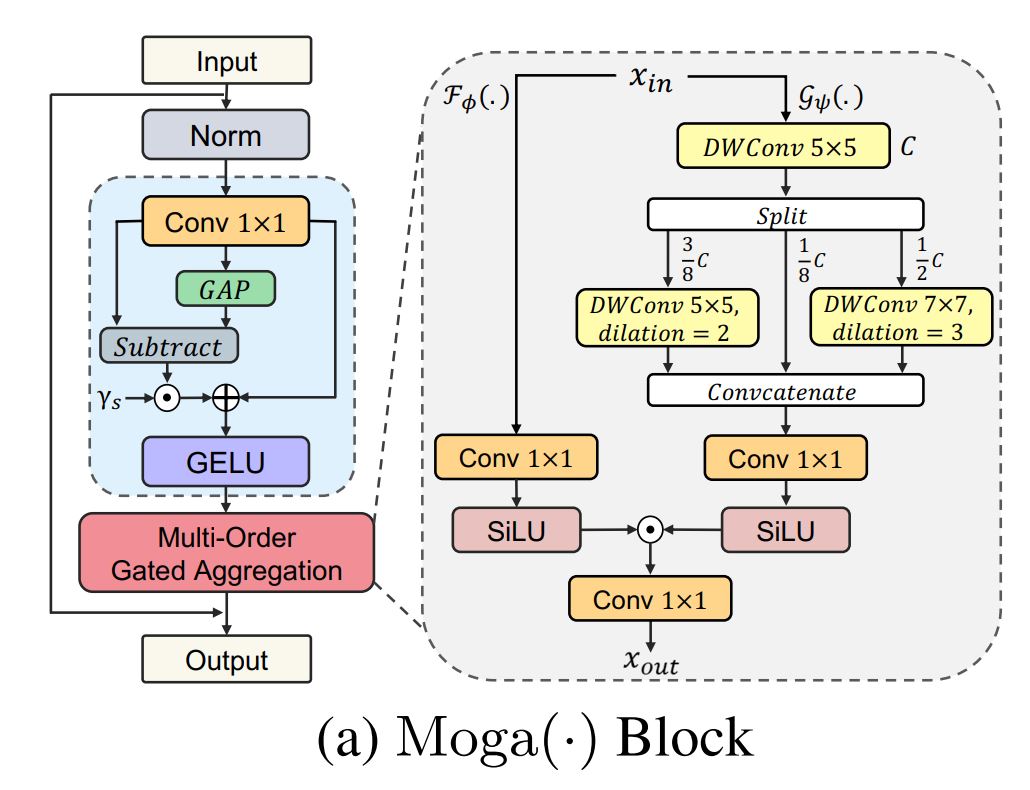

class MultiOrderDWConv(nn.Module):
    """Multi-order Features with Dilated DWConv Kernel.

    Args:
        embed_dims (int): Number of input channels.
        dw_dilation (list): Dilations of three DWConv layers.
        channel_split (list): The relative ratio of the three split
            channel groups.
    """

    def __init__(self,
                 embed_dims,
                 dw_dilation=[1, 2, 3],
                 channel_split=[1, 3, 4],
                 ):
        super(MultiOrderDWConv, self).__init__()

        # Validate the arguments before they are indexed below.
        assert len(dw_dilation) == len(channel_split) == 3
        assert 1 <= min(dw_dilation) and max(dw_dilation) <= 3
        assert embed_dims % sum(channel_split) == 0

        # With the default channel_split=[1, 3, 4], the three groups take
        # 1/8, 3/8 and 4/8 of embed_dims.
        self.split_ratio = [i / sum(channel_split) for i in channel_split]
        self.embed_dims_1 = int(self.split_ratio[1] * embed_dims)
        self.embed_dims_2 = int(self.split_ratio[2] * embed_dims)
        self.embed_dims_0 = embed_dims - self.embed_dims_1 - self.embed_dims_2
        self.embed_dims = embed_dims

        # basic DW conv: 5x5 depth-wise conv over all channels
        self.DW_conv0 = nn.Conv2d(
            in_channels=self.embed_dims,
            out_channels=self.embed_dims,
            kernel_size=5,
            padding=(1 + 4 * dw_dilation[0]) // 2,
            groups=self.embed_dims,
            stride=1, dilation=dw_dilation[0],
        )
        # DW conv 1: 5x5 depth-wise conv on the middle channel group
        self.DW_conv1 = nn.Conv2d(
            in_channels=self.embed_dims_1,
            out_channels=self.embed_dims_1,
            kernel_size=5,
            padding=(1 + 4 * dw_dilation[1]) // 2,
            groups=self.embed_dims_1,
            stride=1, dilation=dw_dilation[1],
        )
        # DW conv 2: 7x7 depth-wise conv on the last channel group
        self.DW_conv2 = nn.Conv2d(
            in_channels=self.embed_dims_2,
            out_channels=self.embed_dims_2,
            kernel_size=7,
            padding=(1 + 6 * dw_dilation[2]) // 2,
            groups=self.embed_dims_2,
            stride=1, dilation=dw_dilation[2],
        )
        # point-wise (1x1) convolution that mixes channels
        self.PW_conv = nn.Conv2d(
            in_channels=embed_dims,
            out_channels=embed_dims,
            kernel_size=1)

    def forward(self, x):
        # Depth-wise conv over all channels.
        x_0 = self.DW_conv0(x)
        # The middle and last channel groups each get one more dilated conv.
        x_1 = self.DW_conv1(
            x_0[:, self.embed_dims_0: self.embed_dims_0+self.embed_dims_1, ...])
        x_2 = self.DW_conv2(
            x_0[:, self.embed_dims-self.embed_dims_2:, ...])
        # The first group is kept as-is; concatenate the three groups.
        x = torch.cat([
            x_0[:, :self.embed_dims_0, ...], x_1, x_2], dim=1)
        x = self.PW_conv(x)
        return x
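The channel split and the padding expressions in `MultiOrderDWConv` can be checked by hand. The sketch below reproduces that arithmetic in plain Python. The helper names `split_channels`, `same_padding` and `conv_out_size` are hypothetical and introduced only for illustration; `conv_out_size` follows the output-size formula given in the PyTorch `nn.Conv2d` documentation.

```python
def split_channels(embed_dims, channel_split):
    """Channel counts of the three groups, computed as in MultiOrderDWConv."""
    total = sum(channel_split)
    dims_1 = int(channel_split[1] / total * embed_dims)
    dims_2 = int(channel_split[2] / total * embed_dims)
    dims_0 = embed_dims - dims_1 - dims_2
    return dims_0, dims_1, dims_2


def same_padding(kernel_size, dilation):
    """Generalises (1 + 4 * d) // 2 for k=5 and (1 + 6 * d) // 2 for k=7."""
    return (1 + (kernel_size - 1) * dilation) // 2


def conv_out_size(size, kernel_size, padding, dilation, stride=1):
    """Output length along one spatial axis of a 2D convolution."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


print(split_channels(64, [1, 3, 4]))  # (8, 24, 32)

# (kernel_size, dilation) pairs used by DW_conv0, DW_conv1, DW_conv2
for k, d in [(5, 1), (5, 2), (7, 3)]:
    p = same_padding(k, d)
    print(k, d, p, conv_out_size(32, k, p, d))
# 5 1 2 32
# 5 2 4 32
# 7 3 9 32
```

For odd kernel sizes the padding equals half the dilated kernel extent, so each depth-wise convolution preserves height and width. This is what allows the three groups to be concatenated along the channel axis.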

class MultiOrderGatedAggregation(nn.Module):
    """Spatial Block with Multi-order Gated Aggregation.

    Args:
        embed_dims (int): Number of input channels.
        attn_dw_dilation (list): Dilations of three DWConv layers.
        attn_channel_split (list): The relative ratio of the split channels.
        attn_act_type (str): The activation type for Spatial Block.
            Defaults to 'SiLU'.
        attn_force_fp32 (bool): Whether to run the gating in fp32.
            Defaults to False.
    """

    def __init__(self,
                 embed_dims,
                 attn_dw_dilation=[1, 2, 3],
                 attn_channel_split=[1, 3, 4],
                 attn_act_type='SiLU',
                 attn_force_fp32=False,
                 ):
        super(MultiOrderGatedAggregation, self).__init__()

        self.embed_dims = embed_dims
        self.attn_force_fp32 = attn_force_fp32
        self.proj_1 = nn.Conv2d(
            in_channels=embed_dims, out_channels=embed_dims, kernel_size=1)
        self.gate = nn.Conv2d(
            in_channels=embed_dims, out_channels=embed_dims, kernel_size=1)
        self.value = MultiOrderDWConv(
            embed_dims=embed_dims,
            dw_dilation=attn_dw_dilation,
            channel_split=attn_channel_split,
        )
        self.proj_2 = nn.Conv2d(
            in_channels=embed_dims, out_channels=embed_dims, kernel_size=1)

        # activation for gating and value
        self.act_value = build_act_layer(attn_act_type)
        self.act_gate = build_act_layer(attn_act_type)

        # decompose
        self.sigma = ElementScale(
            embed_dims, init_value=1e-5, requires_grad=True)

    def feat_decompose(self, x):
        # Point-wise conv; keeps height, width and channel count unchanged.
        x = self.proj_1(x)
        # x_d: [B, C, H, W] -> [B, C, 1, 1], the spatial mean of each channel
        x_d = F.adaptive_avg_pool2d(x, output_size=1)
        # Subtract the per-channel mean, scale it, and add it back to x.
        x = x + self.sigma(x - x_d)
        x = self.act_value(x)
        return x

    def forward_gating(self, g, v):
        # Run the gating in fp32 with autocast disabled.
        with torch.autocast(device_type='cuda', enabled=False):
            g = g.to(torch.float32)
            v = v.to(torch.float32)
            return self.proj_2(self.act_gate(g) * self.act_gate(v))

    def forward(self, x):
        shortcut = x.clone()
        # proj 1x1
        x = self.feat_decompose(x)
        # gating and value branch
        g = self.gate(x)   # left branch
        v = self.value(x)  # right branch
        # aggregation
        if not self.attn_force_fp32:  # default path
            x = self.proj_2(self.act_gate(g) * self.act_gate(v))
        else:
            # NOTE: act_gate is applied here and again inside forward_gating,
            # so this path is not numerically identical to the default one.
            x = self.forward_gating(self.act_gate(g), self.act_gate(v))
        x = x + shortcut
        return x
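The decomposition step in `feat_decompose` adds a scaled, mean-removed copy of the features back onto the features themselves. The following is a minimal functional sketch of that one step. It assumes a plain tensor `sigma` in place of the learnable `ElementScale` and omits the 1x1 projection and the activation; the function name `decompose` and the tensor sizes are illustrative.

```python
import torch
import torch.nn.functional as F


def decompose(x, sigma):
    """Return x + sigma * (x - spatial_mean(x)) for a [B, C, H, W] tensor."""
    x_d = F.adaptive_avg_pool2d(x, output_size=1)  # [B, C, 1, 1]
    return x + sigma * (x - x_d)


x = torch.randn(2, 4, 8, 8)
sigma = torch.full((1, 4, 1, 1), 1e-5)  # same init value as self.sigma

# The mean-removed term has (numerically) zero spatial mean per channel ...
residual = x - F.adaptive_avg_pool2d(x, output_size=1)
print(residual.mean(dim=(2, 3)).abs().max())

# ... so the step leaves each channel's spatial mean unchanged.
out = decompose(x, sigma)
print(torch.allclose(out.mean(dim=(2, 3)), x.mean(dim=(2, 3)), atol=1e-5))
```

With `sigma` set to zero the step reduces to the identity, so the small initial value of `1e-5` means the block starts close to a plain 1x1 projection followed by the activation.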

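if __name__ == '__main__':
    # Use the GPU when one is available; otherwise fall back to the CPU.
    device = 'cuda' if torch.cuda.is_available() else 'cpu'
    x = torch.randn(1, 64, 32, 32, device=device)  # input: B, C, H, W
    block = MultiOrderGatedAggregation(embed_dims=64).to(device)
    output = block(x)
    print(x.size())
    print(output.size())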