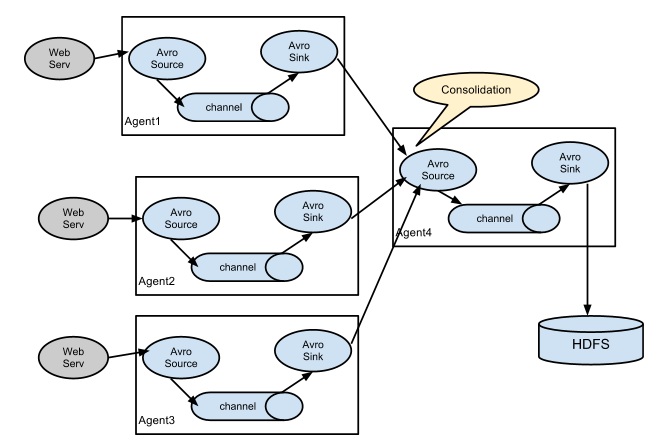

Multiple slave nodes collect logs; the master node uploads them to HDFS

Master:

----------------------

#MasterAgent

MasterAgent.channels = c1

MasterAgent.sources = s1

MasterAgent.sinks = k1

#MasterAgent Avro Source

MasterAgent.sources.s1.type = avro

MasterAgent.sources.s1.bind = 192.168.192.128

MasterAgent.sources.s1.port = 4444

##MasterAgent FileChannel

#MasterAgent.channels.c1.type = file

#MasterAgent.channels.c1.checkpointDir = /home/hadoop/data/spool/checkpoint

#MasterAgent.channels.c1.dataDirs = /home/spool/data

#MasterAgent.channels.c1.capacity = 200000000

#MasterAgent.channels.c1.keep-alive = 30

#MasterAgent.channels.c1.write-timeout = 30

#MasterAgent.channels.c1.checkpoint-timeout=600

#MasterAgent Memory Channel

MasterAgent.channels.c1.type = memory

MasterAgent.channels.c1.capacity = 1000

MasterAgent.channels.c1.transactionCapacity = 100

#MasterAgent.channels.c1.byteCapacityBufferPercentage = 20

#MasterAgent.channels.c1.byteCapacity = 800

# Describe the sink

MasterAgent.sinks.k1.type = hdfs

MasterAgent.sinks.k1.hdfs.path = hdfs://qm1711/flume_data

MasterAgent.sinks.k1.hdfs.filePrefix = %Y_%m_%d

MasterAgent.sinks.k1.hdfs.fileSuffix = .log

MasterAgent.sinks.k1.hdfs.round = true

MasterAgent.sinks.k1.hdfs.roundValue = 1

MasterAgent.sinks.k1.hdfs.roundUnit = minute

MasterAgent.sinks.k1.hdfs.useLocalTimeStamp = true

MasterAgent.sinks.k1.hdfs.fileType = DataStream

MasterAgent.sinks.k1.hdfs.writeFormat = Text

MasterAgent.sinks.k1.hdfs.rollInterval = 0

MasterAgent.sinks.k1.hdfs.rollSize = 10240

MasterAgent.sinks.k1.hdfs.rollCount = 0

MasterAgent.sinks.k1.hdfs.idleTimeout = 60

MasterAgent.sinks.k1.hdfs.callTimeout = 60000

MasterAgent.sources.s1.channels = c1

MasterAgent.sinks.k1.channel = c1
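
Note on the rounding settings: hdfs.round, hdfs.roundValue and hdfs.roundUnit only round the timestamp used by time escape sequences. In the sink above the only escapes are %Y_%m_%d in hdfs.filePrefix, so rounding down to one minute does not change any file name. A minimal sketch of a variant where rounding becomes visible, assuming one directory per 10-minute window is wanted (the directory layout and the value 10 are illustrative assumptions, not part of the original setup):

```properties
# Hypothetical variant: one HDFS directory per 10-minute window.
# The %Y-%m-%d/%H%M layout and roundValue = 10 are assumptions for illustration.
MasterAgent.sinks.k1.hdfs.path = hdfs://qm1711/flume_data/%Y-%m-%d/%H%M
MasterAgent.sinks.k1.hdfs.round = true
MasterAgent.sinks.k1.hdfs.roundValue = 10
MasterAgent.sinks.k1.hdfs.roundUnit = minute
# Time escapes need a timestamp; this takes it from the master's clock
# instead of requiring a timestamp header on each event.
MasterAgent.sinks.k1.hdfs.useLocalTimeStamp = true
```

With these values, an event handled at 11:54 should land in a directory ending in 1150.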

-------------

Slave:

#SlavesAgent

SlavesAgent.channels = c1

SlavesAgent.sources = s1

SlavesAgent.sinks = k1

#SlavesAgent Spooling Directory Source

SlavesAgent.sources.s1.type = spooldir

SlavesAgent.sources.s1.spoolDir = /home/hadoop/logs/

SlavesAgent.sources.s1.fileHeader = true

SlavesAgent.sources.s1.deletePolicy = immediate

# batchSize must not exceed the channel's transactionCapacity (100 below)

SlavesAgent.sources.s1.batchSize = 100

SlavesAgent.sources.s1.deserializer.maxLineLength = 1048576

#SlavesAgent Memory Channel

SlavesAgent.channels.c1.type = memory

SlavesAgent.channels.c1.capacity = 1000

SlavesAgent.channels.c1.transactionCapacity = 100

#SlavesAgent.channels.c1.byteCapacityBufferPercentage = 20

#SlavesAgent.channels.c1.byteCapacity = 800

# SlavesAgent Sinks

SlavesAgent.sinks.k1.type = avro

# connect to the MasterAgent Avro source

SlavesAgent.sinks.k1.hostname = 192.168.192.128

SlavesAgent.sinks.k1.port = 4444

SlavesAgent.sources.s1.channels = c1

SlavesAgent.sinks.k1.channel = c1
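
Note on durability: the slave combines deletePolicy = immediate with a memory channel, so events still buffered in memory when the agent stops are lost, and the source file has already been deleted. A sketch of a more durable alternative using a file channel, mirroring the commented-out FileChannel block in the master configuration. The two directory paths are hypothetical placeholders and should point at local disk writable by the Flume user:

```properties
# Hypothetical durable variant of the slave channel; both paths are placeholders.
SlavesAgent.channels.c1.type = file
SlavesAgent.channels.c1.checkpointDir = /home/hadoop/flume/slave/checkpoint
SlavesAgent.channels.c1.dataDirs = /home/hadoop/flume/slave/data
# Keep transactionCapacity at least as large as the source batchSize.
SlavesAgent.channels.c1.transactionCapacity = 1000
SlavesAgent.channels.c1.capacity = 1000000
```

These lines would replace the three memory-channel settings for c1. The trade-off is lower throughput in exchange for events surviving an agent restart.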

Commands:

Start the agents:

flume-ng agent -c . -f /home/hadoop/flume-1.7.0/conf/myconf/MasterNode.conf -n MasterAgent -Dflume.root.logger=INFO,console

flume-ng agent -c . -f /home/hadoop/flume-1.7.0/conf/myconf/SlavesNode.conf -n SlavesAgent -Dflume.root.logger=INFO,console

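Send a test file to the master's Avro source (host and port must match the bind and port of MasterAgent.sources.s1):

flume-ng avro-client -c . -H 192.168.192.128 -p 4444 -F /data.txt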