Andrew Ng's Deep Learning Quizzes - Course 5: Sequence Models - Week 2: Natural Language Processing and Word Embeddings

Week 2 Quiz: Natural Language Processing and Word Embeddings

1. Suppose you learn a word embedding for a vocabulary of 10000 words. Then the embedding vectors should be 10000-dimensional, so as to capture the full range of variation and meaning in those words.

【 】 True 【 】 False

Answer

False

Note: The dimension of word vectors is usually much smaller than the size of the vocabulary. The most common sizes for word vectors range between 50 and 400.
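Here is a short Python sketch that puts the note in numbers. It counts the entries of the embedding matrix for this 10000-word vocabulary. The 300-dimensional setting is an assumed value picked from the 50 to 400 range, and `embedding_params` is a hypothetical helper.

```python
VOCAB_SIZE = 10_000
TYPICAL_DIM = 300  # assumed value inside the usual 50-400 range


def embedding_params(vocab_size: int, dim: int) -> int:
    """Entries in an embedding matrix holding one dim-sized vector per word."""
    return vocab_size * dim


dense = embedding_params(VOCAB_SIZE, TYPICAL_DIM)  # 10000 x 300
full = embedding_params(VOCAB_SIZE, VOCAB_SIZE)    # 10000 x 10000
print(f"{TYPICAL_DIM}-d embeddings:   {dense:,} parameters")
print(f"{VOCAB_SIZE}-d embeddings: {full:,} parameters ({full / dense:.1f}x more)")
```

Under this assumption, a 10000-dimensional embedding needs roughly 33 times as many parameters as a 300-dimensional one. All of those parameters would have to be learned from the same corpus.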

2. What is t-SNE?

【 】 A linear transformation that allows us to solve analogies on word vectors

【 】 A non-linear dimensionality reduction technique

【 】 A supervised learning algorithm for learning word embeddings

【 】 An open-source sequence modeling library

Answer

【★】 A non-linear dimensionality reduction technique

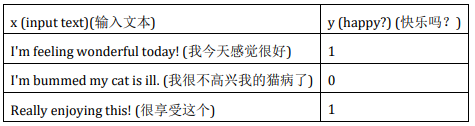

3. Suppose you download a pre-trained word embedding which has been trained on a huge corpus of text. You then use this word embedding to train an RNN for a language task of recognizing if someone is happy from a short snippet of text, using a small training set. Then even if the word "ecstatic" does not appear in your small training set, your RNN might reasonably be expected to recognize "I'm ecstatic" as deserving a label \(y = 1\).

【 】 True

【 】 False

Answer

True

Note: The pre-trained embedding has most likely learned a vector for "ecstatic" that lies close to the vectors of words with similar meaning, such as "happy", which can appear in the small training set. So even if "ecstatic" itself never appears there, the RNN can reasonably be expected to label "I'm ecstatic" with \(y = 1\).
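The intuition can be sketched with cosine similarity, the measure the course uses to compare word vectors. The four-dimensional vectors below are invented for illustration; a real pre-trained embedding has hundreds of dimensions. `EMBEDDINGS` and `cosine_similarity` are hypothetical names.

```python
import math

# Hypothetical 4-d "pre-trained" vectors, made up for this illustration only.
EMBEDDINGS = {
    "happy":    [0.90, 0.80, 0.10, 0.00],
    "ecstatic": [0.95, 0.85, 0.05, 0.10],
    "sad":      [-0.85, -0.70, 0.10, 0.05],
    "table":    [0.00, 0.05, 0.90, 0.80],
}


def cosine_similarity(u, v):
    """cos(u, v) = u . v / (||u|| * ||v||); 1 means the vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


for word in ("happy", "sad", "table"):
    sim = cosine_similarity(EMBEDDINGS["ecstatic"], EMBEDDINGS[word])
    print(f"sim(ecstatic, {word}) = {sim:.3f}")
```

With these made-up vectors, "ecstatic" scores close to 1 against "happy" and negative against "sad". A classifier that learned from "happy" can therefore plausibly transfer to "ecstatic".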

4. Which of these equations do you think should hold for a good word embedding? (Check all that apply)

【 】 \(e_{boy} - e_{girl} \approx e_{brother} - e_{sister}\) 【 】 \(e_{boy} - e_{girl} \approx e_{sister} - e_{brother}\)

【 】 \(e_{boy} - e_{brother} \approx e_{girl} - e_{sister}\) 【 】 \(e_{boy} - e_{brother} \approx e_{sister} - e_{girl}\)

Answer

【★】 \(e_{boy} - e_{girl} \approx e_{brother} - e_{sister}\)

【★】 \(e_{boy} - e_{brother} \approx e_{girl} - e_{sister}\)
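The four options can be checked on toy vectors. This sketch assumes hypothetical 3-dimensional embeddings in which axis 0 roughly encodes gender and axis 1 encodes "sibling". `diff_cos` and `analogy` are illustrative helpers. `analogy` follows the course's approach of picking the word \(w\) that maximizes \(\mathrm{sim}(e_w, e_c - e_a + e_b)\).

```python
import math

# Hypothetical 3-d embeddings: axis 0 ~ gender, axis 1 ~ "sibling", axis 2 ~ other.
E = {
    "boy":     [1.0, 0.0, 0.1],
    "girl":    [-1.0, 0.0, 0.1],
    "brother": [1.0, 1.0, 0.2],
    "sister":  [-1.0, 1.0, 0.2],
    "apple":   [0.0, -0.5, 1.0],
}


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def diff_cos(a, b, c, d):
    """Cosine between (e_a - e_b) and (e_c - e_d); near 1 means e_a - e_b is approx. e_c - e_d."""
    return cosine(sub(E[a], E[b]), sub(E[c], E[d]))


def analogy(a, b, c):
    """Solve a : b :: c : ? by maximizing cos(e_w, e_c - e_a + e_b) over the remaining words."""
    target = [ec - ea + eb for ea, eb, ec in zip(E[a], E[b], E[c])]
    candidates = [w for w in E if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(E[w], target))


print(round(diff_cos("boy", "girl", "brother", "sister"), 6))  # 1.0
print(round(diff_cos("boy", "girl", "sister", "brother"), 6))  # -1.0
print(round(diff_cos("boy", "brother", "girl", "sister"), 6))  # 1.0
print(round(diff_cos("boy", "brother", "sister", "girl"), 6))  # -1.0
print(analogy("boy", "girl", "brother"))                       # sister
```

A cosine of 1 means the two difference vectors point the same way. A good embedding should produce that for the two correct options. The flipped options come out at -1.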

5. Let \(E\) be an embedding matrix, and let \(o_{1234}\) be a one-hot vector corresponding to word 1234. Then, to get the embedding of word 1234, why don't we call \(E * o_{1234}\) in Python?

【 】 It is computationally wasteful

【 】 The correct formula is \(E^T * o_{1234}\)

【 】 This doesn't handle unknown words

【 】 None of the above: calling the Python snippet as described above is fine

Answer

【★】 It is computationally wasteful

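Note: Yes. Multiplying the embedding matrix by a one-hot vector is extremely inefficient. Almost every entry of \(o_{1234}\) is zero, so nearly all of the multiplications are wasted just to select the embedding of one word. In practice, a specialized function that looks up the embedding directly is used instead.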
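The cost difference shows up clearly in a small sketch. It assumes the course's convention that \(E\) has shape (embedding dimension, vocabulary size). It uses tiny hypothetical sizes so it runs instantly, and compares a full matrix-vector product with a direct column lookup. `matvec` and `lookup` are illustrative helpers.

```python
import random

EMBED_DIM, VOCAB_SIZE = 4, 10  # hypothetical; the course example is 300 x 10000
rng = random.Random(0)
# E has one column per vocabulary word: shape (EMBED_DIM, VOCAB_SIZE).
E = [[rng.uniform(-1, 1) for _ in range(VOCAB_SIZE)] for _ in range(EMBED_DIM)]


def one_hot(index, size):
    return [1.0 if i == index else 0.0 for i in range(size)]


def matvec(matrix, vec):
    """Full product E @ o: EMBED_DIM * VOCAB_SIZE multiply-adds, almost all by zero."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def lookup(matrix, index):
    """Read column `index` directly: EMBED_DIM reads and no arithmetic."""
    return [row[index] for row in matrix]


word = 7  # stands in for word 1234 in this tiny vocabulary
print(matvec(E, one_hot(word, VOCAB_SIZE)) == lookup(E, word))  # True
```

Both functions return the same vector. However, `matvec` performs `EMBED_DIM * VOCAB_SIZE` multiply-adds, which is 3,000,000 for an assumed 300 x 10000 matrix. `lookup` only reads `EMBED_DIM` values.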

6. When learning word embeddings, we create an artificial task of estimating \(P(\text{target} \mid \text{context})\). It is okay if we do poorly on this artificial prediction task; the more important by-product of this task is that we learn a useful set of word embeddings.

【 】 True 【 】 False

Answer

True

7. In the word2vec algorithm, you estimate \(P(t \mid c)\), where \(t\) is the target word and \(c\) is a context word. How are \(t\) and \(c\) chosen from the training set? Pick the best answer.

【 】 \(c\) and \(t\) are chosen to be nearby words.

【 】 \(c\) is a sequence of several words immediately before \(t\).

【 】 \(c\) is the sequence of all the words in the sentence before \(t\).

【 】 \(c\) is the one word that comes immediately before \(t\).

Answer

【★】 \(c\) and \(t\) are chosen to be nearby words.
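Below is a minimal sketch of how such (context, target) pairs can be sampled, in the spirit of the skip-gram model from the lectures. It picks a context word at random, then picks a target within a fixed window around it. The window of ±2 words, the helper name `sample_context_target_pairs`, and the seed are assumptions. The example sentence is the one used in the course.

```python
import random


def sample_context_target_pairs(tokens, window=2, num_pairs=5, seed=0):
    """Pick a random context word c, then a target t within +/- window positions of it."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(num_pairs):
        c_pos = rng.randrange(len(tokens))
        # All valid offsets inside the window, excluding the context word itself.
        offsets = [d for d in range(-window, window + 1)
                   if d != 0 and 0 <= c_pos + d < len(tokens)]
        t_pos = c_pos + rng.choice(offsets)
        pairs.append((tokens[c_pos], tokens[t_pos]))
    return pairs


sentence = "i want a glass of orange juice to go along with my cereal".split()
for c, t in sample_context_target_pairs(sentence):
    print(f"context={c!r:10} target={t!r}")
```

Every sampled target lies within two positions of its context word, which is what "nearby words" means here. Neither word has to come strictly before the other.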

8. Suppose you have a 10000-word vocabulary, and are learning 500-dimensional word embeddings. The word2vec model uses the following softmax function: \(P(t \mid c) = \frac{e^{\theta_t^T e_c}}{\sum_{t'=1}^{10000} e^{\theta_{t'}^T e_c}}\). Which of these statements are correct? Check all that apply.

【 】 \(\theta_t\) and \(e_c\) are both 500-dimensional vectors.

【 】 \(\theta_t\) and \(e_c\) are both 10000-dimensional vectors.

【 】 \(\theta_t\) and \(e_c\) are both trained with an optimization algorithm such as Adam or gradient descent.

【 】 After training, we should expect \(\theta_t\) to be very close to \(e_c\) when \(t\) and \(c\) are the same word.

Answer

【★】 \(\theta_t\) and \(e_c\) are both 500-dimensional vectors.

【★】 \(\theta_t\) and \(e_c\) are both trained with an optimization algorithm such as Adam or gradient descent.
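To make the shapes concrete, here is a sketch of the softmax \(P(t \mid c) = e^{\theta_t^T e_c} / \sum_{t'} e^{\theta_{t'}^T e_c}\). It uses tiny hypothetical sizes standing in for 10000 and 500. `theta_t` and `e_c` must have the same length for the dot product to be defined, and both are ordinary learned parameters. Storing one embedding per row of `E` is a layout choice made only for this sketch.

```python
import math
import random

VOCAB_SIZE, EMBED_DIM = 10, 4  # hypothetical stand-ins for 10000 and 500
rng = random.Random(1)
# One theta_t per possible target word and one e_c per context word, both EMBED_DIM long.
theta = [[rng.gauss(0, 0.1) for _ in range(EMBED_DIM)] for _ in range(VOCAB_SIZE)]
E = [[rng.gauss(0, 0.1) for _ in range(EMBED_DIM)] for _ in range(VOCAB_SIZE)]


def p_target_given_context(c):
    """Return [P(t | c) for t in vocab] = exp(theta_t . e_c) / sum_t' exp(theta_t' . e_c)."""
    e_c = E[c]
    logits = [sum(a * b for a, b in zip(theta_t, e_c)) for theta_t in theta]
    shift = max(logits)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)  # the denominator sums over the whole vocabulary
    return [x / total for x in exps]


probs = p_target_given_context(c=3)
print(len(probs), round(sum(probs), 6))
```

The denominator sums over the whole vocabulary, which is why this full softmax becomes expensive for a real 10000-word vocabulary.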

9. Suppose you have a 10000-word vocabulary, and are learning 500-dimensional word embeddings. The GloVe model minimizes this objective: \(\min \sum_{i=1}^{10000} \sum_{j=1}^{10000} f(X_{ij}) \left(\theta_i^T e_j + b_i + b'_j - \log X_{ij}\right)^2\). Which of these statements are correct? Check all that apply.

【 】 \(\theta_i\) and \(e_j\) should be initialized to 0 at the beginning of training.

【 】 \(\theta_i\) and \(e_j\) should be initialized randomly at the beginning of training.

【 】 \(X_{ij}\) is the number of times word \(i\) appears in the context of word \(j\).

【 】 The weighting function \(f(\cdot)\) must satisfy \(f(0) = 0\).

Answer

【★】 \(\theta_i\) and \(e_j\) should be initialized randomly at the beginning of training.

【★】 \(X_{ij}\) is the number of times word \(i\) appears in the context of word \(j\).

【★】 The weighting function \(f(\cdot)\) must satisfy \(f(0) = 0\). Note: Because \(f(X_{ij}) = 0\) whenever \(X_{ij} = 0\), pairs that never co-occur drop out of the sum, and the undefined term \(\log 0\) is never evaluated. Beyond that, the weighting function keeps extremely common word pairs from dominating training while still giving rare pairs a meaningful weight.
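The role of \(f(0) = 0\) is easiest to see when the objective is written out. The sketch below assumes the weighting function proposed in the original GloVe paper: \(f(x) = (x/x_{max})^{\alpha}\) for \(x < x_{max}\) and 1 otherwise, with \(x_{max} = 100\) and \(\alpha = 0.75\). The co-occurrence counts and the helper names `weight` and `glove_objective` are hypothetical.

```python
import math
import random


def weight(x, x_max=100.0, alpha=0.75):
    """Assumed GloVe-paper weighting: f(0) = 0, rises as (x/x_max)^alpha, capped at 1."""
    return (x / x_max) ** alpha if x < x_max else 1.0


def glove_objective(X, theta, e, b, b_prime):
    """Sum over i, j of f(X_ij) * (theta_i . e_j + b_i + b'_j - log X_ij)^2."""
    total = 0.0
    for i, row in enumerate(X):
        for j, x_ij in enumerate(row):
            if x_ij == 0:
                continue  # f(0) = 0: the pair drops out, so log(0) is never evaluated
            pred = sum(t * v for t, v in zip(theta[i], e[j])) + b[i] + b_prime[j]
            total += weight(x_ij) * (pred - math.log(x_ij)) ** 2
    return total


# Hypothetical co-occurrence counts for a 3-word vocabulary.
X = [[0, 3, 1],
     [3, 0, 120],
     [1, 120, 0]]
DIM = 2
rng = random.Random(0)
theta = [[rng.uniform(-0.5, 0.5) for _ in range(DIM)] for _ in X]  # random init, not zeros
e = [[rng.uniform(-0.5, 0.5) for _ in range(DIM)] for _ in X]
b, b_prime = [0.0] * len(X), [0.0] * len(X)
print(round(glove_objective(X, theta, e, b, b_prime), 4))
```

Skipping zero counts is exactly what \(f(0) = 0\) provides. Without it, every pair that never co-occurs would contribute an undefined \(\log 0\) term.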

10. You have trained word embeddings using a text dataset of \(m_1\) words. You are considering using these word embeddings for a language task, for which you have a separate labeled dataset of \(m_2\) words. Keeping in mind that using word embeddings is a form of transfer learning, under which of these circumstances would you expect the word embeddings to be helpful?

【 】 \(m_1 \gg m_2\) 【 】 \(m_1 \ll m_2\)

Answer

【★】 \(m_1 \gg m_2\)

浙公网安备 33010602011771号
