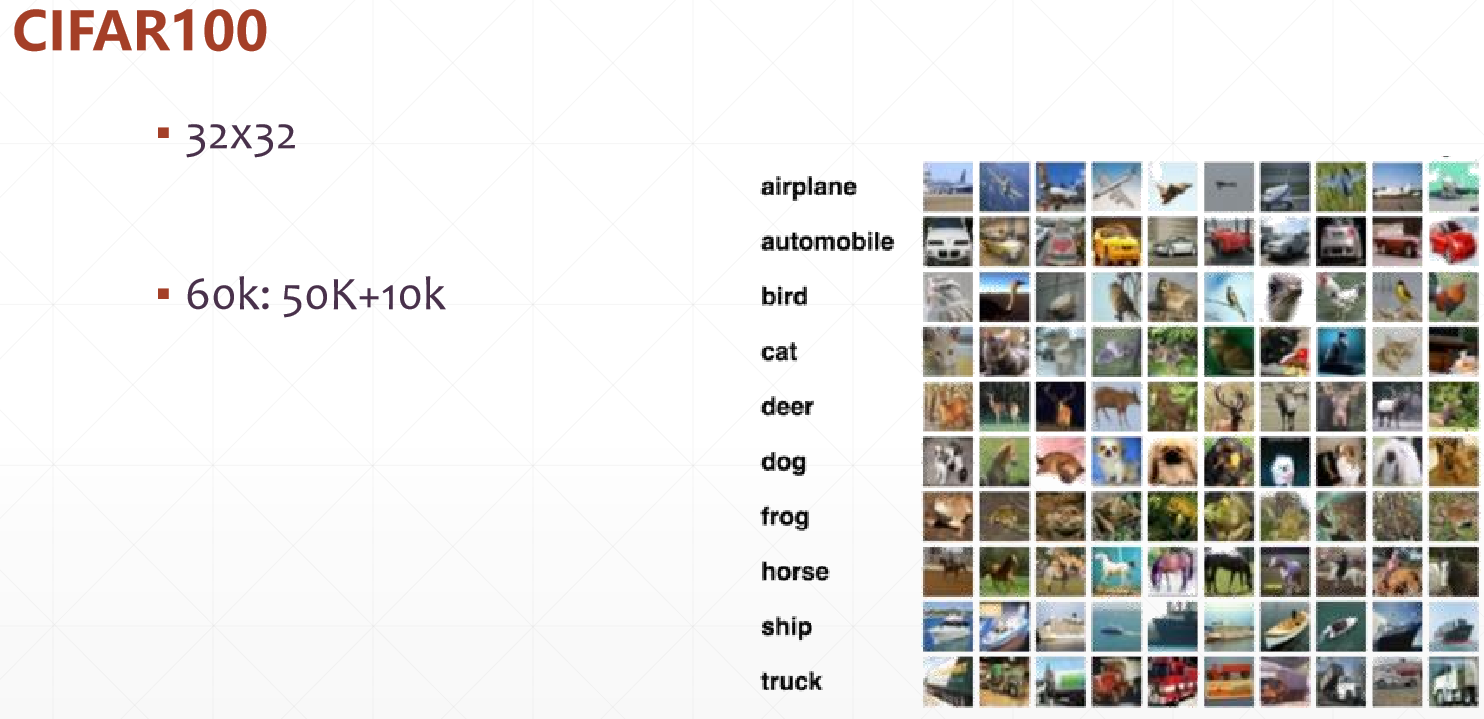

27. CIFAR100 in Practice

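CIFAR100 contains the same amount of data as CIFAR10: 60,000 32x32 color images, split into 50,000 for training and 10,000 for testing. CIFAR10 divides the images into 10 classes, while CIFAR100 divides them into 100 fine-grained classes, grouped into 20 superclasses of 5 classes each. The 100 classes are not subdivisions of the CIFAR10 classes; they are a separate labeling, so each class has one tenth as many images as a CIFAR10 class.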
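To make the difference in difficulty concrete, the short sketch below works out how many images each class gets and what accuracy random guessing would achieve. The helper names `per_class` and `chance_accuracy` are made up for this illustration; the dataset sizes are the published ones.

```python
# Published dataset sizes, identical for CIFAR10 and CIFAR100.
TOTAL_IMAGES = 60_000   # 50,000 training + 10,000 test
TRAIN_IMAGES = 50_000


def per_class(num_images, num_classes):
    """Images per class, assuming perfectly balanced classes (hypothetical helper)."""
    return num_images // num_classes


def chance_accuracy(num_classes):
    """Expected accuracy of uniform random guessing (hypothetical helper)."""
    return 1.0 / num_classes


for name, num_classes in [("CIFAR10", 10), ("CIFAR100", 100)]:
    print(name,
          "images/class:", per_class(TOTAL_IMAGES, num_classes),
          "train images/class:", per_class(TRAIN_IMAGES, num_classes),
          "chance accuracy:", chance_accuracy(num_classes))
```

With only 500 training images per class and a 1% chance baseline, accuracy on CIFAR100 should be expected to be well below what the same network reaches on CIFAR10.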

1. Pipeline

Load datasets

Build Network

Train

Test

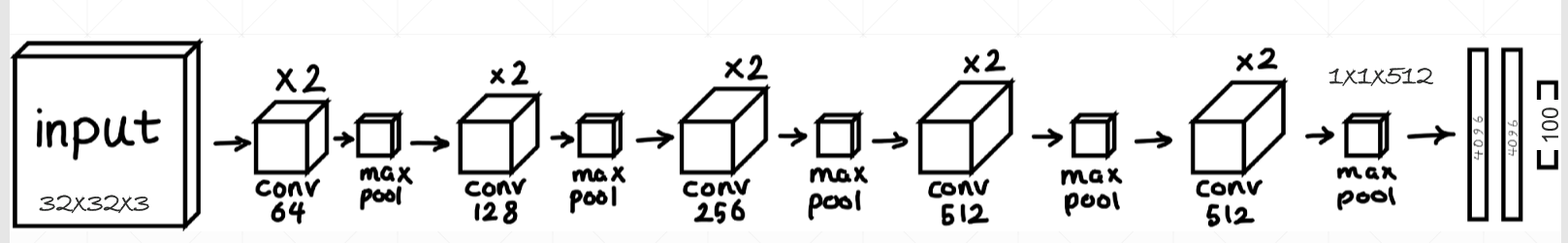

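2. Layers: a 13-layer network

The network has 10 convolutional layers (5 units of 2 layers each) followed by 3 fully connected layers. The 5 max-pooling layers have no weights and are not counted.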
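As a rough check on the architecture, the sketch below traces the feature-map shape through the five conv units and counts trainable parameters. It assumes the layer sizes used in the code section that follows (3x3 kernels, `same` padding, 2x2 pooling with stride 2, dense layers of 256, 128 and 100 units). The function `trace_network` is a hypothetical helper written for this illustration, not part of TensorFlow.

```python
import math

CONV_UNITS = [64, 128, 256, 512, 512]  # output channels of each conv unit
FC_LAYERS = [256, 128, 100]            # units of each fully connected layer


def trace_network(height=32, width=32, in_channels=3):
    """Return per-unit output shapes plus conv and dense parameter counts."""
    shapes = []
    conv_params = 0
    channels = in_channels
    for out_channels in CONV_UNITS:
        # Two 3x3 convolutions per unit: weights plus one bias per output channel.
        for _ in range(2):
            conv_params += 3 * 3 * channels * out_channels + out_channels
            channels = out_channels
        # 2x2 max pooling, stride 2, 'same' padding: size becomes ceil(n / 2).
        height = math.ceil(height / 2)
        width = math.ceil(width / 2)
        shapes.append((height, width, channels))

    # Flatten, then count weights and biases of each dense layer.
    features = height * width * channels
    fc_params = 0
    for units in FC_LAYERS:
        fc_params += features * units + units
        features = units
    return shapes, conv_params, fc_params


shapes, conv_params, fc_params = trace_network()
print(shapes)
print(conv_params, fc_params, conv_params + fc_params)
```

The spatial size halves in every unit (32, 16, 8, 4, 2, 1), so the conv stack ends at `[b, 1, 1, 512]`, which is why the code reshapes to `[-1, 512]` before the dense layers. Almost all of the roughly 9.6 million parameters sit in the convolutional layers.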

3. Code

```python
import os

import tensorflow as tf
from tensorflow.keras import layers, optimizers, datasets, Sequential

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # hide TensorFlow INFO and WARNING logs
tf.random.set_seed(2345)

# Allocate GPU memory on demand instead of reserving it all up front.
gpus = tf.config.list_physical_devices('GPU')
for gpu in gpus:
    tf.config.experimental.set_memory_growth(gpu, True)


conv_layers = [  # 5 units of conv + max pooling
    # unit 1: each unit has two convolution layers followed by one max-pooling layer
    # 64 kernels of size 3x3; 'same' padding keeps the spatial size unchanged
    layers.Conv2D(64, kernel_size=[3, 3], padding="same", activation=tf.nn.relu),
    layers.Conv2D(64, kernel_size=[3, 3], padding="same", activation=tf.nn.relu),
    # 2x2 pooling window, stride 2
    layers.MaxPool2D(pool_size=[2, 2], strides=2, padding='same'),

    # unit 2
    layers.Conv2D(128, kernel_size=[3, 3], padding="same", activation=tf.nn.relu),
    layers.Conv2D(128, kernel_size=[3, 3], padding="same", activation=tf.nn.relu),
    layers.MaxPool2D(pool_size=[2, 2], strides=2, padding='same'),

    # unit 3
    layers.Conv2D(256, kernel_size=[3, 3], padding="same", activation=tf.nn.relu),
    layers.Conv2D(256, kernel_size=[3, 3], padding="same", activation=tf.nn.relu),
    layers.MaxPool2D(pool_size=[2, 2], strides=2, padding='same'),

    # unit 4
    layers.Conv2D(512, kernel_size=[3, 3], padding="same", activation=tf.nn.relu),
    layers.Conv2D(512, kernel_size=[3, 3], padding="same", activation=tf.nn.relu),
    layers.MaxPool2D(pool_size=[2, 2], strides=2, padding='same'),

    # unit 5: keep 512 channels, since the parameter count is already large
    layers.Conv2D(512, kernel_size=[3, 3], padding="same", activation=tf.nn.relu),
    layers.Conv2D(512, kernel_size=[3, 3], padding="same", activation=tf.nn.relu),
    layers.MaxPool2D(pool_size=[2, 2], strides=2, padding='same'),
]


def preprocess(x, y):
    # scale pixel values to [0, 1]
    x = tf.cast(x, dtype=tf.float32) / 255.
    y = tf.cast(y, dtype=tf.int32)
    return x, y


(x, y), (x_test, y_test) = datasets.cifar100.load_data()
# labels arrive as [n, 1]; drop the trailing axis to get [n]
y = tf.squeeze(y, axis=1)
y_test = tf.squeeze(y_test, axis=1)
print(x.shape, y.shape, x_test.shape, y_test.shape)


train_db = tf.data.Dataset.from_tensor_slices((x, y))
train_db = train_db.shuffle(1000).map(preprocess).batch(128)

test_db = tf.data.Dataset.from_tensor_slices((x_test, y_test))
test_db = test_db.map(preprocess).batch(64)

sample = next(iter(train_db))
print('sample:', sample[0].shape, sample[1].shape,
      tf.reduce_min(sample[0]), tf.reduce_max(sample[0]))


def main():

    # [b, 32, 32, 3] => [b, 1, 1, 512]: shape change through the conv network
    conv_net = Sequential(conv_layers)

    fc_net = Sequential([
        layers.Dense(256, activation=tf.nn.relu),
        layers.Dense(128, activation=tf.nn.relu),
        layers.Dense(100, activation=None),  # output layer: 100 classes, raw logits
    ])

    # build the networks
    conv_net.build(input_shape=[None, 32, 32, 3])
    fc_net.build(input_shape=[None, 512])
    # `lr` is a deprecated alias; use `learning_rate`
    optimizer = optimizers.Adam(learning_rate=1e-4)

    # [1, 2] + [3, 4] => [1, 2, 3, 4]
    # parameters to differentiate; adding two lists concatenates them
    variables = conv_net.trainable_variables + fc_net.trainable_variables

    for epoch in range(50):

        for step, (x, y) in enumerate(train_db):

            with tf.GradientTape() as tape:
                # [b, 32, 32, 3] => [b, 1, 1, 512]
                out = conv_net(x)
                # flatten, => [b, 512]
                out = tf.reshape(out, [-1, 512])
                # [b, 512] => [b, 100]
                logits = fc_net(out)
                # [b] => [b, 100]
                y_onehot = tf.one_hot(y, depth=100)
                # compute loss
                loss = tf.losses.categorical_crossentropy(y_onehot, logits, from_logits=True)
                loss = tf.reduce_mean(loss)

            grads = tape.gradient(loss, variables)
            optimizer.apply_gradients(zip(grads, variables))

            if step % 100 == 0:
                print(epoch, step, 'loss:', float(loss))

        # evaluate on the test set after every epoch
        total_num = 0
        total_correct = 0
        for x, y in test_db:

            out = conv_net(x)
            out = tf.reshape(out, [-1, 512])
            logits = fc_net(out)
            prob = tf.nn.softmax(logits, axis=1)
            pred = tf.argmax(prob, axis=1)
            pred = tf.cast(pred, dtype=tf.int32)

            correct = tf.cast(tf.equal(pred, y), dtype=tf.int32)
            correct = tf.reduce_sum(correct)

            total_num += x.shape[0]
            total_correct += int(correct)

        acc = total_correct / total_num
        print(epoch, 'acc:', acc)


if __name__ == '__main__':
    main()
```
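To see what the loss and accuracy steps compute without running the full model, here is a minimal NumPy sketch of softmax cross-entropy on logits and of top-1 accuracy. It is an illustration of the math under the assumption of integer class labels, not the TensorFlow implementation; the function names `softmax_cross_entropy` and `accuracy` are invented for this example.

```python
import numpy as np


def softmax_cross_entropy(logits, labels, num_classes):
    """Mean cross-entropy between one-hot labels and softmax(logits)."""
    # Subtract the row maximum first so the exponentials cannot overflow.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    onehot = np.eye(num_classes)[labels]          # [b] => [b, num_classes]
    return float(-(onehot * log_probs).sum(axis=1).mean())


def accuracy(logits, labels):
    """Fraction of rows whose largest logit is at the true label."""
    pred = logits.argmax(axis=1)
    return float((pred == labels).mean())


rng = np.random.default_rng(0)
labels = rng.integers(0, 100, size=8)

# All-zero logits give a uniform prediction over 100 classes,
# so the loss equals ln(100), about 4.605.
zero_logits = np.zeros((8, 100))
print(softmax_cross_entropy(zero_logits, labels, 100))
```

A loss near 4.6 at the first training step is therefore what an untrained 100-class model should report. Since softmax preserves the ordering of the logits, taking `argmax` on the logits gives the same predictions as taking it on the probabilities.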