13. Dataset loading

① keras.datasets: load ready-made datasets

② tf.data.Dataset.from_tensor_slices: wrap data already in memory (e.g. NumPy arrays) as a dataset of tensors

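- shuffle: randomize the order of the elements

- map: apply a transformation function to every element

- batch: group elements into batches

- repeat: iterate over the data repeatedly (multiple epochs)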

③ Pipeline: multi-threaded loading of large datasets
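Item ③ refers to an input pipeline in which loading and preprocessing run in parallel with training. The sketch below is minimal and rests on a few assumptions. It targets TensorFlow 2.4 or later, where `tf.data.AUTOTUNE` is available; older versions spell it `tf.data.experimental.AUTOTUNE`. Random synthetic arrays stand in for a real dataset, and the `preprocess` function is hypothetical.

In the pipeline, `num_parallel_calls` lets `map` process several elements concurrently. `prefetch` prepares upcoming batches while the current batch is being consumed.

```python
import numpy as np
import tensorflow as tf

# Synthetic stand-in data (assumption): 1000 fake 28x28 grayscale images and labels
x = np.random.randint(0, 256, size=(1000, 28, 28), dtype=np.uint8)
y = np.random.randint(0, 10, size=(1000,), dtype=np.int64)

def preprocess(img, label):
    # Hypothetical preprocessing: scale pixels to [0, 1] and one-hot encode the label
    img = tf.cast(img, tf.float32) / 255.0
    label = tf.one_hot(label, depth=10)
    return img, label

AUTOTUNE = tf.data.AUTOTUNE  # let tf.data choose the degree of parallelism

ds = (
    tf.data.Dataset.from_tensor_slices((x, y))
    .shuffle(1000)                                 # buffer covers the whole dataset
    .map(preprocess, num_parallel_calls=AUTOTUNE)  # run preprocess on several elements in parallel
    .batch(32)
    .prefetch(AUTOTUNE)                            # overlap data preparation with consumption
)

images, labels = next(iter(ds))
print(images.shape, labels.shape)  # (32, 28, 28) (32, 10)
```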

(1)keras.datasets: loading ready-made datasets

① boston housing

Boston housing price regression dataset.

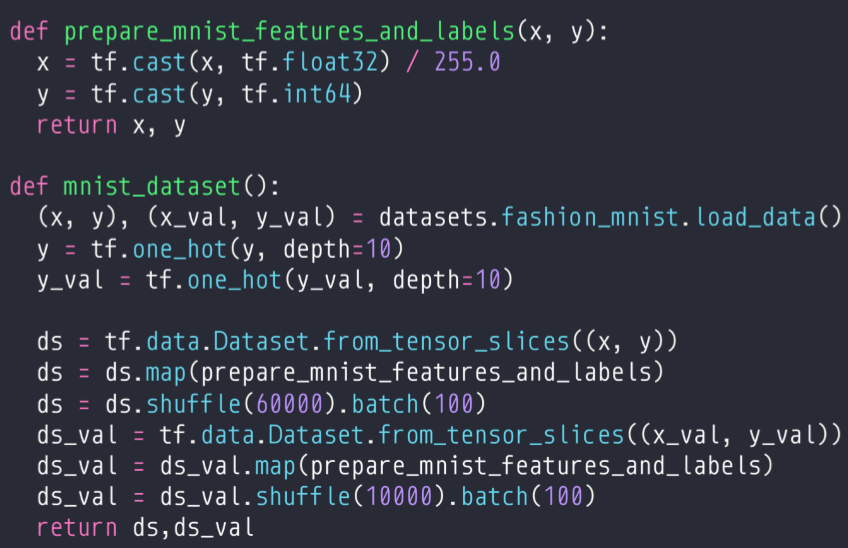

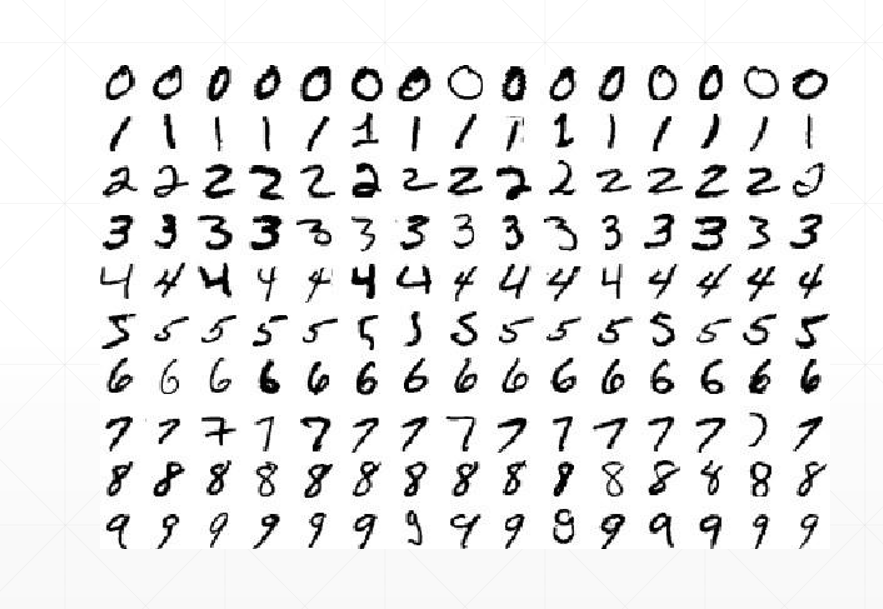

② mnist/fashion mnist

MNIST (handwritten digits) and Fashion-MNIST (grayscale images of clothing) datasets.

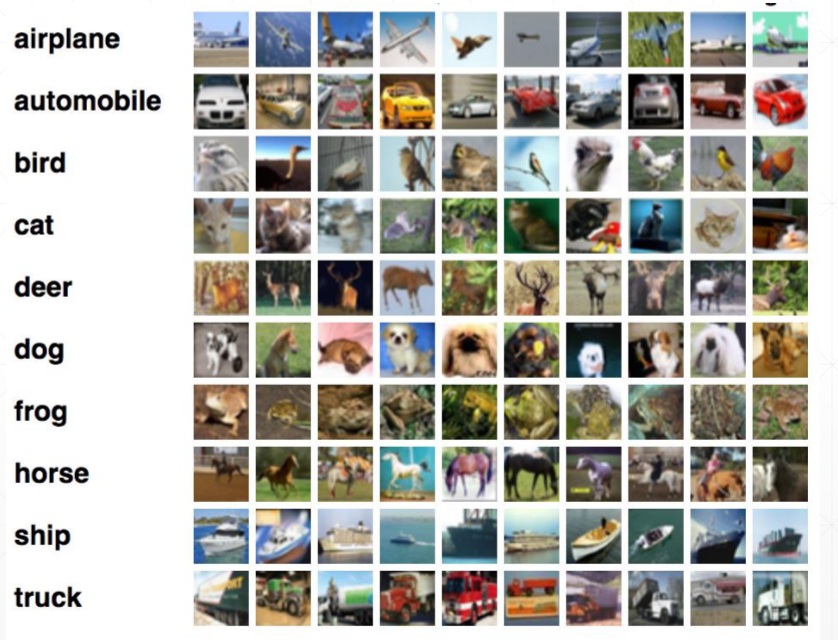

③ cifar10/100

Small-image classification datasets.

④ imdb

IMDB movie-review sentiment classification dataset, used for NLP tasks.

(2)MNIST handwritten digit dataset

Each digit image is 28x28 pixels with 1 channel. The 70k images are split into 60k training images and 10k test images.

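```python
import tensorflow as tf
from tensorflow.keras import datasets

(x, y), (x_test, y_test) = datasets.mnist.load_data()
print(x[1])       # the second training image, a 28x28 uint8 array
print(x.shape)    # (60000, 28, 28)
print(y[:20])     # the first 20 labels

y_onehot = tf.one_hot(y, depth=10)  # one-hot encode the labels
print(y_onehot[:10])
```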

Output:

```
[[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 51 159 253 159 50 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 48 238 252 252 252 237 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 54 227 253 252 239 233 252 57 6 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 10 60 224 252 253 252 202 84 252 253 122 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 163 252 252 252 253 252 252 96 189 253 167 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 51 238 253 253 190 114 253 228 47 79 255 168 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 48 238 252 252 179 12 75 121 21 0 0 253 243 50 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 38 165 253 233 208 84 0 0 0 0 0 0 253 252 165 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 7 178 252 240 71 19 28 0 0 0 0 0 0 253 252 195 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 57 252 252 63 0 0 0 0 0 0 0 0 0 253 252 195 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 198 253 190 0 0 0 0 0 0 0 0 0 0 255 253 196 0 0 0 0 0]
 [ 0 0 0 0 0 0 76 246 252 112 0 0 0 0 0 0 0 0 0 0 253 252 148 0 0 0 0 0]
 [ 0 0 0 0 0 0 85 252 230 25 0 0 0 0 0 0 0 0 7 135 253 186 12 0 0 0 0 0]
 [ 0 0 0 0 0 0 85 252 223 0 0 0 0 0 0 0 0 7 131 252 225 71 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 85 252 145 0 0 0 0 0 0 0 48 165 252 173 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 86 253 225 0 0 0 0 0 0 114 238 253 162 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 85 252 249 146 48 29 85 178 225 253 223 167 56 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 85 252 252 252 229 215 252 252 252 196 130 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 28 199 252 252 253 252 252 233 145 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 25 128 252 253 252 141 37 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]]
(60000, 28, 28)
[5 0 4 1 9 2 1 3 1 4 3 5 3 6 1 7 2 8 6 9]
tf.Tensor(
[[0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]
 [1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]
 [0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]
 [0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]
 [0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]
 [0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]
 [0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]
 [0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]
 [0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]], shape=(10, 10), dtype=float32)
```
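`tf.one_hot(y, depth=10)` turns each integer label into a length-10 vector containing a single 1 at the label's index. The NumPy sketch below is our own illustration, not TensorFlow code. Indexing an identity matrix with the label array produces the same rows as the one-hot output above. Unlike `tf.one_hot`, this sketch does not validate labels: out-of-range values raise an error, and negative values silently wrap around.

```python
import numpy as np

def one_hot(labels, depth):
    """NumPy sketch of one-hot encoding: row k of np.eye(depth) is the code for label k."""
    labels = np.asarray(labels, dtype=np.int64)
    return np.eye(depth, dtype=np.float32)[labels]

labels = np.array([5, 0, 4, 1, 9])  # the first five MNIST training labels printed above
encoded = one_hot(labels, depth=10)
print(encoded.shape)           # (5, 10)
print(encoded.argmax(axis=1))  # [5 0 4 1 9]
```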

(3)CIFAR10/100

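Each image is 32x32 pixels with 3 (RGB) channels. The 60k images are split into 50k training images and 10k test images. CIFAR-10 has 10 classes and CIFAR-100 has 100 classes.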

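```python
import tensorflow as tf
from tensorflow.keras import datasets

(x, y), (x_test, y_test) = datasets.cifar10.load_data()
print(x.shape)    # (50000, 32, 32, 3)
print(y[:20])     # labels have shape (50000, 1), so each one prints as [k]

y_onehot = tf.one_hot(y, depth=10)  # shape (50000, 1, 10): the extra axis of y is kept
print(y_onehot[:10])
```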

Output:

```
(50000, 32, 32, 3)
[[6]
 [9]
 [9]
 [4]
 [1]
 [1]
 [2]
 [7]
 [8]
 [3]
 [4]
 [7]
 [7]
 [2]
 [9]
 [9]
 [9]
 [3]
 [2]
 [6]]
tf.Tensor(
[[[0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]]
 [[0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]]
 [[0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]]
 [[0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]]
 [[0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]]
 [[0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]]
 [[0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]]
 [[0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]]
 [[0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]]
 [[0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]]], shape=(10, 1, 10), dtype=float32)
```
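The one-hot result above has shape `(10, 1, 10)` rather than `(10, 10)`. This happens because CIFAR labels come with shape `(N, 1)`, and one-hot encoding keeps that extra axis. A small NumPy sketch (our own illustration) shows the effect and the fix, which is to flatten the labels to shape `(N,)` before encoding. In TensorFlow the equivalent step would be `tf.squeeze(y, axis=1)`.

```python
import numpy as np

# CIFAR-style labels with shape (N, 1): the first five labels printed above
y = np.array([[6], [9], [9], [4], [1]])

eye = np.eye(10, dtype=np.float32)  # row k is the one-hot code for label k
print(eye[y].shape)                 # (5, 1, 10): the extra axis is kept

y_flat = y.reshape(-1)              # shape (5,); same as np.squeeze(y, axis=1)
print(eye[y_flat].shape)            # (5, 10)
```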

(4)tf.data.Dataset

① tf.data.Dataset.from_tensor_slices()

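Converts NumPy arrays into a `tf.data.Dataset`. The input is sliced along its first axis, so each element of the dataset is one sample, as a tensor.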

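```python
import tensorflow as tf
from tensorflow.keras import datasets

(x, y), (x_test, y_test) = datasets.cifar10.load_data()
db = tf.data.Dataset.from_tensor_slices(x_test)  # slice x_test into 10000 image tensors
print(db)  # prints a Dataset object, not the data itself

db_ = next(iter(db))  # iter(db) gives an iterator; next() returns the first image
print(db_.shape)      # (32, 32, 3)
```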
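`from_tensor_slices` slices its input along the first axis, so element i of the dataset is `x[i]`. Passing a tuple `(x, y)` pairs `x[i]` with `y[i]`. The pure-NumPy sketch below illustrates that slicing rule only and says nothing about how tf.data is implemented internally. The helper `tensor_slices` is hypothetical, and tiny zero arrays stand in for CIFAR data.

```python
import numpy as np

def tensor_slices(data):
    """Hypothetical helper: yield the i-th slice of `data`; for a tuple, yield a tuple of i-th slices."""
    if isinstance(data, tuple):
        n = len(data[0])
        if any(len(d) != n for d in data):
            raise ValueError("all components need the same first dimension")
        for i in range(n):
            yield tuple(d[i] for d in data)
    else:
        for i in range(len(data)):
            yield data[i]

x = np.zeros((4, 32, 32, 3), dtype=np.uint8)  # 4 fake CIFAR-sized images
y = np.arange(4).reshape(4, 1)               # labels with shape (4, 1), like CIFAR

first = next(tensor_slices(x))
print(first.shape)               # (32, 32, 3)
img, label = next(tensor_slices((x, y)))
print(img.shape, label.shape)    # (32, 32, 3) (1,)
```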

② .shuffle

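In deep learning, neural networks memorize very well. If the samples are always fed in the same fixed order, the network can exploit that order as a shortcut, which hurts its predictions on new data. `shuffle` randomizes the order of the elements to prevent this.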

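```python
import tensorflow as tf
from tensorflow.keras import datasets

(x, y), (x_test, y_test) = datasets.cifar10.load_data()
db = tf.data.Dataset.from_tensor_slices(x_test)
db = db.shuffle(10000)  # buffer_size=10000 holds all 10000 test images
print(db)               # a ShuffleDataset object, e.g. <ShuffleDataset shapes: (32, 32, 3), types: tf.uint8>
print(next(iter(db)))
```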
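The argument to `shuffle` is a buffer size. The `tf.data.Dataset.shuffle` documentation describes the procedure: fill a buffer with `buffer_size` elements, sample one at random, and replace it with the next input element. The pure-Python sketch below follows that description. The function name `buffered_shuffle` is our own, and this is not TensorFlow's code.

The sketch shows why the buffer size matters. With `buffer_size=1` nothing is shuffled at all. With a small buffer, an element can move at most `buffer_size - 1` positions earlier than its original index. A buffer at least as large as the dataset, like `shuffle(10000)` on the 10000 test images, gives a uniform shuffle.

```python
import random

def buffered_shuffle(elements, buffer_size, seed=None):
    """Sketch of the shuffle-buffer algorithm described in the tf.data.Dataset.shuffle docs."""
    if buffer_size < 1:
        raise ValueError("buffer_size must be >= 1")
    rng = random.Random(seed)
    it = iter(elements)
    buffer = []
    # 1. Fill the buffer with the first buffer_size elements
    for item in it:
        buffer.append(item)
        if len(buffer) == buffer_size:
            break
    # 2. Emit a random buffered element and put the next input element in its slot
    for item in it:
        idx = rng.randrange(len(buffer))
        yield buffer[idx]
        buffer[idx] = item
    # 3. Input exhausted: drain the remaining buffer in random order
    rng.shuffle(buffer)
    yield from buffer

data = list(range(10))
print(list(buffered_shuffle(data, buffer_size=1)))  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]: no shuffling
print(sorted(buffered_shuffle(data, buffer_size=3, seed=0)) == data)  # True: still a permutation
```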

③ .map

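```python
import tensorflow as tf
from tensorflow.keras import datasets

def preProcess(x, y):
    x = tf.cast(x, dtype=tf.float32) / 255  # scale pixels from [0, 255] to [0, 1]
    y = tf.cast(y, dtype=tf.int32)
    y = tf.one_hot(y, depth=10)              # y has shape (1,), so the result is (1, 10)
    return x, y

(x, y), (x_test, y_test) = datasets.cifar10.load_data()
db = tf.data.Dataset.from_tensor_slices((x_test, y_test))  # elements are (image, label) pairs
db2 = db.map(preProcess)  # preProcess is applied to every element
print(next(iter(db2)))
```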
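`preProcess` above takes two parameters because each dataset element is an `(image, label)` tuple, and `map` passes the tuple's components as separate arguments. `map` is also lazy: the function runs only as elements are requested.

The pure-Python sketch below mimics these two properties. `dataset_map` is a hypothetical name, and `pre_process` repeats the steps of `preProcess` in NumPy. Neither is TensorFlow API.

```python
import numpy as np

def dataset_map(fn, elements):
    """Hypothetical sketch of Dataset.map: apply fn lazily, unpacking tuple elements into arguments."""
    for e in elements:
        yield fn(*e) if isinstance(e, tuple) else fn(e)

def pre_process(x, y, depth=10):
    # NumPy version of preProcess: scale pixels to [0, 1], one-hot encode the label
    x = x.astype(np.float32) / 255.0
    y = np.eye(depth, dtype=np.float32)[np.asarray(y, dtype=np.int64)]
    return x, y

# One fake (image, label) element; the label has shape (1,), as in CIFAR
elements = [(np.full((32, 32, 3), 255, dtype=np.uint8), np.array([3]))]
x0, y0 = next(dataset_map(pre_process, elements))
print(x0.dtype, x0.max(), y0.shape)  # float32 1.0 (1, 10)
```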

④ .batch

```python
db3 = db2.batch(32)  # stack 32 consecutive elements into one batch
res = next(iter(db3))
print(res[0].shape, res[1].shape)  # (32, 32, 32, 3) (32, 1, 10)
```
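The 10000 CIFAR-10 test images do not divide evenly into batches of 32, since 10000 = 312 × 32 + 16. By default, `batch` keeps the final smaller batch; passing `drop_remainder=True` discards it.

The pure-Python sketch below shows that arithmetic. `dataset_batch` is a hypothetical name. Unlike the real `batch`, it groups elements into lists instead of stacking tensors along a new leading axis.

```python
def dataset_batch(elements, batch_size, drop_remainder=False):
    """Hypothetical sketch of Dataset.batch: group consecutive elements into lists of batch_size."""
    batch = []
    for e in elements:
        batch.append(e)
        if len(batch) == batch_size:
            yield batch
            batch = []
    # Leftover elements form a smaller final batch unless drop_remainder is set
    if batch and not drop_remainder:
        yield batch

sizes = [len(b) for b in dataset_batch(range(10000), 32)]
print(len(sizes), sizes[-1])  # 313 16
sizes_dropped = [len(b) for b in dataset_batch(range(10000), 32, drop_remainder=True)]
print(len(sizes_dropped))     # 312
```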

⑤ .repeat()

```python
db4 = db3.repeat()  # no count given: repeat the dataset indefinitely
print(db4)
```
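`repeat(count)` replays the dataset `count` times. With no argument, it repeats forever, so iteration never ends on its own. A training loop over such a dataset has to stop by step count. For example, Keras `Model.fit` needs `steps_per_epoch` to know where an epoch ends.

The pure-Python sketch below (the name `dataset_repeat` is hypothetical, not TensorFlow API) illustrates both cases. It assumes the input is re-iterable, such as a list.

```python
import itertools

def dataset_repeat(elements, count=None):
    """Hypothetical sketch of Dataset.repeat: replay a re-iterable `count` times, or forever if None."""
    epochs = itertools.count() if count is None else range(count)
    for _ in epochs:
        yield from elements

# Infinite repetition: take only the first 7 elements
print(list(itertools.islice(dataset_repeat([1, 2, 3]), 7)))  # [1, 2, 3, 1, 2, 3, 1]
print(list(dataset_repeat([1, 2, 3], count=2)))              # [1, 2, 3, 1, 2, 3]
```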