Nginx for Front-End Developers


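This article covers common nginx commands, basic concepts (forward/reverse proxy, load balancing, static/dynamic separation, high availability) and the structure of the configuration file. It then uses a few simple experiments to try out reverse proxying and load balancing, and finishes with a look at how nginx works.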

Why learn nginx

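Scenario 1: cross-origin requests. For example, if the back end doesn't want to enable CORS, the front end can use a proxy plus nginx to get around the cross-origin restriction.

Scenario 2: a back-end developer looks at you in surprise and asks: you (a front-end developer) have never worked with nginx?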
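Scenario 1 depends on how the browser decides what counts as cross-origin. It compares the scheme, host and port of the page with those of the request. Below is a minimal Python sketch of that comparison. The helper names and the `/api` path are hypothetical, and the hosts are the ones used later in this article. Once nginx serves both the page and the proxied API from the same origin, the browser sees a same-origin request and CORS never comes into play.

```python
from urllib.parse import urlsplit

# Default ports a browser assumes when the URL doesn't name one.
DEFAULT_PORTS = {"http": 80, "https": 443}

def origin(url: str) -> tuple:
    """Return the (scheme, host, port) triple that the same-origin policy compares."""
    parts = urlsplit(url)
    port = parts.port or DEFAULT_PORTS.get(parts.scheme)
    return (parts.scheme, parts.hostname, port)

def is_cross_origin(page_url: str, request_url: str) -> bool:
    """True when a request from page_url to request_url is subject to CORS."""
    return origin(page_url) != origin(request_url)

# Page calls the backend directly: different host and port, so cross-origin.
print(is_cross_origin("http://www.erpsystem.com/", "http://192.168.1.135:8000/api"))  # True
# Page calls the backend through a (hypothetical) nginx /api location on its own origin.
print(is_cross_origin("http://www.erpsystem.com/", "http://www.erpsystem.com/api"))   # False
```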

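What is nginx

nginx is a high-performance HTTP server and reverse proxy.

It has a small memory footprint and handles concurrency well; it is commonly cited as supporting up to 50,000 concurrent connections. In practice, its concurrency is among the better of comparable web servers.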

Installation

nginx had already been installed by a colleague, so I'll skip this part for now.

正向/反向代理

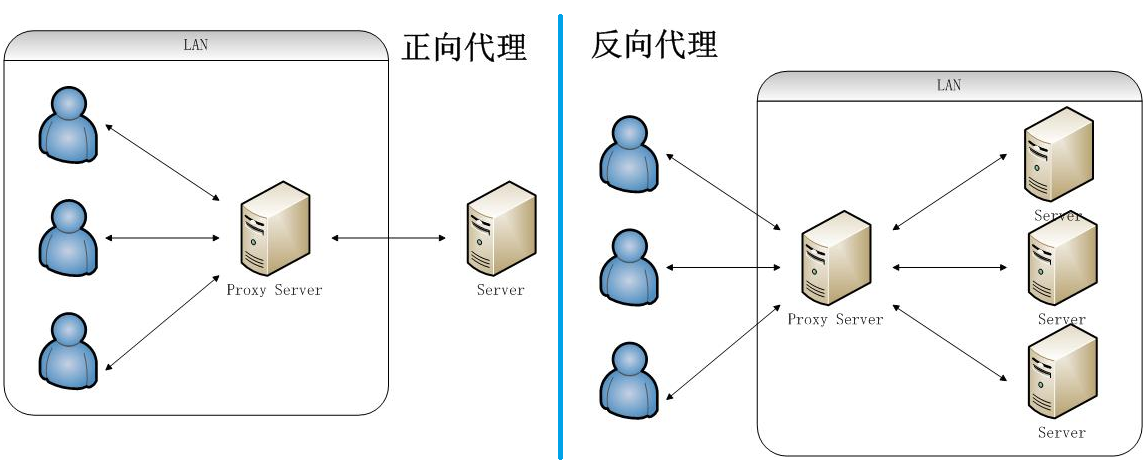

- 正向代理是

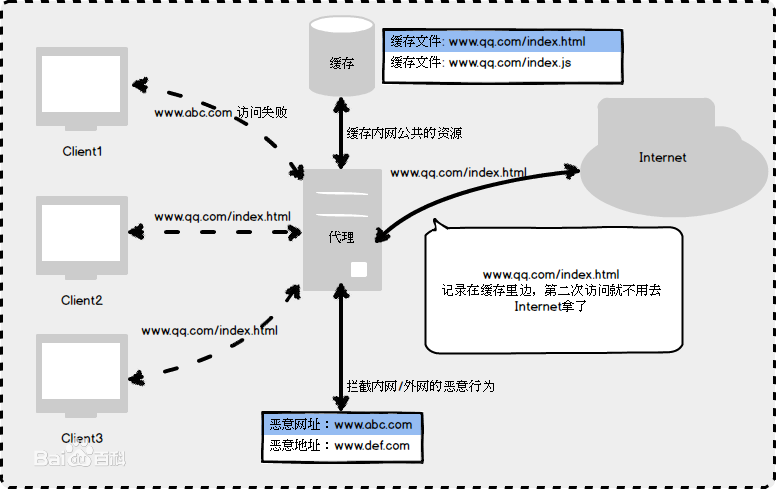

代理客户端。客户端向代理发送一个请求并指定目标(原始服务器)。典型用途是为在防火墙内的局域网客户端提供访问Internet的途径,还可以使用缓冲特性减少网络使用率。就像这样:

- 反向代理是

代理服务器。对于用户而言,反向代理服务器就相当于目标服务器,用户不需要知道目标服务器的地址,也无须在用户端作任何设置。反向代理可以隐藏服务器真实ip、给多台服务器做负载均衡。

负载均衡

最简单的项目结构如下:

客户端 <---> 服务(1台) <---> 数据库

客户端发送请求到服务器,服务器查询数据库并返回数据给客户端。

某天用户数量剧增,并发数多了起来,一台服务器根本扛不住。

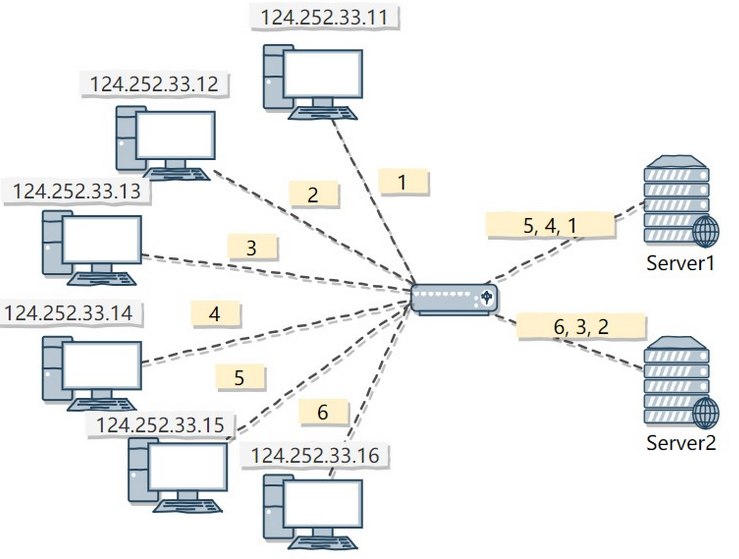

这就好比有一根很大的木头(请求数多,要处理的事情也多),一匹马(一台服务器)拉不动,通常我们不会换一匹更强壮的马(更好的服务器),那样费用会很高,而且也不一定拉得动这根大木头,可以多找几匹普通的马。就像下图:

再增加一台服务器,然后通过负载均衡策略,把 6 个请求平分给多台服务器,从而提高系统并发数。
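To make "split the 6 requests evenly" concrete, here is a minimal Python simulation of round-robin assignment, the default strategy described later. The server names are placeholders. The `alive` parameter is a hypothetical way to model a backend that has gone down. This is a sketch of the idea, not how nginx implements it.

```python
from itertools import cycle

def round_robin(servers, requests, alive=None):
    """Assign each request to the next server in turn, skipping servers that are down."""
    up = set(servers) if alive is None else set(alive) & set(servers)
    if not up:
        raise RuntimeError("no backend server is available")
    ring = cycle(servers)
    plan = []
    for request in requests:
        server = next(ring)
        while server not in up:  # pass over servers that are down
            server = next(ring)
        plan.append((request, server))
    return plan

requests = [f"request{i}" for i in range(1, 7)]
for request, server in round_robin(["server1", "server2"], requests):
    print(request, "->", server)
```

With both servers up, each one receives 3 of the 6 requests. Passing `alive={"server1"}` sends every request to server1.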

动静分离

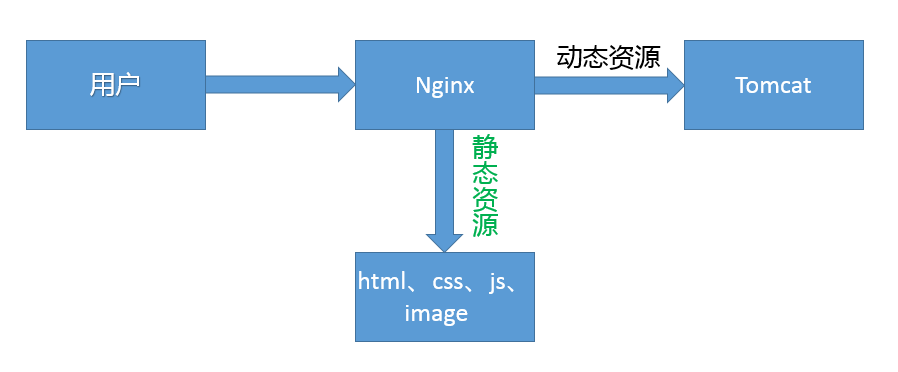

动静分离是指在web服务器架构中,将静态页面与动态页面或者静态内容接口和动态内容接口分开,进而提升整个服务访问性能和可维护性。

假如用户访问一个网页,发起10个请求,如果都由同一台服务器处理,该服务器就得处理 10个;如果将其中5个静态请求(html、css、js、image)抽离出去(比如放入静态资源服务器),该服务器(例如 tomcat)的压力就会相应减小,而且静态资源更好部署和扩展。就像这样:

动静分离实现有:

- 将静态文件独立成单独的域名,放在独立的服务区(目前主流)

- 动态和静态一起发布,通过 nginx 区分

比如静态资源放在安装 nginx 的服务器中,动态资源可走代理(到 tomcat)
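The second approach comes down to a routing decision for each request. Below is a minimal Python sketch of that decision. The `STATIC_EXTENSIONS` set is a hypothetical example. In nginx itself this is usually written as a regex `location` for static file extensions (served from disk with `root`), placed next to a `location /` that uses `proxy_pass`.

```python
from pathlib import PurePosixPath

# Hypothetical set of extensions treated as static; adjust it to your site.
STATIC_EXTENSIONS = {".html", ".css", ".js", ".png", ".jpg", ".gif", ".svg", ".ico"}

def route(path: str) -> str:
    """Return 'static' if nginx should serve the file itself, else 'proxy' (e.g. to tomcat)."""
    suffix = PurePosixPath(path.split("?", 1)[0]).suffix.lower()  # ignore the query string
    return "static" if suffix in STATIC_EXTENSIONS else "proxy"

for p in ["/index.html", "/css/app.css?v=3", "/api/users?id=1"]:
    print(p, "->", route(p))
```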

Common commands

nginx -h

nginx -h prints help information. For example, -v shows the version and -s sends a signal (stop, reload, etc.) to the master process.

```
root@linux:/# nginx -h
nginx version: nginx/1.18.0 (Ubuntu)
Usage: nginx [-?hvVtTq] [-s signal] [-c filename] [-p prefix] [-g directives]

Options:
  -?,-h         : this help
  -v            : show version and exit
  -V            : show version and configure options then exit
  -t            : test configuration and exit
  -T            : test configuration, dump it and exit
  -q            : suppress non-error messages during configuration testing
  -s signal     : send signal to a master process: stop, quit, reopen, reload
  -p prefix     : set prefix path (default: /usr/share/nginx/)
  -c filename   : set configuration file (default: /etc/nginx/nginx.conf)
  -g directives : set global directives out of configuration file
```

nginx -v

nginx -v shows the version.

```
root@linux:/# nginx -v
nginx version: nginx/1.18.0 (Ubuntu)
```

man nginx

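man nginx opens the manual. It shows that -s sends a signal to the master process, with commands such as stop, quit and reload. It also lists nginx's main configuration file, /etc/nginx/nginx.conf, and its error log, /var/log/nginx/error.log, among other things.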

```
# Save the nginx manual to nginx-man.txt
root@linux:/# man nginx >> nginx-man.txt
root@linux:/# cat nginx-man.txt
NGINX(8)                BSD System Manager's Manual                NGINX(8)

NAME
     nginx — HTTP and reverse proxy server, mail proxy server

SYNOPSIS
     nginx [-?hqTtVv] [-c file] [-g directives] [-p prefix] [-s signal]

DESCRIPTION
     nginx (pronounced “engine x”) is an HTTP and reverse proxy server, a mail proxy server, and a generic TCP/UDP proxy server. It is known for its high performance, stability, rich feature set, simple configuration, and low resource consumption.

     The options are as follows:

     -?, -h         Print help.

     -c file        Use an alternative configuration file.

     -g directives  Set global configuration directives. See EXAMPLES for details.

     -p prefix      Set the prefix path. The default value is /usr/share/nginx.

     -q             Suppress non-error messages during configuration testing.

     -s signal      Send a signal to the master process. The argument signal can be one of: stop, quit, reopen, reload. The following table shows the corresponding system signals:

                    stop    SIGTERM
                    quit    SIGQUIT
                    reopen  SIGUSR1
                    reload  SIGHUP

     -T             Same as -t, but additionally dump configuration files to standard output.

     -t             Do not run, just test the configuration file. nginx checks the configuration file syntax and then tries to open files referenced in the configuration file.

     -V             Print the nginx version, compiler version, and configure script parameters.

     -v             Print the nginx version.

SIGNALS
     The master process of nginx can handle the following signals:

     SIGINT, SIGTERM  Shut down quickly.
     SIGHUP           Reload configuration, start the new worker process with a new configuration, and gracefully shut down old worker processes.
     SIGQUIT          Shut down gracefully.
     SIGUSR1          Reopen log files.
     SIGUSR2          Upgrade the nginx executable on the fly.
     SIGWINCH         Shut down worker processes gracefully.

     While there is no need to explicitly control worker processes normally, they support some signals too:

     SIGTERM          Shut down quickly.
     SIGQUIT          Shut down gracefully.
     SIGUSR1          Reopen log files.

DEBUGGING LOG
     To enable a debugging log, reconfigure nginx to build with debugging:

           ./configure --with-debug ...

     and then set the debug level of the error_log:

           error_log /path/to/log debug;

     It is also possible to enable the debugging for a particular IP address:

           events {
                   debug_connection 127.0.0.1;
           }

ENVIRONMENT
     The NGINX environment variable is used internally by nginx and should not be set directly by the user.

FILES
     /run/nginx.pid
             Contains the process ID of nginx. The contents of this file are not sensitive, so it can be world-readable.

     /etc/nginx/nginx.conf
             The main configuration file.

     /var/log/nginx/error.log
             Error log file.

EXIT STATUS
     Exit status is 0 on success, or 1 if the command fails.

EXAMPLES
     Test configuration file ~/mynginx.conf with global directives for PID and quantity of worker processes:

           nginx -t -c ~/mynginx.conf \
                   -g "pid /var/run/mynginx.pid; worker_processes 2;"

SEE ALSO
     Documentation at http://nginx.org/en/docs/.

     For questions and technical support, please refer to http://nginx.org/en/support.html.

HISTORY
     Development of nginx started in 2002, with the first public release on October 4, 2004.

AUTHORS
     Igor Sysoev <igor@sysoev.ru>.

     This manual page was originally written by Sergey A. Osokin <osa@FreeBSD.org.ru> as a result of compiling many nginx documents from all over the world.

BSD                            December 5, 2019                            BSD
```
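The -s option and the SIGNALS section above show that `nginx -s <signal>` simply delivers an ordinary Unix signal to the master process. Below is a minimal Python sketch of that idea, with three assumptions:

- The pid file is at /run/nginx.pid, as listed under FILES. Real nginx takes the path from the `pid` directive in its configuration.
- It only works on Unix.
- It needs permission to signal the master process, typically root.

```python
import os
import signal

# `nginx -s <name>` -> system signal, as listed in the -s table of `man nginx` (Unix only).
NGINX_SIGNALS = {
    "stop": signal.SIGTERM,
    "quit": signal.SIGQUIT,
    "reopen": signal.SIGUSR1,
    "reload": signal.SIGHUP,
}

def read_master_pid(pid_file: str = "/run/nginx.pid") -> int:
    """Read the master process ID; raises FileNotFoundError if the pid file is missing."""
    with open(pid_file) as f:
        return int(f.read().strip())

def send_nginx_signal(name: str, pid_file: str = "/run/nginx.pid") -> None:
    """Roughly what `nginx -s <name>` does: find the master PID and send it the signal."""
    os.kill(read_master_pid(pid_file), NGINX_SIGNALS[name])
```

Calling `send_nginx_signal("reload")` has the same effect as `nginx -s reload`. If nginx is not running, the pid file is missing and `read_master_pid` raises `FileNotFoundError`. That is the same situation as the `open() "/run/nginx.pid" failed` error in the `nginx -s reopen` example.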

nginx -s stop

nginx -s stop stops the service.

nginx is currently running:

```
root@linux:/# systemctl status nginx
● nginx.service - A high performance web server and a reverse proxy server
     Loaded: loaded (/lib/systemd/system/nginx.service; disabled; vendor preset: enabled)
     Active: active (running) since Wed 2022-11-19 14:03:19 CST; 6 days ago
       Docs: man:nginx(8)
    Process: 557940 ExecStartPre=/usr/sbin/nginx -t -q -g daemon on; master_process on; (code=exited, status=0/SUCCESS)
    Process: 557941 ExecStart=/usr/sbin/nginx -g daemon on; master_process on; (code=exited, status=0/SUCCESS)
   Main PID: 557942 (nginx)
      Tasks: 5 (limit: 4549)
     Memory: 2.9M
     CGroup: /system.slice/nginx.service
             ├─557942 nginx: master process /usr/sbin/nginx -g daemon on; master_process on;
             ├─557943 nginx: worker process
             ├─557944 nginx: worker process
             ├─557945 nginx: worker process
             └─557946 nginx: worker process

Nov 19 14:03:19 linux systemd[1]: Starting A high performance web server and a reverse proxy server...
```

Run nginx -s stop to stop the service. Afterwards the status shows Active: inactive (dead), which means nginx has stopped:

```
root@linux:/# nginx -s stop
root@linux:/# systemctl status nginx
● nginx.service - A high performance web server and a reverse proxy server
     Loaded: loaded (/lib/systemd/system/nginx.service; disabled; vendor preset: enabled)
     Active: inactive (dead)
       Docs: man:nginx(8)

Nov 19 14:03:19 linux systemd[1]: Starting A high performance web server and a reverse proxy server...
Nov 19 14:03:19 linux systemd[1]: Started A high performance web server and a reverse proxy server.
Nov 15 16:45:29 linux systemd[1]: nginx.service: Succeeded.
```

Running nginx -s reopen then fails with the following error:

```
root@linux:/# nginx -s reopen
nginx: [error] open() "/run/nginx.pid" failed (2: No such file or directory)
```

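This error is expected here. -s reopen only asks a running master process to reopen its log files. Since nginx has been stopped, /run/nginx.pid no longer exists, and reopen cannot start nginx. I also suspected that port 80 or 443 was occupied and preventing nginx from starting. So I killed the processes holding 80/443 and started nginx again with systemctl start nginx, which succeeded: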

```
root@linux:/# fuser -k 443/tcp
root@linux:/# fuser -k 80/tcp
80/tcp:              2539299 2539300 2539301 2539302 2539303
root@linux:/# systemctl start nginx
root@linux:/# systemctl status nginx
● nginx.service - A high performance web server and a reverse proxy server
     Loaded: loaded (/lib/systemd/system/nginx.service; disabled; vendor preset: enabled)
     Active: active (running) since Wed 2022-11-19 16:03:40 CST; 3s ago
       Docs: man:nginx(8)
    Process: 2539511 ExecStartPre=/usr/sbin/nginx -t -q -g daemon on; master_process on; (code=exited, status=0/SUCCESS)
    Process: 2539512 ExecStart=/usr/sbin/nginx -g daemon on; master_process on; (code=exited, status=0/SUCCESS)
   Main PID: 2539513 (nginx)
      Tasks: 5 (limit: 4549)
     Memory: 4.5M
     CGroup: /system.slice/nginx.service
             ├─2539513 nginx: master process /usr/sbin/nginx -g daemon on; master_process on;
             ├─2539514 nginx: worker process
             ├─2539515 nginx: worker process
             ├─2539516 nginx: worker process
             └─2539517 nginx: worker process
```

nginx -s reload

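nginx -s reload reloads the nginx configuration file (/etc/nginx/nginx.conf). For example, after you modify the configuration file, you must reload it for the changes to take effect. Below are the first 20 lines of the configuration file: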

```
root@linux:/# nginx -s reload
root@linux:/#
root@linux:/# head -20 /etc/nginx/nginx.conf
user www-data;
worker_processes auto;
pid /run/nginx.pid;
include /etc/nginx/modules-enabled/*.conf;

events {
    worker_connections 768;
    # multi_accept on;
}

http {

    ##
    # Basic Settings
    ##

    sendfile on;
    tcp_nopush on;
    tcp_nodelay on;
    keepalive_timeout 65;
```

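Tip: more nginx commands can be found here.

Configuration file structure

man nginx tells you where the nginx configuration file is. Mine is /etc/nginx/nginx.conf.

An nginx configuration file has 3 main parts:

- Global block: from the start of the file up to events. Directives here affect how nginx runs as a whole.

- events block: settings that affect the network connections between the nginx server and its users. Tune it to your workload.

- http block: the most frequently configured part. Proxying, caching, log definitions and most other features, as well as third-party module configuration, all go here. It in turn contains the http global block and server blocks.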

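```nginx
# Global block
user www-data;

# Number of worker processes (covered later under "How it works"). More workers can handle
# more concurrency; it's recommended to keep this equal to the number of CPUs on the server.
worker_processes auto;

...

# events block
events {
    # Each worker process handles at most 768 connections
    worker_connections 768;
    ...
}

# http block
http
{
    # http global block
    include mime.types;
    default_type text/html;
    gzip on;
    ...

    # server block (virtual host settings; one http block can contain multiple servers)
    server
    {
        # server global block
        ...

        location [PATTERN]  # location block (request routing and how each kind of page is handled)
        {
            ...
        }

        location [PATTERN]
        {
            ...
        }
    }

    server
    {
        ...
    }
}
```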
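To see this nesting (global block, events, http, server, location) in a real file, here is a small, hypothetical Python helper named `block_paths`. It walks the braces of a configuration string and lists every block together with its parents. It is a sketch for exploration only. It strips `#` comments but does not understand quoted strings, so a brace, semicolon or `#` inside quotes will confuse it.

```python
import re

def block_paths(conf: str) -> list:
    """Return every block in an nginx config as a path like 'http > server > location /'."""
    conf = re.sub(r"#[^\n]*", "", conf)  # drop comments up to the end of the line
    stack, paths, header = [], [], ""
    for ch in conf:
        if ch == "{":                     # text since the last ';', '{' or '}' names the block
            stack.append(" ".join(header.split()))
            paths.append(" > ".join(stack))
            header = ""
        elif ch == "}":                   # leave the current block
            stack.pop()
            header = ""
        elif ch == ";":                   # end of a simple directive such as 'listen 80;'
            header = ""
        else:
            header += ch
    return paths

sample = """
user www-data;
events { worker_connections 768; }
http {
    include mime.types;
    server {
        listen 80;
        location / { root html; }
        location = /50x.html { root html; }
    }
}
"""
for path in block_paths(sample):
    print(path)
```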

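Tip: a more detailed introduction can be found here.

nginx examples

Reverse proxy 1

Goal: access resources in tomcat through nginx.

Tip: this article uses two machines for testing:

- a Linux machine (Ubuntu 20.04) with nginx installed, IP 192.168.1.123
- a Windows machine, IP 192.168.1.135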

- 首先将 nginx 的配置文件重置

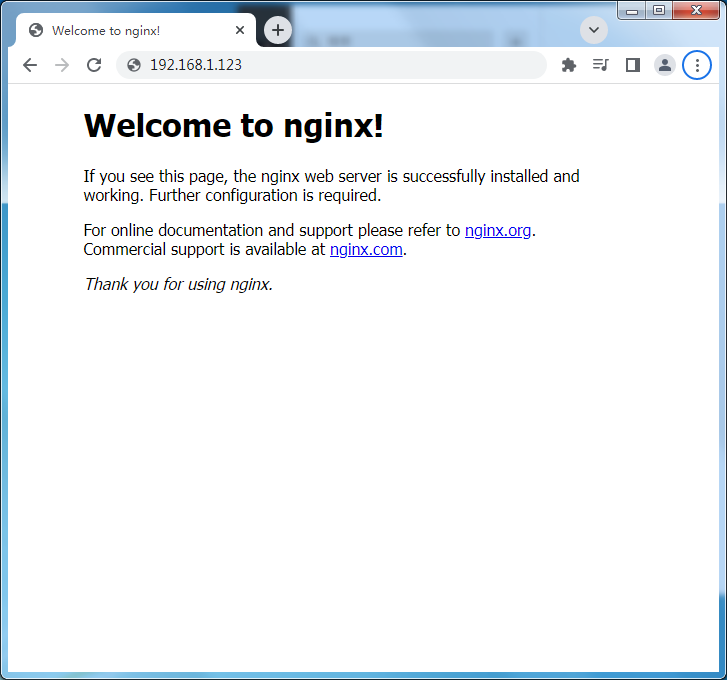

由于 nginx 已被其他同事安装使用,笔者直接从 runoob 拷贝一份配置文件替换 nginx.conf,然后重新加载(nginx -s reload)配置文件,再次通过浏览器访问安装 nginx 的服务器,web 页面显示Welcome to nginx!。就像这样:

以下是拷贝的 nginx 配置文件,许多用 # 注释的代码其实就是供我们参考的配置例子:

```nginx
#user nobody;
worker_processes 1;

#error_log logs/error.log;
#error_log logs/error.log notice;
#error_log logs/error.log info;

#pid logs/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include mime.types;
    default_type application/octet-stream;

    #log_format main '$remote_addr - $remote_user [$time_local] "$request" '
    #                '$status $body_bytes_sent "$http_referer" '
    #                '"$http_user_agent" "$http_x_forwarded_for"';

    #access_log logs/access.log main;

    sendfile on;
    #tcp_nopush on;

    #keepalive_timeout 0;
    keepalive_timeout 65;

    #gzip on;

    server {
        listen 80;
        server_name localhost;

        #charset koi8-r;

        #access_log logs/host.access.log main;

        location / {
            root html;
            index index.html index.htm;
        }

        #error_page 404 /404.html;

        # redirect server error pages to the static page /50x.html
        #
        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }

        # proxy the PHP scripts to Apache listening on 127.0.0.1:80
        #
        #location ~ \.php$ {
        #    proxy_pass http://127.0.0.1;
        #}

        # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
        #
        #location ~ \.php$ {
        #    root html;
        #    fastcgi_pass 127.0.0.1:9000;
        #    fastcgi_index index.php;
        #    fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;
        #    include fastcgi_params;
        #}

        # deny access to .htaccess files, if Apache's document root
        # concurs with nginx's one
        #
        #location ~ /\.ht {
        #    deny all;
        #}
    }

    # another virtual host using mix of IP-, name-, and port-based configuration
    #
    #server {
    #    listen 8000;
    #    listen somename:8080;
    #    server_name somename alias another.alias;

    #    location / {
    #        root html;
    #        index index.html index.htm;
    #    }
    #}

    # HTTPS server
    #
    #server {
    #    listen 443 ssl;
    #    server_name localhost;

    #    ssl_certificate cert.pem;
    #    ssl_certificate_key cert.key;

    #    ssl_session_cache shared:SSL:1m;
    #    ssl_session_timeout 5m;

    #    ssl_ciphers HIGH:!aNULL:!MD5;
    #    ssl_prefer_server_ciphers on;

    #    location / {
    #        root html;
    #        index index.html index.htm;
    #    }
    #}

}
```

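- On the Windows machine, use anywhere (a static file server you can start instantly) to run a service that stands in for tomcat.

Create a folder server1 containing an index.html with the following content: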

```html
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <meta http-equiv="X-UA-Compatible" content="IE=edge">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>Server1</title>
</head>
<body>
    Server1
</body>
</html>
```

Then install anywhere and start the service inside server1, like this:

```
# Initialize the project
$ npm init -y

# Install anywhere
$ npm i anywhere

# Start the service inside server1
Administrator@ /e/nginx/server1 (master)
$ npx anywhere
Running at http://192.168.235.1:8000/
Also running at https://192.168.235.1:8001/
```

Open the service at http://192.168.235.1:8000/ in the browser; the page shows Server1.

- Configure a proxy in nginx so that visiting nginx (http://192.168.1.123/) returns the resources of tomcat (http://192.168.1.135:8000/).

Open the configuration file, add proxy_pass http://192.168.1.135:8000;, and reload the configuration:

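```
# Open the configuration file
root@linux:/etc/nginx# vim nginx.conf
```

```nginx
# Edit the configuration file and save it
server {
    listen 80;
    server_name localhost;

    location / {
        root html;
        index index.html index.htm;
        # Newly added line: forward requests to the "tomcat" on the Windows machine
        proxy_pass http://192.168.1.135:8000;
    }

    # ... (rest of the server block unchanged)
}
```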

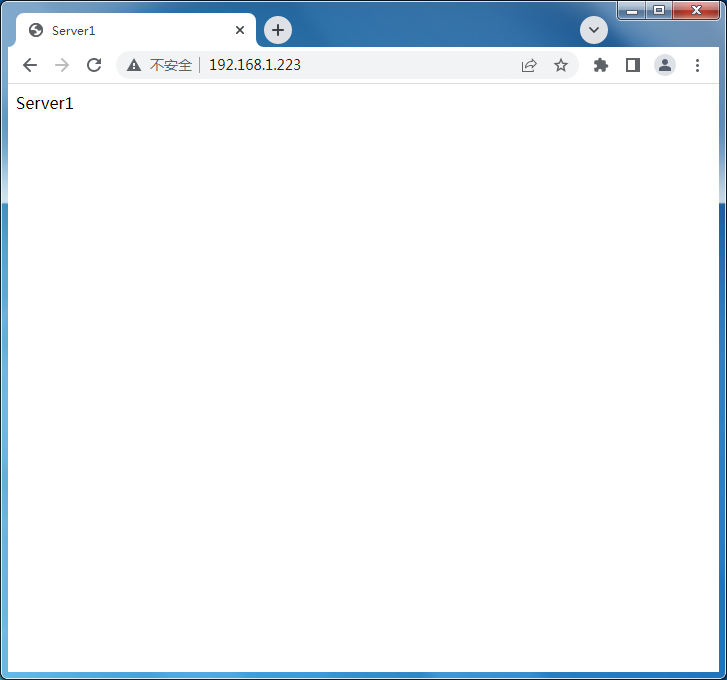

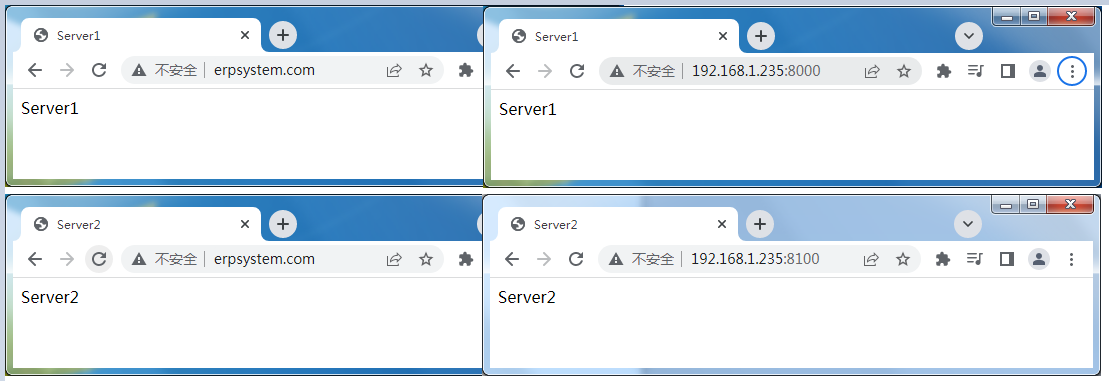

The proxy works. See the figure below:

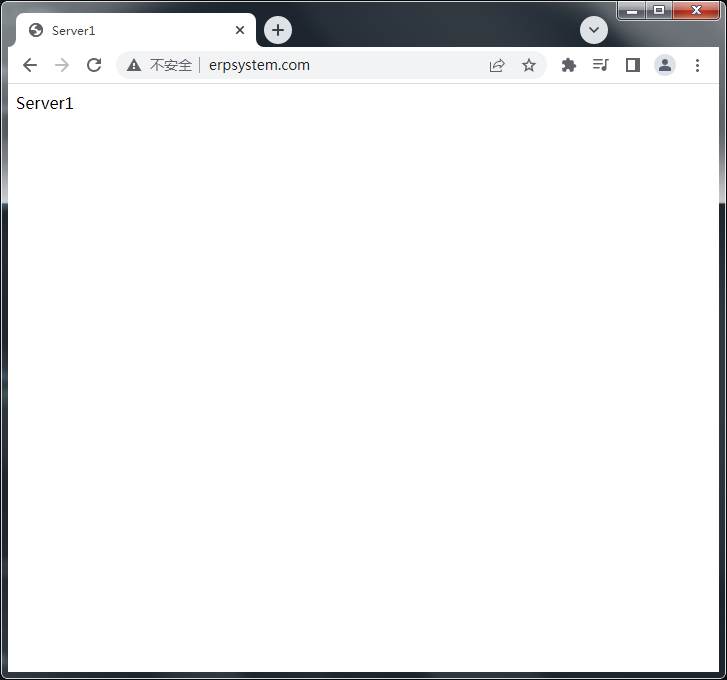

Suppose the site exposes only nginx to the outside world, at the URL http://www.erpsystem.com/, like this:

I simulated this by editing the hosts file on the Windows machine:

```
# Location of the hosts file on the Windows machine
Administrator@ /c/Windows/System32/drivers/etc
$ ll
total 33
-rw-r--r-- 1 Administrator 197121 862 Jul 15 2021 hosts

Administrator@ /c/Windows/System32/drivers/etc
$ tail -1 hosts
# The mapping line that was added:
192.168.1.123 www.erpsystem.com
```

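This completes the simulation of nginx as a reverse proxy: acting on behalf of the server, it hides the real server's IP from clients.

Tip: the following experiments build on this setup. For example, the load-balancing experiment adds a new site, server2, and starts it with anywhere on another port.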

反向代理2

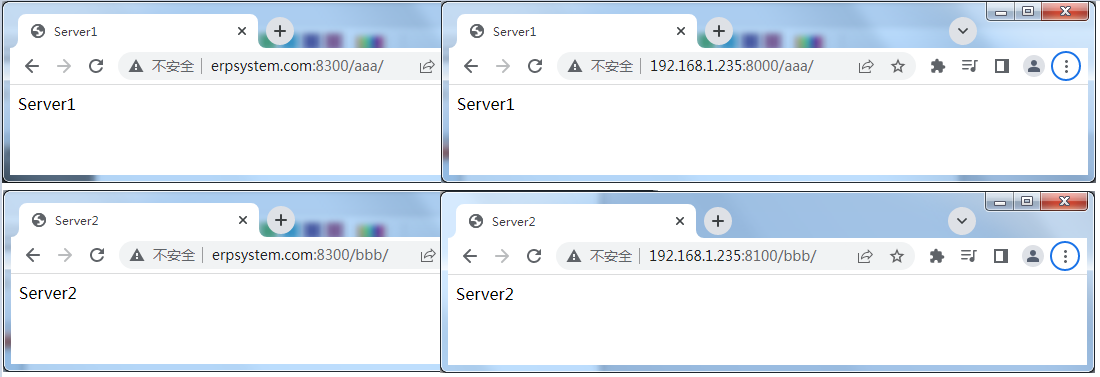

需求:nginx 根据不同的路径访问不同的服务。比如:

- 访问

http://www.erpsystem.com:8300/aaa/,返回 server1(tomcat1的资源) - 访问

http://www.erpsystem.com:8300/bbb/,返回 server2(tomcat2的资源)

修改 nginx 配置文件,增加两个 location 代码块:

```nginx
server {
    listen 8300;
    server_name localhost;

    # A request for http://www.erpsystem.com:8300/aaa/ is proxied to http://192.168.1.135:8000/aaa/
    # ~ and ~* mean regular-expression matching: ~ is case-sensitive, ~* is case-insensitive
    location ~ /aaa {
        root html;
        index index.html index.htm;
        proxy_pass http://192.168.1.135:8000;
    }

    location ~ /bbb {
        root html;
        index index.html index.htm;
        proxy_pass http://192.168.1.135:8100;
    }
}
```

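Then run nginx -s reload to reload the configuration file.

Adjust the directory structure of server1 (move index.html into a new aaa folder):

```
Administrator@ /e/nginx/server1/aaa (master)
$ ls
index.html
```

Create server2:

```
Administrator@ /e/nginx/server2/bbb (master)
$ ls
index.html
```

Note: index.html must be placed inside an aaa or bbb folder, otherwise the resource can't be found. This is because proxy_pass without a URI forwards the full original path (for example /aaa/) to the backend.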
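Why does server1's index.html have to live under aaa/? The minimal Python sketch below shows how this server picks a backend. It models only the two regex locations above and ignores prefix locations and the rest of nginx's matching order. Two consequences are worth knowing:

- `~ /aaa` also matches a URI such as /x/aaa, so `~ ^/aaa/` is the stricter choice.
- Inside a regex location, nginx does not allow proxy_pass to carry a URI part, so the original path is always forwarded as-is.

```python
import re

# The two regex locations from the 8300 server above. `~` is a case-sensitive
# regular expression, so "/aaa" matches anywhere in the URI, not only at the start.
LOCATIONS = [
    (re.compile(r"/aaa"), "http://192.168.1.135:8000"),
    (re.compile(r"/bbb"), "http://192.168.1.135:8100"),
]

def upstream_url(uri: str):
    """Return the URL nginx would request upstream, or None if no regex location matches."""
    for pattern, backend in LOCATIONS:  # regex locations are tried in file order
        if pattern.search(uri):
            # proxy_pass without a URI part forwards the original request URI unchanged
            return backend + uri
    return None

print(upstream_url("/aaa/"))            # http://192.168.1.135:8000/aaa/
print(upstream_url("/bbb/index.html"))  # http://192.168.1.135:8100/bbb/index.html
print(upstream_url("/AAA/"))            # None (case-sensitive match)
```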

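The test results are as follows:

Tip: if you request a resource that doesn't exist through nginx (the log below shows http://www.erpsystem.com:8300/ccc, for example), you can find the failure in the error log, like this: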

```
root@linux:/# tail -6 /var/log/nginx/error.log
2022/11/19 14:46:34 [notice] 4202#4202: signal process started
2022/11/19 14:51:06 [notice] 5168#5168: signal process started
2022/11/19 14:51:43 [error] 5169#5169: *62 open() "/usr/share/nginx/html/aaa" failed (2: No such file or directory), client: 192.168.1.135, server: localhost, request: "GET /aaa HTTP/1.1", host: "www.erpsystem.com:8300"
2022/11/19 14:52:09 [error] 5169#5169: *62 open() "/usr/share/nginx/html/aaa" failed (2: No such file or directory), client: 192.168.1.135, server: localhost, request: "GET /aaa HTTP/1.1", host: "www.erpsystem.com:8300"
2022/11/19 14:53:17 [notice] 5634#5634: signal process started
2022/11/19 14:55:03 [error] 5635#5635: *74 open() "/usr/share/nginx/html/ccc" failed (2: No such file or directory), client: 192.168.1.135, server: localhost, request: "GET /ccc HTTP/1.1", host: "www.erpsystem.com:8300"
```

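Load balancing

Goal: when http://www.erpsystem.com/ is visited repeatedly, half of the responses come from server1 and the other half from server2.

The result is shown in the figure below:

First, get server1 and server2 ready:

http://192.168.1.135:8000/ => the page shows: server1

http://192.168.1.135:8100/ => the page shows: server2

Modify the nginx configuration file:

- Add a load-balancing list that defines the two services

- Use that list in the proxy_pass of the location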

```nginx
http {
    # Load-balancing list
    upstream balancelist {
        server 192.168.1.135:8000;
        server 192.168.1.135:8100;
    }

    server {
        listen 80;
        server_name localhost;

        location / {
            root html;
            index index.html index.htm;
            # proxy_pass http://192.168.1.135:8000;
            # Reference the load-balancing list defined above by name
            proxy_pass http://balancelist;
        }
    }
}
```

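Then just reload the configuration: nginx -s reload

Load-balancing strategies

- Round robin (the default): requests are assigned to the backends one at a time, in order of arrival.

If a backend server goes down, it is taken out of rotation automatically. For example, when we requested www.erpsystem.com repeatedly above, the responses came back as server1, server2, server1, server2... After server2 was shut down, every request returned server1.

- Weight: the default is 1. The higher a server's weight, the more clients it is assigned.

For example, with weight=10 for server1 and weight=5 for server2, testing shows that server1 is returned about twice as often as server2: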

```nginx
# Load-balancing list
upstream balancelist {
    server 192.168.1.135:8000 weight=10;
    server 192.168.1.135:8100 weight=5;
}
```

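- ip_hash: each request is assigned according to a hash of the client IP. Each client therefore always reaches the same backend server, which solves the session problem.

For example, add the ip_hash directive: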

```nginx
# Load-balancing list
upstream balancelist {
    ip_hash;
    server 192.168.1.135:8000 weight=10;
    server 192.168.1.135:8100 weight=5;
}
```

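After reloading the configuration and visiting again, the first request returned server1, and every later request also returned server1. In other words, whichever server served you first keeps serving you.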

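- Third-party strategies: for example fair, which assigns requests by backend response time and gives priority to the servers that respond fastest.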

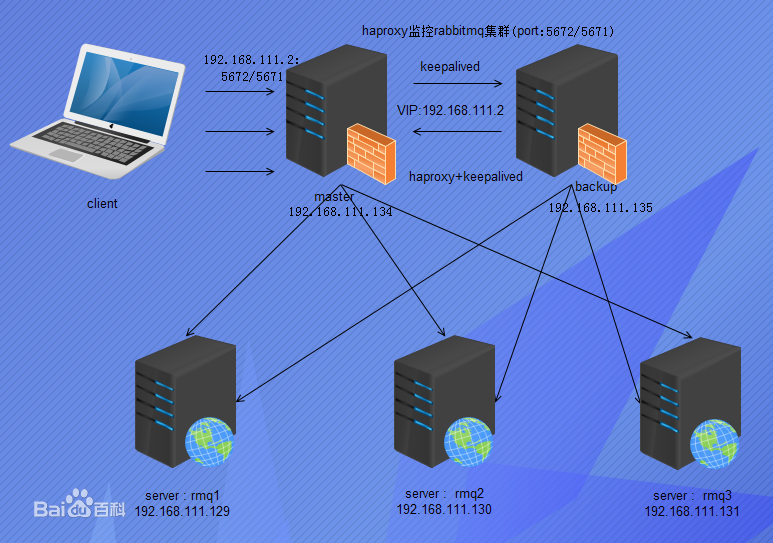
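The weight, ip_hash and fair strategies above can be simulated in Python for intuition. This is a sketch under stated assumptions, not nginx's source:

- `smooth_weighted_rr` follows the smooth weighted round-robin idea that nginx's upstream round-robin uses. It ignores the failure bookkeeping.
- `ip_hash_pick` keys on the first three IPv4 octets, as the nginx documentation describes for ip_hash. It uses SHA-256 instead of nginx's own hash and ignores weights.
- `least_avg_response` is a hypothetical stand-in for fair, not that module's actual algorithm.

```python
import hashlib
import ipaddress

def smooth_weighted_rr(weights: dict, n: int) -> list:
    """weight: smooth weighted round-robin over {server: weight}, returning n picks."""
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n):
        for name, weight in weights.items():
            current[name] += weight             # every server gains its weight
        best = max(current, key=current.get)    # the highest running total wins
        current[best] -= total                  # the winner pays back the total
        picks.append(best)
    return picks

def ip_hash_pick(client_ip: str, servers: list) -> str:
    """ip_hash: the same client network always maps to the same server.

    As in nginx, only the first three octets of an IPv4 address form the key;
    SHA-256 is an illustrative stand-in for nginx's own hash, and weights are ignored.
    """
    addr = ipaddress.ip_address(client_ip)
    key = addr.packed[:3] if addr.version == 4 else addr.packed
    digest = hashlib.sha256(key).digest()
    return servers[int.from_bytes(digest[:4], "big") % len(servers)]

def least_avg_response(servers: list, history: dict) -> str:
    """Hypothetical fair-like picker: the lowest average response time wins.

    history maps server -> list of observed response times in seconds;
    a server with no history counts as 0.0, so it gets tried first.
    """
    def average(server):
        times = history.get(server, [])
        return sum(times) / len(times) if times else 0.0
    return min(servers, key=average)

print(smooth_weighted_rr({"server1": 10, "server2": 5}, 6))
# ['server1', 'server2', 'server1', 'server1', 'server2', 'server1']
```

With weights 10 and 5, the picks interleave instead of sending ten requests in a row to server1. The ip_hash sketch also shows why one client kept getting the same server: every address in the same /24 network (such as 192.168.1.x) produces the same key.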

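High availability

We now load-balance through nginx. If, say, tomcat3 goes down, nginx removes it and the other tomcats keep serving.

However, if nginx itself goes down, the whole application becomes unavailable.

It would be ideal to have two nginx servers, so that when one fails, the other can take over.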

我们可以通过 Keepalived(类似于layer3, 4 & 5交换机制的软件) 来实现。就像这样:

上图有两个台 nginx 服务器,一个是 master 主(192.168.111.134),一个 backup 从(192.168.111.l35),两个服务器都安装上 keepalived,共同配置一个对外(对用户)的 虚拟ip 192.168.111.2。当主 nginx 挂掉后,从 backup 就会顶上。

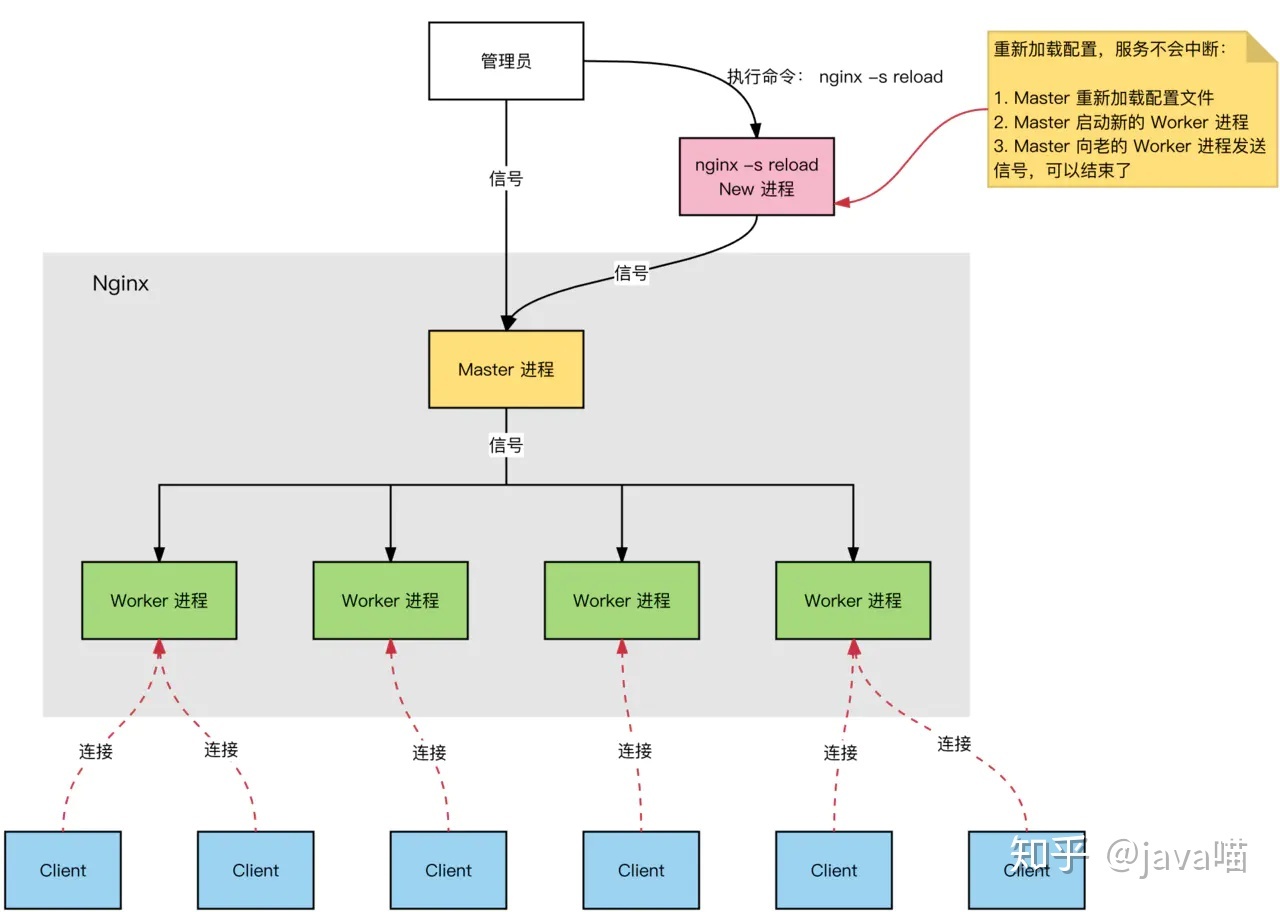
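Keepalived implements VRRP. The nodes advertise a priority, and the highest-priority node that is still alive holds the virtual IP. The election rule alone can be sketched in Python as below. The priority values 100 and 90 are hypothetical. Real keepalived also handles advertisement intervals, timeouts and health-check scripts, which this sketch ignores.

```python
from dataclasses import dataclass

VIRTUAL_IP = "192.168.111.2"  # the shared address users connect to

@dataclass
class Node:
    name: str
    ip: str
    priority: int        # hypothetical values; the higher priority wins the election
    healthy: bool = True

def vip_owner(nodes):
    """Return the node that should hold the virtual IP, or None if every node is down."""
    alive = [node for node in nodes if node.healthy]
    return max(alive, key=lambda node: node.priority) if alive else None

nodes = [Node("master", "192.168.111.134", 100), Node("backup", "192.168.111.135", 90)]
print(VIRTUAL_IP, "->", vip_owner(nodes).ip)  # 192.168.111.2 -> 192.168.111.134
nodes[0].healthy = False                      # the master nginx goes down
print(VIRTUAL_IP, "->", vip_owner(nodes).ip)  # 192.168.111.2 -> 192.168.111.135
```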

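How it works

First, look at the figure below (from java喵):

After nginx starts, you can see one master process and several worker processes. When requests come in, it is as if the master says "workers, there's work to do!" and the workers compete for it. Strictly speaking, the workers accept connections on the shared listening sockets themselves. The master reads the configuration and manages the workers.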

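Having one master and multiple workers brings these benefits:

- Easy hot deployment. When you run nginx -s reload, the master starts new workers with the new configuration. The old workers shut down gracefully once they finish their current requests, so service is not interrupted.

- Each worker is an independent process. If one worker has a problem, the other workers keep competing for requests and the service is not interrupted.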

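Q: How many workers is appropriate?

A: Set the number of workers equal to the number of CPU cores on the server. Too few leaves resources unused, and too many causes frequent CPU context switching. For example, on a 4-core machine you can set worker_processes 4;.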
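The one-worker-per-core rule can be computed directly. Below is a minimal Python sketch using `os.cpu_count()`, which counts logical CPUs and may return None, hence the fallback to 1. nginx applies the same idea itself with `worker_processes auto;`.

```python
import os

def suggested_worker_processes() -> int:
    """One worker per logical CPU; fall back to 1 when the count is unknown."""
    return os.cpu_count() or 1

print(f"worker_processes {suggested_worker_processes()};")
```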

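Q: What is nginx's maximum number of connections?

A: The number of workers times the number of connections per worker, i.e. worker_processes * worker_connections.

Q: How many connections does one request use?

A: 2 or 4. For a static resource the worker responds directly: receiving the request takes one connection and returning the response takes another. If the request needs a database query, the worker also has to talk to tomcat, which adds another send and another receive, so 4.

Q: With one master, 8 worker_processes and worker_connections set to 1024, what is the maximum concurrency?

A: The maximum number of connections divided by 2 or 4. For static content it's (8 * 1024) / 2 = 4096; otherwise it's (8 * 1024) / 4 = 2048.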
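The arithmetic in the last three answers can be written out as follows. The 2-or-4 connections per request factor is the rule of thumb from the answers above, not a value nginx reports.

```python
def max_connections(worker_processes: int, worker_connections: int) -> int:
    """Upper bound on simultaneous connections: workers times connections per worker."""
    return worker_processes * worker_connections

def max_concurrency(worker_processes: int, worker_connections: int, static: bool) -> int:
    """Rule of thumb: a static request uses 2 connections, a proxied one uses 4."""
    per_request = 2 if static else 4
    return max_connections(worker_processes, worker_connections) // per_request

print(max_concurrency(8, 1024, static=True))   # 4096
print(max_concurrency(8, 1024, static=False))  # 2048
```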

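Source: https://www.cnblogs.com/pengjiali/p/16909227.html

The copyright of this article is shared by the author and cnblogs. Reposting is welcome, but without the author's consent this notice must be kept and a link to the original article must be given in a prominent place on the page.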
