HW3

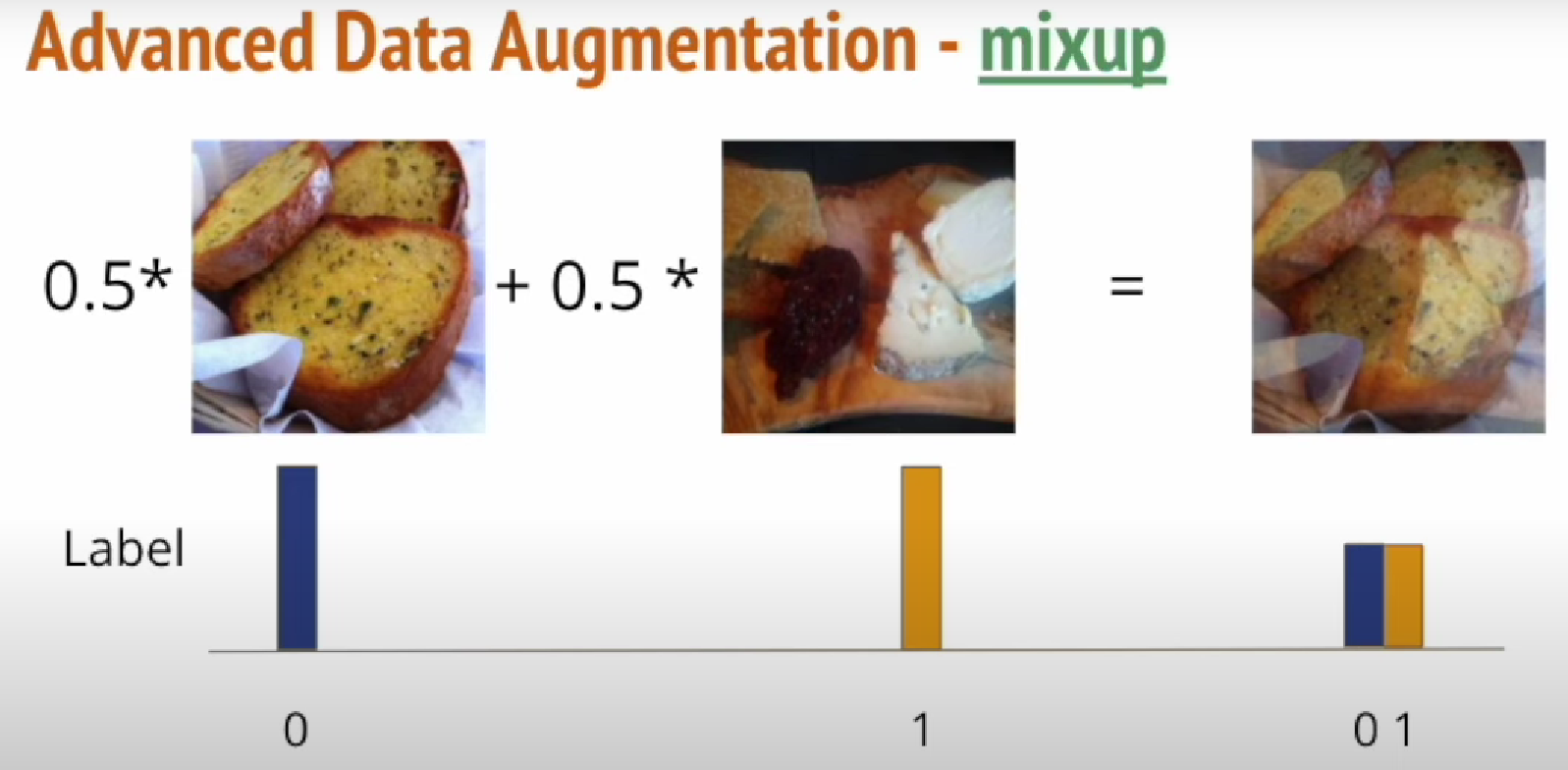

一种新的数据增强的方式mixup:

文件夹结构:

food11:

- training

calss_num.jpg

- validation

class_num.jpg

数据增强:

import torchvision.transforms as transforms # Normally, We don't need augmentations in testing and validation. # All we need here is to resize the PIL image and transform it into Tensor. test_tfm = transforms.Compose([ transforms.Resize((128, 128)), transforms.ToTensor(), ]) # However, it is also possible to use augmentation in the testing phase. # You may use train_tfm to produce a variety of images and then test using ensemble methods train_tfm = transforms.Compose([ # Resize the image into a fixed shape (height = width = 128) transforms.Resize((128, 128)), # You may add some transforms here. # ToTensor() should be the last one of the transforms. transforms.ToTensor(), ])

构建Dataset:

from PIL import Image import os class FoodDataset(Dataset): def __init__(self, path, tfm=test_tfm, files=None): super(FoodDataset).__init__() self.path = path self.files = sorted([os.path.join(path, x) for x in os.listdir(path) if x.endswith(".jpg")]) if files != None: self.files = files print(f"One {path} sample", self.files[0]) self.transform = tfm def __len__(self): return len(self.files) def __getitem__(self, idx): fname = self.files[idx] im = Image.open(fname) im = self.transform(im) # im = self.data[idx] try: label = int(fname.split("/")[-1].split("_")[0]) except: label = -1 # test has no label return im, label _dataset_dir = "./food11" train_set = FoodDataset(os.path.join(_dataset_dir, "training"), tfm=train_tfm) valid_set = FoodDataset(os.path.join(_dataset_dir, "validation"), tfm=test_tfm)

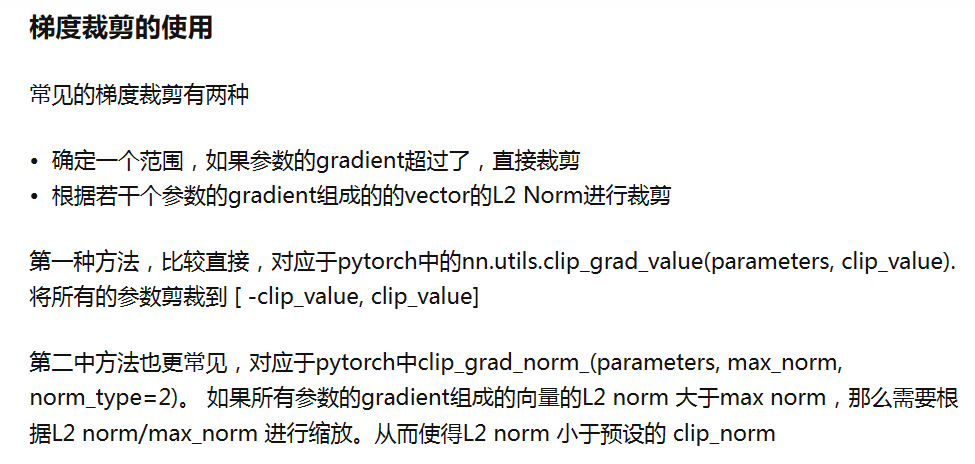

梯度裁剪:(放在反向传播和更新参数之间)

# "cuda" only when GPUs are available. device = "cuda" if torch.cuda.is_available() else "cpu" # The number of training epochs and patience. n_epochs = 3 patience = 300 # If no improvement in 'patience' epochs, early stop # Initialize a model, and put it on the device specified. model = Classifier().to(device) # For the classification task, we use cross-entropy as the measurement of performance. criterion = nn.CrossEntropyLoss() # Initialize optimizer, you may fine-tune some hyperparameters such as learning rate on your own. optimizer = torch.optim.Adam(model.parameters(), lr=0.0003, weight_decay=1e-5) # Initialize trackers, these are not parameters and should not be changed stale = 0 best_acc = 0 for epoch in range(n_epochs): # ---------- Training ---------- # Make sure the model is in train mode before training. model.train() # These are used to record information in training. train_loss = [] train_accs = [] for batch in tqdm(train_loader): # A batch consists of image data and corresponding labels. imgs, labels = batch #imgs = imgs.half() #print(imgs.shape,labels.shape) # Forward the data. (Make sure data and model are on the same device.) logits = model(imgs.to(device)) # Calculate the cross-entropy loss. # We don't need to apply softmax before computing cross-entropy as it is done automatically. loss = criterion(logits, labels.to(device)) # Gradients stored in the parameters in the previous step should be cleared out first. optimizer.zero_grad() # Compute the gradients for parameters. loss.backward() # Clip the gradient norms for stable training. grad_norm = nn.utils.clip_grad_norm_(model.parameters(), max_norm=10) # Update the parameters with computed gradients. optimizer.step() # Compute the accuracy for current batch. acc = (logits.argmax(dim=-1) == labels.to(device)).float().mean() # Record the loss and accuracy. train_loss.append(loss.item()) train_accs.append(acc) train_loss = sum(train_loss) / len(train_loss) train_acc = sum(train_accs) / len(train_accs) # Print the information. print(f"[ Train | {epoch + 1:03d}/{n_epochs:03d} ] loss = {train_loss:.5f}, acc = {train_acc:.5f}") # ---------- Validation ---------- # Make sure the model is in eval mode so that some modules like dropout are disabled and work normally. model.eval() # These are used to record information in validation. valid_loss = [] valid_accs = [] # Iterate the validation set by batches. for batch in tqdm(valid_loader): # A batch consists of image data and corresponding labels. imgs, labels = batch #imgs = imgs.half() # We don't need gradient in validation. # Using torch.no_grad() accelerates the forward process. with torch.no_grad(): logits = model(imgs.to(device)) # We can still compute the loss (but not the gradient). loss = criterion(logits, labels.to(device)) # Compute the accuracy for current batch. acc = (logits.argmax(dim=-1) == labels.to(device)).float().mean() # Record the loss and accuracy. valid_loss.append(loss.item()) valid_accs.append(acc) #break # The average loss and accuracy for entire validation set is the average of the recorded values. valid_loss = sum(valid_loss) / len(valid_loss) valid_acc = sum(valid_accs) / len(valid_accs) # Print the information. print(f"[ Valid | {epoch + 1:03d}/{n_epochs:03d} ] loss = {valid_loss:.5f}, acc = {valid_acc:.5f}") # update logs if valid_acc > best_acc: with open(f"./{_exp_name}_log.txt","a"): print(f"[ Valid | {epoch + 1:03d}/{n_epochs:03d} ] loss = {valid_loss:.5f}, acc = {valid_acc:.5f} -> best") else: with open(f"./{_exp_name}_log.txt","a"): print(f"[ Valid | {epoch + 1:03d}/{n_epochs:03d} ] loss = {valid_loss:.5f}, acc = {valid_acc:.5f}") # save models if valid_acc > best_acc: print(f"Best model found at epoch {epoch}, saving model") torch.save(model.state_dict(), f"{_exp_name}_best.ckpt") # only save best to prevent output memory exceed error best_acc = valid_acc stale = 0 else: stale += 1 if stale > patience: print(f"No improvment {patience} consecutive epochs, early stopping") break

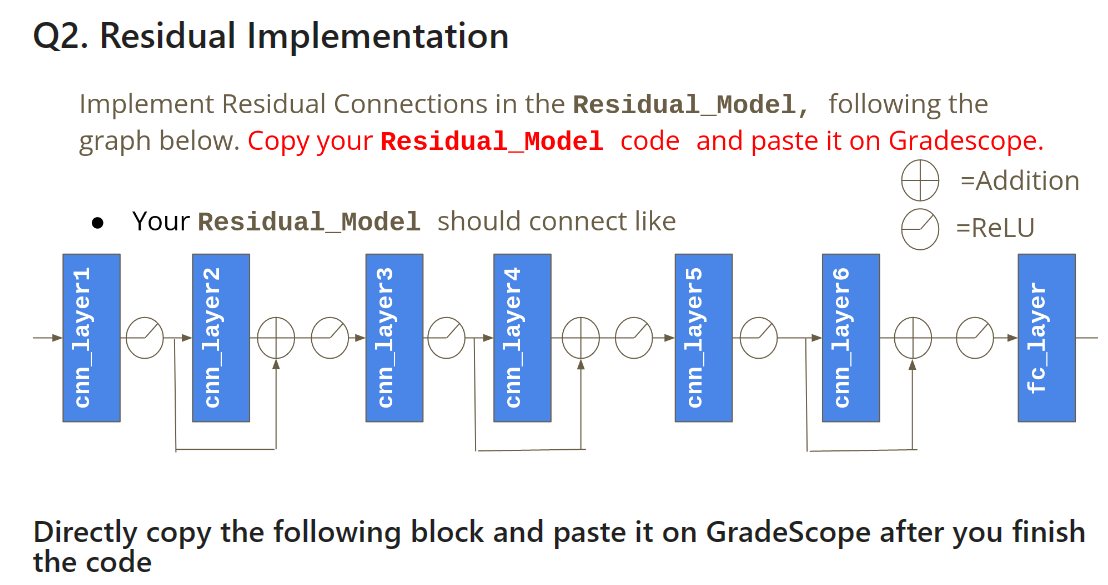

实现Residual Network:

import torch from torch import nn class Residual_Network(nn.Module): def __init__(self): super(Residual_Network, self).__init__() self.cnn_layer1 = nn.Sequential( nn.Conv2d(3, 64, 3, 1, 1), nn.BatchNorm2d(64), ) self.cnn_layer2 = nn.Sequential( nn.Conv2d(64, 64, 3, 1, 1), nn.BatchNorm2d(64), ) self.cnn_layer3 = nn.Sequential( nn.Conv2d(64, 128, 3, 2, 1), nn.BatchNorm2d(128), ) self.cnn_layer4 = nn.Sequential( nn.Conv2d(128, 128, 3, 1, 1), nn.BatchNorm2d(128), ) self.cnn_layer5 = nn.Sequential( nn.Conv2d(128, 256, 3, 2, 1), nn.BatchNorm2d(256), ) self.cnn_layer6 = nn.Sequential( nn.Conv2d(256, 256, 3, 1, 1), nn.BatchNorm2d(256), ) self.fc_layer = nn.Sequential( nn.Linear(256 * 32 * 32, 256), nn.ReLU(), nn.Linear(256, 11) ) self.relu = nn.ReLU() def forward(self, x): # input (x): [batch_size, 3, 128, 128] # output: [batch_size, 11] # Extract features by convolutional layers. x1 = self.cnn_layer1(x) x1 = self.relu(x1) print(x1.shape) x2 = self.cnn_layer2(x1) x2 = self.relu(x2 + x1) # residual connection print(x2.shape) x3 = self.cnn_layer3(x2) x3 = self.relu(x3) print(x3.shape) x4 = self.cnn_layer4(x3) x4 = self.relu(x4 + x3) # residual connection print(x4.shape) x5 = self.cnn_layer5(x4) x5 = self.relu(x5) print(x5.shape) x6 = self.cnn_layer6(x5) x6 = self.relu(x6 + x5) # residual connection print(x6.shape) # The extracted feature map must be flatten before going to fully-connected layers. xout = x6.flatten(1) print(xout.shape) # The features are transformed by fully-connected layers to obtain the final logits. xout = self.fc_layer(xout) return xout x = torch.arange(12*3*128*128).reshape(12, 3, 128, 128) x = x.float() model = Residual_Network() model(x)

浙公网安备 33010602011771号

浙公网安备 33010602011771号