Machine Learning Engineer - Udacity Machine Learning Capstone Project: Arithmetic Expression Recognition

```python
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
from sklearn.model_selection import train_test_split

# Jupyter magic: show figures inline (only valid inside a notebook)
%matplotlib inline

# Label-length statistics: draw a {value: count} dict as a horizontal bar chart
def draw_hist(data, title):
    plt.style.use('ggplot')
    ax = pd.Series(data).plot(kind='barh')
    ax.get_figure().tight_layout()
    plt.title(title)
    plt.show()

data_csv = pd.read_csv('F:/train.csv')
img_dirpath = data_csv['filename'].tolist()   # image file names, relative to F:/
label = data_csv['label'].tolist()            # expression string drawn in each image

# Split off 10,000 validation samples, then 10,000 test samples from the remainder
X_data, X_valid, y_data, y_valid = train_test_split(img_dirpath, label, test_size=10000, random_state=17)
X_train, X_test, y_train, y_test = train_test_split(X_data, y_data, test_size=10000, random_state=17)
```

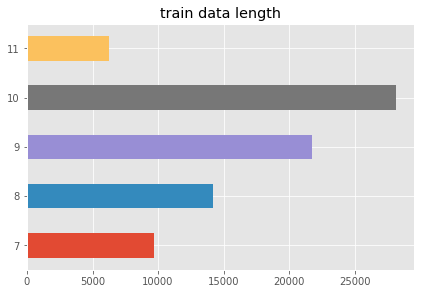

```python
# Loop variable renamed from `str`, which shadowed the built-in
list_length_train = pd.Series([len(text) for text in y_train]).value_counts().to_dict()
draw_hist(list_length_train, 'train data length')
```

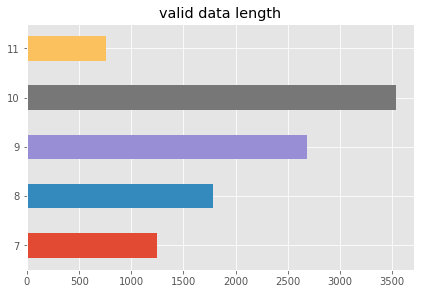

```python
list_length_valid = pd.Series([len(text) for text in y_valid]).value_counts().to_dict()
draw_hist(list_length_valid, 'valid data length')
```

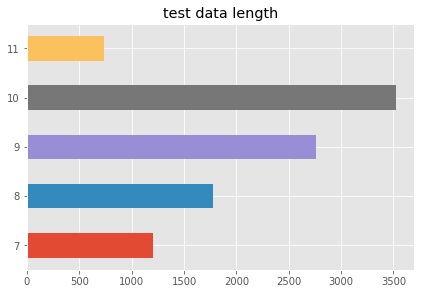

```python
list_length_test = pd.Series([len(text) for text in y_test]).value_counts().to_dict()
draw_hist(list_length_test, 'test data length')
```

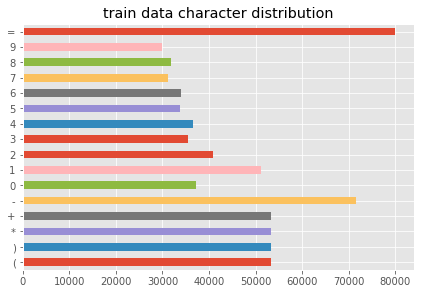
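The three length cells above repeat the same counting pattern. A minimal sketch of one reusable helper, assuming the labels are plain strings; `length_distribution` is a hypothetical name, and its result is a `{length: count}` dict that could be passed to `draw_hist` unchanged.

```python
from collections import Counter


def length_distribution(labels):
    """Map each label length to the number of labels with that length."""
    return dict(Counter(len(text) for text in labels))


# Hand-made labels, for illustration only
sample = ["1+2=3", "(3*4)-5=7", "9-8=1"]
print(length_distribution(sample))  # {5: 2, 9: 1}
```

Usage would then be, for example, `draw_hist(length_distribution(y_train), 'train data length')`.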

```python
# Character distribution
from collections import defaultdict

sum_character_train = defaultdict(int)
for text in y_train:
    for character in text:
        sum_character_train[character] += 1
sum_character_train = dict(sum_character_train)
draw_hist(sum_character_train, 'train data character distribution')
```

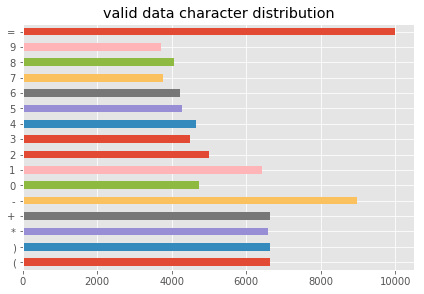

```python
sum_character_valid = defaultdict(int)
for text in y_valid:
    for character in text:
        sum_character_valid[character] += 1
sum_character_valid = dict(sum_character_valid)
draw_hist(sum_character_valid, 'valid data character distribution')
```

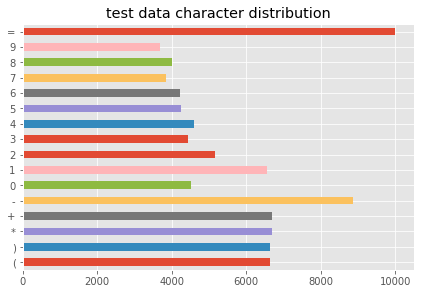

```python
sum_character_test = defaultdict(int)
for text in y_test:
    for character in text:
        sum_character_test[character] += 1
sum_character_test = dict(sum_character_test)
draw_hist(sum_character_test, 'test data character distribution')
```
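The character counts can likewise be computed with one helper, and the same pass is a convenient place to check that every character in the labels is covered by the recognition alphabet. A sketch under that assumption; `character_distribution` and `unknown_characters` are hypothetical names, and the alphabet is passed in as a parameter rather than read from the notebook.

```python
from collections import Counter
from itertools import chain


def character_distribution(labels):
    """Count how often each character occurs across all labels."""
    return dict(Counter(chain.from_iterable(labels)))


def unknown_characters(labels, vocabulary):
    """Characters that occur in the labels but are missing from the vocabulary."""
    return set(chain.from_iterable(labels)) - set(vocabulary)


sample = ["1+2=3", "9-8=1"]  # hand-made labels, for illustration only
counts = character_distribution(sample)
print(counts["1"], counts["="])  # 2 2
print(unknown_characters(sample, "0123456789+-*()=a"))  # set()
```

A non-empty result from `unknown_characters` would mean `text_to_labels` fails on that label during training.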

# All characters to be recognized

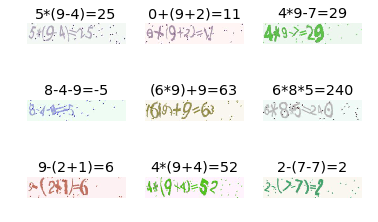

```python
# Index 16 ('a') is the value TextImageGenerator pads label rows with
CHAR_VECTOR = "0123456789+-*()=a"
letters = list(CHAR_VECTOR)

# Convert a sequence of indices into a string
def labels_to_text(labels):
    return ''.join(letters[int(x)] for x in labels)

# Convert a string into a list of indices
def text_to_labels(text):
    return [letters.index(x) for x in text]
```
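`letters.index` scans the list for every character. A minimal alternative sketch, assuming the same `CHAR_VECTOR`, precomputes a character-to-index dict; `CHAR_TO_INDEX` is a hypothetical name. With `num_classes = len(letters) + 1 = 18`, the extra class 17 is left for the CTC blank, which Keras' `ctc_batch_cost` takes to be the last class.

```python
CHAR_VECTOR = "0123456789+-*()=a"
# Lookup table built once: character -> class index
CHAR_TO_INDEX = {ch: i for i, ch in enumerate(CHAR_VECTOR)}


def text_to_labels(text):
    """Encode a string as class indices (raises KeyError on unknown characters)."""
    return [CHAR_TO_INDEX[ch] for ch in text]


def labels_to_text(labels):
    """Decode class indices (ints or floats, as stored in Y_data) back to a string."""
    return "".join(CHAR_VECTOR[int(i)] for i in labels)


encoded = text_to_labels("(1+2)*3=9")
print(encoded)                  # [13, 1, 10, 2, 14, 12, 3, 15, 9]
print(labels_to_text(encoded))  # (1+2)*3=9
```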

```python
import random

import cv2
from tqdm import tqdm

class TextImageGenerator:
    def __init__(self, rootpath, img_dirpath, label, img_w, img_h, batch_size, downsample_factor, max_text_len):
        self.rootpath = rootpath            # root directory of the image files
        self.img_dirpath = img_dirpath      # image file names relative to rootpath
        self.label = label                  # expression string for each image
        self.img_w = img_w
        self.img_h = img_h
        self.batch_size = batch_size
        self.downsample_factor = downsample_factor
        self.max_text_len = max_text_len
        self.n = len(self.img_dirpath)      # number of images
        self.indexes = list(range(self.n))
        self.cur_index = 0
        # float32 halves the memory of the default float64 buffer
        self.imgs = np.zeros((self.n, self.img_h, self.img_w), dtype=np.float32)
        self.texts = []

    def build_data(self):
        # Load every image as grayscale, resize it and scale pixels to [-1, 1]
        for i, img_file in enumerate(tqdm(self.img_dirpath)):
            img = cv2.imread(self.rootpath + img_file, cv2.IMREAD_GRAYSCALE)
            if img is None:
                raise FileNotFoundError(self.rootpath + img_file)
            img = cv2.resize(img, (self.img_w, self.img_h))  # cv2 takes (width, height)
            img = img.astype(np.float32)
            self.imgs[i, :, :] = (img / 255.0) * 2.0 - 1.0
            self.texts.append(self.label[i])
        print(self.n, "images loaded")

    def next_sample(self):
        # Return the current sample, then advance; reshuffle after each full pass
        idx = self.indexes[self.cur_index]
        self.cur_index += 1
        if self.cur_index >= self.n:
            self.cur_index = 0
            random.shuffle(self.indexes)
        return self.imgs[idx], self.texts[idx]

    def next_batch(self):
        while True:
            X_data = np.ones([self.batch_size, self.img_w, self.img_h, 1])
            Y_data = np.empty([self.batch_size, self.max_text_len])
            # Time steps seen by CTC: width after pooling, minus the 2 outputs dropped in ctc_lambda_func
            input_length = np.ones((self.batch_size, 1)) * (self.img_w // self.downsample_factor - 2)
            label_length = np.zeros((self.batch_size, 1))

            for i in range(self.batch_size):
                img, text = self.next_sample()
                X_data[i] = np.expand_dims(img.T, -1)  # (h, w) -> (w, h, 1): width becomes the time axis
                labels = text_to_labels(text)
                Y_data[i, :len(labels)] = labels
                Y_data[i, len(labels):] = 16           # pad up to max_text_len with the index of 'a'
                label_length[i] = len(text)

            inputs = {
                'the_input': X_data,
                'the_labels': Y_data,
                'input_length': input_length,
                'label_length': label_length,
            }
            # Dummy targets: the 'ctc' output of the model already is the loss
            outputs = {'ctc': np.zeros([self.batch_size])}
            yield (inputs, outputs)
```
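`next_batch` pads every label row to `max_text_len` and records the true length separately. As far as I understand `K.ctc_batch_cost`, only the first `label_length` entries of each row are read, so the padding value itself does not enter the loss. A pure-Python sketch of that padding step, with an explicit length check; `pad_labels`, `PAD_INDEX` and `MAX_TEXT_LEN` are hypothetical names chosen to mirror the values used above.

```python
PAD_INDEX = 16     # index of 'a' in CHAR_VECTOR, the padding value written by next_batch
MAX_TEXT_LEN = 12  # same value as max_text_len in the training cell


def pad_labels(encoded_labels, max_text_len=MAX_TEXT_LEN, pad_index=PAD_INDEX):
    """Return (rows, lengths): each label padded to max_text_len, plus its true length."""
    rows, lengths = [], []
    for labels in encoded_labels:
        if len(labels) > max_text_len:
            raise ValueError(f"label length {len(labels)} exceeds max_text_len={max_text_len}")
        rows.append(list(labels) + [pad_index] * (max_text_len - len(labels)))
        lengths.append(len(labels))
    return rows, lengths


# "1+2=3" and "(1)=1" encoded with CHAR_VECTOR
rows, lengths = pad_labels([[1, 10, 2, 15, 3], [13, 1, 14, 15, 1]])
print(rows[0])   # [1, 10, 2, 15, 3, 16, 16, 16, 16, 16, 16, 16]
print(lengths)   # [5, 5]
```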

```python
from keras import backend as K

def ctc_lambda_func(args):
    y_pred, labels, input_length, label_length = args
    # The 2 is critical here, since the first couple of RNN outputs tend to be garbage;
    # the generator's input_length is reduced by 2 to match
    y_pred = y_pred[:, 2:, :]
    return K.ctc_batch_cost(labels, y_pred, input_length, label_length)
```
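The generator passes `input_length = img_w // downsample_factor - 2 = 128 // 4 - 2 = 30` frames to the loss. CTC can only align a label if it has at least one frame per character plus one blank frame between every pair of identical neighbouring characters, so the longest allowed label (12 characters, all identical) needs 2 × 12 − 1 = 23 frames, which fits in 30. A small sketch of that bound; `rnn_time_steps` and `min_ctc_steps` are hypothetical helper names.

```python
def rnn_time_steps(img_w, downsample_factor, dropped_steps=2):
    """Frames left for CTC after CNN downsampling and dropping the first RNN outputs."""
    return img_w // downsample_factor - dropped_steps


def min_ctc_steps(label):
    """Minimum frames CTC needs: one per character, plus a blank between identical neighbours."""
    repeats = sum(1 for a, b in zip(label, label[1:]) if a == b)
    return len(label) + repeats


steps = rnn_time_steps(128, 4)
print(steps)                         # 30
print(min_ctc_steps("11+1=12"))      # 8
print(min_ctc_steps("1" * 12) <= steps)  # True
```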

```python
from keras.layers import *
from keras.models import *

def get_model(img_w, img_h, num_classes, training):
    input_shape = (img_w, img_h, 1)  # (128, 64, 1): width first, so width becomes the time axis

    # Build the network
    inputs = Input(name='the_input', shape=input_shape, dtype='float32')  # (None, 128, 64, 1)

    # Convolution layers (VGG-style)
    inner = Conv2D(64, (3, 3), padding='same', name='conv1', kernel_initializer='he_normal')(inputs)  # (None, 128, 64, 64)
    inner = BatchNormalization()(inner)
    inner = Activation('relu')(inner)
    inner = MaxPooling2D(pool_size=(2, 2), name='max1')(inner)  # (None, 64, 32, 64)
    inner = Dropout(0.2)(inner)

    inner = Conv2D(128, (3, 3), padding='same', name='conv2', kernel_initializer='he_normal')(inner)  # (None, 64, 32, 128)
    inner = BatchNormalization()(inner)
    inner = Activation('relu')(inner)
    inner = MaxPooling2D(pool_size=(2, 2), name='max2')(inner)  # (None, 32, 16, 128)
    inner = Dropout(0.2)(inner)

    inner = Conv2D(256, (3, 3), padding='same', name='conv3', kernel_initializer='he_normal')(inner)  # (None, 32, 16, 256)
    inner = BatchNormalization()(inner)
    inner = Activation('relu')(inner)
    inner = Conv2D(256, (3, 3), padding='same', name='conv4', kernel_initializer='he_normal')(inner)  # (None, 32, 16, 256)
    inner = BatchNormalization()(inner)
    inner = Activation('relu')(inner)
    inner = MaxPooling2D(pool_size=(1, 2), name='max3')(inner)  # (None, 32, 8, 256)
    inner = Dropout(0.2)(inner)

    # Optional extra block (disabled); enabling it would halve the height again,
    # so the Reshape below would have to become (32, 1024)
    # inner = Conv2D(512, (3, 3), padding='same', name='conv5', kernel_initializer='he_normal')(inner)  # (None, 32, 8, 512)
    # inner = BatchNormalization()(inner)
    # inner = Activation('relu')(inner)
    # inner = Conv2D(512, (3, 3), padding='same', name='conv6')(inner)  # (None, 32, 8, 512)
    # inner = BatchNormalization()(inner)
    # inner = Activation('relu')(inner)
    # inner = MaxPooling2D(pool_size=(1, 2), name='max4')(inner)  # (None, 32, 4, 512)
    # inner = Dropout(0.2)(inner)

    inner = Conv2D(256, (2, 2), padding='same', kernel_initializer='he_normal', name='con7')(inner)  # (None, 32, 8, 256)
    inner = BatchNormalization()(inner)
    inner = Activation('relu')(inner)

    # CNN to RNN: 32 time steps with 8 * 256 = 2048 features each
    inner = Reshape(target_shape=(32, 2048), name='reshape')(inner)  # (None, 32, 2048)
    inner = Dense(64, activation='relu', kernel_initializer='he_normal', name='dense1')(inner)  # (None, 32, 64)

    # RNN layers (GRUs, although named lstm*).
    # Note: go_backwards=True returns its outputs in reversed time order and they are merged
    # as-is; wrapping the layer in Bidirectional(GRU(...)) would re-align them before merging.
    lstm_1 = GRU(256, return_sequences=True, kernel_initializer='he_normal', name='lstm1')(inner)  # (None, 32, 256)
    lstm_1b = GRU(256, return_sequences=True, go_backwards=True, kernel_initializer='he_normal', name='lstm1_b')(inner)  # (None, 32, 256)
    lstm1_merged = add([lstm_1, lstm_1b])  # (None, 32, 256)
    lstm1_merged = BatchNormalization()(lstm1_merged)

    lstm_2 = GRU(256, return_sequences=True, kernel_initializer='he_normal', name='lstm2')(lstm1_merged)
    lstm_2b = GRU(256, return_sequences=True, go_backwards=True, kernel_initializer='he_normal', name='lstm2_b')(lstm1_merged)
    lstm2_merged = concatenate([lstm_2, lstm_2b])  # (None, 32, 512)
    lstm2_merged = Dropout(0.2)(lstm2_merged)

    # Transform the RNN output into per-character activations
    inner = Dense(num_classes, kernel_initializer='he_normal', name='dense2')(lstm2_merged)  # (None, 32, 18)
    y_pred = Activation('softmax', name='softmax')(inner)

    # max_text_len is the global defined in the training cell
    labels = Input(name='the_labels', shape=[max_text_len], dtype='float32')  # (None, 12)
    input_length = Input(name='input_length', shape=[1], dtype='int64')  # (None, 1)
    label_length = Input(name='label_length', shape=[1], dtype='int64')  # (None, 1)

    # Keras loss functions cannot take extra inputs, so the CTC loss is computed in a Lambda layer
    loss_out = Lambda(ctc_lambda_func, output_shape=(1,), name='ctc')([y_pred, labels, input_length, label_length])  # (None, 1)

    if training:
        return Model(inputs=[inputs, labels, input_length, label_length], outputs=loss_out)
    return Model(inputs=[inputs], outputs=y_pred)
```
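The hard-coded `Reshape(target_shape=(32, 2048))` only works because of the exact pooling sequence. A plain-Python sketch that follows `(width, height, channels)` through the conv/pool layers of `get_model`, assuming `'same'` padding everywhere (so convolutions only change the channel count) and ignoring BatchNormalization and Dropout, which do not change shapes; `trace_cnn_shapes` is a hypothetical name.

```python
def trace_cnn_shapes(img_w=128, img_h=64):
    """Follow (width, height, channels) through the conv/pool layers of get_model."""
    layers = [
        ("conv1", "conv", 64), ("max1", "pool", (2, 2)),
        ("conv2", "conv", 128), ("max2", "pool", (2, 2)),
        ("conv3", "conv", 256), ("conv4", "conv", 256), ("max3", "pool", (1, 2)),
        ("con7", "conv", 256),
    ]
    w, h, c = img_w, img_h, 1
    trace = []
    for name, kind, arg in layers:
        if kind == "conv":
            c = arg                            # 'same' padding keeps width and height
        else:
            w, h = w // arg[0], h // arg[1]    # max pooling divides by the pool size
        trace.append((name, (w, h, c)))
    # Reshape: width is the time axis, height * channels are the features per step
    return trace, (w, h * c)


trace, rnn_input = trace_cnn_shapes()
for name, shape in trace:
    print(name, shape)
print("reshape", rnn_input)  # reshape (32, 2048)
```

Changing `img_w`, `img_h` or the pooling sizes would change the second value, which is why the Reshape target and `downsample_factor` have to be kept in sync by hand.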

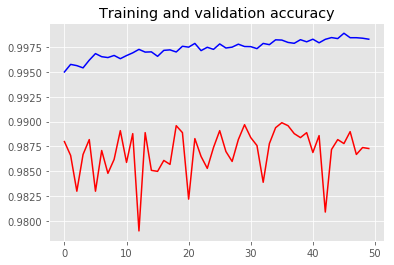

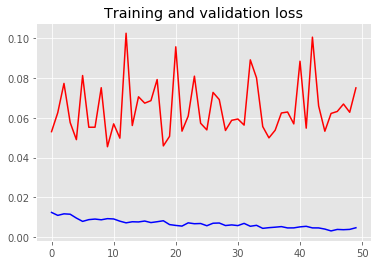
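The `training=False` model outputs per-frame probabilities `y_pred` of shape `(time_steps, num_classes)` per image, which still have to be turned into a string. A sketch of best-path (greedy) CTC decoding under these assumptions: class 17 is the blank, and the first 2 frames are skipped as in `ctc_lambda_func`. `ctc_greedy_decode` and `one_hot` are hypothetical names; Keras also provides `K.ctc_decode(..., greedy=True)` for the same purpose.

```python
CHAR_VECTOR = "0123456789+-*()=a"
BLANK = len(CHAR_VECTOR)  # 17: the last class is the CTC blank


def ctc_greedy_decode(y_pred, char_vector=CHAR_VECTOR, dropped_steps=2):
    """Best-path decoding of one sequence of per-frame class probabilities."""
    blank = len(char_vector)
    # 1. most likely class per frame, skipping the frames the loss ignored
    best_path = [max(range(len(step)), key=lambda k: step[k]) for step in y_pred[dropped_steps:]]
    # 2. collapse consecutive repeats, 3. drop blanks
    decoded, previous = [], None
    for index in best_path:
        if index != previous and index != blank:
            decoded.append(char_vector[index])
        previous = index
    return "".join(decoded)


def one_hot(index, num_classes=BLANK + 1):
    return [1.0 if k == index else 0.0 for k in range(num_classes)]


# Hand-made frames, not model output
frames = [one_hot(i) for i in [BLANK, BLANK, 1, 1, BLANK, 10, 2, 2, 15, BLANK, 3]]
print(ctc_greedy_decode(frames))  # 1+2=3
```

With a NumPy prediction of shape `(batch, 32, 18)`, one image would be decoded with `ctc_greedy_decode(pred[0])`; a blank between two identical classes is what lets the decoder emit a doubled character such as "11".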

```python
# Plot accuracy and loss curves.
# Keras < 2.3 logs metrics=['accuracy'] as 'acc'/'val_acc'; newer versions use 'accuracy'/'val_accuracy'.
def plot_training(history):
    acc = history.history['acc']
    val_acc = history.history['val_acc']
    loss = history.history['loss']
    val_loss = history.history['val_loss']
    epochs = range(len(acc))

    plt.plot(epochs, acc, 'b-', label='train')
    plt.plot(epochs, val_acc, 'r-', label='validation')
    plt.title('Training and validation accuracy')
    plt.legend()
    plt.savefig("Training and validation accuracy.png")

    plt.figure()
    plt.plot(epochs, loss, 'b-', label='train')
    plt.plot(epochs, val_loss, 'r-', label='validation')
    plt.title('Training and validation loss')
    plt.legend()
    plt.savefig("Training and validation loss.png")
    plt.show()
```
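Because the accuracy key changed name between Keras versions, `plot_training` raises a `KeyError` on newer releases. A small sketch of a version-tolerant lookup; `metric_series` is a hypothetical helper. Note also that for this model the logged "accuracy" is computed on the CTC loss output against the dummy zero targets, so it does not measure how many expressions are read correctly.

```python
def metric_series(history_dict, name):
    """Return (train, validation) values for a metric from a Keras History.history dict,
    accepting both the older 'acc' and the newer 'accuracy' key."""
    candidates = ("acc", "accuracy") if name in ("acc", "accuracy") else (name,)
    for key in candidates:
        if key in history_dict:
            return history_dict[key], history_dict.get("val_" + key, [])
    raise KeyError(f"{name!r} not in history; available keys: {sorted(history_dict)}")
```

Inside `plot_training`, the first two assignments would then become `acc, val_acc = metric_series(history.history, 'acc')`.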

```python
from keras.optimizers import Adadelta
from keras.callbacks import ModelCheckpoint
from keras.utils.vis_utils import plot_model

rootpath = 'F:/'
img_w, img_h = 128, 64
batch_size = 256
downsample_factor = 4            # width is halved by max1 and max2: 128 -> 32 time steps
max_text_len = 12
num_classes = len(letters) + 1   # 17 characters + 1 CTC blank

model = get_model(img_w, img_h, num_classes, True)
plot_model(model, to_file='model.png', show_shapes=True)

# Resume from earlier weights if they exist
try:
    model.load_weights('best_weight.hdf5')
    print("...Previous weight data...")
except Exception:
    print("...New weight data...")

data_train = TextImageGenerator(rootpath, X_train, y_train, img_w, img_h, batch_size, downsample_factor, max_text_len)
data_train.build_data()
data_valid = TextImageGenerator(rootpath, X_valid, y_valid, img_w, img_h, batch_size, downsample_factor, max_text_len)
data_valid.build_data()
data_test = TextImageGenerator(rootpath, X_test, y_test, img_w, img_h, batch_size, downsample_factor, max_text_len)
data_test.build_data()

# 'accuracy' compares the CTC loss output with dummy zero targets, so it says little about
# recognition quality; the validation loss is the more meaningful checkpoint criterion
checkpoint = ModelCheckpoint(filepath='best_weight.hdf5', monitor='val_loss', verbose=0, save_best_only=True, period=1)

# The loss is computed inside the model, so the compiled loss just passes y_pred through
model.compile(loss={'ctc': lambda y_true, y_pred: y_pred}, optimizer=Adadelta(), metrics=['accuracy'])

history_ft = model.fit_generator(generator=data_train.next_batch(),
                                 steps_per_epoch=data_train.n // batch_size,
                                 epochs=50,
                                 verbose=2,
                                 callbacks=[checkpoint],
                                 validation_data=data_valid.next_batch(),
                                 validation_steps=data_valid.n // batch_size)

plot_training(history_ft)
```

posted on 2019-03-14 20:38 paulonetwo