MongoDB Cluster Deployment: Replica Set and Sharding [Repost]

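I. Cluster Modes

MongoDB is a popular NoSQL database that stores data as documents rather than as key-value pairs. A MongoDB cluster can be deployed in three modes: master-slave replication (Master-Slave), replica set (Replica Set), and sharding (Sharding).

- Master-Slave is a primary/secondary replication mode. It is no longer recommended.

- Replica Set replaced the Master-Slave mode; any data-bearing member can take over as primary. A Replica Set keeps multiple copies of the data, with different servers holding the same data set, and fails over automatically when a node goes down, which makes it very practical in production.

- Sharding is suited to large data volumes. It splits the data so that different servers hold different parts, and the union of all servers' data forms the complete data set.

Sharding aims at high performance and is the most complex of the three modes. In production, Replica Set and Sharding are usually combined.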

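II. Deploying a Replica Set Cluster

A Replica Set holds multiple copies of the data so that when the primary goes down, a secondary can keep serving data. This works only if the secondary's data is consistent with the primary's.

- In the diagram below, Mongodb(M) is the primary, Mongodb(S) is the secondary, and Mongodb(A) is the arbiter. The primary and secondary store data; the arbiter does not. Clients connect to both the primary and the secondary, but not to the arbiter.

- By default the primary handles all reads and writes and the secondary serves no client requests. The secondary can be configured to serve queries, which reduces the load on the primary: client queries are then routed to the secondary automatically. This setting is called the read preference mode (Read Preference Modes).

- The arbiter is a special node that stores no data. Its main job is to vote in the election that decides which secondary is promoted after the primary goes down, so clients do not need to connect to it. Even though there is only one secondary here, an arbiter is still needed so that the secondary can collect a majority of votes and be promoted.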

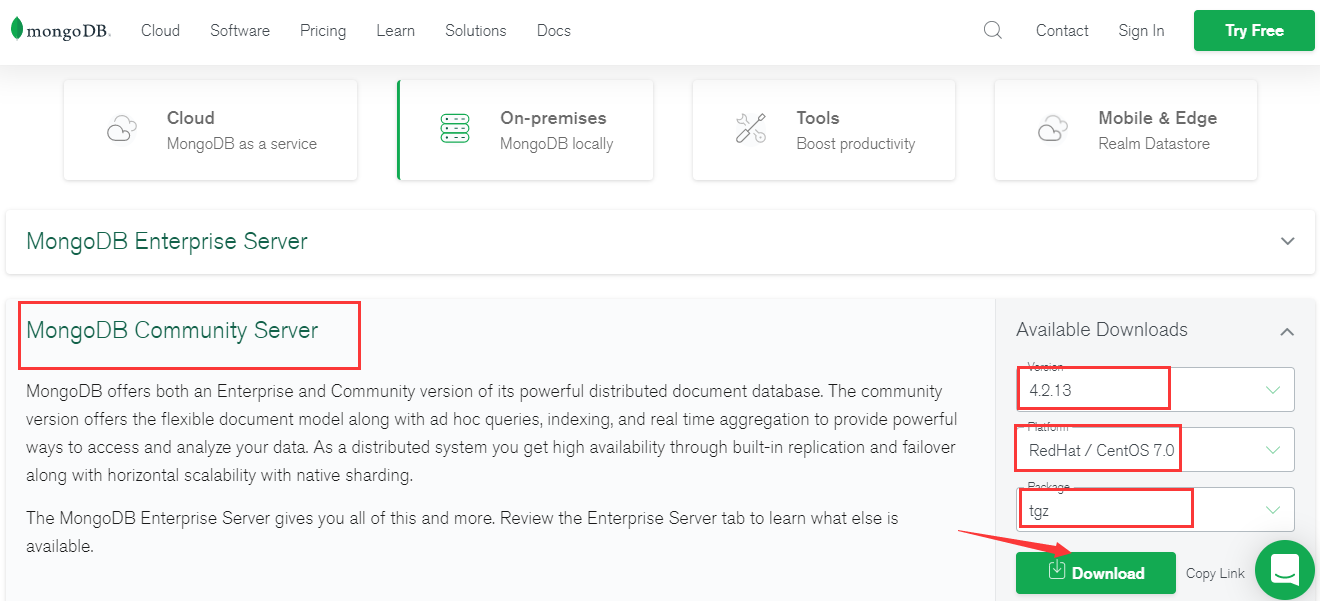
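Read preference is normally chosen on the client side. The following is a minimal sketch, assuming a client that accepts a standard MongoDB connection URI. The helper name `buildReplicaSetUri` is hypothetical; `replicaSet` and `readPreference` are standard URI options, and the host addresses are the ones used later in this article.

```javascript
// Hypothetical helper: builds a connection URI for a replica set.
// Only the data-bearing members are listed; the arbiter is left out.
const READ_PREFERENCES = [
  "primary", "primaryPreferred", "secondary", "secondaryPreferred", "nearest",
];

function buildReplicaSetUri(hosts, replicaSet, readPreference) {
  if (!READ_PREFERENCES.includes(readPreference)) {
    throw new Error("unknown read preference: " + readPreference);
  }
  return "mongodb://" + hosts.join(",") +
    "/?replicaSet=" + encodeURIComponent(replicaSet) +
    "&readPreference=" + readPreference;
}

const uri = buildReplicaSetUri(
  ["192.168.56.175:27018", "192.168.56.176:27018"],
  "MongoDB-rs",
  "secondaryPreferred"
);
console.log(uri);
```

With `secondaryPreferred`, queries go to a secondary when one is available and fall back to the primary otherwise, so reads keep working while the secondary is down.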

1. Download the package

Official download page: https://www.mongodb.com/try/download/community

① Create the required directories

mkdir -p /app/mongodb4.2/{logs,conf,data}

mkdir -p /app/mongodb4.2/data/{master,slaver,arbiter}

② Unpack the package

tar xf mongodb-linux-x86_64-rhel70-4.2.13.tgz -C /app/mongodb4.2/

mv /app/mongodb4.2/mongodb-linux-x86_64-rhel70-4.2.13/* /app/mongodb4.2/

rm -fr /app/mongodb4.2/mongodb-linux-x86_64-rhel70-4.2.13

③ Create the MongoDB configuration file and log file

touch /app/mongodb4.2/logs/mongodb.log

touch /app/mongodb4.2/conf/mongodb.conf

④ Configure mongodb.conf

port=27018

# Run in the background

fork=true

# Log file location

logpath=/app/mongodb4.2/logs/mongodb.log

# Append to the log instead of overwriting it

logappend=true

# Replica set name

replSet=MongoDB-rs

# Store each database in its own directory, named after the database

directoryperdb=true

# Maximum oplog size in MB; defaults to 5% of free disk space

oplogSize=10000

# Data directory; change this on each node after distributing the file

dbpath=/app/mongodb4.2/data/master

# Set to true to enable user authentication

auth=false

# Suppress some noisy log output; set to false when debugging

quiet=true

#auth=true

# IP address that mongod binds to

bind_ip = 0.0.0.0
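Because only dbpath changes from node to node, the file can also be generated rather than edited by hand. The following is a minimal sketch, assuming each node uses the data directory named after its role (master, slaver, arbiter) created earlier; that mapping and the helper name `renderMongodConf` are assumptions, not something the original article states.

```javascript
// Hypothetical helper: renders the ini-style mongodb.conf for one node.
// Every value except dbpath is copied from the configuration in this article.
function renderMongodConf(role) {
  const base = "/app/mongodb4.2";
  const settings = [
    ["port", "27018"],
    ["fork", "true"],
    ["logpath", base + "/logs/mongodb.log"],
    ["logappend", "true"],
    ["replSet", "MongoDB-rs"],
    ["directoryperdb", "true"],
    ["oplogSize", "10000"],
    ["dbpath", base + "/data/" + role], // assumed: one data directory per role
    ["auth", "false"],
    ["quiet", "true"],
    ["bind_ip", "0.0.0.0"],
  ];
  return settings.map(([key, value]) => key + "=" + value).join("\n") + "\n";
}

for (const role of ["master", "slaver", "arbiter"]) {
  console.log("# " + role + "\n" + renderMongodConf(role));
}
```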

⑤ Distribute the software to the other nodes

scp -rp mongodb4.2 192.168.56.176:/app/

scp -rp mongodb4.2 192.168.56.182:/app/

2. Start the MongoDB service

① Start the service (run on all three nodes)

[root@k8s-node1 app]# /app/mongodb4.2/bin/mongod -f /app/mongodb4.2/conf/mongodb.conf

about to fork child process, waiting until server is ready for connections.

forked process: 3317

child process started successfully, parent exiting

② Configure the primary, secondary, and arbiter

Connect to any one of the nodes

[root@k8s-node1 app]# /app/mongodb4.2/bin/mongo 192.168.56.175:27018

MongoDB shell version v4.2.13

connecting to: mongodb://192.168.56.175:27018/test?compressors=disabled&gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("01ac5aa9-4ae0-446e-bda7-084d12c48683") }

MongoDB server version: 4.2.13

Welcome to the MongoDB shell.

Run the node configuration commands

> use admin

switched to db admin

> cfg={ _id:"MongoDB-rs", members:[ {_id:0,host:'192.168.56.175:27018',priority:2}, {_id:1,host:'192.168.56.176:27018',priority:1}, {_id:2,host:'192.168.56.182:27018',arbiterOnly:true}] };

{

"_id" : "MongoDB-rs",

"members" : [

{

"_id" : 0,

"host" : "192.168.56.175:27018",

"priority" : 2

},

{

"_id" : 1,

"host" : "192.168.56.176:27018",

"priority" : 1

},

{

"_id" : 2,

"host" : "192.168.56.182:27018",

"arbiterOnly" : true

}

]

}

> rs.initiate(cfg) // apply the configuration

{ "ok" : 1 }

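cfg is an arbitrary name; it simply defines a variable.

_id is the name of the replica set and must match replSet in mongodb.conf.

members lists the address and priority of every node. The member with the highest priority becomes the primary, here 192.168.56.175:27018.

Pay special attention to arbiterOnly:true on the third member. It must not be omitted: without it the third node joins as a regular data-bearing member instead of an arbiter, and the primary/secondary/arbiter layout described here does not take effect.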
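The configuration document and the reason for the third voting member can be sketched in plain JavaScript. This is only an illustration: `buildReplicaSetConfig` and `majority` are hypothetical helpers, not MongoDB functions, and the checks are a small subset of what the server itself validates.

```javascript
// Hypothetical helper (not part of MongoDB): builds the document passed to
// rs.initiate() and applies a few basic sanity checks first.
function buildReplicaSetConfig(name, members) {
  const ids = new Set(members.map((m) => m._id));
  if (ids.size !== members.length) {
    throw new Error("member _id values must be unique");
  }
  if (!members.some((m) => !m.arbiterOnly)) {
    throw new Error("at least one data-bearing member is required");
  }
  return { _id: name, members: members };
}

// Votes needed to elect a primary: a strict majority of the voting members.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

const cfg = buildReplicaSetConfig("MongoDB-rs", [
  { _id: 0, host: "192.168.56.175:27018", priority: 2 },
  { _id: 1, host: "192.168.56.176:27018", priority: 1 },
  { _id: 2, host: "192.168.56.182:27018", arbiterOnly: true },
]);

console.log(majority(cfg.members.length)); // 2
console.log(majority(2));                  // 2
```

With three voters, two votes are enough, so the secondary and the arbiter can still elect a primary after one data node fails. With only two voters the majority is also two, so losing either node would leave the set without a primary.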

③ Check the cluster status

MongoDB-rs:SECONDARY> rs.status()

{

"set" : "MongoDB-rs",

"date" : ISODate("2021-03-31T03:04:11.467Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"majorityVoteCount" : 2,

"writeMajorityCount" : 2,

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1617159844, 1),

"t" : NumberLong(1)

},

"lastCommittedWallTime" : ISODate("2021-03-31T03:04:04.141Z"),

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1617159844, 1),

"t" : NumberLong(1)

},

"readConcernMajorityWallTime" : ISODate("2021-03-31T03:04:04.141Z"),

"appliedOpTime" : {

"ts" : Timestamp(1617159844, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1617159844, 1),

"t" : NumberLong(1)

},

"lastAppliedWallTime" : ISODate("2021-03-31T03:04:04.141Z"),

"lastDurableWallTime" : ISODate("2021-03-31T03:04:04.141Z")

},

"lastStableRecoveryTimestamp" : Timestamp(1617159814, 1),

"lastStableCheckpointTimestamp" : Timestamp(1617159814, 1),

"electionCandidateMetrics" : {

"lastElectionReason" : "electionTimeout",

"lastElectionDate" : ISODate("2021-03-31T02:59:34.104Z"),

"electionTerm" : NumberLong(1),

"lastCommittedOpTimeAtElection" : {

"ts" : Timestamp(0, 0),

"t" : NumberLong(-1)

},

"lastSeenOpTimeAtElection" : {

"ts" : Timestamp(1617159562, 1),

"t" : NumberLong(-1)

},

"numVotesNeeded" : 2,

"priorityAtElection" : 2,

"electionTimeoutMillis" : NumberLong(10000),

"numCatchUpOps" : NumberLong(0),

"newTermStartDate" : ISODate("2021-03-31T02:59:34.134Z"),

"wMajorityWriteAvailabilityDate" : ISODate("2021-03-31T02:59:35.580Z")

},

"members" : [

{

"_id" : 0,

"name" : "192.168.56.175:27018",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 820,

"optime" : {

"ts" : Timestamp(1617159844, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2021-03-31T03:04:04Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1617159574, 1),

"electionDate" : ISODate("2021-03-31T02:59:34Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "192.168.56.176:27018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 288,

"optime" : {

"ts" : Timestamp(1617159844, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1617159844, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2021-03-31T03:04:04Z"),

"optimeDurableDate" : ISODate("2021-03-31T03:04:04Z"),

"lastHeartbeat" : ISODate("2021-03-31T03:04:10.275Z"),

"lastHeartbeatRecv" : ISODate("2021-03-31T03:04:09.717Z"),

"pingMs" : NumberLong(1),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.56.175:27018",

"syncSourceHost" : "192.168.56.175:27018",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.56.182:27018",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 288,

"lastHeartbeat" : ISODate("2021-03-31T03:04:10.275Z"),

"lastHeartbeatRecv" : ISODate("2021-03-31T03:04:09.560Z"),

"pingMs" : NumberLong(1),

"lastHeartbeatMessage" : "",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1617159844, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1617159844, 1)

}
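The rs.status() output is long, and usually only the state of each member matters. The following is a minimal sketch that condenses a document of that shape; `summarizeStatus` and `findPrimary` are hypothetical helpers, and the sample object keeps only the fields they read.

```javascript
// Hypothetical helper: one line per member with its state and health.
function summarizeStatus(status) {
  return status.members.map((m) => {
    const healthy = m.health === 1 ? "up" : "down";
    return m.name + " " + m.stateStr + " " + healthy;
  });
}

// Hypothetical helper: address of the current primary, or null if there is none.
function findPrimary(status) {
  const primary = status.members.find((m) => m.stateStr === "PRIMARY");
  return primary ? primary.name : null;
}

// Trimmed-down copy of the output above.
const sample = {
  set: "MongoDB-rs",
  members: [
    { name: "192.168.56.175:27018", health: 1, stateStr: "PRIMARY" },
    { name: "192.168.56.176:27018", health: 1, stateStr: "SECONDARY" },
    { name: "192.168.56.182:27018", health: 1, stateStr: "ARBITER" },
  ],
};

summarizeStatus(sample).forEach((line) => console.log(line));
console.log(findPrimary(sample));
```

Inside the mongo shell the same functions could be applied to the live result, for example `summarizeStatus(rs.status())`.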

This completes the Replica Set deployment.

III. Adding KeyFile Authentication

1. Generate the key

mkdir -p /app/mongodb4.2/key

openssl rand -base64 745 > /app/mongodb4.2/key/mongodb-keyfile

chmod 600 /app/mongodb4.2/key/mongodb-keyfile

2. Distribute the key file to the other nodes

scp /app/mongodb4.2/key/mongodb-keyfile 192.168.56.176:/app/mongodb4.2/key/

scp /app/mongodb4.2/key/mongodb-keyfile 192.168.56.182:/app/mongodb4.2/key/

3. Modify the configuration file

vim mongodb.conf

# Add the following line

keyFile=/app/mongodb4.2/key/mongodb-keyfile

4. Stop the service

/app/mongodb4.2/bin/mongod -f /app/mongodb4.2/conf/mongodb.conf --shutdown

5. Start the service again so that the new setting takes effect

/app/mongodb4.2/bin/mongod -f /app/mongodb4.2/conf/mongodb.conf

6. Check the cluster status

MongoDB-rs:PRIMARY> rs.status()

{

"set" : "MongoDB-rs",

"date" : ISODate("2021-03-31T08:27:22.429Z"),

"myState" : 1,

"term" : NumberLong(4),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"majorityVoteCount" : 2,

"writeMajorityCount" : 2,

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1617179232, 1),

"t" : NumberLong(4)

},

"lastCommittedWallTime" : ISODate("2021-03-31T08:27:12.669Z"),

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1617179232, 1),

"t" : NumberLong(4)

},

"readConcernMajorityWallTime" : ISODate("2021-03-31T08:27:12.669Z"),

"appliedOpTime" : {

"ts" : Timestamp(1617179232, 1),

"t" : NumberLong(4)

},

"durableOpTime" : {

"ts" : Timestamp(1617179232, 1),

"t" : NumberLong(4)

},

"lastAppliedWallTime" : ISODate("2021-03-31T08:27:12.669Z"),

"lastDurableWallTime" : ISODate("2021-03-31T08:27:12.669Z")

},

"lastStableRecoveryTimestamp" : Timestamp(1617179202, 1),

"lastStableCheckpointTimestamp" : Timestamp(1617179202, 1),

"electionCandidateMetrics" : {

"lastElectionReason" : "priorityTakeover",

"lastElectionDate" : ISODate("2021-03-31T08:26:12.634Z"),

"electionTerm" : NumberLong(4),

"lastCommittedOpTimeAtElection" : {

"ts" : Timestamp(1617179171, 1),

"t" : NumberLong(3)

},

"lastSeenOpTimeAtElection" : {

"ts" : Timestamp(1617179171, 1),

"t" : NumberLong(3)

},

"numVotesNeeded" : 2,

"priorityAtElection" : 2,

"electionTimeoutMillis" : NumberLong(10000),

"priorPrimaryMemberId" : 1,

"numCatchUpOps" : NumberLong(0),

"newTermStartDate" : ISODate("2021-03-31T08:26:12.667Z"),

"wMajorityWriteAvailabilityDate" : ISODate("2021-03-31T08:26:14.696Z")

},

"electionParticipantMetrics" : {

"votedForCandidate" : true,

"electionTerm" : NumberLong(3),

"lastVoteDate" : ISODate("2021-03-31T08:26:01.824Z"),

"electionCandidateMemberId" : 1,

"voteReason" : "",

"lastAppliedOpTimeAtElection" : {

"ts" : Timestamp(1617177014, 1),

"t" : NumberLong(1)

},

"maxAppliedOpTimeInSet" : {

"ts" : Timestamp(1617177014, 1),

"t" : NumberLong(1)

},

"priorityAtElection" : 2

},

"members" : [

{

"_id" : 0,

"name" : "192.168.56.175:27018",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 97,

"optime" : {

"ts" : Timestamp(1617179232, 1),

"t" : NumberLong(4)

},

"optimeDate" : ISODate("2021-03-31T08:27:12Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1617179172, 1),

"electionDate" : ISODate("2021-03-31T08:26:12Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "192.168.56.176:27018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 91,

"optime" : {

"ts" : Timestamp(1617179232, 1),

"t" : NumberLong(4)

},

"optimeDurable" : {

"ts" : Timestamp(1617179232, 1),

"t" : NumberLong(4)

},

"optimeDate" : ISODate("2021-03-31T08:27:12Z"),

"optimeDurableDate" : ISODate("2021-03-31T08:27:12Z"),

"lastHeartbeat" : ISODate("2021-03-31T08:27:20.698Z"),

"lastHeartbeatRecv" : ISODate("2021-03-31T08:27:20.867Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.56.175:27018",

"syncSourceHost" : "192.168.56.175:27018",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.56.182:27018",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 87,

"lastHeartbeat" : ISODate("2021-03-31T08:27:20.698Z"),

"lastHeartbeatRecv" : ISODate("2021-03-31T08:27:20.797Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1617179232, 1),

"signature" : {

"hash" : BinData(0,"uXViIfbqr0npQMxp/pJ4jAO/GfY="),

"keyId" : NumberLong("6945647482743291907")

}

},

"operationTime" : Timestamp(1617179232, 1)

}

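Once authentication is enabled in MongoDB, clients are not the only ones that must authenticate: the nodes of the deployment must also authenticate to each other. If authentication is turned on without configuring internal authentication, the nodes cannot communicate. The node you are logged in to then stays in the recovering state, and the other nodes are reported as "not reachable/healthy".

MongoDB offers two mechanisms for internal authentication: keyfiles and certificates. See the official documentation for details: https://docs.mongodb.com/manual/core/security-internal-authentication/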
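The byte count given to openssl determines how long the key is. To my understanding of the MongoDB documentation, a keyfile key must be between 6 and 1024 base64 characters, and whitespace such as the line breaks written by openssl is ignored; treat both points as assumptions to verify against your version. The helpers below are hypothetical and only do the arithmetic.

```javascript
// Length of the base64 text produced from n random bytes (line breaks not counted).
function base64Length(byteCount) {
  return 4 * Math.ceil(byteCount / 3);
}

// Assumed limit: a key must be 6 to 1024 characters long.
function fitsKeyfileLimit(byteCount) {
  const length = base64Length(byteCount);
  return length >= 6 && length <= 1024;
}

console.log(base64Length(745));      // 996
console.log(fitsKeyfileLimit(745));  // true
console.log(fitsKeyfileLimit(1024)); // false
```

So `openssl rand -base64 745` yields a 996-character key, which stays under the limit, whereas asking for 1024 random bytes would produce 1368 characters.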

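IV. Deploying a Sharded Cluster

1. Sharded cluster architecture diagram

2. Introduction to sharding

Sharding is the method MongoDB uses to split large collections across different servers (that is, across a cluster). MongoDB automates most of the work: once you tell it to distribute the data, it keeps the data balanced across the servers on its own. A MongoDB sharded cluster (Sharded Cluster) scales horizontally to increase throughput and storage capacity. It consists of three components: "mongos", "config server", and "shard".

- mongos: acts as the query router, providing the interface between client applications and the sharded cluster.

- config server: stores the cluster's metadata and configuration settings. Starting with MongoDB 3.4, config servers must be deployed as a replica set (CSRS).

- shard: where the data is actually stored, in units of chunks. Each shard can be deployed as a replica set.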

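Server allocation: each of the three hosts (192.168.56.175, 192.168.56.176, 192.168.56.182) runs a config server on port 27017, shard1 on port 27018, shard2 on port 27019, and mongos on port 27020.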

3. Deploying the config server

① Create the required directories

mkdir -p /app/mongodb-cluster/{mongos,config,shard1,shard2,shard3}/{data,log,conf}

├── config

│ ├── conf

│ ├── data

│ └── log

├── mongos

│ ├── conf

│ ├── data

│ └── log

├── shard1

│ ├── conf

│ ├── data

│ └── log

├── shard2

│ ├── conf

│ ├── data

│ └── log

└── shard3

├── conf

├── data

└── log

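② Order in which the sharded cluster components are set up

1. "config server" -> 2. "shard" -> 3. "mongos" (the services are started in the same order)

The "config server" consists of three hosts, each running one mongod process; the three mongod processes form a replica set.

③ Create the MongoDB configuration files and log files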

touch /app/mongodb-cluster/{mongos,config,shard1,shard2,shard3}/conf/mongodb.conf

touch /app/mongodb-cluster/{mongos,config,shard1,shard2,shard3}/log/mongodb.log

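④ config server configuration file

port=27017

# Run in the background

fork=true

# Log file location

logpath=/app/mongodb-cluster/config/log/mongodb.log

# Data directory

dbpath=/app/mongodb-cluster/config/data

# Append to the log instead of overwriting it

logappend=true

# Replica set name

replSet=rs-config

# Required for a config server

configsvr = true

# Store each database in its own directory, named after the database

directoryperdb=true

# Maximum oplog size in MB; defaults to 5% of free disk space

oplogSize=10000

# Set to true to enable user authentication

auth=false

# Suppress some noisy log output; set to false when debugging

quiet=true

#auth=true

# IP address that mongod binds to

bind_ip = 0.0.0.0

The mongodb.conf is identical on all three hosts. Distribute the configuration above to the other nodes:

scp -rp /app/mongodb-cluster 192.168.56.176:/app/

scp -rp /app/mongodb-cluster 192.168.56.182:/app/

⑤ Start the config server (on all three nodes)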

/app/mongodb-cluster/bin/mongod -f /app/mongodb-cluster/config/conf/mongodb.conf

⑥ Initialize the config server replica set

Log in on any node and run:

/app/mongodb-cluster/bin/mongo 192.168.56.175:27017

> rs.initiate(

... {

... "_id" : "rs-config",

... "members" : [

... {"_id" : 0, "host" : "192.168.56.175:27017"},

... {"_id" : 1, "host" : "192.168.56.176:27017"},

... {"_id" : 2, "host" : "192.168.56.182:27017"}

... ]

... }

... )

# Output:

{

"ok" : 1,

"$gleStats" : {

"lastOpTime" : Timestamp(1617785674, 1),

"electionId" : ObjectId("000000000000000000000000")

},

"lastCommittedOpTime" : Timestamp(0, 0)

}

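An "ok" value of 1 indicates success.

4. Deploying the shard services

① Create the shard1 configuration file

port=27018

# Run in the background

fork=true

# Log file location

logpath=/app/mongodb-cluster/shard1/log/mongodb.log

# Data directory

dbpath=/app/mongodb-cluster/shard1/data

# Append to the log instead of overwriting it

logappend=true

# Replica set name

replSet=rs-shard1

# Required for a shard server

shardsvr=true

# Store each database in its own directory, named after the database

directoryperdb=true

# Maximum oplog size in MB; defaults to 5% of free disk space

oplogSize=10000

# Set to true to enable user authentication

auth=false

# Suppress some noisy log output; set to false when debugging

quiet=true

#auth=true

# IP address that mongod binds to

bind_ip = 0.0.0.0

② Distribute the configuration file to the other nodes

scp mongodb.conf 192.168.56.176:/app/mongodb-cluster/shard1/conf/

scp mongodb.conf 192.168.56.182:/app/mongodb-cluster/shard1/conf/

③ Start the shard1 server on all three hosts

/app/mongodb-cluster/bin/mongod -f /app/mongodb-cluster/shard1/conf/mongodb.conf

Pick any node in the shard and run the initialization command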

/app/mongodb-cluster/bin/mongo 192.168.56.175:27018

> rs.initiate(

... {

... "_id" : "rs-shard1",

... "members" : [

... {"_id" : 0, "host" : "192.168.56.175:27018"},

... {"_id" : 1, "host" : "192.168.56.176:27018"},

... {"_id" : 2, "host" : "192.168.56.182:27018"}

... ]

... }

... )

# Output:

{ "ok" : 1 }

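④ Create the shard2 configuration file

port=27019

# Run in the background

fork=true

# Log file location

logpath=/app/mongodb-cluster/shard2/log/mongodb.log

# Data directory

dbpath=/app/mongodb-cluster/shard2/data

# Append to the log instead of overwriting it

logappend=true

# Replica set name

replSet=rs-shard2

# Required for a shard server

shardsvr=true

# Store each database in its own directory, named after the database

directoryperdb=true

# Maximum oplog size in MB; defaults to 5% of free disk space

oplogSize=10000

# Set to true to enable user authentication

auth=false

# Suppress some noisy log output; set to false when debugging

quiet=true

#auth=true

# IP address that mongod binds to

bind_ip = 0.0.0.0

Distribute the configuration file to the other nodes

scp mongodb.conf 192.168.56.176:/app/mongodb-cluster/shard2/conf/

scp mongodb.conf 192.168.56.182:/app/mongodb-cluster/shard2/conf/

Start the shard2 server on all three hosts

/app/mongodb-cluster/bin/mongod -f /app/mongodb-cluster/shard2/conf/mongodb.conf

Pick any node in the shard and run the initialization command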

/app/mongodb-cluster/bin/mongo 192.168.56.175:27019

> rs.initiate(

... {

... "_id" : "rs-shard2",

... "members" : [

... {"_id" : 0, "host" : "192.168.56.175:27019"},

... {"_id" : 1, "host" : "192.168.56.176:27019"},

... {"_id" : 2, "host" : "192.168.56.182:27019"}

... ]

... }

... )

# Output:

{ "ok" : 1 }

5. Deploying mongos

① Create the mongos configuration file

port=27020

# Run in the background

fork=true

# Log file location

logpath=/app/mongodb-cluster/mongos/log/mongodb.log

# Append to the log instead of overwriting it

logappend=true

# Config servers that mongos routes through

configdb = rs-config/192.168.56.175:27017,192.168.56.176:27017,192.168.56.182:27017

# Suppress some noisy log output; set to false when debugging

quiet=true

# IP address that mongos binds to

bind_ip = 0.0.0.0

# Maximum number of connections

maxConns=200

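There can be one mongos or several. Here three are configured, one per node. Each mongos is independent of the others, and they all use the same configuration file.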
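The configdb value follows the pattern "replica set name/host:port,host:port,...", and sh.addShard() takes a string of the same shape. Building the string from a host list avoids copy-and-paste slips in the addresses. The helper `seedList` below is hypothetical; the hosts and ports are the ones used in this article.

```javascript
// Hypothetical helper: "<replica set>/<host>:<port>,<host>:<port>,..."
function seedList(replicaSet, hosts, port) {
  return replicaSet + "/" + hosts.map((h) => h + ":" + port).join(",");
}

const hosts = ["192.168.56.175", "192.168.56.176", "192.168.56.182"];

console.log(seedList("rs-config", hosts, 27017)); // value for configdb
console.log(seedList("rs-shard1", hosts, 27018)); // argument for sh.addShard()
console.log(seedList("rs-shard2", hosts, 27019)); // argument for sh.addShard()
```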

② Distribute the configuration file

scp mongodb.conf 192.168.56.176:/app/mongodb-cluster/mongos/conf/

scp mongodb.conf 192.168.56.182:/app/mongodb-cluster/mongos/conf/

③ Start mongos

/app/mongodb-cluster/bin/mongos -f /app/mongodb-cluster/mongos/conf/mongodb.conf

④ Add the shards

Connect to any mongos and add the shard information

/app/mongodb-cluster/bin/mongo 192.168.56.175:27020

mongos> use admin

switched to db admin

mongos> sh.addShard("rs-shard1/192.168.56.175:27018,192.168.56.176:27018,192.168.56.182:27018")

{

"shardAdded" : "rs-shard1",

"ok" : 1,

"operationTime" : Timestamp(1618540303, 8),

"$clusterTime" : {

"clusterTime" : Timestamp(1618540303, 8),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> sh.addShard("rs-shard2/192.168.56.175:27019,192.168.56.176:27019,192.168.56.182:27019")

{

"shardAdded" : "rs-shard2",

"ok" : 1,

"operationTime" : Timestamp(1618540329, 2),

"$clusterTime" : {

"clusterTime" : Timestamp(1618540329, 2),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

⑤ Check the sharding status of the cluster

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("606d735537ca11c12cc9925c")

}

shards:

{ "_id" : "rs-shard1", "host" : "rs-shard1/192.168.56.175:27018,192.168.56.176:27018,192.168.56.182:27018", "state" : 1 }

{ "_id" : "rs-shard2", "host" : "rs-shard2/192.168.56.175:27019,192.168.56.176:27019,192.168.56.182:27019", "state" : 1 }

active mongoses:

"4.2.13" : 3

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

At this point a basic "sharded cluster" is up and running.

6. Using sharding

① Enable sharding for a database

mongos> use admin

switched to db admin

mongos> sh.enableSharding("test") // using the test database as an example

{

"ok" : 1,

"operationTime" : Timestamp(1618540808, 6),

"$clusterTime" : {

"clusterTime" : Timestamp(1618540808, 7),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

② Enable sharding for a collection

mongos> sh.shardCollection("test.coll", {"name" : "hashed"}) // using the collection test.coll as an example

{

"collectionsharded" : "test.coll",

"collectionUUID" : UUID("9263ed96-7b4f-49ca-8351-9f8f990375d6"),

"ok" : 1,

"operationTime" : Timestamp(1618540982, 54),

"$clusterTime" : {

"clusterTime" : Timestamp(1618540982, 54),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

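Notes:

(1) The first argument is the full namespace of the collection.

(2) The second argument is the shard key, which specifies the field used to distribute the data across shards.

③ Insert data to verify sharding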

use test

for (var i = 1; i <= 100000; i++){

db.coll.insert({"id" : i, "name" : "name" + i});

}
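The loop above issues one insert per document. As an alternative sketch, the same documents can be grouped into batches and written with `insertMany`, which the mongo shell provides. The helper `makeBatches` and the batch size of 1000 are assumptions chosen for illustration; the database call is left as a comment so the snippet also runs outside the shell.

```javascript
// Hypothetical helper: builds the same documents as the loop above, in batches.
function makeBatches(total, batchSize) {
  const batches = [];
  for (let start = 1; start <= total; start += batchSize) {
    const end = Math.min(start + batchSize - 1, total);
    const batch = [];
    for (let i = start; i <= end; i++) {
      batch.push({ id: i, name: "name" + i });
    }
    batches.push(batch);
  }
  return batches;
}

const batches = makeBatches(100000, 1000);
console.log(batches.length); // 100

// In the mongo shell, each batch could then be written with a single call:
// batches.forEach((batch) => db.coll.insertMany(batch));
```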

④ Check the status to verify

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("606d735537ca11c12cc9925c")

}

shards:

{ "_id" : "rs-shard1", "host" : "rs-shard1/192.168.56.175:27018,192.168.56.176:27018,192.168.56.182:27018", "state" : 1 }

{ "_id" : "rs-shard2", "host" : "rs-shard2/192.168.56.175:27019,192.168.56.176:27019,192.168.56.182:27019", "state" : 1 }

active mongoses:

"4.2.13" : 3

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

512 : Success

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

rs-shard1 512

rs-shard2 512

too many chunks to print, use verbose if you want to force print

{ "_id" : "test", "primary" : "rs-shard2", "partitioned" : true, "version" : { "uuid" : UUID("44ca8ee0-262b-475a-9a98-f94fe37488c4"), "lastMod" : 1 } }

test.coll

shard key: { "name" : "hashed" }

unique: false

balancing: true

chunks:

rs-shard1 2

rs-shard2 2

{ "name" : { "$minKey" : 1 } } -->> { "name" : NumberLong("-4611686018427387902") } on : rs-shard1 Timestamp(1, 0)

{ "name" : NumberLong("-4611686018427387902") } -->> { "name" : NumberLong(0) } on : rs-shard1 Timestamp(1, 1)

{ "name" : NumberLong(0) } -->> { "name" : NumberLong("4611686018427387902") } on : rs-shard2 Timestamp(1, 2)

{ "name" : NumberLong("4611686018427387902") } -->> { "name" : { "$maxKey" : 1 } } on : rs-shard2 Timestamp(1, 3)

Note: shard 1 and shard 2 each hold two chunks of test.coll, so the data is spread evenly across the two shards.
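With a hashed shard key, a document is placed by the 64-bit hash of its key value, not by the value itself. The following is a minimal sketch of the range lookup only: it assumes the hash has already been computed and does not reproduce MongoDB's hash function. The chunk boundaries are copied from the sh.status() output above, and `shardForHash` is a hypothetical helper.

```javascript
// Each chunk covers [min, max): the lower bound is inclusive, the upper bound
// exclusive. null stands in for $minKey / $maxKey.
const chunks = [
  { min: null, max: -4611686018427387902n, shard: "rs-shard1" },
  { min: -4611686018427387902n, max: 0n, shard: "rs-shard1" },
  { min: 0n, max: 4611686018427387902n, shard: "rs-shard2" },
  { min: 4611686018427387902n, max: null, shard: "rs-shard2" },
];

// Hypothetical helper: returns the shard that owns a given hash value (a BigInt).
function shardForHash(hash) {
  const chunk = chunks.find((c) =>
    (c.min === null || hash >= c.min) && (c.max === null || hash < c.max));
  return chunk.shard;
}

console.log(shardForHash(-5000000000000000000n)); // rs-shard1
console.log(shardForHash(-1n));                   // rs-shard1
console.log(shardForHash(0n));                    // rs-shard2
console.log(shardForHash(9000000000000000000n));  // rs-shard2
```

Because the hash values of "name1", "name2", and so on are scattered over the whole 64-bit range, roughly half of the documents fall into the two chunks on each shard.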

V. Adding Cluster Authentication

1. Generate and distribute the keyfile

mkdir /app/mongodb-cluster/key -p

openssl rand -base64 745 > /app/mongodb-cluster/key/mongodb-keyfile

chmod 600 /app/mongodb-cluster/key/mongodb-keyfile

scp -rp /app/mongodb-cluster/key 192.168.56.176:/app/mongodb-cluster/

scp -rp /app/mongodb-cluster/key 192.168.56.182:/app/mongodb-cluster/

2. Add a superuser

Connect to any mongos and create the superuser

db.createUser(

{

user: "root",

pwd: "root",

roles: [{role : "root", db : "admin"}]

}

)

Output:

mongos> use admin

switched to db admin

mongos> db.createUser(

... {

... user: "root",

... pwd: "root",

... roles: [{role : "root", db : "admin"}]

... }

... )

Successfully added user: {

"user" : "root",

"roles" : [

{

"role" : "root",

"db" : "admin"

}

]

}

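Users added through "mongos" are actually stored on the "config server"; "mongos" itself stores no data, user information included. However, users created through "mongos" are not automatically added to the "shard" servers. To make later maintenance of the shard servers easier, log in to the "primary" node of each shard and add an administrator user there.

3. Create accounts on the shards

① shard1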

use admin

db.createUser(

{

user: "admin",

pwd: "admin",

roles: [ { role: "userAdminAnyDatabase", db: "admin" } ]

}

)

Output:

/app/mongodb-cluster/bin/mongo 192.168.56.175:27018

rs-shard1:PRIMARY> use admin

switched to db admin

rs-shard1:PRIMARY> db.createUser(

... {

... user: "admin",

... pwd: "admin",

... roles: [ { role: "userAdminAnyDatabase", db: "admin" } ]

... }

... )

Successfully added user: {

"user" : "admin",

"roles" : [

{

"role" : "userAdminAnyDatabase",

"db" : "admin"

}

]

}

② shard2

/app/mongodb-cluster/bin/mongo 192.168.56.175:27019

rs-shard2:PRIMARY> use admin

switched to db admin

rs-shard2:PRIMARY> db.createUser(

... {

... user: "admin",

... pwd: "admin",

... roles: [ { role: "userAdminAnyDatabase", db: "admin" } ]

... }

... )

Successfully added user: {

"user" : "admin",

"roles" : [

{

"role" : "userAdminAnyDatabase",

"db" : "admin"

}

]

}

4. Enable authentication

① Add the authentication parameters to the mongos, config, shard1, and shard2 configuration files

auth = true

keyFile = /app/mongodb-cluster/key/mongodb-keyfile

vim config/conf/mongodb.conf

vim mongos/conf/mongodb.conf # do not add auth=true for mongos

vim shard1/conf/mongodb.conf

vim shard2/conf/mongodb.conf

② Stop all MongoDB processes

pkill mongod && pkill mongos # repeat if any process is still running

③ Start the services

Start order: config server -> shard1, shard2 -> mongos

/app/mongodb-cluster/bin/mongod -f /app/mongodb-cluster/config/conf/mongodb.conf

/app/mongodb-cluster/bin/mongod -f /app/mongodb-cluster/shard1/conf/mongodb.conf

/app/mongodb-cluster/bin/mongod -f /app/mongodb-cluster/shard2/conf/mongodb.conf

/app/mongodb-cluster/bin/mongos -f /app/mongodb-cluster/mongos/conf/mongodb.conf
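The start order can be kept in one list and the commands derived from it, so the order cannot drift between nodes. This is a minimal sketch: `startCommands` and `stopOrder` are hypothetical helpers, and stopping in the reverse of the start order is an assumption about a clean shutdown, not something the steps above prescribe.

```javascript
// Components in start order: config server first, then the shards, then mongos.
const components = [
  { name: "config", binary: "mongod" },
  { name: "shard1", binary: "mongod" },
  { name: "shard2", binary: "mongod" },
  { name: "mongos", binary: "mongos" },
];

// Hypothetical helper: one start command per component, in start order.
function startCommands(base) {
  return components.map((c) =>
    base + "/bin/" + c.binary + " -f " + base + "/" + c.name + "/conf/mongodb.conf");
}

// Assumption: a clean stop walks the list in reverse (mongos first, config last).
function stopOrder() {
  return components.map((c) => c.name).reverse();
}

startCommands("/app/mongodb-cluster").forEach((cmd) => console.log(cmd));
console.log(stopOrder().join(" -> "));
```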

④ Verify user access

Connect to any mongos

[root@k8s-node1 ~]# /app/mongodb-cluster/bin/mongo --port 27020 -u "root" -p "root" --authenticationDatabase "admin"

MongoDB shell version v4.2.13

connecting to: mongodb://127.0.0.1:27020/?authSource=admin&compressors=disabled&gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("699a4543-3d62-421f-bbb3-32f1f250a9d4") }

MongoDB server version: 4.2.13

Server has startup warnings:

2021-04-16T11:45:38.158+0800 I CONTROL [main] ** WARNING: You are running this process as the root user, which is not recommended.

2021-04-16T11:45:38.158+0800 I CONTROL [main]

mongos> show dbs;

admin 0.000GB

config 0.003GB

test 0.005GB

Reposted from

The Road to Linux Operations and Architecture: MongoDB Cluster Deployment - 闫新江 - 博客园

https://www.cnblogs.com/yanxinjiang/p/14600568.html