2.安装Spark与Python练习

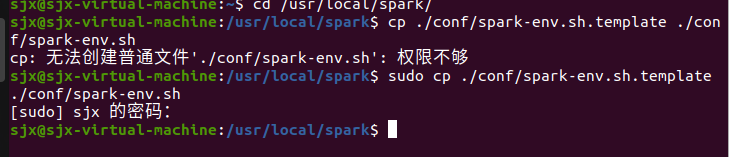

一、安装Spark

1.检查基础环境hadoop,jdk(已提前完成)

2.下载spark(已提前完成)

3.解压,文件夹重命名、权限(已提前完成)

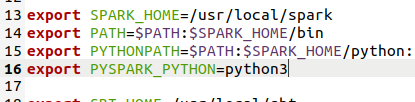

4.配置文件

5.环境变量

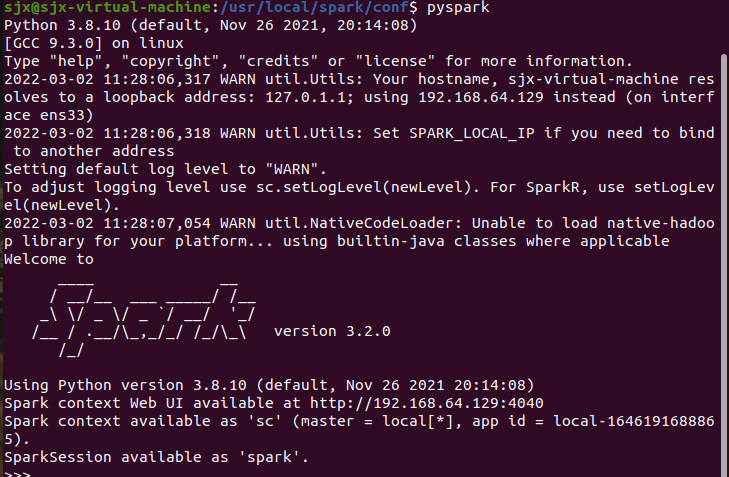

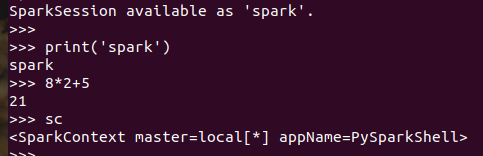

6.试运行Python代码

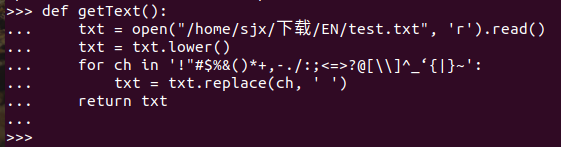

二、Python编程练习:英文文本的词频统计

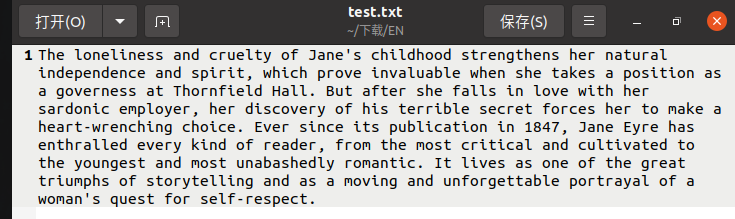

1.准备文本文件

2.读文件

txt = open("/home/sjx/下载/test.txt", 'r').read()

3.预处理:大小写,标点符号,停用词

1 txt = txt.lower() 2 for ch in '!"#$%&()*+,-./:;<=>?@[\\]^_‘{|}~': 3 txt = txt.replace(ch, ' ')

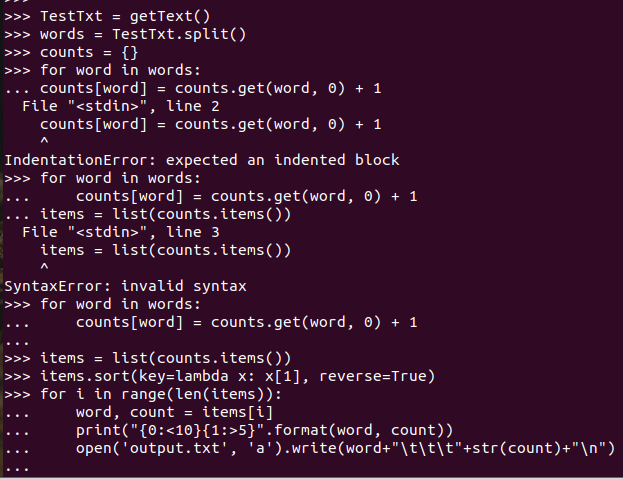

4.分词

words = TestTxt.split()

5.统计每个单词出现的次数

1 for word in words: 2 counts[word] = counts.get(word, 0) + 1

6.按词频大小排序

1 items = list(counts.items()) 2 items.sort(key=lambda x: x[1], reverse=True)

7.结果写文件

1 for i in range(len(items)): 2 word, count = items[i] 3 print("{0:<10}{1:>5}".format(word, count)) 4 open('output.txt', 'a').write(word+"\t\t\t"+str(count)+"\n")

完整代码:

1 def getText(): 2 txt = open("/home/sjx/下载/EN/test.txt", 'r').read() 3 txt = txt.lower() 4 for ch in '!"#$%&()*+,-./:;<=>?@[\\]^_‘{|}~': 5 txt = txt.replace(ch, ' ') 6 return txt 7 8 9 TestTxt = getText() 10 words = TestTxt.split() 11 counts = {} 12 for word in words: 13 counts[word] = counts.get(word, 0) + 1 14 items = list(counts.items()) 15 items.sort(key=lambda x: x[1], reverse=True) 16 for i in range(len(items)): 17 word, count = items[i] 18 print("{0:<10}{1:>5}".format(word, count)) 19 open('output.txt', 'a').write(word+"\t\t\t"+str(count)+"\n")

运行截图: