Scrapy image scraping: the most basic setup

Images are downloaded with Scrapy's built-in Images pipeline (ImagesPipeline). The code follows, with explanations in the comments.

items.py

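```python
import scrapy


class ImageItem(scrapy.Item):
    # Note: the item class here is ImageItem
    # image_urls and images are the field names ImagesPipeline looks for by default;
    # keep them unless you also change IMAGES_URLS_FIELD / IMAGES_RESULT_FIELD in settings.py
    image_urls = scrapy.Field()   # input: list of image URLs to download
    images = scrapy.Field()       # output: filled in by the pipeline with the download results
    image_paths = scrapy.Field()  # only used by the custom MyImagesPipeline in pipelines.py
```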
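If you would rather use other field names, Scrapy's media pipelines read them from settings instead of hard-coding them. A minimal settings.py sketch, assuming the hypothetical names `pic_urls` and `pic_results` (illustrative only):

```python
# settings.py: tell ImagesPipeline which item fields to read and write.
# pic_urls / pic_results are hypothetical names; the defaults are image_urls / images.
IMAGES_URLS_FIELD = 'pic_urls'
IMAGES_RESULT_FIELD = 'pic_results'
```

The item class would then declare `pic_urls` and `pic_results` instead of `image_urls` and `images`.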

settings.py

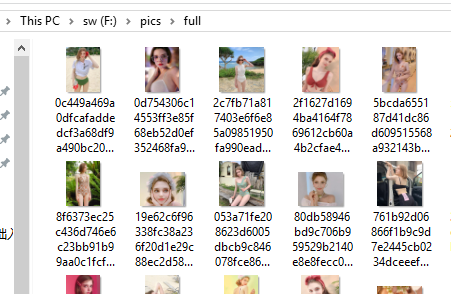

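```python
# A browser-like User-Agent header; it lowers the chance of requests being rejected
USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/50.0.2661.102 Safari/537.36'

# Enable the built-in Images pipeline (it requires the Pillow library)
ITEM_PIPELINES = {
    'scrapy.pipelines.images.ImagesPipeline': 1,
}

# IMAGES_STORE must be set, otherwise the Images pipeline stays disabled.
# It must point to a real drive. The pics folder does not have to exist beforehand:
# it is created automatically, together with a default "full" subfolder for the images.
IMAGES_STORE = 'F:\\pics'
```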
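The files in the full folder get names that look random. By default, ImagesPipeline names each file after the SHA1 hash of the image URL and saves it as JPEG. The sketch below reproduces that default path with the standard library only, so you can predict where a given URL lands. It assumes the default `file_path` (a custom override changes the result) and that the URL is exactly the one Scrapy stores in `request.url`; the helper name `default_image_path` is hypothetical.

```python
import hashlib


def default_image_path(url: str) -> str:
    """Mimic the default ImagesPipeline naming: full/<sha1 of URL>.jpg."""
    digest = hashlib.sha1(url.encode('utf-8')).hexdigest()
    return f'full/{digest}.jpg'


if __name__ == '__main__':
    # Hypothetical image URL, for illustration only
    print(default_image_path('http://example.com/photo.png'))
```

Hashing the URL means the same image URL always maps to the same file, which is also how the pipeline avoids storing duplicates.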

spider.py (the spider here is carhome)

```python
import scrapy

from car.items import ImageItem


class CarhomeSpider(scrapy.Spider):
    name = 'carhome'
    allowed_domains = ['sohu.com']
    start_urls = ['http://www.sohu.com/a/337634404_100032610']
    download_delay = 1  # wait 1 second between requests

    def parse(self, response):
        item = ImageItem()
        # All image URLs found with the CSS selector
        srcs = response.css('.article img::attr(src)').getall()
        # src values may be relative or protocol-relative (//...), so make them absolute
        item['image_urls'] = [response.urljoin(src) for src in srcs]
        yield item
```
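The src values on a page are not always absolute URLs, and a Request without a scheme is rejected. `response.urljoin` resolves them against the page URL (for HTML responses it also honors a `<base>` tag). The standard-library sketch below shows the same resolution with `urllib.parse.urljoin`, ignoring `<base>`, and additionally skips empty values, inline `data:` images and duplicates. The helper name `absolute_image_urls` and the sample src values are hypothetical.

```python
from urllib.parse import urljoin


def absolute_image_urls(page_url, srcs):
    """Resolve src values against page_url, dropping blanks, data: URIs and duplicates."""
    seen = set()
    result = []
    for src in srcs:
        src = (src or '').strip()
        if not src or src.startswith('data:'):
            continue  # nothing downloadable here
        url = urljoin(page_url, src)
        if url not in seen:
            seen.add(url)
            result.append(url)
    return result


if __name__ == '__main__':
    page = 'http://www.sohu.com/a/337634404_100032610'
    srcs = ['//img.example.com/a.jpg', '/b.png', '', 'data:image/gif;base64,R0l']
    print(absolute_image_urls(page, srcs))
```

Inside `parse`, it could be called as `absolute_image_urls(response.url, srcs)` if you want the extra filtering.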

pipelines.py

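```python
from scrapy.pipelines.images import ImagesPipeline
from scrapy.exceptions import DropItem
from scrapy.http import Request

# get_media_requests and item_completed are built-in ImagesPipeline hooks that this
# subclass overrides to customize behavior (to rename saved files, override file_path).
# You can copy this class as-is, but it only runs if ITEM_PIPELINES points to
# 'car.pipelines.MyImagesPipeline' instead of the built-in pipeline,
# and ImageItem declares an image_paths field.
class MyImagesPipeline(ImagesPipeline):
    def get_media_requests(self, item, info):
        # One download request per image URL
        for image_url in item['image_urls']:
            yield Request(image_url)

    def item_completed(self, results, item, info):
        # results holds one (success, info) tuple per request;
        # keep the storage path of every successful download
        image_paths = [x['path'] for ok, x in results if ok]
        if not image_paths:
            raise DropItem('Item contains no images')
        item['image_paths'] = image_paths
        return item
```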
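`item_completed` receives `results` as a list of `(success, info)` pairs, in the same order as the requests produced by `get_media_requests`. For a successful download, `info` is a dict with keys such as `url`, `path` and `checksum`; for a failure it is a Twisted Failure. The stand-alone sketch below replays the filtering logic on hand-written sample results (not real pipeline output), using a hypothetical stand-in exception instead of Scrapy's `DropItem` so it runs without Scrapy installed.

```python
class NoImagesError(Exception):
    """Hypothetical stand-in for scrapy.exceptions.DropItem, for this sketch only."""


def collect_image_paths(results):
    """Same filtering as MyImagesPipeline.item_completed: keep paths of successful downloads."""
    paths = [info['path'] for ok, info in results if ok]
    if not paths:
        raise NoImagesError('Item contains no images')
    return paths


if __name__ == '__main__':
    # Hand-written sample: one success, one failure (a plain Exception stands in for a Failure)
    sample = [
        (True, {'url': 'http://example.com/a.jpg', 'path': 'full/aaa.jpg', 'checksum': 'abc'}),
        (False, Exception('404')),
    ]
    print(collect_image_paths(sample))  # ['full/aaa.jpg']
```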

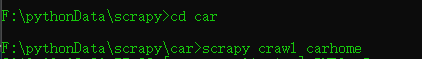

That's all the code. Now run the spider from the project directory with `scrapy crawl carhome`:

Resulting folder:
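To check the result from a script rather than a file browser, the sketch below lists the files the pipeline saved under `IMAGES_STORE/full`. The store path is the `F:\\pics` value from settings.py, so change it to match yours; the helper name `list_downloaded_images` is hypothetical.

```python
from pathlib import Path


def list_downloaded_images(store):
    """Return the sorted file names in <IMAGES_STORE>/full (empty if the folder is missing)."""
    full_dir = Path(store) / 'full'
    if not full_dir.is_dir():
        return []
    return sorted(p.name for p in full_dir.iterdir() if p.is_file())


if __name__ == '__main__':
    names = list_downloaded_images('F:\\pics')  # IMAGES_STORE from settings.py
    print(f'{len(names)} images downloaded')
    for name in names:
        print(name)
```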

If you haven't tried it yet, go give it a try!

浙公网安备 33010602011771号