PySpark with MongoDB on YARN

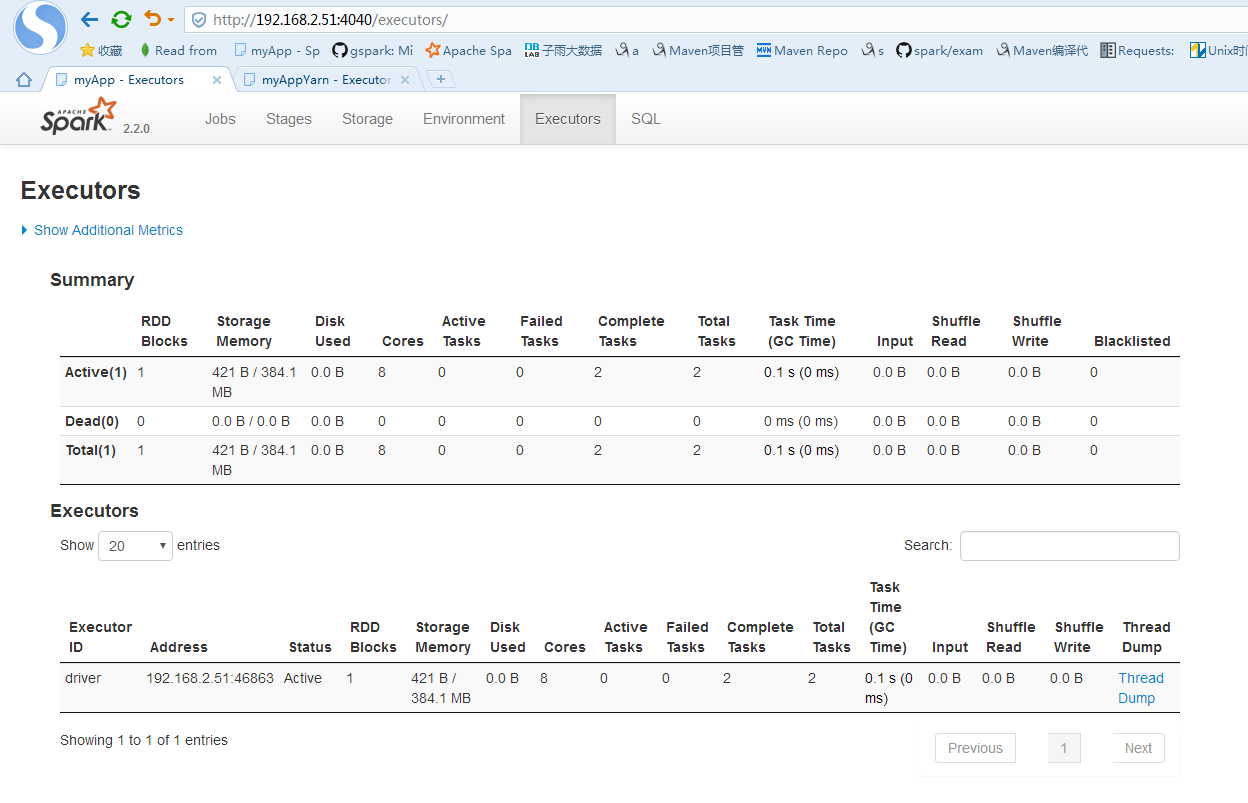

    from pyspark.sql import SparkSession

    my_spark = SparkSession \
        .builder \
        .appName("myApp") \
        .config("spark.mongodb.input.uri", "mongodb://pyspark_admin:admin123@192.168.2.51/pyspark.testpy") \
        .config("spark.mongodb.output.uri", "mongodb://pyspark_admin:admin123@192.168.2.51/pyspark.testpy") \
        .getOrCreate()

    db_rows = my_spark.read.format("com.mongodb.spark.sql.DefaultSource").load().collect()
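
The script above writes the user name and password straight into both URIs. As a minimal sketch, assuming the credentials are supplied through environment variables, the same URI could be assembled like this. The helper `build_mongo_uri` and the variable names `MONGO_USER` and `MONGO_PASSWORD` are hypothetical and not part of the original setup.

```python
import os
from urllib.parse import quote_plus


def build_mongo_uri(user, password, host, database, collection):
    """Return a mongodb:// URI in the form used for spark.mongodb.input.uri."""
    # Percent-encode the credentials so characters such as '@' or ':' stay valid.
    return "mongodb://{}:{}@{}/{}.{}".format(
        quote_plus(user), quote_plus(password), host, database, collection
    )


# MONGO_USER and MONGO_PASSWORD are hypothetical variable names; the fallbacks
# are the values used in the script above.
uri = build_mongo_uri(
    os.environ.get("MONGO_USER", "pyspark_admin"),
    os.environ.get("MONGO_PASSWORD", "admin123"),
    "192.168.2.51",
    "pyspark",
    "testpy",
)
print(uri)
```

The resulting string can be passed to both `.config("spark.mongodb.input.uri", uri)` and `.config("spark.mongodb.output.uri", uri)`.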

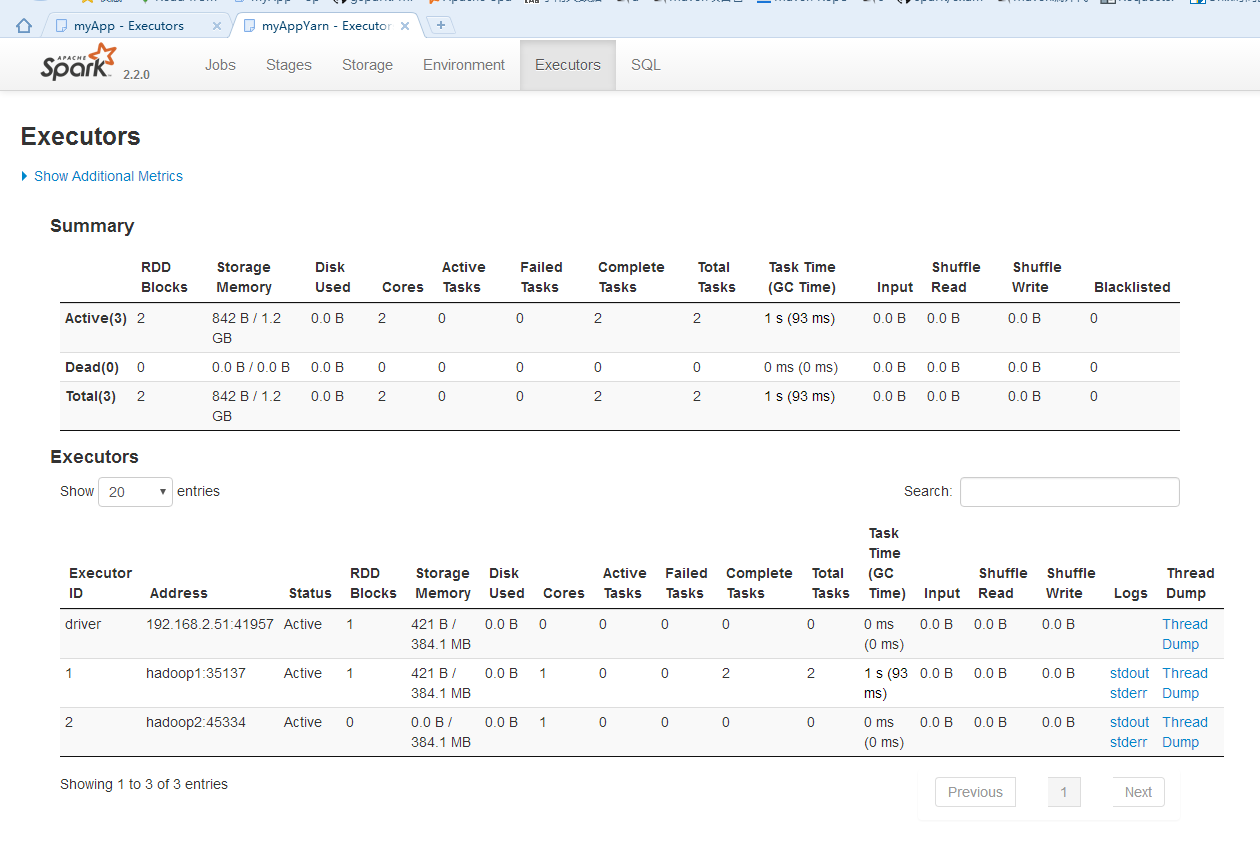

    from pyspark.sql import SparkSession

    my_spark = SparkSession \
        .builder \
        .appName("myAppYarn") \
        .master('yarn') \
        .config("spark.mongodb.input.uri", "mongodb://pyspark_admin:admin123@192.168.2.51/pyspark.testpy") \
        .config("spark.mongodb.output.uri", "mongodb://pyspark_admin:admin123@192.168.2.51/pyspark.testpy") \
        .getOrCreate()

    db_rows = my_spark.read.format("com.mongodb.spark.sql.DefaultSource").load().collect()
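
The two scripts differ only in the application name and the `.master('yarn')` call. One way to keep them in sync is a small helper that applies the shared settings to a builder. This is only a sketch: `configure` is a hypothetical name, and it assumes the object passed in behaves like `SparkSession.builder` (chainable `appName`, `master`, and `config` methods).

```python
def configure(builder, app_name, mongo_uri, master=None):
    """Apply the settings shared by both scripts to a SparkSession builder."""
    builder = builder.appName(app_name)
    if master is not None:
        # Only the YARN variant sets a master explicitly.
        builder = builder.master(master)
    return (
        builder
        .config("spark.mongodb.input.uri", mongo_uri)
        .config("spark.mongodb.output.uri", mongo_uri)
    )


# Usage with PySpark installed (not executed here):
#   from pyspark.sql import SparkSession
#   uri = "mongodb://pyspark_admin:admin123@192.168.2.51/pyspark.testpy"
#   my_spark = configure(SparkSession.builder, "myAppYarn", uri, master="yarn").getOrCreate()
```

Leaving `master` as `None` reproduces the first script, which does not set a master at all.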

Spark UI executors page for this application (port 4041, because 4040 was already in use): http://192.168.2.51:4041/executors/

    ssh://root@192.168.2.51:22/usr/bin/python -u /root/.pycharm_helpers/pydev/pydevd.py --multiproc --qt-support=auto --client '0.0.0.0' --port 47232 --file /home/data/crontab_chk_url/pyspark/pyspark_yarn_test.py
    pydev debugger: process 9892 is connecting
    Connected to pydev debugger (build 172.4343.24)
    Setting default log level to "WARN".
    To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
    17/12/03 21:40:24 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    17/12/03 21:40:24 WARN util.Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.
    17/12/03 21:40:26 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.
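
Two of the warnings above relate to settings that can be supplied explicitly: `spark.ui.port` for the UI port and `spark.yarn.archive` (or `spark.yarn.jars`) for the Spark libraries on YARN. The following is a sketch only. The HDFS path and the port number are placeholder values that do not come from the original setup, and `apply_conf` is a hypothetical helper that assumes a builder with a chainable `config` method.

```python
# Placeholder values: neither the HDFS path nor the port comes from the
# original environment; adjust both to the actual cluster.
EXTRA_CONF = {
    # Point YARN at a pre-uploaded archive of Spark jars so the client does not
    # fall back to uploading the libraries under SPARK_HOME on each run.
    "spark.yarn.archive": "hdfs:///spark/spark-libs.zip",
    # Ask for a specific UI port instead of the default 4040.
    "spark.ui.port": "4050",
}


def apply_conf(builder, conf):
    """Call builder.config(key, value) for every entry and return the builder."""
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder


# Usage with PySpark installed (not executed here):
#   from pyspark.sql import SparkSession
#   my_spark = apply_conf(SparkSession.builder.master("yarn"), EXTRA_CONF).getOrCreate()
```

The log level mentioned in the output can be changed after the session exists, for example with `my_spark.sparkContext.setLogLevel("ERROR")`.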
