启动hadoop

cd /usr/local/hadoop/hadoop

$hadoop namenode -format # 启动前格式化namenode

$./sbin/start-all.sh

检查是否启动成功

[hadoop@hadoop1 hadoop]$ jps

16855 NodeManager

16999 Jps

16090 NameNode

16570 ResourceManager

16396 SecondaryNameNode

[hadoop@hadoop1 hadoop]$

/usr/local/hadoop/hadoop

[hadoop@hadoop2 hadoop]$ jps

1378 NodeManager

1239 DataNode

1528 Jps

[hadoop@hadoop2 hadoop]$

停止

$./sbin/stop-all.sh

启动spark

cd /usr/local/hadoop/spark/

./sbin/start-all.sh

检查是否启动成功

[hadoop@hadoop1 spark]$ jps

16855 NodeManager

17223 Master

17447 Jps

17369 Worker

16090 NameNode

16570 ResourceManager

16396 SecondaryNameNode

[hadoop@hadoop1 spark]$

[hadoop@hadoop2 spark]$ jps

1378 NodeManager

1239 DataNode

2280 Worker

2382 Jps

[hadoop@hadoop2 spark]$

停止

$./sbin/stop-all.sh

![]()

pyspark_mongo复制集_hdfs_hbase

启动mongo复制集

[

/usr/local/mongodb/安装后,初始化

mkdir -p /mnt/mongodb_data/{data,log};

mkdir conf/;

vim /usr/local/mongodb/conf/mongod.conf;

[

mv /mnt/mongodb_data/data /mnt/mongodb_data/data$(date +"%Y%m%d_%H%I%S"); mkdir /mnt/mongodb_data/data;

]

``

bind_ip=0.0.0.0

port=27017

dbpath=/mnt/mongodb_data/data

logpath=/mnt/mongodb_data/log/mongod.log

pidfilepath=/usr/local/mongodb/mongo.pid

fork=true

logappend=true

scp /usr/local/mongodb/conf/mongod.conf root@hadoop2:/usr/local/mongodb/conf/;

启动服务

/usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongod.conf

进入读写窗设置admin账号

/usr/local/mongodb/bin/mongo

use admin;

db.createUser(

{

user: "admin",

pwd: "admin123",

roles: [ { role: "userAdminAnyDatabase", db: "admin" } ,"clusterAdmin"]

}

);

检验是否添加成功

db.getUsers()

关闭服务mongod

db.shutdownServer()

退出mongo

exit

对配置文件加入ip限制和复制集配置

vim /usr/local/mongodb/conf/mongod.conf;

replSet=repl_test

keyFile=/usr/local/mongodb/conf/keyFile

shardsvr=true

directoryperdb=true

/usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongod.conf

进入任一节点mongo窗,进入读写

/usr/local/mongodb/bin/mongo

use admin;

db.auth("admin","admin123");

设置复制集

rs.status();

rs.();

rs.status();

rs.add("hadoop2:27017");

rs.status();

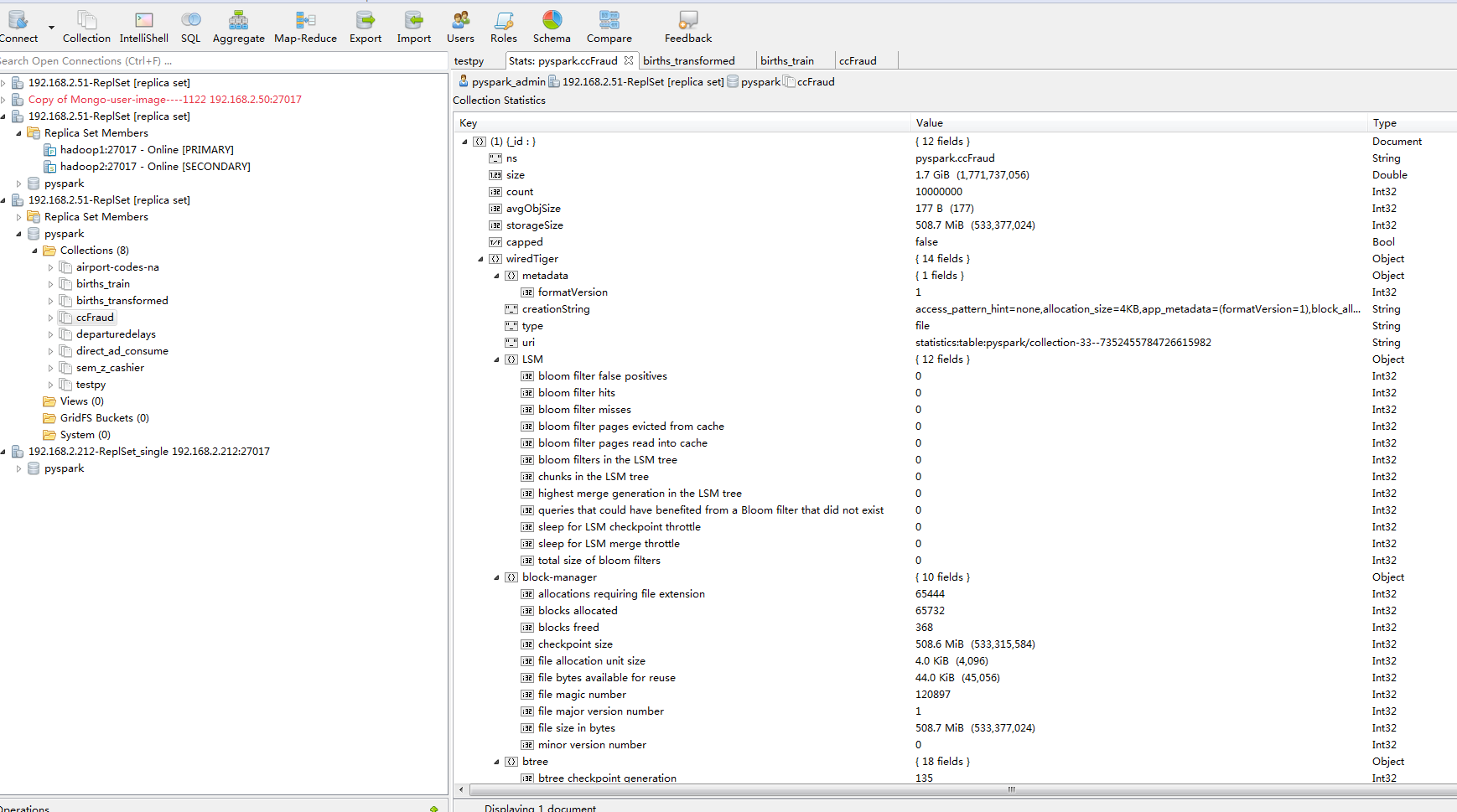

复制集无误后,库表、角色、用户设计;

use pyspark;

db.createUser(

{

user: "pyspark_admin",

pwd: "admin123",

roles: [ "readWrite", "dbAdmin" ]

}

);

db.getRoles();

db.getUsers();

db.auth("pyspark_admin","admin123");

show collections;

db.createCollection('direct_ad_consume');

db.createCollection('sem_z_cashier');

show collections;

db.createCollection('testpy');

db.createCollection('departuredelays');

db.createCollection('airport-codes-na');

db.createCollection('ccFraud');

db.sem_z_cashier.drop();

]

spark数据源;

单个大文件hdfs、hbase

序列化文件mongo复制集,可以考虑spark-mongo组件

执行spark

cd /usr/local/hadoop/

启动hadoop

cd /usr/local/hadoop/hadoop

$hadoop namenode -format # 启动前格式化namenode

$./sbin/start-all.sh

检查是否启动成功

[hadoop@hadoop1 hadoop]$ jps

16855 NodeManager

16999 Jps

16090 NameNode

16570 ResourceManager

16396 SecondaryNameNode

[hadoop@hadoop1 hadoop]$

/usr/local/hadoop/hadoop

[hadoop@hadoop2 hadoop]$ jps

1378 NodeManager

1239 DataNode

1528 Jps

[hadoop@hadoop2 hadoop]$

停止

$./sbin/stop-all.sh

启动spark

cd /usr/local/hadoop/spark/

./sbin/start-all.sh

检查是否启动成功

[hadoop@hadoop1 spark]$ jps

16855 NodeManager

17223 Master

17447 Jps

17369 Worker

16090 NameNode

16570 ResourceManager

16396 SecondaryNameNode

[hadoop@hadoop1 spark]$

[hadoop@hadoop2 spark]$ jps

1378 NodeManager

1239 DataNode

2280 Worker

2382 Jps

[hadoop@hadoop2 spark]$

停止

$./sbin/stop-all.sh

浙公网安备 33010602011771号

浙公网安备 33010602011771号