Boosting: The AdaBoost Algorithm

http://math.mit.edu/~rothvoss/18.304.3PM/Presentations/1-Eric-Boosting304FinalRpdf.pdf

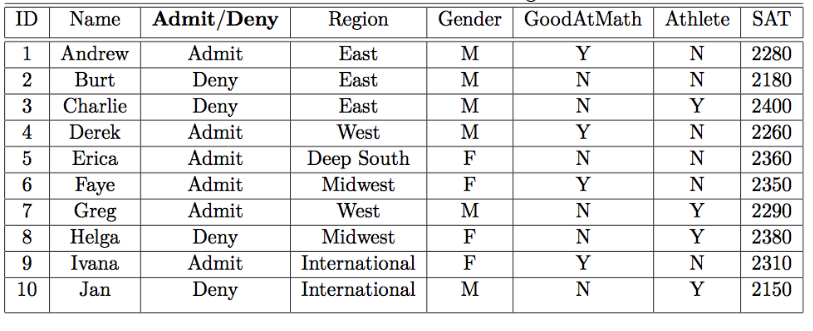

Consider MIT Admissions

【qualitative quantitative】

• 2-class system (Admit/Deny)

• Both quantitative data and qualitative data

• We consider (Y/N) answers to be quantitative (-1, +1)

• Region, for instance, is qualitative.

Rules of Thumb, Weak Classifiers

It is easy to come up with rules of thumb that correctly classify the training data at better than chance.

• E.g. IF “GoodAtMath” == Y THEN predict “Admit”.

• It is difficult to find a single, highly accurate prediction rule. This is where our boosting algorithm, AdaBoost, helps us: it combines the weak rules produced by a Weak Learning Algorithm into one accurate rule.
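The rule of thumb above can be written as a tiny classifier. The sketch below is illustrative only: the applicant records and the helper names (`rule_of_thumb`, `training_accuracy`) are hypothetical, and labels use the -1/+1 encoding (Deny/Admit).

```python
# Hypothetical toy applicants: (good_at_math, label); +1 = Admit, -1 = Deny.
applicants = [
    ("Y", +1),
    ("Y", +1),
    ("Y", -1),
    ("N", -1),
    ("N", +1),
    ("N", -1),
]


def rule_of_thumb(good_at_math):
    """Weak classifier: predict Admit (+1) if GoodAtMath == Y, else Deny (-1)."""
    return +1 if good_at_math == "Y" else -1


def training_accuracy(classifier, data):
    """Fraction of examples the classifier labels correctly."""
    correct = sum(1 for x, y in data if classifier(x) == y)
    return correct / len(data)


accuracy = training_accuracy(rule_of_thumb, applicants)
print(f"accuracy = {accuracy:.2f}")
```

On this made-up data the rule is right on 4 of 6 applicants: better than chance, but far from a highly accurate rule.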

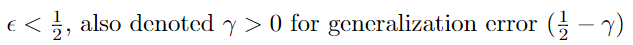

What is a Weak Learner?

【generalization error better than random guessing】

A weak learner is an algorithm that, for any distribution, with high probability, given polynomially many examples and polynomial time, finds a classifier with generalization error better than random guessing.
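One common way to write "better than random guessing" for a two-class problem is sketched below. The symbols are notation introduced here for illustration and do not appear in the notes: $D$ is the distribution over labelled examples, $h$ is the classifier returned by the weak learner, $\gamma > 0$ is its edge over chance, and $\delta$ is the allowed failure probability (the outer probability is over the random training sample).

```latex
\Pr\Big[\, \Pr_{(x,y)\sim D}\big[h(x) \neq y\big] \;\le\; \tfrac{1}{2} - \gamma \,\Big] \;\ge\; 1 - \delta
```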

Weak Learning Assumption

• We assume that our Weak Learning Algorithm (Weak Learner) can consistently find weak classifiers (rules of thumb which classify the data correctly more than 50% of the time).

【boosting】

• Given this assumption, we can use boosting to generate a single weighted classifier which correctly classifies our training data with 99%-100% accuracy.

【AdaBoost Specifics】

•

How does AdaBoost weight training examples optimally?

•

Focus on difficult data points. The data points that have been

misclassified most by the previous weak classifier.

•

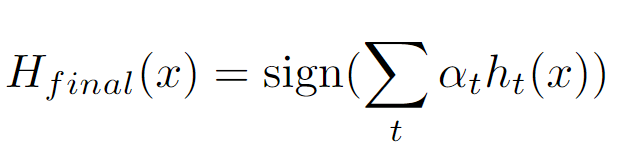

How does AdaBoost combine these weak classifiers into a

comprehensive prediction?

•

Use an optimally weighted majority vote of weak classifier.

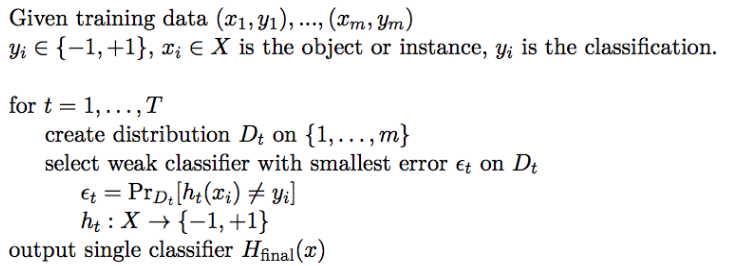

AdaBoost Technical Description

Missing details: How do we generate the distribution? How do we get a single classifier?

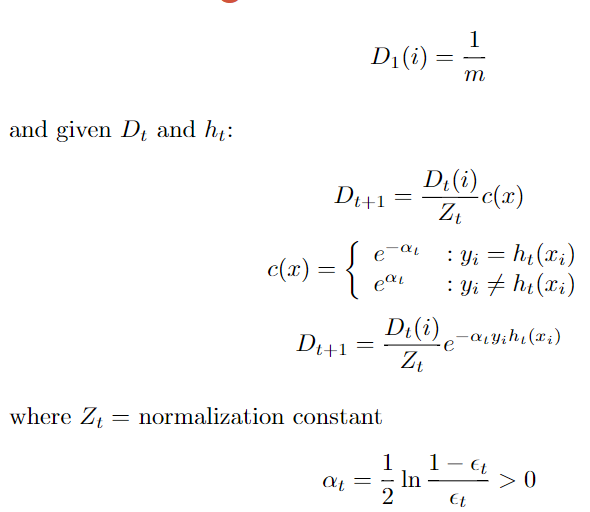

Constructing Dt
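As a hedged sketch, assuming the standard AdaBoost formulation of Freund and Schapire: D1 is uniform, the weak classifier of round t has weighted error e_t under Dt, its vote weight is alpha_t = 0.5 * ln((1 - e_t) / e_t), and each example weight is multiplied by exp(-alpha_t * y_i * h_t(x_i)) and then renormalized. The function name `update_distribution` and the toy labels are hypothetical.

```python
import math


def update_distribution(weights, labels, predictions):
    """One AdaBoost reweighting step; labels and predictions are -1 or +1.

    Returns (new_weights, alpha). Assumes 0 < weighted error < 1.
    """
    # Weighted error: total weight of the misclassified examples.
    error = sum(w for w, y, p in zip(weights, labels, predictions) if y != p)
    alpha = 0.5 * math.log((1.0 - error) / error)
    # Misclassified points (y * p == -1) are scaled up, correct ones down.
    scaled = [w * math.exp(-alpha * y * p)
              for w, y, p in zip(weights, labels, predictions)]
    total = sum(scaled)  # normalization constant
    return [s / total for s in scaled], alpha


labels = [+1, +1, -1, -1]
predictions = [+1, -1, -1, -1]  # the second example is misclassified
d1 = [0.25] * 4                 # D1 is uniform
d2, alpha = update_distribution(d1, labels, predictions)
print(d2, alpha)
```

After the update the single misclassified example carries half of the total weight, so the next weak classifier is pushed to get it right.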

Getting a Single Classifier
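In the standard formulation (assumed here), the single classifier is the sign of the weighted vote, H(x) = sign(sum over t of alpha_t * h_t(x)). The sketch below runs a minimal AdaBoost loop on made-up 1-D data. The threshold "stump" weak learner, the function names, and the data are all hypothetical choices for illustration, not the method shown in the slides.

```python
import math


def stump(threshold, sign):
    """Weak classifier on a number x: predict `sign` if x > threshold, else -sign."""
    return lambda x: sign if x > threshold else -sign


def best_stump(xs, ys, weights):
    """Hypothetical weak learner: the stump with the lowest weighted error."""
    best = None
    for threshold in [min(xs) - 1.0] + list(xs):
        for sign in (+1, -1):
            h = stump(threshold, sign)
            error = sum(w for x, y, w in zip(xs, ys, weights) if h(x) != y)
            if best is None or error < best[0]:
                best = (error, h)
    return best


def adaboost(xs, ys, rounds):
    """Return the ensemble as a list of (alpha_t, h_t) pairs."""
    n = len(xs)
    weights = [1.0 / n] * n  # D1 is uniform
    ensemble = []
    for _ in range(rounds):
        error, h = best_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)  # avoid log(0) and division by zero
        alpha = 0.5 * math.log((1.0 - error) / error)
        ensemble.append((alpha, h))
        # Reweight: misclassified points gain weight, then renormalize.
        weights = [w * math.exp(-alpha * y * h(x))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def predict(ensemble, x):
    """Final classifier: sign of the alpha-weighted vote."""
    vote = sum(alpha * h(x) for alpha, h in ensemble)
    return +1 if vote >= 0 else -1


xs = [1, 2, 3, 4, 5, 6]
ys = [+1, +1, -1, -1, +1, +1]
ensemble = adaboost(xs, ys, rounds=3)
predictions = [predict(ensemble, x) for x in xs]
print(predictions)
```

On this toy set no single stump gets more than four of the six points right, yet the weighted vote of three stumps fits all six.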
