I/O multiplexing, data races, synchronization, coroutines

Summary:

1. A file descriptor is considered ready if it is possible to perform the corresponding I/O operation (e.g., read(2)) without blocking.
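This definition can be checked directly. The following is a minimal sketch, assuming a POSIX system (is_readable_now is a hypothetical helper name). It asks poll() with a zero timeout whether a pipe's read end is ready. The read end is not ready while the pipe is empty, and it becomes ready once a byte has been written, because a read(2) would then return without blocking.

```c
#include <poll.h>
#include <unistd.h>

/* Returns 1 if fd is readable right now, 0 if not, -1 on error.
 * A timeout of 0 makes poll() report readiness without waiting. */
int is_readable_now(int fd) {
    struct pollfd p = { .fd = fd, .events = POLLIN };
    int r = poll(&p, 1, 0);
    if (r < 0)
        return -1;
    return (r > 0 && (p.revents & POLLIN)) ? 1 : 0;
}
```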

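UNIX Network Programming, Volume 1: The Sockets Networking API (3rd Edition)

Part 1: Introduction and TCP/IP

Chapter 1: Introduction

A simple daytime server: accept a client connection and send the reply (the current time).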

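```c
#include "unp.h"   /* the book's header; Socket, Bind, Listen, Accept, Write, Close are its error-checking wrappers */
#include <time.h>

int main(int argc, char **argv) {
    int listenfd, connfd;
    struct sockaddr_in servaddr;
    char buff[MAXLINE];
    time_t ticks;

    listenfd = Socket(AF_INET, SOCK_STREAM, 0);

    bzero(&servaddr, sizeof(servaddr));
    servaddr.sin_family = AF_INET;
    servaddr.sin_addr.s_addr = htonl(INADDR_ANY);  /* accept connections on any local interface */
    servaddr.sin_port = htons(13);                 /* well-known daytime port */

    Bind(listenfd, (SA *) &servaddr, sizeof(servaddr));
    Listen(listenfd, LISTENQ);                     /* make it a listening socket */

    for (;;) {
        connfd = Accept(listenfd, (SA *) NULL, NULL);  /* sleeps until a client connects */
        ticks = time(NULL);
        /* %.24s keeps ctime()'s 24 characters and drops its trailing newline */
        snprintf(buff, sizeof(buff), "%.24s\r\n", ctime(&ticks));
        Write(connfd, buff, strlen(buff));
        Close(connfd);                             /* closes the connected descriptor only */
    }
}
```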

Normally, the server process is put to sleep in the call to accept, waiting for a client connection to arrive and be accepted by the kernel.

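TCP uses a three-way handshake to establish a connection. When the handshake completes, accept returns, and its return value is a new descriptor called the connected descriptor (connfd in this example). This descriptor is used to communicate with the newly connected client. accept returns a new descriptor for each client that connects to the server.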
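The distinction between the listening descriptor and the connected descriptor can be observed directly. The following is a minimal sketch, assuming POSIX sockets and the loopback interface. It uses a kernel-chosen port instead of port 13, which normally requires root privileges. Two clients connect to one listening socket, and each accept() returns its own new descriptor. accept_gives_new_fds is a hypothetical name.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Returns 1 if accept() returned two descriptors that differ from each
 * other and from the listening descriptor, 0 if not, -1 on error. */
int accept_gives_new_fds(void) {
    struct sockaddr_in addr;
    socklen_t len = sizeof addr;
    int lfd = socket(AF_INET, SOCK_STREAM, 0);
    if (lfd < 0)
        return -1;
    memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = htons(0);                      /* 0: let the kernel pick a port */
    if (bind(lfd, (struct sockaddr *)&addr, sizeof addr) < 0 || listen(lfd, 4) < 0 ||
        getsockname(lfd, (struct sockaddr *)&addr, &len) < 0) {  /* learn the port */
        close(lfd);
        return -1;
    }

    int cli[2] = { -1, -1 }, conn[2] = { -1, -1 };
    int ok = 1;
    for (int i = 0; i < 2; i++) {
        cli[i] = socket(AF_INET, SOCK_STREAM, 0);
        /* connect() performs the three-way handshake with the listener */
        if (cli[i] < 0 || connect(cli[i], (struct sockaddr *)&addr, sizeof addr) < 0) {
            ok = -1;
            break;
        }
        conn[i] = accept(lfd, NULL, NULL);         /* a new connected descriptor */
    }
    if (ok == 1)
        ok = conn[0] >= 0 && conn[1] >= 0 && conn[0] != conn[1] &&
             conn[0] != lfd && conn[1] != lfd;

    for (int i = 0; i < 2; i++) {
        if (cli[i] >= 0) close(cli[i]);
        if (conn[i] >= 0) close(conn[i]);
    }
    close(lfd);   /* the listening socket stays separate from every connection */
    return ok;
}
```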

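Chapter 6: I/O Multiplexing: The select and poll Functions

The five I/O models available under Unix:

1. Blocking I/O
2. Nonblocking I/O
3. I/O multiplexing (select and poll)
4. Signal-driven I/O (SIGIO)
5. Asynchronous I/O (the POSIX aio_ functions)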
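Model 2, nonblocking I/O, takes only a few lines to demonstrate. The following is a minimal sketch, assuming POSIX fcntl(). After O_NONBLOCK is set, a read() on an empty pipe fails immediately with EAGAIN or EWOULDBLOCK. It does not put the process to sleep, so the caller has to try again later. set_nonblocking and try_read are hypothetical helper names, and the -2 return value is only this sketch's convention for "no data yet".

```c
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/* Switch fd to nonblocking mode; returns -1 on error. */
int set_nonblocking(int fd) {
    int flags = fcntl(fd, F_GETFL, 0);
    if (flags < 0)
        return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK);
}

/* One read attempt: bytes read, 0 on EOF, -2 if no data is available yet,
 * -1 on any other error. */
ssize_t try_read(int fd, char *buf, size_t len) {
    ssize_t n = read(fd, buf, len);
    if (n < 0 && (errno == EAGAIN || errno == EWOULDBLOCK))
        return -2;   /* would have blocked: the kernel returned immediately */
    return n;
}
```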

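[select and poll are synchronous I/O: the process still blocks, first in select/poll while waiting for readiness, and then in the read that copies the data.]

[Kernel space vs. user space]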

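An input operation normally involves two distinct phases:

1. Waiting for the data to be ready
2. Copying the data from the kernel to the process

For an input operation on a socket:

1. Wait for data to arrive from the network. When the awaited packet arrives, it is copied into a buffer within the kernel.
2. Copy the data from the kernel buffer into the application buffer.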
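With I/O multiplexing, the two phases become two separate calls. The process first blocks in select() until the descriptor is readable, which is phase 1. It then calls read() to copy the data out of the kernel, which is phase 2. The following is a minimal sketch, assuming a POSIX system. wait_then_read is a hypothetical name, and a pipe stands in for a socket in the usage below.

```c
#include <sys/select.h>
#include <unistd.h>

/* Returns the number of bytes read, or -1 on error. */
ssize_t wait_then_read(int fd, char *buf, size_t len) {
    fd_set rset;
    FD_ZERO(&rset);
    FD_SET(fd, &rset);
    /* phase 1: sleep until data is ready in the kernel buffer */
    if (select(fd + 1, &rset, NULL, NULL, NULL) < 0)
        return -1;
    /* phase 2: copy the data from the kernel buffer into buf */
    return read(fd, buf, len);
}
```

Writing "hi" into a pipe and then calling wait_then_read on its read end returns 2 and fills buf with "hi".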

Multiplexing - Wikipedia https://en.wikipedia.org/wiki/Multiplexing

In computing, I/O multiplexing can also be used to refer to the concept of processing multiple input/output events from a single event loop, with system calls like poll[1] and select (Unix).[2]

select(2): synchronous I/O multiplexing - Linux man page https://linux.die.net/man/2/select

Week11-Select.pdf http://www.cs.toronto.edu/~krueger/csc209h/f05/lectures/Week11-Select.pdf

lecture-concurrency-processes.pdf http://www.cs.jhu.edu/~phi/csf/slides/lecture-concurrency-processes.pdf

Multithreaded applications

- If a file descriptor being monitored by select() is closed in another thread, the result is unspecified. On some UNIX systems, select() unblocks and returns, with an indication that the file descriptor is ready (a subsequent I/O operation will likely fail with an error, unless the file descriptor was reopened between the time select() returned and the I/O operation was performed). On Linux (and some other systems), closing the file descriptor in another thread has no effect on select(). In summary, any application that relies on a particular behavior in this scenario must be considered buggy.

Main web server loop:

```c
while (1) {
    int clientfd = Accept(serverfd, NULL, NULL);
    if (clientfd < 0) { fatal("Error accepting client connection"); }
    /* serve this one client to completion before accepting the next */
    server_chat_with_client(clientfd, webroot);
    close(clientfd);
}
```

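Do you see any limitations of this design? The server can only communicate with one client at a time. While server_chat_with_client() is running, every other client has to wait until that conversation ends.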

Data race

A data race is a (potential) bug where two concurrently executing paths access a shared variable, and at least one path writes to the variable.

• Paths "race" to access shared data, and the outcome depends on which one "wins".

A data race is a special case of a race condition, a situation where an execution outcome depends on unpredictable event sequencing.

A data race can cause data invariants to be violated (e.g., "g_num_procs accurately reflects the number of processes running").

Solution: synchronization

• Implement a protocol to avoid uncontrolled access to shared data.
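Synchronization can be illustrated with a POSIX mutex. In the following minimal sketch, several threads increment one shared counter. Each thread holds g_counter_lock for every read-modify-write of g_counter, so no increment is lost. Without the lock, the threads would have a data race on g_counter, which is undefined behavior in C, and in practice the final total often comes out lower. g_counter, worker and run_counter are hypothetical names. The sketch assumes POSIX threads, so it may need -pthread to build.

```c
#include <pthread.h>
#include <stdlib.h>

static long g_counter = 0;   /* shared data, like g_num_procs above */
static pthread_mutex_t g_counter_lock = PTHREAD_MUTEX_INITIALIZER;

static void *worker(void *arg) {
    int iters = *(const int *)arg;
    for (int i = 0; i < iters; i++) {
        pthread_mutex_lock(&g_counter_lock);     /* enter the critical section */
        g_counter++;                             /* no other worker can interleave here */
        pthread_mutex_unlock(&g_counter_lock);
    }
    return NULL;
}

/* Runs nthreads workers, each incrementing g_counter iters times.
 * Returns the final count, or -1 on error. */
long run_counter(int nthreads, int iters) {
    if (nthreads <= 0)
        return -1;
    pthread_t *tids = malloc(sizeof *tids * (size_t)nthreads);
    if (tids == NULL)
        return -1;
    g_counter = 0;
    int started = 0;
    while (started < nthreads &&
           pthread_create(&tids[started], NULL, worker, &iters) == 0)
        started++;
    for (int i = 0; i < started; i++)
        pthread_join(tids[i], NULL);             /* join also makes g_counter's final value visible */
    free(tids);
    return started == nthreads ? g_counter : -1;
}
```

With the mutex, run_counter(4, 100000) returns 400000 every time.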

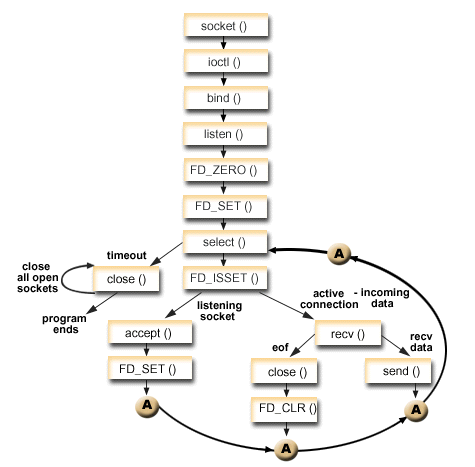

I/O multiplexing main loop

Pseudo-code:

```
create server socket, add to active fd set
while (1) {
    wait for fd to become ready (select or poll)
    if server socket ready
        accept a connection, add it to set
    for fd in client connections
        if fd is ready for reading, read and update connection state
        if fd is ready for writing, write and update connection state
}
```
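The pseudo-code can be turned into a small C sketch that uses poll(). The sketch makes several simplifying assumptions. It listens on 127.0.0.1 on a kernel-chosen port, and it treats every client as an echo client. To stay short, it writes the reply straight after the read instead of waiting for the "ready for writing" condition, so a client whose send buffer is full could stall the loop. The parameter `rounds` bounds the loop only so the sketch can be exercised; a real server would loop forever. MAX_FDS, make_listener and echo_loop are hypothetical names.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <poll.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

#define MAX_FDS 64   /* hypothetical limit on watched descriptors */

/* Listening socket on 127.0.0.1 with a kernel-chosen port.
 * Stores the port (host byte order) in *port; returns the fd or -1. */
int make_listener(unsigned short *port) {
    struct sockaddr_in addr;
    socklen_t len = sizeof addr;
    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0)
        return -1;
    memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = htons(0);
    if (bind(fd, (struct sockaddr *)&addr, sizeof addr) < 0 || listen(fd, 16) < 0 ||
        getsockname(fd, (struct sockaddr *)&addr, &len) < 0) {
        close(fd);
        return -1;
    }
    *port = ntohs(addr.sin_port);
    return fd;
}

/* fds[0] is the server socket; fds[1 .. *nfds-1] are client connections. */
void echo_loop(struct pollfd *fds, int *nfds, int rounds, int timeout_ms) {
    for (int r = 0; r < rounds; r++) {
        /* wait for some fd to become ready */
        if (poll(fds, (nfds_t)*nfds, timeout_ms) <= 0)
            continue;
        /* server socket ready: accept a connection and add it to the set */
        if ((fds[0].revents & POLLIN) && *nfds < MAX_FDS) {
            int c = accept(fds[0].fd, NULL, NULL);
            if (c >= 0) {
                fds[*nfds] = (struct pollfd){ .fd = c, .events = POLLIN, .revents = 0 };
                (*nfds)++;
            }
        }
        /* each ready client: read, then echo the bytes back */
        for (int i = 1; i < *nfds; i++) {
            if (!(fds[i].revents & (POLLIN | POLLHUP | POLLERR)))
                continue;
            char buf[512];
            ssize_t n = read(fds[i].fd, buf, sizeof buf);   /* fd is ready: won't block */
            /* EOF, error, or short write: drop the client (no output queue here) */
            if (n <= 0 || write(fds[i].fd, buf, (size_t)n) != n) {
                close(fds[i].fd);
                fds[i] = fds[--(*nfds)];   /* move the last entry into slot i */
                i--;                        /* and examine slot i again */
            }
        }
    }
}
```

For example, if a client connects and sends "ping", it receives "ping" back after two rounds. The first round accepts the connection, and the second round reads the data and echoes it.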

Coroutines

One way to reduce the complexity of I/O multiplexing is to implement communication with clients using coroutines.

Coroutines are, essentially, a lightweight way of implementing threads

• But with runtime cost closer to function-call overhead

Each client connection is implemented as a coroutine.

When the server control loop finds that a client fd is ready for reading or writing, it yields to the client coroutine.

The client coroutine does the read or write (which won't block), updates its state, and then yields back to the server control loop.
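This yield pattern can be sketched with the <ucontext.h> functions getcontext(), makecontext() and swapcontext(). These functions are a swapped-in technique and an assumption of this sketch. POSIX.1-2008 removed them, but glibc on Linux still provides them. No real fd is involved: each of the three steps of client_coroutine only stands in for one nonblocking read or write. client_coroutine and run_client_coroutine are hypothetical names.

```c
#include <stdlib.h>
#include <ucontext.h>

#define CO_STACK_SIZE (64 * 1024)

static ucontext_t loop_ctx;     /* saved context of the server control loop */
static ucontext_t client_ctx;   /* context of one client coroutine */
static int client_steps;        /* connection state updated by the coroutine */
static int client_done;

/* Each step stands in for "do the I/O, update state", then yields. */
static void client_coroutine(void) {
    for (int i = 0; i < 3; i++) {
        client_steps++;
        swapcontext(&client_ctx, &loop_ctx);   /* yield back to the control loop */
    }
    client_done = 1;   /* returning switches to uc_link, i.e. the loop */
}

/* Control loop: resumes the coroutine until it finishes. A real server would
 * resume it only when select()/poll() reports the client's fd ready.
 * Returns the number of resumes (or -1) and stores the step count. */
int run_client_coroutine(int *steps_out) {
    void *stack = malloc(CO_STACK_SIZE);
    if (stack == NULL || getcontext(&client_ctx) < 0) {
        free(stack);
        return -1;
    }
    client_ctx.uc_stack.ss_sp = stack;
    client_ctx.uc_stack.ss_size = CO_STACK_SIZE;
    client_ctx.uc_link = &loop_ctx;
    makecontext(&client_ctx, client_coroutine, 0);

    client_steps = 0;
    client_done = 0;
    int resumes = 0;
    while (!client_done) {
        swapcontext(&loop_ctx, &client_ctx);   /* yield to the client coroutine */
        resumes++;
    }
    free(stack);
    *steps_out = client_steps;
    return resumes;
}
```

The loop resumes the coroutine four times. Three resumes perform a step each, and the fourth lets the coroutine finish and return to the loop through uc_link.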

Example: Nonblocking I/O and select() https://www.ibm.com/support/knowledgecenter/ssw_ibm_i_72/rzab6/xnonblock.htm

Using poll() instead of select() https://www.ibm.com/support/knowledgecenter/ssw_ibm_i_72/rzab6/poll.htm

Zhejiang Public Security Bureau Internet filing No. 33010602011771
