Memory Model, Synchronization: Hardware and Programming Language Memory Models

Summary:

1. Like Java's memory model, the C++11 memory model ultimately turned out to be incorrect.

https://research.swtch.com/hwmm

Hardware Memory Models

A long time ago, when everyone wrote single-threaded programs, one of the most effective ways to make a program run faster was to sit back and do nothing. Optimizations in the next generation of hardware and the next generation of compilers would make the program run exactly as before, just faster. During this fairy-tale period, there was an easy test for whether an optimization was valid: if programmers couldn't tell the difference (except for the speedup) between the unoptimized and optimized execution of a valid program, then the optimization was valid. That is, valid optimizations do not change the behavior of valid programs.

One sad day, years ago, the hardware engineers' magic spells for making individual processors faster and faster stopped working. In response, they found a new magic spell that let them create computers with more and more processors, and operating systems exposed this hardware parallelism to programmers in the abstraction of threads. This new magic spell—multiple processors made available in the form of operating-system threads—worked much better for the hardware engineers, but it created significant problems for language designers, compiler writers and programmers.

Many hardware and compiler optimizations that were invisible (and therefore valid) in single-threaded programs produce visible changes in multithreaded programs. If valid optimizations do not change the behavior of valid programs, then either these optimizations or the existing programs must be declared invalid. Which will it be, and how can we decide?

Here is a simple example program in a C-like language. In this program and in all programs we will consider, all variables are initially set to zero.

    // Thread 1           // Thread 2
    x = 1;                while(done == 0) { /* loop */ }
    done = 1;             print(x);

If thread 1 and thread 2, each running on its own dedicated processor, both run to completion, can this program print 0?

It depends. It depends on the hardware, and it depends on the compiler. A direct line-for-line translation to assembly run on an x86 multiprocessor will always print 1. But a direct line-for-line translation to assembly run on an ARM or POWER multiprocessor can print 0. Also, no matter what the underlying hardware, standard compiler optimizations could make this program print 0 or go into an infinite loop.

“It depends” is not a happy ending. Programmers need a clear answer to whether a program will continue to work with new hardware and new compilers. And hardware designers and compiler developers need a clear answer to how precisely the hardware and compiled code are allowed to behave when executing a given program. Because the main issue here is the visibility and consistency of changes to data stored in memory, that contract is called the memory consistency model or just memory model.

Originally, the goal of a memory model was to define what hardware guaranteed to a programmer writing assembly code. In that setting, the compiler is not involved. Twenty-five years ago, people started trying to write memory models defining what a high-level programming language like Java or C++ guarantees to programmers writing code in that language. Including the compiler in the model makes the job of defining a reasonable model much more complicated.

This is the first of a pair of posts about hardware memory models and programming language memory models, respectively. My goal in writing these posts is to build up background for discussing potential changes we might want to make in Go's memory model. But to understand where Go is and where we might want to head, first we have to understand where other hardware memory models and language memory models are today and the precarious paths they took to get there.

Again, this post is about hardware. Let's assume we are writing assembly language for a multiprocessor computer. What guarantees do programmers need from the computer hardware in order to write correct programs? Computer scientists have been searching for good answers to this question for over forty years.

Sequential Consistency

Leslie Lamport's 1979 paper “How to Make a Multiprocessor Computer That Correctly Executes Multiprocess Programs” introduced the concept of sequential consistency:

The customary approach to designing and proving the correctness of multiprocess algorithms for such a computer assumes that the following condition is satisfied: the result of any execution is the same as if the operations of all the processors were executed in some sequential order, and the operations of each individual processor appear in this sequence in the order specified by its program. A multiprocessor satisfying this condition will be called sequentially consistent.

Today we talk about not just computer hardware but also programming languages guaranteeing sequential consistency, when the only possible executions of a program correspond to some kind of interleaving of thread operations into a sequential execution. Sequential consistency is usually considered the ideal model, the one most natural for programmers to work with. It lets you assume programs execute in the order they appear on the page, and the executions of individual threads are simply interleaved in some order but not otherwise rearranged.

One might reasonably question whether sequential consistency should be the ideal model, but that's beyond the scope of this post. I will note only that considering all possible thread interleavings remains, today as in 1979, “the customary approach to designing and proving the correctness of multiprocess algorithms.” In the intervening four decades, nothing has replaced it.

Earlier I asked whether this program can print 0:

    // Thread 1           // Thread 2
    x = 1;                while(done == 0) { /* loop */ }
    done = 1;             print(x);

To make the program a bit easier to analyze, let's remove the loop and the print and ask about the possible results from reading the shared variables:

Litmus Test: Message Passing

Can this program see r1 = 1, r2 = 0?

    // Thread 1           // Thread 2
    x = 1                 r1 = y
    y = 1                 r2 = x

We assume every example starts with all shared variables set to zero. Because we're trying to establish what hardware is allowed to do, we assume that each thread is executing on its own dedicated processor and that there's no compiler to reorder what happens in the thread: the instructions in the listings are the instructions the processor executes. The name rN denotes a thread-local register, not a shared variable, and we ask whether a particular setting of thread-local registers is possible at the end of an execution.

This kind of question about execution results for a sample program is called a litmus test. Because it has a binary answer—is this outcome possible or not?—a litmus test gives us a clear way to distinguish memory models: if one model allows a particular execution and another does not, the two models are clearly different. Unfortunately, as we will see later, the answer a particular model gives to a particular litmus test is often surprising.

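If the execution of this litmus test is sequentially consistent, there are only six possible interleavings:

    x = 1;   y = 1;   r1 = y;  r2 = x     (r1 = 1, r2 = 1)
    x = 1;   r1 = y;  y = 1;   r2 = x     (r1 = 0, r2 = 1)
    x = 1;   r1 = y;  r2 = x;  y = 1      (r1 = 0, r2 = 1)
    r1 = y;  x = 1;   y = 1;   r2 = x     (r1 = 0, r2 = 1)
    r1 = y;  x = 1;   r2 = x;  y = 1      (r1 = 0, r2 = 1)
    r1 = y;  r2 = x;  x = 1;   y = 1      (r1 = 0, r2 = 0)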

Since no interleaving ends with r1 = 1, r2 = 0, that result is disallowed. That is, on sequentially consistent hardware, the answer to the litmus test—can this program see r1 = 1, r2 = 0?—is no.
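
The six-interleaving argument can be checked mechanically. The Python sketch below is not from the original post; the tuple encoding of instructions and the helper names `interleavings` and `run` are illustrative assumptions. It enumerates every merge of the two threads that preserves each thread's program order and runs each merge against one shared memory, which is the sequentially consistent machine in its simplest form.

```python
from itertools import combinations

# ("store", var, value) writes a shared variable;
# ("load", reg, var) copies a shared variable into a thread-local register.
THREAD1 = [("store", "x", 1), ("store", "y", 1)]
THREAD2 = [("load", "r1", "y"), ("load", "r2", "x")]


def interleavings(a, b):
    """Yield every merge of a and b that keeps each list's own order."""
    n = len(a) + len(b)
    for slots in combinations(range(n), len(a)):
        ia, ib = iter(a), iter(b)
        yield [next(ia) if i in slots else next(ib) for i in range(n)]


def run(schedule):
    """Execute one interleaving against a single shared memory (all zero)."""
    memory = {"x": 0, "y": 0}
    regs = {}
    for op in schedule:
        if op[0] == "store":
            _, var, value = op
            memory[var] = value
        else:
            _, reg, var = op
            regs[reg] = memory[var]
    return regs["r1"], regs["r2"]


outcomes = [run(s) for s in interleavings(THREAD1, THREAD2)]
print(len(outcomes), sorted(set(outcomes)))  # 6 [(0, 0), (0, 1), (1, 1)]
```

Changing `THREAD1` and `THREAD2` lets the same few lines check other two-thread litmus tests under sequential consistency.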

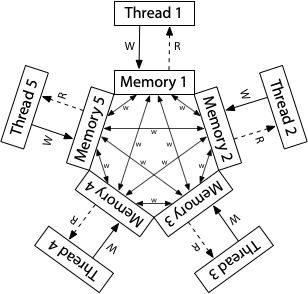

A good mental model for sequential consistency is to imagine all the processors connected directly to the same shared memory, which can serve a read or write request from one thread at a time. There are no caches involved, so every time a processor needs to read from or write to memory, that request goes to the shared memory. The single-use-at-a-time shared memory imposes a sequential order on the execution of all the memory accesses: sequential consistency.

(The three memory model hardware diagrams in this post are adapted from Maranget et al., “A Tutorial Introduction to the ARM and POWER Relaxed Memory Models.”)

This diagram is a model for a sequentially consistent machine, not the only way to build one. Indeed, it is possible to build a sequentially consistent machine using multiple shared memory modules and caches to help predict the result of memory fetches, but being sequentially consistent means that machine must behave indistinguishably from this model. If we are simply trying to understand what sequentially consistent execution means, we can ignore all of those possible implementation complications and think about this one model.

Unfortunately for us as programmers, giving up strict sequential consistency can let hardware execute programs faster, so all modern hardware deviates in various ways from sequential consistency. Defining exactly how specific hardware deviates turns out to be quite difficult. This post uses two memory models found in today's widely used hardware as examples: that of the x86, and that of the ARM and POWER processor families.

x86 Total Store Order (x86-TSO)

The memory model for modern x86 systems corresponds to this hardware diagram:

All the processors are still connected to a single shared memory, but each processor queues writes to that memory in a local write queue. The processor continues executing new instructions while the writes make their way out to the shared memory. A memory read on one processor consults the local write queue before consulting main memory, but it cannot see the write queues on other processors. The effect is that a processor sees its own writes before others do. But—and this is very important—all processors do agree on the (total) order in which writes (stores) reach the shared memory, giving the model its name: total store order, or TSO. At the moment that a write reaches shared memory, any future read on any processor will see it and use that value (until it is overwritten by a later write, or perhaps by a buffered write from another processor).

The write queue is a standard first-in, first-out queue: the memory writes are applied to the shared memory in the same order that they were executed by the processor. Because the write order is preserved by the write queue, and because other processors see the writes to shared memory immediately, the message passing litmus test we considered earlier has the same outcome as before: r1 = 1, r2 = 0 remains impossible.
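
The write-queue machine can be sketched as a small Python class. This toy `TSOMachine` is a hypothetical illustration, not Intel's or AMD's specification: it models only plain stores and loads, one FIFO queue per CPU, and reads that check the CPU's own queue before shared memory. Draining one queued write at a time shows why another CPU can never observe y = 1 in memory while x is still 0.

```python
from collections import deque


class TSOMachine:
    """Toy x86-TSO model: one shared memory plus a FIFO write queue per CPU."""

    def __init__(self, ncpus):
        self.memory = {}  # shared memory; missing variables read as 0
        self.queues = [deque() for _ in range(ncpus)]

    def store(self, cpu, var, value):
        # A write is appended to the CPU's own queue, not sent to memory yet.
        self.queues[cpu].append((var, value))

    def load(self, cpu, var):
        # Check this CPU's queue first (newest write wins); other CPUs'
        # queues are invisible. Otherwise fall back to shared memory.
        for qvar, qvalue in reversed(self.queues[cpu]):
            if qvar == var:
                return qvalue
        return self.memory.get(var, 0)

    def drain_one(self, cpu):
        # The oldest queued write reaches shared memory: first in, first out.
        var, value = self.queues[cpu].popleft()
        self.memory[var] = value


m = TSOMachine(2)
m.store(0, "x", 1)                     # Thread 1: x = 1
m.store(0, "y", 1)                     # Thread 1: y = 1
print(m.load(0, "x"), m.load(1, "x"))  # 1 0: CPU 0 sees its own write first
m.drain_one(0)                         # x = 1 must reach memory before y = 1
print(m.load(1, "y"), m.load(1, "x"))  # 0 1
m.drain_one(0)
print(m.load(1, "y"), m.load(1, "x"))  # 1 1
```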

Litmus Test: Message Passing

Can this program see r1 = 1, r2 = 0?

    // Thread 1           // Thread 2
    x = 1                 r1 = y
    y = 1                 r2 = x

On sequentially consistent hardware: no.

On x86 (or other TSO): no.

The write queue guarantees that thread 1 writes x to memory before y, and the system-wide agreement about the order of memory writes (the total store order) guarantees that thread 2 learns of x's new value before it learns of y's new value. Therefore it is impossible for r1 = y to see the new y without r2 = x also seeing the new x. The store order is crucial here: thread 1 writes x before y, so thread 2 must not see the write to y before the write to x.

The sequential consistency and TSO models agree in this case, but they disagree about the results of other litmus tests. For example, this is the usual example distinguishing the two models:

Litmus Test: Write Queue (also called Store Buffer)

Can this program see r1 = 0, r2 = 0?

    // Thread 1           // Thread 2
    x = 1                 y = 1
    r1 = y                r2 = x

On sequentially consistent hardware: no.

On x86 (or other TSO): yes!

In any sequentially consistent execution, either x = 1 or y = 1 must happen first, and then the read in the other thread must observe it, so r1 = 0, r2 = 0 is impossible. But on a TSO system, it can happen that Thread 1 and Thread 2 both queue their writes and then read from memory before either write makes it to memory, so that both reads see zeros.

This example may seem artificial, but using two synchronization variables does happen in well-known synchronization algorithms, such as Dekker's algorithm or Peterson's algorithm, as well as ad hoc schemes. They break if one thread isn’t seeing all the writes from another.

To fix algorithms that depend on stronger memory ordering, non-sequentially-consistent hardware supplies explicit instructions called memory barriers (or fences) that can be used to control the ordering. We can add a memory barrier to make sure that each thread flushes its previous write to memory before starting its read:

    // Thread 1           // Thread 2
    x = 1                 y = 1
    barrier               barrier
    r1 = y                r2 = x

With the addition of the barriers, r1 = 0, r2 = 0 is again impossible, and Dekker's or Peterson's algorithm would then work correctly. There are many kinds of barriers; the details vary from system to system and are beyond the scope of this post. The point is only that barriers exist and give programmers or language implementers a way to force sequentially consistent behavior at critical moments in a program.
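
To see both the store-buffering outcome and the effect of a barrier, the following Python sketch exhaustively explores a toy TSO machine. The instruction encoding and helper functions are assumptions made for illustration, and the `mfence` instruction (named after the x86 MFENCE instruction) is modeled only as "cannot complete until the issuing thread's write queue is empty," which is the single barrier behavior this example relies on.

```python
def replace(tup, i, item):
    """Return a copy of tuple `tup` with element i replaced by `item`."""
    return tup[:i] + (item,) + tup[i + 1:]


def frozen(mapping):
    """Hashable, order-independent snapshot of a dict."""
    return tuple(sorted(mapping.items()))


def load(queue, memory, var):
    # Store-to-load forwarding: the newest queued write to var wins;
    # otherwise read shared memory (variables start at 0).
    for qvar, qvalue in reversed(queue):
        if qvar == var:
            return qvalue
    return dict(memory).get(var, 0)


def tso_outcomes(threads):
    """Every final register assignment reachable on the toy TSO machine.

    Instructions: ("st", var, value), ("ld", reg, var), ("mfence",).
    Results are tuples of register values ordered by register name.
    """
    results, seen = set(), set()

    def explore(pcs, queues, memory, regs):
        if (pcs, queues, memory, regs) in seen:
            return
        seen.add((pcs, queues, memory, regs))
        finished = True
        for t, prog in enumerate(threads):
            queue = queues[t]
            if queue:
                # Step A: the oldest write in thread t's queue reaches memory.
                finished = False
                var, value = queue[0]
                mem = dict(memory)
                mem[var] = value
                explore(pcs, replace(queues, t, queue[1:]), frozen(mem), regs)
            if pcs[t] == len(prog):
                continue
            # Step B: thread t executes its next instruction.
            finished = False
            op = prog[pcs[t]]
            next_pcs = replace(pcs, t, pcs[t] + 1)
            if op[0] == "st":
                explore(next_pcs, replace(queues, t, queue + (op[1:],)), memory, regs)
            elif op[0] == "ld":
                _, reg, var = op
                new_regs = frozen({**dict(regs), reg: load(queue, memory, var)})
                explore(next_pcs, queues, memory, new_regs)
            elif not queue:
                # mfence: completes only once this thread's queue is empty.
                explore(next_pcs, queues, memory, regs)
        if finished:
            results.add(tuple(value for _, value in regs))

    n = len(threads)
    explore((0,) * n, ((),) * n, (), ())
    return results


SB = [[("st", "x", 1), ("ld", "r1", "y")],
      [("st", "y", 1), ("ld", "r2", "x")]]
SB_FENCED = [[("st", "x", 1), ("mfence",), ("ld", "r1", "y")],
             [("st", "y", 1), ("mfence",), ("ld", "r2", "x")]]

print(sorted(tso_outcomes(SB)))         # [(0, 0), (0, 1), (1, 0), (1, 1)]
print(sorted(tso_outcomes(SB_FENCED)))  # [(0, 1), (1, 0), (1, 1)]
```

Without fences, both threads can buffer their writes and read zeros; with a fence, whichever thread's fence completes first has already published its write, so the other thread's later read must see it.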

One final example, to drive home why the model is called total store order. In the model, there are local write queues but no caches on the read path. Once a write reaches main memory, all processors not only agree that the value is there but also agree about when it arrived relative to writes from other processors. Consider this litmus test:

Litmus Test: Independent Reads of Independent Writes (IRIW)

Can this program see r1 = 1, r2 = 0, r3 = 1, r4 = 0?

(Can Threads 3 and 4 see x and y change in different orders?)

    // Thread 1    // Thread 2    // Thread 3    // Thread 4
    x = 1          y = 1          r1 = x         r3 = y
                                  r2 = y         r4 = x

On sequentially consistent hardware: no.

On x86 (or other TSO): no.

If Thread 3 sees x change before y, can Thread 4 see y change before x? For x86 and other TSO machines, the answer is no: there is a total order over all stores (writes) to main memory, and all processors agree on that order, subject to the wrinkle that each processor knows about its own writes before they reach main memory.
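
Because the writers in IRIW never read and the readers never write, under the toy TSO assumptions used here the write queues only decide *when* each write reaches the single shared memory. Every execution therefore looks like an interleaving of the two stores and four loads against one memory. The Python sketch below (helper names are hypothetical) enumerates all of them and confirms that the two readers can never disagree about which write arrived first.

```python
def interleavings(threads):
    """Yield every merge of the threads' instruction lists that keeps
    each thread's own program order."""
    if not any(threads):
        yield []
        return
    for i, prog in enumerate(threads):
        if prog:
            rest = threads[:i] + [prog[1:]] + threads[i + 1:]
            for tail in interleavings(rest):
                yield [prog[0]] + tail


def run(schedule):
    # One shared memory, all variables initially 0. A store takes effect
    # at the moment it drains from its writer's queue, which is simply
    # its position in the schedule.
    memory, regs = {}, {}
    for op in schedule:
        if op[0] == "st":
            _, var, value = op
            memory[var] = value
        else:
            _, reg, var = op
            regs[reg] = memory.get(var, 0)
    return tuple(regs[r] for r in ("r1", "r2", "r3", "r4"))


IRIW = [
    [("st", "x", 1)],                        # Thread 1
    [("st", "y", 1)],                        # Thread 2
    [("ld", "r1", "x"), ("ld", "r2", "y")],  # Thread 3
    [("ld", "r3", "y"), ("ld", "r4", "x")],  # Thread 4
]

outcomes = [run(s) for s in interleavings(IRIW)]
print(len(outcomes), (1, 0, 1, 0) in outcomes)  # 180 False
```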

The Path to x86-TSO

The x86-TSO model seems fairly clean, but the path there was full of roadblocks and wrong turns. In the 1990s, the manuals available for the first x86 multiprocessors said next to nothing about the memory model provided by the hardware.

As one example of the problems, Plan 9 was one of the first true multiprocessor operating systems (without a global kernel lock) to run on the x86. During the port to the multiprocessor Pentium Pro, in 1997, the developers stumbled over unexpected behavior that boiled down to the write queue litmus test. A subtle piece of synchronization code assumed that r1 = 0, r2 = 0 was impossible, and yet it was happening. Worse, the Intel manuals were vague about the memory model details.

In response to a mailing list suggestion that “it's better to be conservative with locks than to trust hardware designers to do what we expect,” one of the Plan 9 developers explained the problem well:

I certainly agree. We are going to encounter more relaxed ordering in multiprocessors. The question is, what do the hardware designers consider conservative? Forcing an interlock at both the beginning and end of a locked section seems to be pretty conservative to me, but I clearly am not imaginative enough. The Pro manuals go into excruciating detail in describing the caches and what keeps them coherent but don't seem to care to say anything detailed about execution or read ordering. The truth is that we have no way of knowing whether we're conservative enough.

During the discussion, an architect at Intel gave an informal explanation of the memory model, pointing out that in theory even multiprocessor 486 and Pentium systems could have produced the r1 = 0, r2 = 0 result, and that the Pentium Pro simply had larger pipelines and write queues that exposed the behavior more often.

The Intel architect also wrote:

Loosely speaking, this means the ordering of events originating from any one processor in the system, as observed by other processors, is always the same. However, different observers are allowed to disagree on the interleaving of events from two or more processors.

Future Intel processors will implement the same memory ordering model.

The claim that “different observers are allowed to disagree on the interleaving of events from two or more processors” is saying that x86 may answer “yes” to the IRIW litmus test, even though in the previous section we saw that x86 answers “no.” How can that be?

The answer appears to be that Intel processors never actually answered “yes” to that litmus test, but at the time the Intel architects were reluctant to make any guarantee for future processors. What little text existed in the architecture manuals made almost no guarantees at all, making it very difficult to program against.

The Plan 9 discussion was not an isolated event. The Linux kernel developers spent over a hundred messages on their mailing list starting in late November 1999 in similar confusion over the guarantees provided by Intel processors.

In response to more and more people running into these difficulties over the decade that followed, a group of architects at Intel took on the task of writing down useful guarantees about processor behavior, for both current and future processors. The first result was the “Intel 64 Architecture Memory Ordering White Paper”, published in August 2007, which aimed to “provide software writers with a clear understanding of the results that different sequences of memory access instructions may produce.” AMD published a similar description later that year in the AMD64 Architecture Programmer's Manual revision 3.14. These descriptions were based on a model called “total lock order + causal consistency” (TLO+CC), intentionally weaker than TSO. In public talks, the Intel architects said that TLO+CC was “as strong as required but no stronger.” In particular, the model reserved the right for x86 processors to answer “yes” to the IRIW litmus test. Unfortunately, the definition of the memory barrier was not strong enough to reestablish sequentially-consistent memory semantics, even with a barrier after every instruction. Even worse, researchers observed actual Intel x86 hardware violating the TLO+CC model. For example:

Litmus Test: n6 (Paul Loewenstein)

Can this program end with r1 = 1, r2 = 0, x = 1?

    // Thread 1           // Thread 2
    x = 1                 y = 1
    r1 = x                x = 2
    r2 = y

On sequentially consistent hardware: no.

On x86 TLO+CC model (2007): no.

On actual x86 hardware: yes!

On x86 TSO model: yes! (Example from x86-TSO paper.)
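
To see why TSO permits n6, here is one concrete schedule written as a Python sketch with per-thread FIFO write queues (the helpers `load` and `drain` are illustrative names, not from the x86-TSO paper). Thread 1 reads its own buffered x = 1 and a stale y = 0; only afterwards does its queued write reach memory, landing after Thread 2's x = 2. No sequentially consistent interleaving can do this: a final x = 1 would require x = 2 (and therefore y = 1) to precede x = 1, which precedes r2 = y, forcing r2 = 1.

```python
from collections import deque

memory = {"x": 0, "y": 0}
queue1, queue2 = deque(), deque()  # FIFO write queues for Thread 1 and Thread 2


def load(queue, var):
    # A thread sees its own newest queued write first, then shared memory.
    for qvar, qvalue in reversed(queue):
        if qvar == var:
            return qvalue
    return memory[var]


def drain(queue):
    # The oldest queued write reaches shared memory.
    var, value = queue.popleft()
    memory[var] = value


# One TSO schedule for litmus test n6.
queue1.append(("x", 1))     # Thread 1: x = 1 (sits in Thread 1's queue)
r1 = load(queue1, "x")      # Thread 1: r1 = x -> 1, forwarded from its own queue
r2 = load(queue1, "y")      # Thread 1: r2 = y -> 0, y = 1 has not happened yet
queue2.append(("y", 1))     # Thread 2: y = 1
queue2.append(("x", 2))     # Thread 2: x = 2
drain(queue2)               # y = 1 reaches memory
drain(queue2)               # x = 2 reaches memory
drain(queue1)               # Thread 1's x = 1 lands last, overwriting 2
print(r1, r2, memory["x"])  # 1 0 1
```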

Revisions to the Intel and AMD specifications later in 2008 guaranteed a “no” to the IRIW case and strengthened the memory barriers but still permitted unexpected behaviors that seem like they could not arise on any reasonable hardware. For example:

Litmus Test: n5

Can this program end with r1 = 2, r2 = 1?

    // Thread 1           // Thread 2
    x = 1                 x = 2
    r1 = x                r2 = x

On sequentially consistent hardware: no.

On x86 specification (2008): yes!

On actual x86 hardware: no.

On x86 TSO model: no. (Example from x86-TSO paper.)

To address these problems, Owens et al. proposed the x86-TSO model, based on the earlier SPARCv8 TSO model. At the time they claimed that “To the best of our knowledge, x86-TSO is sound, is strong enough to program above, and is broadly in line with the vendors’ intentions.” A few months later Intel and AMD released new manuals broadly adopting this model.

It appears that all Intel processors did implement x86-TSO from the start, even though it took a decade for Intel to decide to commit to that. In retrospect, it is clear that the Intel and AMD architects were struggling with exactly how to write a memory model that left room for future processor optimizations while still making useful guarantees for compiler writers and assembly-language programmers. “As strong as required but no stronger” is a difficult balancing act.

ARM/POWER Relaxed Memory Model

Now let's look at an even more relaxed memory model, the one found on ARM and POWER processors. At an implementation level, these two systems are different in many ways, but the guaranteed memory consistency model turns out to be roughly similar, and quite a bit weaker than x86-TSO or even x86-TLO+CC.

The conceptual model for ARM and POWER systems is that each processor reads from and writes to its own complete copy of memory, and each write propagates to the other processors independently, with reordering allowed as the writes propagate.

Here, there is no total store order. Although the diagram does not show it, each processor is also allowed to postpone a read until it needs the result: a read can be delayed until after a later write. In this relaxed model, the answer to every litmus test we’ve seen so far is “yes, that really can happen.”

For the original message passing litmus test, the reordering of writes by a single processor means that Thread 1's writes may not be observed by other threads in the same order:

Litmus Test: Message Passing

Can this program see r1 = 1, r2 = 0?

    // Thread 1           // Thread 2
    x = 1                 r1 = y
    y = 1                 r2 = x

On sequentially consistent hardware: no.

On x86 (or other TSO): no.

On ARM/POWER: yes!

In the ARM/POWER model, we can think of thread 1 and thread 2 each having their own separate copy of memory, with writes propagating between the memories in any order whatsoever. If thread 1's memory sends the update of y to thread 2 before sending the update of x, and if thread 2 executes between those two updates, it will indeed see the result r1 = 1, r2 = 0.

This result shows that the ARM/POWER memory model is weaker than TSO: it makes fewer requirements on the hardware. The ARM/POWER model still admits the kinds of reorderings that TSO does:

Litmus Test: Store Buffering

Can this program see r1 = 0, r2 = 0?

    // Thread 1           // Thread 2
    x = 1                 y = 1
    r1 = y                r2 = x

On sequentially consistent hardware: no.

On x86 (or other TSO): yes!

On ARM/POWER: yes!

On ARM/POWER, the writes to x and y might be made to the local memories but not yet have propagated when the reads occur on the opposite threads.

Here’s the litmus test that showed what it meant for x86 to have a total store order:

Litmus Test: Independent Reads of Independent Writes (IRIW)

Can this program see r1 = 1, r2 = 0, r3 = 1, r4 = 0?

(Can Threads 3 and 4 see x and y change in different orders?)

    // Thread 1    // Thread 2    // Thread 3    // Thread 4
    x = 1          y = 1          r1 = x         r3 = y
                                  r2 = y         r4 = x

On sequentially consistent hardware: no.

On x86 (or other TSO): no.

On ARM/POWER: yes!

On ARM/POWER, different threads may learn about different writes in different orders. They are not guaranteed to agree about a total order of writes reaching main memory, so Thread 3 can see x change before y while Thread 4 sees y change before x.
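
The per-thread-copy picture can also be explored by brute force. In this hypothetical Python sketch, each thread reads and writes its own copy of memory, and every write is queued for delivery to every other thread, with deliveries applied in any order. It is a deliberate simplification, not a model of real ARM or POWER hardware: it does not enforce the per-location coherence rule that those machines do guarantee, and it does not model delayed reads, so it should only be trusted on tests like these where each location has a single writer.

```python
def replace(tup, i, item):
    return tup[:i] + (item,) + tup[i + 1:]


def frozen(mapping):
    return tuple(sorted(mapping.items()))


def propagation_outcomes(threads):
    """Final register values reachable when every thread has its own memory
    copy and each write reaches the other copies independently, in any order.
    Instructions: ("st", var, value) and ("ld", reg, var)."""
    n = len(threads)
    results, seen = set(), set()

    def explore(pcs, copies, pending, regs):
        if (pcs, copies, pending, regs) in seen:
            return
        seen.add((pcs, copies, pending, regs))
        finished = not pending
        # Deliver any one in-flight write to its destination thread's copy.
        for i, (dest, var, value) in enumerate(pending):
            copy = dict(copies[dest])
            copy[var] = value
            explore(pcs, replace(copies, dest, frozen(copy)),
                    pending[:i] + pending[i + 1:], regs)
        for t, prog in enumerate(threads):
            if pcs[t] == len(prog):
                continue
            finished = False
            op = prog[pcs[t]]
            next_pcs = replace(pcs, t, pcs[t] + 1)
            if op[0] == "st":
                _, var, value = op
                copy = dict(copies[t])
                copy[var] = value  # the writer sees its own write at once
                sends = tuple((d, var, value) for d in range(n) if d != t)
                explore(next_pcs, replace(copies, t, frozen(copy)),
                        tuple(sorted(pending + sends)), regs)
            else:
                _, reg, var = op
                value = dict(copies[t]).get(var, 0)
                explore(next_pcs, copies, pending,
                        frozen({**dict(regs), reg: value}))
        if finished:
            results.add(tuple(v for _, v in regs))

    explore((0,) * n, ((),) * n, (), ())
    return results


MP = [[("st", "x", 1), ("st", "y", 1)],
      [("ld", "r1", "y"), ("ld", "r2", "x")]]
IRIW = [[("st", "x", 1)], [("st", "y", 1)],
        [("ld", "r1", "x"), ("ld", "r2", "y")],
        [("ld", "r3", "y"), ("ld", "r4", "x")]]

print((1, 0) in propagation_outcomes(MP))          # True
print((1, 0, 1, 0) in propagation_outcomes(IRIW))  # True
```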

As another example, ARM/POWER systems have visible buffering or reordering of memory reads (loads), as demonstrated by this litmus test:

Litmus Test: Load Buffering

Can this program see r1 = 1, r2 = 1?

(Can each thread's read happen after the other thread's write?)

    // Thread 1           // Thread 2
    r1 = x                r2 = y
    y = 1                 x = 1

On sequentially consistent hardware: no.

On x86 (or other TSO): no.

On ARM/POWER: yes!

Any sequentially consistent interleaving must start with either thread 1's r1 = x or thread 2's r2 = y. That read must see a zero, making the outcome r1 = 1, r2 = 1 impossible. In the ARM/POWER memory model, however, processors are allowed to delay reads until after writes later in the instruction stream, so that y = 1 and x = 1 execute before the two reads.
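
Load buffering can be modeled by letting each thread perform an independent load after its later store, then interleaving the results on one memory. The Python sketch below is a hypothetical illustration of that single relaxation, not a model of real ARM hardware: `delay_reads=False` gives sequential consistency, and `delay_reads=True` allows a load followed by a store to a different variable to execute in the opposite order.

```python
from itertools import combinations

# Thread 1: r1 = x; y = 1        Thread 2: r2 = y; x = 1
LB = [[("ld", "r1", "x"), ("st", "y", 1)],
      [("ld", "r2", "y"), ("st", "x", 1)]]


def merges(a, b):
    """Every merge of two instruction lists preserving each list's order."""
    n = len(a) + len(b)
    for slots in combinations(range(n), len(a)):
        ia, ib = iter(a), iter(b)
        yield [next(ia) if i in slots else next(ib) for i in range(n)]


def run(schedule):
    memory, regs = {}, {}
    for op in schedule:
        if op[0] == "st":
            memory[op[1]] = op[2]
        else:
            regs[op[1]] = memory.get(op[2], 0)
    return regs["r1"], regs["r2"]


def local_orders(prog, delay_reads):
    """Orders in which one two-instruction thread may perform its accesses."""
    yield prog
    first, second = prog
    # Assumed relaxation: a load may be delayed past a later store
    # to a different variable.
    if delay_reads and first[0] == "ld" and second[0] == "st" and first[2] != second[1]:
        yield [second, first]


def outcomes(threads, delay_reads):
    t1, t2 = threads
    return {run(s)
            for a in local_orders(t1, delay_reads)
            for b in local_orders(t2, delay_reads)
            for s in merges(a, b)}


print(sorted(outcomes(LB, delay_reads=False)))  # [(0, 0), (0, 1), (1, 0)]
print(sorted(outcomes(LB, delay_reads=True)))   # [(0, 0), (0, 1), (1, 0), (1, 1)]
```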

Although both the ARM and POWER memory models allow this result, Maranget et al. reported (in 2012) being able to reproduce it empirically only on ARM systems, never on POWER. Here the divergence between model and reality comes into play just as it did when we examined Intel x86: hardware implementing a stronger model than technically guaranteed encourages dependence on the stronger behavior and means that future, weaker hardware will break programs, validly or not.

Like on TSO systems, ARM and POWER have barriers that we can insert into the examples above to force sequentially consistent behaviors. But the obvious question is whether ARM/POWER without barriers excludes any behavior at all. Can the answer to any litmus test ever be “no, that can’t happen?” It can, when we focus on a single memory location.

Here’s a litmus test for something that can’t happen even on ARM and POWER:

Litmus Test: Coherence

Can this program see r1 = 1, r2 = 2, r3 = 2, r4 = 1?

(Can Thread 3 see x = 1 before x = 2 while Thread 4 sees the reverse?)

    // Thread 1    // Thread 2    // Thread 3    // Thread 4
    x = 1          x = 2          r1 = x         r3 = x
                                  r2 = x         r4 = x

On sequentially consistent hardware: no.

On x86 (or other TSO): no.

On ARM/POWER: no.

This litmus test is like the previous one, but now both threads are writing to a single variable x instead of two distinct variables x and y. Threads 1 and 2 write conflicting values 1 and 2 to x, while Thread 3 and Thread 4 both read x twice. If Thread 3 sees x = 1 overwritten by x = 2, can Thread 4 see the opposite?

The answer is no, even on ARM/POWER: threads in the system must agree about a total order for the writes to a single memory location. That is, threads must agree which writes overwrite other writes. This property is called coherence. Without the coherence property, processors either disagree about the final result of memory or else report a memory location flip-flopping from one value to another and back to the first. It would be very difficult to program such a system.
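
Coherence can be phrased as a check: is there a single order of all writes to one location such that every thread's successive reads of that location never move backwards in that order? The Python sketch below is a hypothetical checker for that condition; it assumes every write stores a distinct value, so a value identifies its write, and that the location holds 0 before any write.

```python
from itertools import permutations


def coherent(writes, observations, initial=0):
    """Can one total order of `writes` to a single location explain every
    thread's sequence of reads? Reads may repeat a value, but a thread may
    never see an older write after a newer one."""
    for perm in permutations(writes):
        order = [initial, *perm]
        position = {value: i for i, value in enumerate(order)}
        if all(position[a] <= position[b]
               for seq in observations
               for a, b in zip(seq, seq[1:])):
            return True
    return False


# Thread 3 reads (r1, r2); Thread 4 reads (r3, r4); the writes are x = 1 and x = 2.
print(coherent([1, 2], [(1, 2), (2, 1)]))  # False: r1=1, r2=2, r3=2, r4=1
print(coherent([1, 2], [(1, 2), (1, 2)]))  # True
print(coherent([1, 2], [(0, 2), (2, 2)]))  # True
```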

I'm purposely leaving out a lot of subtleties in the ARM and POWER weak memory models. For more detail, see any of Peter Sewell's papers on the topic. Also, ARMv8 strengthened the memory model by making it “multicopy atomic,” but I won't take the space here to explain exactly what that means.

There are two important points to take away. First, there is an incredible amount of subtlety here, the subject of well over a decade of academic research by very persistent, very smart people. I don't claim to understand anywhere near all of it myself. This is not something we should hope to explain to ordinary programmers, not something that we can hope to keep straight while debugging ordinary programs. Second, the gap between what is allowed and what is observed makes for unfortunate future surprises. If current hardware does not exhibit the full range of allowed behaviors—especially when it is difficult to reason about what is allowed in the first place!—then inevitably programs will be written that accidentally depend on the more restricted behaviors of the actual hardware. If a new chip is less restricted in its behaviors, the fact that the new behavior breaking your program is technically allowed by the hardware memory model—that is, the bug is technically your fault—is of little consolation. This is no way to write programs.

Weak Ordering and Data-Race-Free Sequential Consistency

By now I hope you're convinced that the hardware details are complex and subtle and not something you want to work through every time you write a program. Instead, it would help to identify shortcuts of the form “if you follow these easy rules, your program will only produce results as if by some sequentially consistent interleaving.” (We're still talking about hardware, so we're still talking about interleaving individual assembly instructions.)

Sarita Adve and Mark Hill proposed exactly this approach in their 1990 paper “Weak Ordering – A New Definition”. They defined “weakly ordered” as follows.

Let a synchronization model be a set of constraints on memory accesses that specify how and when synchronization needs to be done.

Hardware is weakly ordered with respect to a synchronization model if and only if it appears sequentially consistent to all software that obey the synchronization model.

Although their paper was about capturing the hardware designs of that time (not x86, ARM, and POWER), the idea of elevating the discussion above specific designs keeps the paper relevant today.

I said before that “valid optimizations do not change the behavior of valid programs.” The rules define what valid means, and then any hardware optimizations have to keep those programs working as they might on a sequentially consistent machine. Of course, the interesting details are the rules themselves, the constraints that define what it means for a program to be valid.

Adve and Hill propose one synchronization model, which they call data-race-free (DRF). This model assumes that hardware has memory synchronization operations separate from ordinary memory reads and writes. Ordinary memory reads and writes may be reordered between synchronization operations, but they may not be moved across them. (That is, the synchronization operations also serve as barriers to reordering.) A program is said to be data-race-free if, for all idealized sequentially consistent executions, any two ordinary memory accesses to the same location from different threads are either both reads or else separated by synchronization operations forcing one to happen before the other.

Let’s look at some examples, taken from Adve and Hill's paper (redrawn for presentation). Here is a single thread that executes a write of variable x followed by a read of the same variable.

The vertical arrow marks the order of execution within a single thread: the write happens, then the read. There is no race in this program, since everything is in a single thread.
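The paper's diagram is not reproduced in these notes. As a rough stand-in, here is a minimal Java sketch of the single-thread case. The class and method names are hypothetical. One thread performs W(x) and then R(x), so program order alone guarantees that the read sees the write.

```java
// Hypothetical example: one thread performs W(x) followed by R(x).
final class SingleThreadWriteRead {
    static int x; // ordinary (non-volatile) field, initially 0

    static int run() {
        x = 1;      // W(x)
        int r = x;  // R(x): program order guarantees this sees 1
        return r;
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints 1
    }
}
```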

In contrast, there is a race in this two-thread program:

Here, thread 2 writes to x without coordinating with thread 1. Thread 2's write races with both the write and the read by thread 1. If thread 2 were reading x instead of writing it, the program would have only one race, between the write in thread 1 and the read in thread 2. Every race involves at least one write: two uncoordinated reads do not race with each other.
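A minimal Java sketch of this racy two-thread program follows. The class name and the `joinAll` helper are hypothetical. Thread 2 writes `x` with no synchronization against thread 1.

Java's rules for racy programs on word-sized fields still apply here. Thread 1's read sees some single write, either its own 1 or thread 2's 2, but which one is not determined.

```java
// Hypothetical example: thread 2 writes x without coordinating with thread 1.
final class RacyWrites {
    static int x; // ordinary field: the accesses below race

    static int run() {
        x = 0;
        int[] r = new int[1];
        Thread t1 = new Thread(() -> {
            x = 1;    // W(x) in thread 1
            r[0] = x; // R(x) in thread 1, races with thread 2's write
        });
        Thread t2 = new Thread(() -> x = 2); // W(x) in thread 2, unsynchronized
        t1.start();
        t2.start();
        joinAll(t1, t2);
        return r[0]; // 1 or 2, depending on timing
    }

    // Waits for each thread; join() also orders the thread's writes before our reads.
    static void joinAll(Thread... ts) {
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```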

To avoid races, we must add synchronization operations, which force an order between operations on different threads sharing a synchronization variable. If the synchronization S(a) (synchronizing on variable a, marked by the dashed arrow) forces thread 2's write to happen after thread 1 is done, the race is eliminated:

Now the write by thread 2 cannot happen at the same time as thread 1's operations.
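Here is a sketch of the synchronized version in Java. It assumes that a `volatile boolean a` stands in for the synchronization S(a), and the class name is hypothetical. Thread 1 sets `a` after its accesses, and thread 2 waits until it sees `a` before writing.

The busy-wait is only for illustration. Real code would more likely use a lock or a `java.util.concurrent` primitive.

```java
// Hypothetical example: S(a) forces thread 2's write to come after thread 1.
final class SynchronizedWrites {
    static int x;              // ordinary shared field
    static volatile boolean a; // synchronization variable playing the role of S(a)

    static int[] run() {
        x = 0;
        a = false;
        int[] r = new int[1];
        Thread t1 = new Thread(() -> {
            x = 1;    // W(x)
            r[0] = x; // R(x)
            a = true; // S(a): volatile write after thread 1's accesses
        });
        Thread t2 = new Thread(() -> {
            while (!a) { /* spin */ } // S(a): volatile read that observes thread 1
            x = 2;                    // W(x), now ordered after thread 1's accesses
        });
        t1.start();
        t2.start();
        joinAll(t1, t2);
        return new int[] { r[0], x };
    }

    // Waits for each thread; join() also orders the thread's writes before our reads.
    static void joinAll(Thread... ts) {
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
    }

    public static void main(String[] args) {
        int[] out = run();
        System.out.println(out[0] + " " + out[1]); // prints "1 2"
    }
}
```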

If thread 2 were only reading, we would only need to synchronize with thread 1's write. The two reads can still proceed concurrently:

Threads can be ordered by a sequence of synchronizations, even using an intermediate thread. This program has no race:
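A Java sketch of ordering through an intermediate thread follows. It assumes two `volatile` flags `a` and `b` as the synchronization variables, and all names are hypothetical. Thread 1 writes `x` and sets `a`. Thread 2 waits for `a` and then sets `b`. Thread 3 waits for `b` and reads `x`. The two synchronizations chain together, so the read is ordered after the write even though threads 1 and 3 never synchronize directly.

```java
// Hypothetical example: W(x) in thread 1 is ordered before R(x) in thread 3 via thread 2.
final class TransitiveSync {
    static int x;                 // ordinary shared field
    static volatile boolean a, b; // synchronization variables

    static int run() {
        x = 0;
        a = false;
        b = false;
        int[] r = new int[1];
        Thread t1 = new Thread(() -> {
            x = 1;    // W(x)
            a = true; // S(a)
        });
        Thread t2 = new Thread(() -> {
            while (!a) { /* spin */ } // S(a)
            b = true;                 // S(b)
        });
        Thread t3 = new Thread(() -> {
            while (!b) { /* spin */ } // S(b)
            r[0] = x;                 // R(x): guaranteed to see 1
        });
        t1.start();
        t2.start();
        t3.start();
        joinAll(t1, t2, t3);
        return r[0];
    }

    // Waits for each thread; join() also orders the thread's writes before our reads.
    static void joinAll(Thread... ts) {
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints 1
    }
}
```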

On the other hand, the use of synchronization variables does not by itself eliminate races: it is possible to use them incorrectly. This program does have a race:

Thread 2's read is properly synchronized with the writes in the other threads—it definitely happens after both—but the two writes are not themselves synchronized. This program is not data-race-free.
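Here is a Java sketch of this incorrectly synchronized program, with hypothetical names and `volatile` flags as the synchronization variables. Thread 2 waits for both `a` and `b` before reading. That wait orders its read after both writes, but nothing orders the two writes relative to each other.

As a result, the read may see either 1 or 2.

```java
// Hypothetical example: the read is synchronized with both writes, but the writes race.
final class UnsyncedWriters {
    static int x;                 // ordinary shared field
    static volatile boolean a, b; // synchronization variables

    static int run() {
        x = 0;
        a = false;
        b = false;
        int[] r = new int[1];
        Thread t1 = new Thread(() -> { x = 1; a = true; }); // W(x), then S(a)
        Thread t3 = new Thread(() -> { x = 2; b = true; }); // W(x), then S(b)
        Thread t2 = new Thread(() -> {
            while (!a) { /* spin */ } // S(a)
            while (!b) { /* spin */ } // S(b)
            r[0] = x;                 // R(x): after both writes, which race each other
        });
        t1.start();
        t2.start();
        t3.start();
        joinAll(t1, t2, t3);
        return r[0];
    }

    // Waits for each thread; join() also orders the thread's writes before our reads.
    static void joinAll(Thread... ts) {
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```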

Adve and Hill presented weak ordering as “a contract between software and hardware,” specifically that if software avoids data races, then hardware acts as if it is sequentially consistent, which is easier to reason about than the models we were examining in the earlier sections. But how can hardware satisfy its end of the contract?

Adve and Hill gave a proof that hardware “is weakly ordered by DRF,” meaning it executes data-race-free programs as if by a sequentially consistent ordering, provided it meets a certain set of minimum requirements. I’m not going to go through the details, but the point is that after the Adve and Hill paper, hardware designers had a cookbook recipe backed by a proof: do these things, and you can assert that your hardware will appear sequentially consistent to data-race-free programs. And in fact, most relaxed hardware did behave this way and has continued to do so, assuming appropriate implementations of the synchronization operations. Adve and Hill were concerned originally with the VAX, but certainly x86, ARM, and POWER can satisfy these constraints too. This idea that a system guarantees to data-race-free programs the appearance of sequential consistency is often abbreviated DRF-SC.

DRF-SC marked a turning point in hardware memory models, providing a clear strategy for both hardware designers and software authors, at least those writing software in assembly language. As we will see in the next post, the question of a memory model for a higher-level programming language does not have as neat and tidy an answer.

The next post in this series is about programming language memory models.

Programming Language Memory Models

Programming language memory models answer the question of what behaviors parallel programs can rely on to share memory between their threads. For example, consider this program in a C-like language, where both x and done start out zeroed.

// Thread 1
x = 1;
done = 1;

// Thread 2
while(done == 0) { /* loop */ }
print(x);

The program attempts to send a message in x from thread 1 to thread 2, using done as the signal that the message is ready to be received. If thread 1 and thread 2, each running on its own dedicated processor, both run to completion, is this program guaranteed to finish and print 1, as intended? The programming language memory model answers that question and others like it.

Although each programming language differs in the details, a few general answers are true of essentially all modern multithreaded languages, including C, C++, Go, Java, JavaScript, Rust, and Swift:

- First, if x and done are ordinary variables, then thread 2's loop may never stop. A common compiler optimization is to load a variable into a register at its first use and then reuse that register for future accesses to the variable, for as long as possible. If thread 2 copies done into a register before thread 1 executes, it may keep using that register for the entire loop, never noticing that thread 1 later modifies done.

- Second, even if thread 2's loop does stop, having observed done == 1, it may still print that x is 0. Compilers often reorder program reads and writes based on optimization heuristics or even just the way hash tables or other intermediate data structures end up being traversed while generating code. The compiled code for thread 1 may end up writing to x after done instead of before, or the compiled code for thread 2 may end up reading x before the loop.

Given how broken this program is, the obvious question is how to fix it.

Modern languages provide special functionality, in the form of atomic variables or atomic operations, to allow a program to synchronize its threads. If we make done an atomic variable (or manipulate it using atomic operations, in languages that take that approach), then our program is guaranteed to finish and to print 1. Making done atomic has many effects:

- The compiled code for thread 1 must make sure that the write to x completes and is visible to other threads before the write to done becomes visible.

- The compiled code for thread 2 must (re)read done on every iteration of the loop.

- The compiled code for thread 2 must read from x after the reads from done.

- The compiled code must do whatever is necessary to disable hardware optimizations that might reintroduce any of those problems.

The end result of making done atomic is that the program behaves as we want, successfully passing the value in x from thread 1 to thread 2.
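Here is a minimal Java sketch of the fixed program. The class name and `joinAll` helper are hypothetical. It uses `java.util.concurrent.atomic.AtomicInteger` as the atomic `done`. In Java, `set` and `get` on an `AtomicInteger` have the memory effects of writing and reading a `volatile` field, so they synchronize. Thread 2 re-reads `done` on every iteration, and once it sees 1 it is guaranteed to see `x == 1`.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical example: x carries the message, the atomic done signals it is ready.
final class MessagePassing {
    static int x;                                          // ordinary field
    static final AtomicInteger done = new AtomicInteger(); // synchronizing atomic

    static int run() {
        x = 0;
        done.set(0);
        int[] printed = new int[1];
        Thread t1 = new Thread(() -> {
            x = 1;       // write the message
            done.set(1); // publish it (volatile-write semantics)
        });
        Thread t2 = new Thread(() -> {
            while (done.get() == 0) { /* loop */ } // re-read on every iteration
            printed[0] = x;                        // guaranteed to see 1
        });
        t1.start();
        t2.start();
        joinAll(t1, t2);
        return printed[0];
    }

    // Waits for each thread; join() also orders the thread's writes before our reads.
    static void joinAll(Thread... ts) {
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints 1
    }
}
```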

In the original program, after the compiler's code reordering, thread 1 could be writing x at the same moment that thread 2 was reading it. This is a data race. In the revised program, the atomic variable done serves to synchronize access to x: it is now impossible for thread 1 to be writing x at the same moment that thread 2 is reading it. The program is data-race-free. In general, modern languages guarantee that data-race-free programs always execute in a sequentially consistent way, as if the operations from the different threads were interleaved, arbitrarily but without reordering, onto a single processor. This is the DRF-SC property from hardware memory models, adopted in the programming language context.

As an aside, these atomic variables or atomic operations would more properly be called “synchronizing atomics.” It's true that the operations are atomic in the database sense, allowing simultaneous reads and writes which behave as if run sequentially in some order: what would be a race on ordinary variables is not a race when using atomics. But it's even more important that the atomics synchronize the rest of the program, providing a way to eliminate races on the non-atomic data. The standard terminology is plain “atomic”, though, so that's what this post uses. Just remember to read “atomic” as “synchronizing atomic” unless noted otherwise.
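To illustrate the database-sense atomicity, here is a Java sketch with hypothetical names. Several threads increment a shared counter at the same time. With a plain `int` and `count++`, the increments would race and updates could be lost. With `AtomicInteger.incrementAndGet`, each increment is an indivisible read-modify-write, so the final count is exact.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical example: concurrent atomic increments do not race or lose updates.
final class AtomicCounter {
    static int count(int threads, int perThread) {
        AtomicInteger counter = new AtomicInteger();
        Thread[] ts = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            ts[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    counter.incrementAndGet(); // atomic read-modify-write
                }
            });
            ts[i].start();
        }
        joinAll(ts);
        return counter.get();
    }

    // Waits for each thread to finish.
    static void joinAll(Thread... ts) {
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(count(4, 10_000)); // prints 40000
    }
}
```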

The programming language memory model specifies the exact details of what is required from programmers and from compilers, serving as a contract between them. The general features sketched above are true of essentially all modern languages, but it is only recently that things have converged to this point: in the early 2000s, there was significantly more variation. Even today there is significant variation among languages on second-order questions, including:

- What are the ordering guarantees for atomic variables themselves?

- Can a variable be accessed by both atomic and non-atomic operations?

- Are there synchronization mechanisms besides atomics?

- Are there atomic operations that don't synchronize?

- Do programs with races have any guarantees at all?

After some preliminaries, the rest of this post examines how different languages answer these and related questions, along with the paths they took to get there. The post also highlights the many false starts along the way, to emphasize that we are still very much learning what works and what does not.

Hardware, Litmus Tests, Happens Before, and DRF-SC

Before we get to the details of any particular language, here is a brief summary of the lessons from hardware memory models that we will need to keep in mind.

Different architectures allow different amounts of reordering of instructions, so that code running in parallel on multiple processors can have different allowed results depending on the architecture. The gold standard is sequential consistency, in which any execution must behave as if the programs executed on the different processors were simply interleaved in some order onto a single processor. That model is much easier for developers to reason about, but no significant architecture provides it today, because of the performance gains enabled by weaker guarantees.

It is difficult to make completely general statements comparing different memory models. Instead, it can help to focus on specific test cases, called litmus tests. If two memory models allow different behaviors for a given litmus test, this proves they are different and usually helps us see whether, at least for that test case, one is weaker or stronger than the other. For example, here is the litmus test form of the program we examined earlier:

Litmus Test: Message Passing

Can this program see r1 = 1, r2 = 0?

// Thread 1
x = 1
y = 1

// Thread 2
r1 = y
r2 = x

On sequentially consistent hardware: no.

On x86 (or other TSO): no.

On ARM/POWER: yes!

In any modern compiled language using ordinary variables: yes!

As in the previous post, we assume every example starts with all shared variables set to zero. The name rN denotes private storage like a register or function-local variable; the other names like x and y are distinct, shared (global) variables. We ask whether a particular setting of registers is possible at the end of an execution. When answering the litmus test for hardware, we assume that there's no compiler to reorder what happens in the thread: the instructions in the listings are directly translated to assembly instructions given to the processor to execute.

The outcome r1 = 1, r2 = 0 corresponds to the original program's thread 2 finishing its loop (done is y) but then printing 0. This result is not possible in any sequentially consistent interleaving of the program operations. For an assembly language version, printing 0 is not possible on x86, although it is possible on more relaxed architectures like ARM and POWER due to reordering optimizations in the processors themselves. In a modern language, the reordering that can happen during compilation makes this outcome possible no matter what the underlying hardware.
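Here is a sketch of a small Java harness for this litmus test. The names are hypothetical. It runs the test many times with `x` and `y` declared `volatile`, which are Java's synchronizing atomics, and counts how often the outcome r1 = 1, r2 = 0 appears. With volatiles that outcome is forbidden. With ordinary fields it would be allowed, though a short run might never happen to show it.

A harness like this can only show that an outcome occurs, never prove that it cannot. OpenJDK's jcstress tool exists for running litmus tests like this far more thoroughly.

```java
// Hypothetical harness for the message-passing litmus test using volatile fields.
final class MessagePassingLitmus {
    static volatile int x, y; // volatile: synchronizing atomics in Java

    // Runs the test `trials` times; returns how often r1 == 1 && r2 == 0 was seen.
    static int countForbidden(int trials) {
        int seen = 0;
        for (int i = 0; i < trials; i++) {
            x = 0;
            y = 0;
            int[] r = new int[2];
            Thread t1 = new Thread(() -> { x = 1; y = 1; });       // Thread 1
            Thread t2 = new Thread(() -> { r[0] = y; r[1] = x; }); // Thread 2: r1 = y, r2 = x
            t1.start();
            t2.start();
            joinAll(t1, t2);
            if (r[0] == 1 && r[1] == 0) {
                seen++;
            }
        }
        return seen;
    }

    // Waits for each thread; join() also orders the thread's writes before our reads.
    static void joinAll(Thread... ts) {
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(countForbidden(1000)); // prints 0
    }
}
```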

Instead of guaranteeing sequential consistency, as we mentioned earlier, today's processors guarantee a property called “data-race-free sequential-consistency”, or DRF-SC (sometimes also written SC-DRF). A system guaranteeing DRF-SC must define specific instructions called synchronizing instructions, which provide a way to coordinate different processors (equivalently, threads). Programs use those instructions to create a “happens before” relationship between code running on one processor and code running on another.

For example, here is a depiction of a short execution of a program on two threads; as usual, each is assumed to be on its own dedicated processor:

We saw this program in the previous post too. Thread 1 and thread 2 execute a synchronizing instruction S(a). In this particular execution of the program, the two S(a) instructions establish a happens-before relationship from thread 1 to thread 2, so the W(x) in thread 1 happens before the R(x) in thread 2.

Two events on different processors that are not ordered by happens-before might occur at the same moment: the exact order is unclear. We say they execute concurrently. A data race is when a write to a variable executes concurrently with a read or another write of that same variable. Processors that provide DRF-SC (all of them, these days) guarantee that programs without data races behave as if they were running on a sequentially consistent architecture. This is the fundamental guarantee that makes it possible to write correct multithreaded assembly programs on modern processors.

As we saw earlier, DRF-SC is also the fundamental guarantee that modern languages have adopted to make it possible to write correct multithreaded programs in higher-level languages.

Compilers and Optimizations

We have mentioned a couple times that compilers might reorder the operations in the input program in the course of generating the final executable code. Let's take a closer look at that claim and at other optimizations that might cause problems.

It is generally accepted that a compiler can reorder ordinary reads from and writes to memory almost arbitrarily, provided the reordering cannot change the observed single-threaded execution of the code. For example, consider this program:

w = 1
x = 2
r1 = y
r2 = z

Since w, x, y, and z are all different variables, these four statements can be executed in any order deemed best by the compiler.

Litmus Test: Coherence

Can this program see r1 = 1, r2 = 2, r3 = 2, r4 = 1?

(Can Thread 3 see x = 1 before x = 2 while Thread 4 sees the reverse?)

// Thread 1
x = 1

// Thread 2
x = 2

// Thread 3
r1 = x
r2 = x

// Thread 4
r3 = x
r4 = x

On sequentially consistent hardware: no.

On x86 (or other TSO): no.

On ARM/POWER: no.

In any modern compiled language using ordinary variables: yes!

All modern hardware guarantees coherence, which can also be viewed as sequential consistency for the operations on a single memory location. In this program, one of the writes must overwrite the other, and the entire system has to agree about which is which. It turns out that, because of program reordering during compilation, modern languages do not even provide coherence.

Suppose the compiler reorders the two reads in thread 4, and then the instructions run as if interleaved in this order:

// Thread 1
(1) x = 1

// Thread 2
(4) x = 2

// Thread 3
(2) r1 = x
(5) r2 = x

// Thread 4 (reordered)
(3) r4 = x
(6) r3 = x

The result is r1 = 1, r2 = 2, r3 = 2, r4 = 1, which was impossible in the assembly programs but possible in high-level languages. In this sense, programming language memory models are all weaker than the most relaxed hardware memory models.

But there are some guarantees. Everyone agrees on the need to provide DRF-SC, which disallows optimizations that introduce new reads or writes, even if those optimizations would have been valid in single-threaded code.

For example, consider this code:

if(c) {
    x++;
} else {
    ... lots of code ...
}

There’s an if statement with lots of code in the else and only an x++ in the if body. It might be cheaper to have fewer branches and eliminate the if body entirely. We can do that by running the x++ before the if and then adjusting with an x-- in the big else body if we were wrong. That is, the compiler might consider rewriting that code to:

x++;
if(!c) {
    x--;
    ... lots of code ...
}

Is this a safe compiler optimization? In a single-threaded program, yes. In a multithreaded program in which x is shared with another thread when c is false, no: the optimization would introduce a race on x that was not present in the original program.
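Here is a hypothetical Java rendering of the two versions of the if/else code. The class and method names are illustrative only. Run single-threaded, the two versions always leave `x` with the same final value, which is why the rewrite looks valid. On the `c == false` path, however, the rewritten version performs two writes to `x` that the original never made. Another thread that accesses `x` concurrently could observe the temporary value or have its own update lost.

```java
// Hypothetical illustration of the if/else rewrite.
final class SpeculativeIncrement {
    static int x; // shared with another thread in the multithreaded scenario

    // Original: x is written only when c is true.
    static void original(boolean c) {
        if (c) {
            x++;
        } else {
            // ... lots of code that never touches x ...
        }
    }

    // Rewritten: x is always incremented, then decremented again when c is false.
    // On the c == false path this adds two writes to x that the original never made.
    static void rewritten(boolean c) {
        x++;
        if (!c) {
            x--;
            // ... lots of code that never touches x ...
        }
    }

    // Runs one version single-threaded, starting from the given value of x.
    static int finalValue(boolean c, boolean useRewritten, int start) {
        x = start;
        if (useRewritten) {
            rewritten(c);
        } else {
            original(c);
        }
        return x;
    }

    public static void main(String[] args) {
        for (boolean c : new boolean[] { true, false }) {
            System.out.println(c + ": " + finalValue(c, false, 5) + " vs " + finalValue(c, true, 5));
        }
        // prints "true: 6 vs 6" then "false: 5 vs 5"
    }
}
```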

This example is derived from one in Hans Boehm's 2004 paper, “Threads Cannot Be Implemented As a Library,” which makes the case that languages cannot be silent about the semantics of multithreaded execution.

The programming language memory model is an attempt to precisely answer these questions about which optimizations are allowed and which are not. By examining the history of attempts at writing these models over the past couple decades, we can learn what worked and what didn't, and get a sense of where things are headed.

Original Java Memory Model (1996)

The first flaw was that volatile atomic variables were non-synchronizing, so they did not help eliminate races in the rest of the program. The Java version of the message passing program we saw above would be:

int x;
volatile int done;

// Thread 1
x = 1;
done = 1;

// Thread 2
while(done == 0) { /* loop */ }
print(x);

Because done is declared volatile, the loop is guaranteed to finish: the compiler cannot cache it in a register and cause an infinite loop. However, the program is not guaranteed to print 1. The compiler was not prohibited from reordering the accesses to x and done, nor was it required to prohibit the hardware from doing the same.

Because Java volatiles were non-synchronizing atomics, you could not use them to build new synchronization primitives. In this sense, the original Java memory model was too weak.

Coherence is incompatible with compiler optimizations

The original Java memory model was also too strong: mandating coherence (once a thread had read a new value of a memory location, it could not appear to later read the old value) disallowed basic compiler optimizations. Earlier we looked at how reordering reads would break coherence, but you might think, well, just don't reorder reads. Here's a more subtle way coherence might be broken by another optimization: common subexpression elimination.

Consider this Java program:

// p and q may or may not point at the same object.
int i = p.x;
// ... maybe another thread writes p.x at this point ...
int j = q.x;
int k = p.x;

In this program, common subexpression elimination would notice that p.x is computed twice and optimize the final line to k = i. But if p and q pointed to the same object and another thread wrote to p.x between the reads into i and j, then reusing the old value i for k violates coherence: the read into i saw an old value, the read into j saw a newer value, but then the read into k reusing i would once again see the old value. Not being able to optimize away redundant reads would hobble most compilers, making the generated code slower.
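To make the incoherence concrete without depending on thread timing, here is a hypothetical Java sketch. The "other thread" is simulated by a callback that runs between the first two reads, which makes the interleaving deterministic. `original` performs all three loads as written. `afterCse` reuses `i` for `k`, as common subexpression elimination would. With `p == q`, the original returns 0, 5, 5, while the optimized version returns 0, 5, 0. The final read goes back to the old value after a newer one was already observed.

```java
// Hypothetical sketch: simulating how CSE can break coherence when p and q alias.
final class CseCoherence {
    static final class Point { int x; }

    // Loads exactly as written in the source program.
    static int[] original(Point p, Point q, Runnable otherThread) {
        int i = p.x;
        otherThread.run(); // stand-in for "another thread writes p.x at this point"
        int j = q.x;
        int k = p.x;
        return new int[] { i, j, k };
    }

    // After common subexpression elimination: the second load of p.x reuses i.
    static int[] afterCse(Point p, Point q, Runnable otherThread) {
        int i = p.x;
        otherThread.run();
        int j = q.x;
        int k = i; // CSE: p.x treated as already computed
        return new int[] { i, j, k };
    }

    public static void main(String[] args) {
        Point p = new Point();
        int[] a = original(p, p, () -> p.x = 5);
        Point p2 = new Point();
        int[] b = afterCse(p2, p2, () -> p2.x = 5);
        System.out.println(a[0] + " " + a[1] + " " + a[2]); // prints "0 5 5"
        System.out.println(b[0] + " " + b[1] + " " + b[2]); // prints "0 5 0"
    }
}
```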

Coherence is easier for hardware to provide than for compilers because hardware can apply dynamic optimizations: it can adjust the optimization paths based on the exact addresses involved in a given sequence of memory reads and writes. In contrast, compilers can only apply static optimizations: they have to write out, ahead of time, an instruction sequence that will be correct no matter what addresses and values are involved. In the example, the compiler cannot easily change what happens based on whether p and q happen to point to the same object, at least not without writing out code for both possibilities, leading to significant time and space overheads. The compiler's incomplete knowledge about the possible aliasing between memory locations means that actually providing coherence would require giving up fundamental optimizations.

Bill Pugh identified this and other problems in his 1999 paper “Fixing the Java Memory Model.”

New Java Memory Model (2004)

Because of these problems, and because the original Java Memory Model was difficult even for experts to understand, Pugh and others started an effort to define a new memory model for Java. That model became JSR-133 and was adopted in Java 5.0, released in 2004. The canonical reference is “The Java Memory Model” (2005), by Jeremy Manson, Bill Pugh, and Sarita Adve, with additional details in Manson's Ph.D. thesis. The new model follows the DRF-SC approach: Java programs that are data-race-free are guaranteed to execute in a sequentially consistent manner.

Synchronizing atomics and other operations

As we saw earlier, to write a data-race-free program, programmers need synchronization operations that can establish happens-before edges to ensure that one thread does not write a non-atomic variable concurrently with another thread reading or writing it. In Java, the main synchronization operations are:

- The creation of a thread happens before the first action in the thread.

- An unlock of mutex m happens before any subsequent lock of m.

- A write to volatile variable v happens before any subsequent read of v.

What does “subsequent” mean? Java defines that all lock, unlock, and volatile variable accesses behave as if they occurred in some sequentially consistent interleaving, giving a total order over all those operations in the entire program. “Subsequent” means later in that total order. That is: the total order over lock, unlock, and volatile variable accesses defines the meaning of subsequent, then subsequent defines which happens-before edges are created by a particular execution, and then the happens-before edges define whether that particular execution had a data race. If there is no race, then the execution behaves in a sequentially consistent manner.
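Here is a Java sketch that exercises each of the three rules above. The names are hypothetical, and a `synchronized` block serves as the mutex m (locked on entry, unlocked on exit). The fields `a`, `b`, and `c` are ordinary, yet each read below is ordered after the corresponding write by one of the rules.

- The new thread reads `a` after `start()`.
- The main thread reads `b` inside a later lock of `m` that observes `ready`.
- The main thread reads `c` after a volatile read of `v` that observes the volatile write.

```java
// Hypothetical example: one happens-before edge from each Java rule.
final class HappensBeforeRules {
    static int a, b, c;                   // ordinary (non-volatile) fields
    static boolean ready;                 // guarded by m
    static final Object m = new Object(); // Java monitor used as mutex m
    static volatile boolean v;            // volatile variable v

    static int[] run() {
        a = 1; b = 0; c = 0; ready = false; v = false;
        int[] seen = new int[1];
        Thread t = new Thread(() -> {
            seen[0] = a;       // rule 1: thread creation happens before this
            synchronized (m) { // rule 2: unlock at the end of this block...
                b = 2;
                ready = true;
            }
            c = 3;
            v = true;          // rule 3: volatile write
        });
        t.start();
        int seenB;
        while (true) {
            synchronized (m) { // ...happens before this later lock of m
                if (ready) { seenB = b; break; }
            }
        }
        while (!v) { /* spin */ } // volatile read that observes v == true
        int seenC = c;            // ordered after c = 3 by rule 3
        joinAll(t);
        return new int[] { seen[0], seenB, seenC };
    }

    // Waits for each thread; join() also orders the thread's writes before our reads.
    static void joinAll(Thread... ts) {
        for (Thread th : ts) {
            try {
                th.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
    }

    public static void main(String[] args) {
        int[] s = run();
        System.out.println(s[0] + " " + s[1] + " " + s[2]); // prints "1 2 3"
    }
}
```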

The fact that the volatile accesses must act as if in some total ordering means that in the store buffer litmus test, you can’t end up with r1 = 0 and r2 = 0:

Litmus Test: Store Buffering

Can this program see r1 = 0, r2 = 0?

// Thread 1
x = 1
r1 = y

// Thread 2
y = 1
r2 = x

On sequentially consistent hardware: no.

On x86 (or other TSO): yes!

On ARM/POWER: yes!

On Java using volatiles: no.

In Java, for volatile variables x and y, the reads and writes cannot be reordered: one write has to come second, and the read that follows the second write must see the first write. If we didn’t have the sequentially consistent requirement—if, say, volatiles were only required to be coherent—the two reads could miss the writes.
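Here is a sketch of a Java harness for the store buffering test, with hypothetical names. It repeats the test many times with volatile `x` and `y` and counts outcomes where both reads return 0. Because volatile accesses behave as if in a single sequentially consistent order, that count should stay at 0. On x86 or ARM the JVM has to emit a fence between each volatile store and the following volatile load to guarantee this.

As with any such harness, a zero count is evidence, not proof.

```java
// Hypothetical harness for the store-buffering litmus test using volatile fields.
final class StoreBufferingLitmus {
    static volatile int x, y;

    // Runs the test `trials` times; returns how often r1 == 0 && r2 == 0 was seen.
    static int countBothZero(int trials) {
        int seen = 0;
        for (int i = 0; i < trials; i++) {
            x = 0;
            y = 0;
            int[] r = new int[2];
            Thread t1 = new Thread(() -> { x = 1; r[0] = y; }); // Thread 1: x = 1, r1 = y
            Thread t2 = new Thread(() -> { y = 1; r[1] = x; }); // Thread 2: y = 1, r2 = x
            t1.start();
            t2.start();
            joinAll(t1, t2);
            if (r[0] == 0 && r[1] == 0) {
                seen++;
            }
        }
        return seen;
    }

    // Waits for each thread; join() also orders the thread's writes before our reads.
    static void joinAll(Thread... ts) {
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(countBothZero(1000)); // prints 0
    }
}
```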

There is an important but subtle point here: the total order over all the synchronizing operations is separate from the happens-before relationship. It is not true that there is a happens-before edge in one direction or the other between every lock, unlock, or volatile variable access in a program: you only get a happens-before edge from a write to a read that observes the write. For example, a lock and unlock of different mutexes have no happens-before edges between them, nor do volatile accesses of different variables, even though collectively these operations must behave as if following a single sequentially consistent interleaving.

Semantics for racy programs

DRF-SC only guarantees sequentially consistent behavior to programs with no data races. The new Java memory model, like the original, defined the behavior of racy programs, for a number of reasons:

- To support Java’s general security and safety guarantees.

- To make it easier for programmers to find mistakes.

- To make it harder for attackers to exploit problems, because the damage possible due to a race is more limited.

- To make it clearer to programmers what their programs do.

Instead of relying on coherence, the new model reused the happens-before relation (already used to decide whether a program had a race at all) to decide the outcome of racing reads and writes.

The specific rules for Java are that for word-sized or smaller variables, a read of a variable (or field) x must see the value stored by some single write to x. A write w to x can be observed by a read r provided r does not happen before w. That means r can observe writes that happen before r (but that aren’t also overwritten before r), and it can observe writes that race with r.

Using happens-before in this way, combined with synchronizing atomics (volatiles) that could establish new happens-before edges, was a major improvement over the original Java memory model. It provided more useful guarantees to the programmer, and it made a large number of important compiler optimizations definitively allowed. This work is still the memory model for Java today. That said, it’s also still not quite right: there are problems with this use of happens-before for trying to define the semantics of racy programs.

Happens-before does not rule out incoherence

The first problem with happens-before for defining program semantics has to do with coherence (again!). (The following example is taken from Jaroslav Ševčík and David Aspinall's paper, “On the Validity of Program Transformations in the Java Memory Model” (2007).)

Here’s a program with three threads. Let’s assume that Thread 1 and Thread 2 are known to finish before Thread 3 starts.

    // Thread 1        // Thread 2        // Thread 3
    lock(m1)           lock(m2)
    x = 1              x = 2
    unlock(m1)         unlock(m2)
                                          lock(m1)
                                          lock(m2)
                                          r1 = x
                                          r2 = x
                                          unlock(m2)
                                          unlock(m1)

Thread 1 writes x = 1 while holding mutex m1. Thread 2 writes x = 2 while holding mutex m2. Those are different mutexes, so the two writes race. However, only thread 3 reads x, and it does so after acquiring both mutexes. The read into r1 can read either write: both happen before it, and neither definitively overwrites the other. By the same argument, the read into r2 can read either write. But strictly speaking, nothing in the Java memory model says the two reads have to agree: technically, this program can end with r1 and r2 holding different values. Of course, no real implementation is going to produce different r1 and r2. Mutual exclusion means no writes can happen between those two reads, so they have to get the same value. But the fact that the memory model allows different reads shows that it is, in a certain technical way, not precisely describing real Java implementations.
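For comparison, here is a hypothetical C++ rendering of the same three threads; the function name `run_mutex_example` is made up for this sketch. With a plain `int x`, the writes by threads 1 and 2 would be a data race and therefore undefined behavior in C++, so x is a relaxed `std::atomic<int>`. C++ atomics, even relaxed ones, are coherent per variable. Both writes happen before thread 3's reads, so both reads must return whichever write is later in x's modification order. The two reads therefore always agree, which is exactly the guarantee the Java rule for plain variables leaves out.

```cpp
#include <atomic>
#include <cassert>
#include <mutex>
#include <thread>
#include <utility>

// Relaxed atomic: the racing writes are well-defined in C++.
std::atomic<int> x{0};
std::mutex m1, m2;

std::pair<int, int> run_mutex_example() {
    x.store(0, std::memory_order_relaxed);
    std::thread t1([] {
        std::lock_guard<std::mutex> g(m1);
        x.store(1, std::memory_order_relaxed);
    });
    std::thread t2([] {
        std::lock_guard<std::mutex> g(m2);
        x.store(2, std::memory_order_relaxed);
    });
    // As in the text, threads 1 and 2 finish before thread 3 starts.
    t1.join();
    t2.join();
    int r1 = 0, r2 = 0;
    std::thread t3([&] {
        std::lock_guard<std::mutex> g1(m1);
        std::lock_guard<std::mutex> g2(m2);
        // Coherence: both reads see the last write in x's modification order.
        r1 = x.load(std::memory_order_relaxed);
        r2 = x.load(std::memory_order_relaxed);
    });
    t3.join();
    return {r1, r2};
}
```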

The situation gets worse. What if we add one more instruction, x = r1, between the two reads:

    // Thread 1        // Thread 2        // Thread 3
    lock(m1)           lock(m2)
    x = 1              x = 2
    unlock(m1)         unlock(m2)
                                          lock(m1)
                                          lock(m2)
                                          r1 = x
                                          x = r1  // !?
                                          r2 = x
                                          unlock(m2)
                                          unlock(m1)

Now the r2 = x read must use the value written by x = r1. The writes by threads 1 and 2 both happen before x = r1, which in turn happens before r2 = x, so x = r1 is the only write that read can observe. The two values r1 and r2 are now guaranteed to be equal.

The difference between these two programs means we have a problem for compilers. A compiler that sees r1 = x followed by x = r1 may well want to delete the second assignment, which is “clearly” redundant. But that “optimization” changes the second program, which must see the same values in r1 and r2, into the first program, which technically can have r1 different from r2. Therefore, according to the Java Memory Model, this optimization is technically invalid: it changes the meaning of the program. To be clear, this optimization would not change the meaning of Java programs executing on any real JVM you can imagine. But somehow the Java Memory Model doesn’t allow it, suggesting there’s more that needs to be said.

For more about this example and others, see Ševčík and Aspinall's paper.

Happens-before does not rule out acausality

That last example turns out to have been the easy problem. Here’s a harder problem. Consider this litmus test, using ordinary (not volatile) Java variables:

Litmus Test: Racy Out Of Thin Air Values

Can this program see r1 = 42, r2 = 42?

    // Thread 1        // Thread 2
    r1 = x             r2 = y
    y = r1             x = r2

(Obviously not!)

All the variables in this program start out zeroed, as always, and then this program effectively runs y = x in one thread and x = y in the other thread. Can x and y end up being 42? In real life, obviously not. But why not? The memory model turns out not to disallow this result.

Suppose hypothetically that “r1 = x” did read 42. Then “y = r1” would write 42 to y, and then the racing “r2 = y” could read 42, causing the “x = r2” to write 42 to x, and that write races with (and is therefore observable by) the original “r1 = x,” appearing to justify the original hypothetical. In this example, 42 is called an out-of-thin-air value, because it appeared without any justification but then justified itself with circular logic. What if the memory had formerly held a 42 before its current 0, and the hardware incorrectly speculated that it was still 42? That speculation might become a self-fulfilling prophecy. (This argument seemed more far-fetched before Spectre and related attacks showed just how aggressively hardware speculates. Even so, no hardware invents out-of-thin-air values this way.)

It seems clear that this program cannot end with r1 and r2 set to 42, but happens-before doesn’t by itself explain why this can’t happen. That suggests again that there’s a certain incompleteness. The new Java Memory Model spends a lot of time addressing this incompleteness, about which more shortly.
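A C++ version of this litmus test can use relaxed atomics, which keeps it free of undefined behavior. The sketch below runs the test repeatedly and counts executions in which either register ends up nonzero; the function name `count_thin_air_runs` is hypothetical. As far as I know, the C++ standard also lacks a precise rule excluding 42 here. It only recommends that implementations not compute out-of-thin-air values that circularly depend on their own computation. A count of zero on real hardware is therefore evidence about that hardware, not a proof about the model.

```cpp
#include <atomic>
#include <cassert>
#include <thread>

std::atomic<int> x{0}, y{0};

// Runs the racy litmus test `iterations` times and returns how many runs
// ended with a nonzero r1 or r2 (a value no thread ever stored).
int count_thin_air_runs(int iterations) {
    int suspicious = 0;
    for (int i = 0; i < iterations; ++i) {
        x.store(0, std::memory_order_relaxed);
        y.store(0, std::memory_order_relaxed);
        int r1 = 0, r2 = 0;
        std::thread t1([&] {
            r1 = x.load(std::memory_order_relaxed);  // r1 = x
            y.store(r1, std::memory_order_relaxed);  // y = r1
        });
        std::thread t2([&] {
            r2 = y.load(std::memory_order_relaxed);  // r2 = y
            x.store(r2, std::memory_order_relaxed);  // x = r2
        });
        t1.join();
        t2.join();
        if (r1 != 0 || r2 != 0) ++suspicious;
    }
    return suspicious;
}
```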

This program has a race—the reads of x and y are racing against writes in the other threads—so we might fall back on arguing that it's an incorrect program. But here is a version that is data-race-free:

Litmus Test: Non-Racy Out Of Thin Air Values

Can this program see r1 = 42, r2 = 42?

    // Thread 1        // Thread 2
    r1 = x             r2 = y
    if (r1 == 42)      if (r2 == 42)
        y = r1             x = r2

(Obviously not!)

Since x and y start out zero, any sequentially consistent execution is never going to execute the writes, so this program has no writes, so there are no races. Once again, though, happens-before alone does not exclude the possibility that, hypothetically, r1 = x sees the racing not-quite-write, and then following from that hypothetical, the conditions both end up true and x and y are both 42 at the end. This is another kind of out-of-thin-air value, but this time in a program with no race. Any model guaranteeing DRF-SC must guarantee that this program only sees all zeros at the end, yet happens-before doesn't explain why.
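Because this program is data-race-free, it can be written in C++ with plain `int` variables and no atomics at all. The sketch below runs it repeatedly; the function name `run_guarded_litmus` is hypothetical. C++ promises DRF-SC, so an implementation must end every run with x, y, r1, and r2 all zero.

```cpp
#include <cassert>
#include <thread>

// Plain, non-atomic variables. In every sequentially consistent execution
// neither guarded write runs, so there is no data race.
int x = 0, y = 0;

// Returns true if the run ended with everything still zero.
bool run_guarded_litmus() {
    x = 0;
    y = 0;
    int r1 = 0, r2 = 0;
    std::thread t1([&] {
        r1 = x;
        if (r1 == 42) y = r1;
    });
    std::thread t2([&] {
        r2 = y;
        if (r2 == 42) x = r2;
    });
    t1.join();
    t2.join();
    return x == 0 && y == 0 && r1 == 0 && r2 == 0;
}
```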

The Java memory model spends a lot of words that I won’t go into to try to exclude these kinds of acausal hypotheticals. Unfortunately, five years later, Sarita Adve and Hans Boehm had this to say about that work:

Prohibiting such causality violations in a way that does not also prohibit other desired optimizations turned out to be surprisingly difficult. … After many proposals and five years of spirited debate, the current model was approved as the best compromise. … Unfortunately, this model is very complex, was known to have some surprising behaviors, and has recently been shown to have a bug.

(Adve and Boehm, “Memory Models: A Case For Rethinking Parallel Languages and Hardware,” August 2010)

C++11 Memory Model (2011)

Let’s put Java to the side and examine C++. Inspired by the apparent success of Java's new memory model, many of the same people set out to define a similar memory model for C++, eventually adopted in C++11. Compared to Java, C++ deviated in two important ways. First, C++ makes no guarantees at all for programs with data races, which would seem to remove the need for much of the complexity of the Java model. Second, C++ provides three kinds of atomics: strong synchronization (“sequentially consistent”), weak synchronization (“acquire/release”, coherence-only), and no synchronization (“relaxed”, for hiding races). The relaxed atomics reintroduced all of Java's complexity about defining the meaning of what amount to racy programs. The result is that the C++ model is more complicated than Java's yet less helpful to programmers.
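The three kinds of C++ atomics can be sketched side by side. This is an illustrative example with made-up function names, not code from the C++ memory model papers. Release/acquire publishes a plain variable through a flag. Default (sequentially consistent) operations forbid the store-buffering outcome r1 == r2 == 0. Relaxed operations provide atomicity without ordering, which is enough for a counter that is read only after all threads are joined.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// Acquire/release: the release store of `ready` publishes the plain
// write to `data` to whoever acquire-loads `ready` as true.
int message_passing() {
    int data = 0;
    std::atomic<bool> ready{false};
    int seen = -1;
    std::thread producer([&] {
        data = 42;
        ready.store(true, std::memory_order_release);
    });
    std::thread consumer([&] {
        while (!ready.load(std::memory_order_acquire)) {
        }
        seen = data;  // guaranteed to read 42
    });
    producer.join();
    consumer.join();
    return seen;
}

// Sequentially consistent (the default): all four operations fall into one
// total order, so r1 == 0 && r2 == 0 is impossible.
bool store_buffering_sc() {
    std::atomic<int> a{0}, b{0};
    int r1 = -1, r2 = -1;
    std::thread t1([&] { a.store(1); r1 = b.load(); });
    std::thread t2([&] { b.store(1); r2 = a.load(); });
    t1.join();
    t2.join();
    return !(r1 == 0 && r2 == 0);
}

// Relaxed: each fetch_add is atomic, but nothing else is ordered.
int relaxed_counter(int threads, int per_thread) {
    std::atomic<int> counter{0};
    std::vector<std::thread> workers;
    for (int t = 0; t < threads; ++t) {
        workers.emplace_back([&counter, per_thread] {
            for (int i = 0; i < per_thread; ++i)
                counter.fetch_add(1, std::memory_order_relaxed);
        });
    }
    for (auto& w : workers) w.join();  // join() orders the increments before the load
    return counter.load(std::memory_order_relaxed);
}
```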

C++11 also defined atomic fences as an alternative to atomic variables, but they are not as commonly used and I'm not going to discuss them.

DRF-SC or Catch Fire

Unlike Java, C++ gives no guarantees to programs with races. Any program with a race anywhere in it falls into “undefined behavior.” A racing access in the first microseconds of program execution is allowed to cause arbitrary errant behavior hours or days later. This is often called “DRF-SC or Catch Fire”: if the program is data-race free it runs in a sequentially consistent manner, and if not, it can do anything at all, including catch fire.
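Here is a small illustration of the data-race-free side of this bargain, using only the standard library; the function name `count_with_mutex` is hypothetical. Every access to `counter` happens while holding a mutex, so the program is race-free and runs as if sequentially consistent. Removing the lock would make `++counter` a data race. Under DRF-SC or Catch Fire, the result would then be undefined behavior for the whole program, not merely a lost update.

```cpp
#include <cassert>
#include <mutex>
#include <thread>
#include <vector>

long count_with_mutex(int threads, int per_thread) {
    long counter = 0;
    std::mutex mu;
    std::vector<std::thread> workers;
    for (int t = 0; t < threads; ++t) {
        workers.emplace_back([&] {
            for (int i = 0; i < per_thread; ++i) {
                std::lock_guard<std::mutex> guard(mu);  // without this: data race, UB
                ++counter;
            }
        });
    }
    for (auto& w : workers) w.join();
    return counter;  // safe: join() orders every increment before this read
}
```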

For a longer presentation of the arguments for DRF-SC or Catch Fire, see Boehm, “Memory Model Rationales” (2007) and Boehm and Adve, “Foundations of the C++ Concurrency Memory Model” (2008).

Briefly, there are four common justifications for this position:

- C and C++ are already rife with undefined behavior, corners of the language where compiler optimizations run wild and users had better not wander or else. What's the harm in one more?

- Existing compilers and libraries were written with no regard to threads, breaking racy programs in arbitrary ways. It would be too difficult to find and fix all the problems, or so the argument goes, although it is unclear how those unfixed compilers and libraries are meant to cope with relaxed atomics.

- Programmers who really know what they are doing and want to avoid undefined behavior can use the relaxed atomics.

- Leaving race semantics undefined allows an implementation to detect and diagnose races and stop execution.

Personally, the last justification is the only one I find compelling, although I observe that it is possible to say “race detectors are allowed” without also saying “one race on an integer can invalidate your entire program.”

Here is an example from “Memory Model Rationales” that I think captures the essence of the C++ approach as well as its problems. Consider this program, which refers to a global variable x.

    unsigned i = x;
    if (i < 2) {
        foo: ...
        switch (i) {
        case 0:
            ...;
            break;
        case 1:
            ...;
            break;
        }
    }

The claim is that a C++ compiler might be holding i in a register but then need to reuse the registers if the code at label foo is complex. Rather than spill the current value of i to the function stack, the compiler might instead decide to load i a second time from the global x upon reaching the switch statement. The result is that, halfway through the if body, i < 2 may stop being true. If the compiler did something like compiling the switch into a computed jump using a table indexed by i, that code would index off the end of the table and jump to an unexpected address, which could be arbitrarily bad.

From this example and others like it, the C++ memory model authors conclude that any racy access must be allowed to cause unbounded damage to the future execution of the program. Personally, I conclude instead that in a multithreaded program, compilers should not assume that they can reload a local variable like i by re-executing the memory read that initialized it. It may well have been impractical to expect existing C++ compilers, written for a single-threaded world, to find and fix code generation problems like this one, but in new languages, I think we should aim higher.
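In today's C++, a programmer who wants i to be a true snapshot can make the shared variable atomic. The sketch below is a hypothetical rewrite of the example, with stand-in return values for the elided case bodies. As I understand the C++ rules, a compiler may not replace later uses of i with a second load of an atomic x, because that second load could observe a value the program never read. So i < 2 stays true inside the if body. This is a source-level workaround, not the compiler behavior argued for above.

```cpp
#include <atomic>
#include <cassert>

// Shared with other threads; atomic so that reading it concurrently is not UB.
std::atomic<unsigned> x{0};

int dispatch() {
    // One atomic load: i is a snapshot the compiler cannot silently re-read.
    unsigned i = x.load(std::memory_order_relaxed);
    if (i < 2) {
        // foo: ... (complex code that may need the register holding i)
        switch (i) {  // a jump table indexed by i stays in bounds
        case 0:
            return 100;  // stand-in for the first elided body
        case 1:
            return 101;  // stand-in for the second elided body
        }
    }
    return -1;
}
```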

Digression: Undefined behavior in C and C++