VMA: anonymous virtual memory area

Memory mapping — The Linux Kernel documentation https://linux-kernel-labs.github.io/refs/heads/master/labs/memory_mapping.html

SO2 Lecture 07 - Memory Management — The Linux Kernel documentation https://linux-kernel-labs.github.io/refs/heads/master/so2/lec7-memory-management.html

Virtual address space - Wikipedia https://en.wikipedia.org/wiki/Virtual_address_space

https://zh.wikipedia.org/wiki/虚拟地址

Virtual memory - Wikipedia https://en.wikipedia.org/wiki/Virtual_memory

https://zh.wikipedia.org/wiki/虚拟内存

Virtual address space

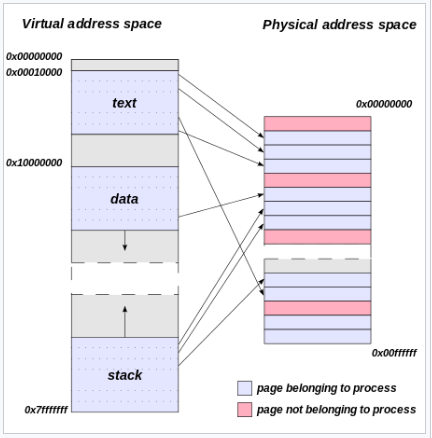

In computing, a virtual address space (VAS) or address space is the set of ranges of virtual addresses that an operating system makes available to a process.[1] The range of virtual addresses usually starts at a low address and can extend to the highest address allowed by the computer's instruction set architecture and supported by the operating system's pointer size implementation, which can be 4 bytes for 32-bit or 8 bytes for 64-bit OS versions. This provides several benefits, one of which is security through process isolation assuming each process is given a separate address space.

Example

- In the following description, the terminology used will be particular to the Windows NT operating system, but the concepts are applicable to other virtual memory operating systems.

When a new application on a 32-bit OS is executed, the process has a 4 GiB VAS: each one of the memory addresses (from 0 to 2^32 − 1) in that space can have a single byte as a value. Initially, none of them have values ('-' represents no value). Using or setting values in such a VAS would cause a memory exception.

               0                                          4 GiB
    VAS        |----------------------------------------------|

Then the application's executable file is mapped into the VAS. Addresses in the process VAS are mapped to bytes in the exe file. The OS manages the mapping:

               0                                          4 GiB
    VAS        |---vvv----------------------------------------|
    mapping        |||
    file bytes     app

The v's are values from bytes in the mapped file. Then, required DLL files are mapped (this includes custom libraries as well as system ones such as kernel32.dll and user32.dll):

               0                                          4 GiB
    VAS        |---vvv--------vvvvvv---vvvv-------------------|
    mapping        |||        ||||||   ||||
    file bytes     app        kernel   user

The process then starts executing bytes in the exe file. However, the only way the process can use or set '-' values in its VAS is to ask the OS to map them to bytes from a file. A common way to use VAS memory in this way is to map it to the page file. The page file is a single file, but multiple distinct sets of contiguous bytes can be mapped into a VAS:

               0                                          4 GiB
    VAS        |---vvv--------vvvvvv---vvvv----vv---v----vvv--|
    mapping        |||        ||||||   ||||    ||   |    |||
    file bytes     app        kernel   user    system_page_file

And different parts of the page file can map into the VAS of different processes:

               0                                          4 GiB
    VAS 1      |---vvvv-------vvvvvv---vvvv----vv---v----vvv--|
    mapping        ||||       ||||||   ||||    ||   |    |||
    file bytes     app1 app2  kernel   user    system_page_file
    mapping             ||||  ||||||   ||||       ||   |
    VAS 2      |--------vvvv--vvvvvv---vvvv-------vv---v------|

On Microsoft Windows 32-bit, by default, only 2 GiB are made available to processes for their own use.[2] The other 2 GiB are used by the operating system. On later 32-bit editions of Microsoft Windows it is possible to extend the user-mode virtual address space to 3 GiB while only 1 GiB is left for kernel-mode virtual address space by marking the programs as IMAGE_FILE_LARGE_ADDRESS_AWARE and enabling the /3GB switch in the boot.ini file.[3][4]

On Microsoft Windows 64-bit, in a process running an executable that was linked with /LARGEADDRESSAWARE:NO, the operating system artificially limits the user mode portion of the process's virtual address space to 2 GiB. This applies to both 32- and 64-bit executables.[5][6] Processes running executables that were linked with the /LARGEADDRESSAWARE:YES option, which is the default for 64-bit Visual Studio 2010 and later,[7] have access to more than 2 GiB of virtual address space: up to 4 GiB for 32-bit executables, up to 8 TiB for 64-bit executables in Windows through Windows 8, and up to 128 TiB for 64-bit executables in Windows 8.1 and later.[4][8]

Allocating memory via C's malloc establishes the page file as the backing store for any new virtual address space. However, a process can also explicitly map file bytes.
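The difference between memory with no file behind it and explicitly mapped file bytes can be sketched with Python's standard `mmap` module. This is an illustration only, not how `malloc` is implemented: the temporary file and the variable names are assumptions made for the example.

```python
import mmap
import os
import tempfile

PAGE = mmap.PAGESIZE

# Anonymous mapping: a file descriptor of -1 means no file backs these pages.
anon = mmap.mmap(-1, PAGE)
anon_initial = anon[:4]            # fresh anonymous pages read as zero bytes
anon[:5] = b"hello"
anon_after = anon[:5]
anon.close()

# File-backed mapping: addresses in the mapping correspond to bytes in the file.
fd, path = tempfile.mkstemp()      # hypothetical scratch file for the demo
try:
    os.write(fd, b"file bytes".ljust(PAGE, b"\0"))
    mapped = mmap.mmap(fd, PAGE)   # shared, read-write mapping by default
    file_view = mapped[:10]        # reading the mapping reads the file's bytes
    mapped[:4] = b"FILE"           # writing the mapping changes the file
    mapped.flush()
    mapped.close()
    os.lseek(fd, 0, os.SEEK_SET)
    on_disk = os.read(fd, 10)
finally:
    os.close(fd)
    os.unlink(path)

print(anon_initial, anon_after, file_view, on_disk)
```

The anonymous mapping starts out zero-filled and disappears when closed, while the change made through the file-backed mapping is visible when the file is read back.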

Linux

For x86 CPUs, Linux 32-bit allows splitting the user and kernel address ranges in different ways: 3G/1G user/kernel (default), 1G/3G user/kernel or 2G/2G user/kernel.[9]
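As a small worked example, the boundary between the user and kernel ranges for each split can be computed directly. The sketch assumes that user space occupies the low part of the 4 GiB address space and the kernel the high part; the function name is hypothetical.

```python
GIB = 1 << 30

def split_boundary(user_gib: int) -> int:
    """First kernel virtual address when the low `user_gib` GiB are user space."""
    return user_gib * GIB

for user_gib, kernel_gib in [(3, 1), (2, 2), (1, 3)]:
    boundary = split_boundary(user_gib)
    print(f"{user_gib}G/{kernel_gib}G split: kernel range starts at {boundary:#010x}")
```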

Notes

- [1] IBM Corporation. "What is an address space?". Retrieved August 24, 2013.

- [2] "Virtual Address Space". MSDN. Microsoft.

- [3] "LOADED_IMAGE structure". MSDN. Microsoft.

- [4] "4-Gigabyte Tuning: BCDEdit and Boot.ini". MSDN. Microsoft.

- [5] "/LARGEADDRESSAWARE (Handle Large Addresses)". MSDN. Microsoft.

- [6] "Virtual Address Space". MSDN. Microsoft.

- [7] "/LARGEADDRESSAWARE (Handle Large Addresses)". MSDN. Microsoft.

- [8] "/LARGEADDRESSAWARE (Handle Large Addresses)". MSDN. Microsoft.

- [9] "Linux kernel - x86: Memory split".

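In computing terminology, a virtual address (see "Virtual address space") is an address that identifies a virtual (non-physical) entity. The term is commonly used in the context of virtual memory and of virtual network addresses.
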
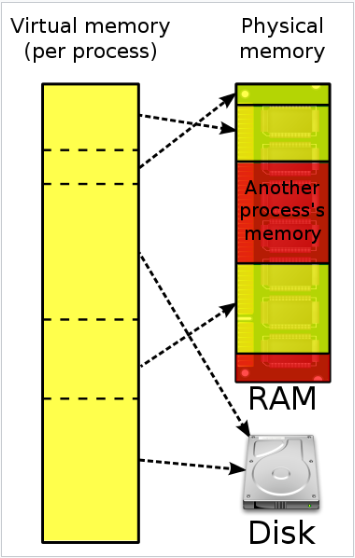

In computing, virtual memory, or virtual storage[b] is a memory management technique that provides an "idealized abstraction of the storage resources that are actually available on a given machine"[3] which "creates the illusion to users of a very large (main) memory".[4]

The computer's operating system, using a combination of hardware and software, maps memory addresses used by a program, called virtual addresses, into physical addresses in computer memory. Main storage, as seen by a process or task, appears as a contiguous address space or collection of contiguous segments. The operating system manages virtual address spaces and the assignment of real memory to virtual memory. Address translation hardware in the CPU, often referred to as a memory management unit (MMU), automatically translates virtual addresses to physical addresses. Software within the operating system may extend these capabilities, utilizing, e.g., disk storage, to provide a virtual address space that can exceed the capacity of real memory and thus reference more memory than is physically present in the computer.

The primary benefits of virtual memory include freeing applications from having to manage a shared memory space, ability to share memory used by libraries between processes, increased security due to memory isolation, and being able to conceptually use more memory than might be physically available, using the technique of paging or segmentation.

Properties

Virtual memory makes application programming easier by hiding fragmentation of physical memory; by delegating to the kernel the burden of managing the memory hierarchy (eliminating the need for the program to handle overlays explicitly); and, when each process is run in its own dedicated address space, by obviating the need to relocate program code or to access memory with relative addressing.

Memory virtualization can be considered a generalization of the concept of virtual memory.

Usage

Virtual memory is an integral part of a modern computer architecture; implementations usually require hardware support, typically in the form of a memory management unit built into the CPU. While not necessary, emulators and virtual machines can employ hardware support to increase performance of their virtual memory implementations.[5] Older operating systems, such as those for the mainframes of the 1960s, and those for personal computers of the early to mid-1980s (e.g., DOS),[6] generally have no virtual memory functionality, though notable exceptions for mainframes of the 1960s include:

- the Atlas Supervisor for the Atlas

- THE multiprogramming system for the Electrologica X8 (software based virtual memory without hardware support)

- MCP for the Burroughs B5000

- MTS, TSS/360 and CP/CMS for the IBM System/360 Model 67

- Multics for the GE 645

- The Time Sharing Operating System for the RCA Spectra 70/46

During the 1960s and early '70s, computer memory was very expensive. The introduction of virtual memory provided an ability for software systems with large memory demands to run on computers with less real memory. The savings from this provided a strong incentive to switch to virtual memory for all systems. The additional capability of providing virtual address spaces added another level of security and reliability, thus making virtual memory even more attractive to the marketplace.

Most modern operating systems that support virtual memory also run each process in its own dedicated address space. Each program thus appears to have sole access to the virtual memory. However, some older operating systems (such as OS/VS1 and OS/VS2 SVS) and even modern ones (such as IBM i) are single address space operating systems that run all processes in a single address space composed of virtualized memory.

Embedded systems and other special-purpose computer systems that require very fast and/or very consistent response times may opt not to use virtual memory due to decreased determinism; virtual memory systems trigger unpredictable traps that may produce unwanted and unpredictable delays in response to input, especially if the trap requires that data be read into main memory from secondary memory. The hardware to translate virtual addresses to physical addresses typically requires a significant chip area to implement, and not all chips used in embedded systems include that hardware, which is another reason some of those systems don't use virtual memory.

History

In the 1940s and 1950s, all larger programs had to contain logic for managing primary and secondary storage, such as overlaying. Virtual memory was therefore introduced not only to extend primary memory, but to make such an extension as easy as possible for programmers to use.[7] To allow for multiprogramming and multitasking, many early systems divided memory between multiple programs without virtual memory, such as early models of the PDP-10 via registers.

A claim that the concept of virtual memory was first developed by German physicist Fritz-Rudolf Güntsch at the Technische Universität Berlin in 1956 in his doctoral thesis, Logical Design of a Digital Computer with Multiple Asynchronous Rotating Drums and Automatic High Speed Memory Operation[8][9] does not stand up to careful scrutiny. The computer proposed by Güntsch (but never built) had an address space of 10^5 words which mapped exactly on to the 10^5 words of the drums, i.e. the addresses were real addresses and there was no form of indirect mapping, a key feature of virtual memory. What Güntsch did invent was a form of cache memory, since his high-speed memory was intended to contain a copy of some blocks of code or data taken from the drums. Indeed, he wrote (as quoted in translation[10]): “The programmer need not respect the existence of the primary memory (he need not even know that it exists), for there is only one sort of addresses (sic) by which one can program as if there were only one storage.” This is exactly the situation in computers with cache memory, one of the earliest commercial examples of which was the IBM System/360 Model 85.[11] In the Model 85 all addresses were real addresses referring to the main core store. A semiconductor cache store, invisible to the user, held the contents of parts of the main store in use by the currently executing program. This is exactly analogous to Güntsch's system, designed as a means to improve performance, rather than to solve the problems involved in multi-programming.

The first true virtual memory system was that implemented at the University of Manchester to create a one-level storage system[12] as part of the Atlas Computer. It used a paging mechanism to map the virtual addresses available to the programmer on to the real memory that consisted of 16,384 words of primary core memory with an additional 98,304 words of secondary drum memory.[13] The first Atlas was commissioned in 1962 but working prototypes of paging had been developed by 1959.[7]: 2 [14][15] In 1961, the Burroughs Corporation independently released the first commercial computer with virtual memory, the B5000, with segmentation rather than paging.[16][17]

Before virtual memory could be implemented in mainstream operating systems, many problems had to be addressed. Dynamic address translation required expensive and difficult-to-build specialized hardware; initial implementations slowed down access to memory slightly.[7] There were worries that new system-wide algorithms utilizing secondary storage would be less effective than previously used application-specific algorithms. By 1969, the debate over virtual memory for commercial computers was over;[7] an IBM research team led by David Sayre showed that their virtual memory overlay system consistently worked better than the best manually controlled systems.[18] Throughout the 1970s, the IBM 370 series running their virtual-storage based operating systems provided a means for business users to migrate multiple older systems into fewer, more powerful, mainframes that had improved price/performance. The first minicomputer to introduce virtual memory was the Norwegian NORD-1; during the 1970s, other minicomputers implemented virtual memory, notably VAX models running VMS.

Virtual memory was introduced to the x86 architecture with the protected mode of the Intel 80286 processor, but its segment swapping technique scaled poorly to larger segment sizes. The Intel 80386 introduced paging support underneath the existing segmentation layer, enabling the page fault exception to chain with other exceptions without double fault. However, loading segment descriptors was an expensive operation, causing operating system designers to rely strictly on paging rather than a combination of paging and segmentation.

Paged virtual memory

Nearly all current implementations of virtual memory divide a virtual address space into pages, blocks of contiguous virtual memory addresses. Pages on contemporary[c] systems are usually at least 4 kilobytes in size; systems with large virtual address ranges or amounts of real memory generally use larger page sizes.[19]
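To make the idea of pages concrete, the following sketch splits a virtual address into a page number and an offset within the page. It assumes 4 KiB pages (the minimum size mentioned above); the function name is hypothetical.

```python
PAGE_SIZE = 4096                              # assumed 4 KiB page size
OFFSET_BITS = PAGE_SIZE.bit_length() - 1      # 12 bits address a byte within a page

def split_address(vaddr: int) -> tuple[int, int]:
    """Return (page number, offset within the page) for a virtual address."""
    return vaddr >> OFFSET_BITS, vaddr & (PAGE_SIZE - 1)

page, offset = split_address(0x12345)
print(f"page {page:#x}, offset {offset:#x}")
```

With a power-of-two page size, the split is a shift and a mask, which is why hardware can do it at no cost.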

Page tables

Page tables are used to translate the virtual addresses seen by the application into physical addresses used by the hardware to process instructions;[20] such hardware that handles this specific translation is often known as the memory management unit. Each entry in the page table holds a flag indicating whether the corresponding page is in real memory or not. If it is in real memory, the page table entry will contain the real memory address at which the page is stored. When a reference is made to a page by the hardware, if the page table entry for the page indicates that it is not currently in real memory, the hardware raises a page fault exception, invoking the paging supervisor component of the operating system.
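The lookup described above can be modelled in a few lines. This is a toy model, not any real MMU or kernel structure: the single-level dictionary, the `PageTableEntry` class, and the `PageFault` exception are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional

PAGE_SIZE = 4096  # assumed 4 KiB pages

class PageFault(Exception):
    """Raised when the referenced page is not currently in real memory."""

@dataclass
class PageTableEntry:
    present: bool                 # flag: is the page in real memory?
    frame: Optional[int] = None   # real-memory frame number when present

# Hypothetical page table: page 0 is resident in frame 5, page 1 is not resident.
page_table = {
    0: PageTableEntry(present=True, frame=5),
    1: PageTableEntry(present=False),
}

def translate(vaddr: int) -> int:
    """Translate a virtual address to a real address or raise PageFault."""
    page, offset = divmod(vaddr, PAGE_SIZE)
    entry = page_table.get(page)
    if entry is None or not entry.present:
        raise PageFault(f"page {page} is not in real memory")
    return entry.frame * PAGE_SIZE + offset

print(hex(translate(0x0123)))
```

In a real system the exception would transfer control to the paging supervisor, which brings the page in and restarts the access.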

Systems can have one page table for the whole system, separate page tables for each application and segment, a tree of page tables for large segments or some combination of these. If there is only one page table, different applications running at the same time use different parts of a single range of virtual addresses. If there are multiple page or segment tables, there are multiple virtual address spaces and concurrent applications with separate page tables redirect to different real addresses.

Some earlier systems with smaller real memory sizes, such as the SDS 940, used page registers instead of page tables in memory for address translation.

Paging supervisor

This part of the operating system creates and manages page tables. If the hardware raises a page fault exception, the paging supervisor accesses secondary storage, returns the page that has the virtual address that resulted in the page fault, updates the page tables to reflect the physical location of the virtual address and tells the translation mechanism to restart the request.

When all physical memory is already in use, the paging supervisor must free a page in primary storage to hold the swapped-in page. The supervisor uses one of a variety of page replacement algorithms such as least recently used to determine which page to free.
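A least-recently-used policy can be simulated over a sequence of page references to see how many page faults it produces. This is a sketch of the policy only; real kernels typically use cheaper approximations, and the function name and reference string are made up for the example.

```python
from collections import OrderedDict

def count_faults_lru(refs, frames):
    """Count page faults for reference string `refs` with `frames` page frames."""
    resident = OrderedDict()   # resident pages, least recently used first
    faults = 0
    for page in refs:
        if page in resident:
            resident.move_to_end(page)        # mark as most recently used
        else:
            faults += 1                       # page fault: page must be brought in
            if len(resident) >= frames:
                resident.popitem(last=False)  # free the least recently used page
            resident[page] = True
    return faults

refs = [1, 2, 3, 1, 4, 2]
print(count_faults_lru(refs, 3), count_faults_lru(refs, 4))
```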

Pinned pages

Operating systems have memory areas that are pinned (never swapped to secondary storage). Other terms used are locked, fixed, or wired pages. For example, interrupt mechanisms rely on an array of pointers to their handlers, such as I/O completion and page fault. If the pages containing these pointers or the code that they invoke were pageable, interrupt-handling would become far more complex and time-consuming, particularly in the case of page fault interruptions. Hence, some part of the page table structures is not pageable.

Some pages may be pinned for short periods of time, others may be pinned for long periods of time, and still others may need to be permanently pinned. For example:

- The paging supervisor code and drivers for secondary storage devices on which pages reside must be permanently pinned, as otherwise paging wouldn't even work because the necessary code wouldn't be available.

- Timing-dependent components may be pinned to avoid variable paging delays.

- Data buffers that are accessed directly by peripheral devices that use direct memory access or I/O channels must reside in pinned pages while the I/O operation is in progress because such devices and the buses to which they are attached expect to find data buffers located at physical memory addresses; regardless of whether the bus has a memory management unit for I/O, transfers cannot be stopped if a page fault occurs and then restarted when the page fault has been processed.

In IBM's operating systems for System/370 and successor systems, the term is "fixed", and such pages may be long-term fixed, or may be short-term fixed, or may be unfixed (i.e., pageable). System control structures are often long-term fixed (measured in wall-clock time, i.e., time measured in seconds, rather than time measured in fractions of one second) whereas I/O buffers are usually short-term fixed (usually measured in significantly less than wall-clock time, possibly for tens of milliseconds). Indeed, the OS has a special facility for "fast fixing" these short-term fixed data buffers (fixing which is performed without resorting to a time-consuming Supervisor Call instruction).

Multics used the term "wired". OpenVMS and Windows refer to pages temporarily made nonpageable (as for I/O buffers) as "locked", and simply "nonpageable" for those that are never pageable. The Single UNIX Specification also uses the term "locked" in the specification for mlock(), as do the mlock() man pages on many Unix-like systems.

Virtual-real operation

In OS/VS1 and similar OSes, some parts of systems memory are managed in "virtual-real" mode, called "V=R". In this mode every virtual address corresponds to the same real address. This mode is used for interrupt mechanisms, for the paging supervisor and page tables in older systems, and for application programs using non-standard I/O management. For example, IBM's z/OS has 3 modes (virtual-virtual, virtual-real and virtual-fixed).[21][page needed]

Thrashing

When paging and page stealing are used, a problem called "thrashing" can occur, in which the computer spends an unsuitably large amount of time transferring pages to and from a backing store, hence slowing down useful work. A task's working set is the minimum set of pages that should be in memory in order for it to make useful progress. Thrashing occurs when there is insufficient memory available to store the working sets of all active programs. Adding real memory is the simplest response, but improving application design, scheduling, and memory usage can help. Another solution is to reduce the number of active tasks on the system. This reduces demand on real memory by swapping out the entire working set of one or more processes.
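The condition for thrashing can be approximated numerically. The sketch below estimates a task's working set as the distinct pages it touched in its last few references (a window-based approximation, which is an assumption beyond the definition given above) and then checks whether the working sets of all active tasks fit in real memory. All names and numbers are hypothetical.

```python
def working_set(refs, t, window):
    """Distinct pages referenced in the `window` references ending at index t."""
    return set(refs[max(0, t - window + 1): t + 1])

def would_thrash(working_set_sizes, frames):
    """True if the combined working sets do not fit in the available frames."""
    return sum(working_set_sizes) > frames

refs = [1, 2, 1, 3, 1, 2]
ws = working_set(refs, t=5, window=3)
print(sorted(ws), would_thrash([len(ws), 4], frames=6))
```

Suspending one task removes its term from the sum, which is the "reduce the number of active tasks" remedy in miniature.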

Segmented virtual memory

Some systems, such as the Burroughs B5500,[22] use segmentation instead of paging, dividing virtual address spaces into variable-length segments. A virtual address here consists of a segment number and an offset within the segment. The Intel 80286 supports a similar segmentation scheme as an option, but it is rarely used. Segmentation and paging can be used together by dividing each segment into pages; systems with this memory structure, such as Multics and IBM System/38, are usually paging-predominant, segmentation providing memory protection.[23][24][25]
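Translating an address made of a segment number and an offset can be sketched as a table lookup plus a bounds check. This is a generic toy model, not the descriptor format of the B5500, the 80286, or Multics; the `Segment` class, the table contents, and the exception name are assumptions.

```python
from dataclasses import dataclass

class SegmentFault(Exception):
    """Raised for an unknown segment or an offset beyond the segment's length."""

@dataclass
class Segment:
    base: int    # real address where the segment starts
    length: int  # segment length in bytes; segments are variable-length

# Hypothetical segment table with two segments of different lengths.
segment_table = {
    0: Segment(base=0x1000, length=0x400),
    1: Segment(base=0x8000, length=0x2000),
}

def translate_segmented(segment: int, offset: int) -> int:
    """Translate (segment number, offset) to a real address."""
    entry = segment_table.get(segment)
    if entry is None or not 0 <= offset < entry.length:
        raise SegmentFault(f"segment {segment}, offset {offset:#x}")
    return entry.base + offset

print(hex(translate_segmented(0, 0x10)), hex(translate_segmented(1, 0x1FFF)))
```

Unlike a page offset, the segment offset has to be checked against a per-segment length, which is what gives segmentation its protection role.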

In the Intel 80386 and later IA-32 processors, the segments reside in a 32-bit linear, paged address space. Segments can be moved in and out of that space; pages there can "page" in and out of main memory, providing two levels of virtual memory; few if any operating systems do so, instead using only paging. Early non-hardware-assisted x86 virtualization solutions combined paging and segmentation because x86 paging offers only two protection domains whereas a VMM, guest OS or guest application stack needs three.[26]: 22 The difference between paging and segmentation systems is not only about memory division; segmentation is visible to user processes, as part of memory model semantics. Hence, instead of memory that looks like a single large space, it is structured into multiple spaces.

This difference has important consequences; a segment is not a page with variable length or a simple way to lengthen the address space. Segmentation that can provide a single-level memory model in which there is no differentiation between process memory and file system consists of only a list of segments (files) mapped into the process's potential address space.[27]

This is not the same as the mechanisms provided by calls such as mmap and Win32's MapViewOfFile, because inter-file pointers do not work when mapping files into semi-arbitrary places. In Multics, a file (or a segment from a multi-segment file) is mapped into a segment in the address space, so files are always mapped at a segment boundary. A file's linkage section can contain pointers for which an attempt to load the pointer into a register or make an indirect reference through it causes a trap. The unresolved pointer contains an indication of the name of the segment to which the pointer refers and an offset within the segment; the handler for the trap maps the segment into the address space, puts the segment number into the pointer, changes the tag field in the pointer so that it no longer causes a trap, and returns to the code where the trap occurred, re-executing the instruction that caused the trap.[28] This eliminates the need for a linker completely[7] and works when different processes map the same file into different places in their private address spaces.[29]

Address space swapping

Some operating systems provide for swapping entire address spaces, in addition to whatever facilities they have for paging and segmentation. When this occurs, the OS writes those pages and segments currently in real memory to swap files. In a swap-in, the OS reads back the data from the swap files but does not automatically read back pages that had been paged out at the time of the swap out operation.

IBM's MVS, from OS/VS2 Release 2 through z/OS, provides for marking an address space as unswappable; doing so does not pin any pages in the address space. This can be done for the duration of a job by entering the name of an eligible[30] main program in the Program Properties Table with an unswappable flag. In addition, privileged code can temporarily make an address space unswappable using a SYSEVENT Supervisor Call instruction (SVC); certain changes[31] in the address space properties require that the OS swap it out and then swap it back in, using SYSEVENT TRANSWAP.[32]

Swapping does not necessarily require memory management hardware, if, for example, multiple jobs are swapped in and out of the same area of storage.

See also

- CPU design

- Page (computing)

- Cache algorithms

- Memory allocation

- Memory management (operating systems)

- Protected mode, an x86 mode that allows for virtual memory.

- CUDA Pinned memory

- Heterogeneous System Architecture, a series of specifications intended to unify RAM and graphics card memory

- Storage virtualization

Virtual memory combines active RAM and inactive memory on DASD[a] to form a large range of contiguous addresses.

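Virtual memory is a memory management technique used by computer systems. It makes an application believe that it has contiguous available memory (one contiguous, complete address space), while in reality physical memory is usually split into multiple fragments, and some parts are temporarily stored on external disk storage and swapped in when needed. Compared with systems that do not use virtual memory, it makes large programs easier to write and uses real physical memory (such as RAM) more efficiently. In addition, virtual memory allows multiple processes to share the same runtime library and improves system security by separating the memory spaces of different processes.

Note: virtual memory does not simply mean "using disk space to extend physical memory"; that merely extends the memory hierarchy to include hard disk drives. Extending memory onto disk is only one consequence of using virtual memory, and the same effect can also be achieved with overlays or by swapping inactive programs and their data entirely out to disk. Virtual memory is defined in terms of a redefinition of the address space as "contiguous virtual memory addresses", which "tricks" programs into believing that they are using one large block of "contiguous" addresses.

Embedded systems that need fast access or very stable response times, and other special-purpose computer systems, may choose not to use virtual memory so as not to reduce predictability.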

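Usage and implementation

Virtual memory is an inseparable part of modern computer architecture. All modern general-purpose operating systems use virtual memory for ordinary applications such as word processors, spreadsheets, and multimedia players. Most architectures rely on a dedicated hardware memory management unit in the CPU to help implement it. Some emulators and virtual machines can also use hardware in the host system to improve performance.[1] Older operating systems, such as DOS and the Windows versions of the 1980s,[2] or those of the 1960s mainframes, generally had no virtual memory, although the Atlas, the B5000, and Apple's Lisa are notable exceptions.

Starting with the Intel 80286, the x86 instruction set introduced virtual memory through protected mode, but because its segmentation scheme handled large segments poorly, the later 80386 added paging support within the segmentation framework.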

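Virtual memory in the Windows operating system

A 32-bit process has a 4 GiB logical address space. The Windows API provides a set of functions for manipulating a process's virtual memory: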

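- VirtualAlloc(PVOID start address, SIZE_T size, DWORD allocation type, DWORD protection). Allocation types include MEM_RESERVE (reserve) and MEM_COMMIT (commit). Reserving claims a range of the logical address space without allocating any physical storage; committing backs the range with storage, and physical pages are assigned when the addresses are first accessed. MEM_RESET tells the system that the contents of the range are no longer needed; it does not zero the memory. Protection values are: PAGE_NOACCESS, PAGE_READONLY, PAGE_READWRITE, PAGE_EXECUTE, PAGE_EXECUTE_READ, PAGE_EXECUTE_READWRITE.

- VirtualProtect(PVOID base address, SIZE_T size, DWORD new protection, PDWORD old protection). Changes the protection of a range; the previous protection is returned through the last parameter.

- VirtualFree(PVOID base address, SIZE_T size, DWORD free type). Frees pages. The free type is MEM_DECOMMIT or MEM_RELEASE.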

- VirtualLock

- VirtualUnlock

- VirtualQuery

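References

- [1] AMD-V™ Nested Paging (PDF). AMD. Retrieved 28 April 2015.

- [2] Windows Version History. Microsoft. 2005-07-19. Retrieved 2008-12-03. (Archived from the original on 2015-02-26.)

- Virtual memory and performance in Windows XP (archived copy at the Internet Archive)

- Microsoft's official memory management white paper (archived copy at the Internet Archive)

- Memory management under Linux (archived copy at the Internet Archive)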

Zhejiang Public Network Security Registration No. 33010602011771