Installing Hadoop 3 from binaries on CentOS 7

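1. Hadoop overview

1.1 Hadoop 3 core components

- HDFS: distributed file system; handles storage of massive data sets.
- YARN: cluster resource management and job scheduling framework; handles resource allocation and task scheduling.
- MapReduce: distributed computing framework; handles computation over massive data sets.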

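1.2 Hadoop cluster overview

A Hadoop cluster actually consists of two clusters, HDFS and YARN:

- Logically separate: they do not affect or depend on each other.
- Physically co-located: some of their processes run on the same servers.
- Both use a master/worker architecture by default.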

1.2.1 HDFS

- Master role: NameNode (NN)
- Worker role: DataNode (DN)
- Helper to the master role: SecondaryNameNode (SNN)

1.2.2 YARN

- Master role: ResourceManager (RM)
- Worker role: NodeManager (NM)

2. Environment and preparation

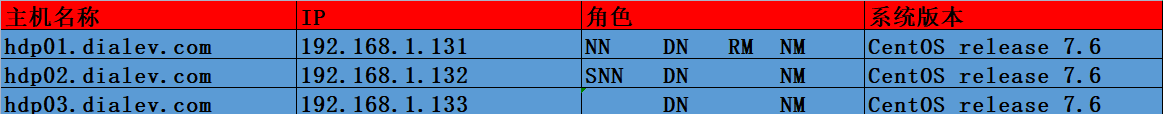

2.1 Machines and role planning

2.2 Add hosts entries on every node

Add the following to /etc/hosts on each node:

    192.168.1.131 hdp01.dialev.com
    192.168.1.132 hdp02.dialev.com
    192.168.1.133 hdp03.dialev.com
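Because the same three entries have to be present on every node, it can help to add them idempotently so that re-running the step does not create duplicates. The following is a minimal sketch of one way to do that; the names `add_host_entry` and `HOSTS_FILE` are illustrative and not part of the original setup.

```shell
#!/usr/bin/env bash
# Sketch: append a hosts entry only if the hostname is not already present.
# HOSTS_FILE and add_host_entry are illustrative names (assumptions).
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

add_host_entry() {
    ip="$1"
    name="$2"
    file="${3:-$HOSTS_FILE}"
    # -F treats the hostname as a fixed string, -w matches it as a whole word
    if ! grep -qwF -- "$name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}
```

Run as root, `add_host_entry 192.168.1.131 hdp01.dialev.com` (and likewise for hdp02 and hdp03) adds each entry once; calling it again leaves the file unchanged.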

2.3 Disable the firewall
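No commands are given for this step. On CentOS 7 the default firewall service is firewalld, so a minimal sketch, assuming firewalld is what is running, could look like the following. The `run` and `disable_firewall` helpers and the `DRY_RUN` switch are illustrative names, added only so the commands can be previewed before they are executed.

```shell
#!/usr/bin/env bash
# Sketch for CentOS 7, assuming the firewall in use is firewalld.
# Run as root on every node. With DRY_RUN=1 the commands are only printed.
# run, disable_firewall and DRY_RUN are illustrative names (assumptions).
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

disable_firewall() {
    run systemctl stop firewalld       # stop the running service
    run systemctl disable firewalld    # keep it from starting at boot
}
```

Calling `DRY_RUN=1 disable_firewall` prints the two commands; calling `disable_firewall` executes them. Afterwards `systemctl status firewalld` can be used to confirm that the service is no longer active.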

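2.4 Passwordless SSH from hdp01 to all three machines

    [ -f ~/.ssh/id_rsa ] || ssh-keygen -t rsa -N "" -f ~/.ssh/id_rsa   # create a key pair if none exists
    echo "StrictHostKeyChecking no" > ~/.ssh/config
    for ip in 192.168.1.13{1..3}; do ssh-copy-id "$ip"; done           # one host per ssh-copy-id call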

2.5 Time synchronization

    yum -y install ntpdate
    ntpdate ntp.aliyun.com
    # cron runs with a minimal PATH, so use the full path to ntpdate
    echo '*/5 * * * * /usr/sbin/ntpdate ntp.aliyun.com >/dev/null 2>&1' >> /var/spool/cron/root

2.6 Raise the file descriptor limit

    vim /etc/security/limits.conf
    * soft nofile 65535
    * hard nofile 65535

    # The new limits apply to new login sessions: log in again (or reboot) for them to take effect

2.7 Install Java

    tar xf jdk-8u65-linux-x64.tar.gz -C /usr/local/
    cd /usr/local/
    ln -sv jdk1.8.0_65/ java

    vim /etc/profile.d/java.sh
    export JAVA_HOME=/usr/local/java
    export CLASSPATH=$JAVA_HOME/lib/tools.jar
    export PATH=$JAVA_HOME/bin:$PATH

    source /etc/profile.d/java.sh
    java -version

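3. Install Hadoop

3.1 Unpack the installation package

The packages used in this document and in the related Hadoop documents are collected on this Baidu Netdisk share:

Link: https://pan.baidu.com/s/11F4THdIfgrULMn2gNcObRA?pwd=cjll

    # Alternatively, download the version you actually deploy from https://archive.apache.org/dist/hadoop/common/
    tar xf hadoop-3.1.4.tar.gz -C /usr/local/
    cd /usr/local/
    ln -sv hadoop-3.1.4 hadoop

Directory layout:

    ├── bin      Hadoop's basic management and user scripts
    ├── etc      Hadoop configuration files
    ├── include  Header files for the programming libraries, used by C++ programs that access HDFS or implement MapReduce
    ├── lib      Dynamic and static libraries that Hadoop exposes
    ├── libexec  Shell configuration files used by the services (log output, startup parameters, and similar basics)
    ├── sbin     Hadoop management scripts, mainly the start/stop scripts for the HDFS and YARN services
    └── share    Compiled jar packages for each Hadoop module, including the official examples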

3.2 Edit hadoop-env.sh

    cd /usr/local/hadoop/etc/hadoop
    cp hadoop-env.sh hadoop-env.sh-bak
    vim hadoop-env.sh
    export JAVA_HOME=/usr/local/java
    export HDFS_NAMENODE_USER=root
    export HDFS_DATANODE_USER=root
    export HDFS_SECONDARYNAMENODE_USER=root
    export YARN_RESOURCEMANAGER_USER=root
    export YARN_NODEMANAGER_USER=root
    export HADOOP_HOME=/usr/local/hadoop
    export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

3.3 Set the cluster defaults

    cp core-site.xml core-site.xml-bak
    vim core-site.xml
    <configuration>
        <!-- Address of the HDFS master (NameNode) -->
        <property>
            <name>fs.defaultFS</name>
            <value>hdfs://hdp01.dialev.com:8020</value>
        </property>
        <!-- Directory for files that Hadoop generates at runtime -->
        <property>
            <name>hadoop.tmp.dir</name>
            <value>/Hadoop/tmp</value>
        </property>
        <!-- User that the HDFS web UI acts as -->
        <property>
            <name>hadoop.http.staticuser.user</name>
            <value>root</value>
        </property>
    </configuration>
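After editing the *-site.xml files, and again after copying them to the other nodes, it can be useful to read a value back and confirm it is what you expect. The sketch below does this with awk. It assumes the simple layout used in this document, where each `<name>` and `<value>` element sits on one line; it is not a general XML parser, and `get_property` is an illustrative helper name.

```shell
#!/usr/bin/env bash
# Sketch: print the <value> that follows a given <name> in a Hadoop *-site.xml.
# Assumes each <name>...</name> and <value>...</value> element is on one line.
# get_property is an illustrative helper name (an assumption).
# $1 = path to the XML file, $2 = property name.
get_property() {
    awk -v key="$2" '
        # remember that the requested property name has been seen
        index($0, "<name>" key "</name>") { want = 1 }
        # print the first value element at or after that name, then stop
        want && match($0, /<value>[^<]*<\/value>/) {
            print substr($0, RSTART + 7, RLENGTH - 15)
            exit
        }' "$1"
}
```

For example, `get_property core-site.xml fs.defaultFS` should print the NameNode address configured above. Once Hadoop is on the PATH, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop itself resolves.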

3.4 SecondaryNameNode (SNN) configuration

    cp hdfs-site.xml hdfs-site.xml-bak
    vim hdfs-site.xml
    <configuration>
        <!-- HTTP address of the NameNode -->
        <property>
            <name>dfs.namenode.http-address</name>
            <value>hdp01.dialev.com:50070</value>
        </property>
        <!-- HTTP address of the SecondaryNameNode -->
        <property>
            <name>dfs.namenode.secondary.http-address</name>
            <value>hdp02.dialev.com:50090</value>
        </property>
        <!-- Directory where the NameNode stores its data -->
        <property>
            <name>dfs.namenode.name.dir</name>
            <value>/Hadoop/name</value>
        </property>
        <!-- Number of HDFS replicas -->
        <property>
            <name>dfs.replication</name>
            <value>2</value>
        </property>
        <!-- Directory where the DataNode stores its data; if not set, a path under hadoop.tmp.dir is used -->
        <property>
            <name>dfs.datanode.data.dir</name>
            <value>/Hadoop/data</value>
        </property>
    </configuration>

3.5 MapReduce configuration

    cp mapred-site.xml mapred-site.xml-bak
    vim mapred-site.xml
    <configuration>
        <!-- Tell the framework to run MR on YARN -->
        <property>
            <name>mapreduce.framework.name</name>
            <value>yarn</value>
        </property>

        <!-- Environment variables for the MR App Master -->
        <property>
            <name>yarn.app.mapreduce.am.env</name>
            <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>
        </property>

        <!-- Environment variables for MR map tasks -->
        <property>
            <name>mapreduce.map.env</name>
            <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>
        </property>

        <!-- Environment variables for MR reduce tasks -->
        <property>
            <name>mapreduce.reduce.env</name>
            <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>
        </property>
    </configuration>

3.6 YARN configuration

    cp yarn-site.xml yarn-site.xml-bak
    vim yarn-site.xml
    <configuration>

    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>hdp01.dialev.com</value>
    </property>

    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>

    <!-- Whether to enforce physical memory limits on containers -->
    <property>
        <name>yarn.nodemanager.pmem-check-enabled</name>
        <value>false</value>
    </property>

    <!-- Whether to enforce virtual memory limits on containers -->
    <property>
        <name>yarn.nodemanager.vmem-check-enabled</name>
        <value>false</value>
    </property>

    <!-- Enable log aggregation -->
    <property>
        <name>yarn.log-aggregation-enable</name>
        <value>true</value>
    </property>

    <!-- Retention period: 7 days -->
    <property>
        <name>yarn.log-aggregation.retain-seconds</name>
        <value>604800</value>
    </property>

    </configuration>
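The value 604800 for yarn.log-aggregation.retain-seconds is simply seven days expressed in seconds. If you want a different retention period, the conversion can be done in the shell; `days_to_seconds` below is an illustrative helper name, not a Hadoop tool.

```shell
#!/usr/bin/env bash
# Sketch: convert a retention period in days to seconds.
# days_to_seconds is an illustrative helper name (an assumption).
days_to_seconds() {
    echo $(( $1 * 24 * 60 * 60 ))
}

days_to_seconds 7   # prints 604800
```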

3.7 Configure the worker node addresses

    vim workers
    hdp01.dialev.com
    hdp02.dialev.com
    hdp03.dialev.com

3.8 Sync the configuration to the cluster

    cd /usr/local/
    scp -r -q hadoop-3.1.4 192.168.1.132:/usr/local/
    scp -r -q hadoop-3.1.4 192.168.1.133:/usr/local/

    # Create the symlink on nodes 2 and 3 (run in /usr/local/ on each)
    ln -sv hadoop-3.1.4 hadoop

4. Start Hadoop

4.1 Format the NameNode

Run this on hdp01.dialev.com, and only once. If it is run by mistake, the initialized directory can be deleted.

    hdfs namenode -format
    ......
    2022-12-26 16:40:03,355 INFO util.GSet: 0.029999999329447746% max memory 940.5 MB = 288.9 KB
    2022-12-26 16:40:03,355 INFO util.GSet: capacity = 2^15 = 32768 entries
    2022-12-26 16:40:03,406 INFO namenode.FSImage: Allocated new BlockPoolId: BP-631728325-192.168.1.131-1672044003397
    2022-12-26 16:40:03,437 INFO common.Storage: Storage directory /Hadoop/name has been successfully formatted.   # /Hadoop/name is the initialized directory; this line confirms that the storage was formatted successfully
    2022-12-26 16:40:03,498 INFO namenode.FSImageFormatProtobuf: Saving image file /Hadoop/name/current/fsimage.ckpt_0000000000000000000 using no compression
    2022-12-26 16:40:03,781 INFO namenode.FSImageFormatProtobuf: Image file /Hadoop/name/current/fsimage.ckpt_0000000000000000000 of size 391 bytes saved in 0 seconds .
    2022-12-26 16:40:03,802 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
    2022-12-26 16:40:03,820 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid = 0 when meet shutdown.
    2022-12-26 16:40:03,821 INFO namenode.NameNode: SHUTDOWN_MSG:
    /************************************************************
    SHUTDOWN_MSG: Shutting down NameNode at hdp01.dialev.com/192.168.1.131
    ************************************************************/

4.2 Start the services

1. Using the bundled shell scripts:

    cd /usr/local/hadoop/sbin/

    # HDFS cluster
    start-dfs.sh
    stop-dfs.sh

    # YARN cluster
    start-yarn.sh
    stop-yarn.sh

    # Whole Hadoop cluster (HDFS + YARN)
    start-all.sh
    stop-all.sh

    jps   # check that the running daemons match the cluster plan

Logs are written to /usr/local/hadoop/logs, which is the logs directory under the installation directory by default.

2. Starting daemons by hand (for reference). Pick one daemon name per command:

    # HDFS cluster
    hdfs --daemon start [namenode|datanode|secondarynamenode]
    hdfs --daemon stop [namenode|datanode|secondarynamenode]

    # YARN cluster
    yarn --daemon start [resourcemanager|nodemanager]
    yarn --daemon stop [resourcemanager|nodemanager]
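Comparing jps output with the plan by eye is easy to get wrong across three nodes. The sketch below checks a node's jps output against a list of expected daemons. The expected lists in the usage note are those implied by the configuration files in this document (NameNode and ResourceManager on hdp01, SecondaryNameNode on hdp02, DataNode and NodeManager on every host in workers); `check_daemons` is an illustrative helper name.

```shell
#!/usr/bin/env bash
# Sketch: report which expected daemons are missing from jps output.
# check_daemons is an illustrative helper name (an assumption).
# $1 = output of jps, remaining arguments = daemon names expected on this node.
check_daemons() {
    jps_output="$1"
    shift
    missing=""
    for daemon in "$@"; do
        # jps prints "<pid> <MainClass>"; compare the second column exactly so
        # that NameNode does not match SecondaryNameNode
        if ! printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            missing="$missing $daemon"
        fi
    done
    if [ -n "$missing" ]; then
        echo "missing:$missing"
        return 1
    fi
    echo "ok"
}
```

On hdp01 this could be called as `check_daemons "$(jps)" NameNode DataNode ResourceManager NodeManager`, on hdp02 with `SecondaryNameNode DataNode NodeManager`, and on hdp03 with `DataNode NodeManager`.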

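5. Verification

5.1 Access the web UIs

1. Print the cluster status:

    hdfs dfsadmin -report

2. Open the YARN management UI (port 8088 on the host running the RM):

    http://192.168.1.131:8088/cluster/nodes

3. Open the NameNode management page (on the host running the NN):

    http://192.168.1.131:50070/dfshealth.html#tab-overview

The address comes from dfs.namenode.http-address in hdfs-site.xml. The default port is 50070 in 2.x and 9870 in 3.x; 50070 is used here only because I overlooked the change in the new version and kept the 2.x setting.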

5.2 Test directory creation and file upload

    hadoop fs -mkdir /bowen             # create a bowen directory under the HDFS root
    hadoop fs -put yarn-env.sh /bowen   # upload a file into the bowen directory

5.3 Test a MapReduce run

    cd /usr/local/hadoop/share/hadoop/mapreduce
    hadoop jar hadoop-mapreduce-examples-3.1.4.jar pi 2 4
    Number of Maps = 2
    Samples per Map = 4
    Wrote input for Map #0
    Wrote input for Map #1
    Starting Job
    2022-12-27 09:20:12,868 INFO client.RMProxy: Connecting to ResourceManager at hdp01.dialev.com/192.168.1.131:8032   # the client first connects to the RM to request resources
    2022-12-27 09:20:14,091 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/root/.staging/job_1672045511416_0001
    2022-12-27 09:20:14,503 INFO input.FileInputFormat: Total input files to process : 2
    2022-12-27 09:20:14,707 INFO mapreduce.JobSubmitter: number of splits:2
    2022-12-27 09:20:15,349 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1672045511416_0001
    2022-12-27 09:20:15,351 INFO mapreduce.JobSubmitter: Executing with tokens: []
    2022-12-27 09:20:16,072 INFO conf.Configuration: resource-types.xml not found
    2022-12-27 09:20:16,073 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.
    2022-12-27 09:20:16,974 INFO impl.YarnClientImpl: Submitted application application_1672045511416_0001
    2022-12-27 09:20:17,204 INFO mapreduce.Job: The url to track the job: http://hdp01.dialev.com:8088/proxy/application_1672045511416_0001/
    2022-12-27 09:20:17,206 INFO mapreduce.Job: Running job: job_1672045511416_0001
    2022-12-27 09:20:33,618 INFO mapreduce.Job: Job job_1672045511416_0001 running in uber mode : false
    2022-12-27 09:20:33,621 INFO mapreduce.Job: map 0% reduce 0%   # MapReduce has two phases, map and reduce
    2022-12-27 09:20:47,862 INFO mapreduce.Job: map 100% reduce 0%
    2022-12-27 09:20:53,944 INFO mapreduce.Job: map 100% reduce 100%
    2022-12-27 09:20:53,968 INFO mapreduce.Job: Job job_1672045511416_0001 completed successfully
    ......

5.4 Cluster benchmark

1. Write test: write 5 files of 10 MB each.

    hadoop jar hadoop-mapreduce-client-jobclient-3.1.4-tests.jar TestDFSIO -write -nrFiles 5 -fileSize 10MB
    ......
    2022-12-27 09:51:12,775 INFO fs.TestDFSIO: ----- TestDFSIO ----- : write
    2022-12-27 09:51:12,775 INFO fs.TestDFSIO: Date & time: Tue Dec 27 09:51:12 CST 2022
    2022-12-27 09:51:12,775 INFO fs.TestDFSIO: Number of files: 5               # number of files
    2022-12-27 09:51:12,775 INFO fs.TestDFSIO: Total MBytes processed: 50       # total size
    2022-12-27 09:51:12,775 INFO fs.TestDFSIO: Throughput mb/sec: 12.49         # throughput
    2022-12-27 09:51:12,775 INFO fs.TestDFSIO: Average IO rate mb/sec: 15.46    # average IO rate
    2022-12-27 09:51:12,775 INFO fs.TestDFSIO: IO rate std deviation: 7.51      # standard deviation of the IO rate
    2022-12-27 09:51:12,776 INFO fs.TestDFSIO: Test exec time sec: 32.95        # execution time

2. Read test:

    hadoop jar hadoop-mapreduce-client-jobclient-3.1.4-tests.jar TestDFSIO -read -nrFiles 5 -fileSize 10MB
    ......
    2022-12-27 09:54:23,826 INFO fs.TestDFSIO: ----- TestDFSIO ----- : read
    2022-12-27 09:54:23,826 INFO fs.TestDFSIO: Date & time: Tue Dec 27 09:54:23 CST 2022
    2022-12-27 09:54:23,826 INFO fs.TestDFSIO: Number of files: 5
    2022-12-27 09:54:23,826 INFO fs.TestDFSIO: Total MBytes processed: 50
    2022-12-27 09:54:23,826 INFO fs.TestDFSIO: Throughput mb/sec: 94.34
    2022-12-27 09:54:23,827 INFO fs.TestDFSIO: Average IO rate mb/sec: 101.26
    2022-12-27 09:54:23,827 INFO fs.TestDFSIO: IO rate std deviation: 30.07
    2022-12-27 09:54:23,827 INFO fs.TestDFSIO: Test exec time sec: 34.39

3. Clean up the test data:

    hadoop jar hadoop-mapreduce-client-jobclient-3.1.4-tests.jar TestDFSIO -clean
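When the benchmark is repeated, for example to compare write and read throughput after a configuration change, it is convenient to pull a single figure out of the log instead of reading the whole summary. The sketch below extracts one metric from TestDFSIO summary lines on stdin, matching the label exactly as it is printed in the logs above; `dfsio_metric` is an illustrative helper name.

```shell
#!/usr/bin/env bash
# Sketch: extract one metric from TestDFSIO summary lines read on stdin.
# dfsio_metric is an illustrative helper name (an assumption).
# $1 = metric label exactly as printed, e.g. "Throughput mb/sec".
dfsio_metric() {
    awk -v label="$1" '
        {
            line = $0
            # drop the timestamp and logger prefix when present
            p = index(line, "fs.TestDFSIO:")
            if (p > 0) line = substr(line, p + length("fs.TestDFSIO:"))
            sub(/^[ \t]+/, "", line)
            # the label must start the remaining text and be followed by a colon
            if (index(line, label ":") == 1) {
                value = substr(line, length(label) + 2)
                gsub(/^[ \t]+|[ \t]+$/, "", value)
                print value
            }
        }'
}
```

A possible use is `hadoop jar hadoop-mapreduce-client-jobclient-3.1.4-tests.jar TestDFSIO -read -nrFiles 5 -fileSize 10MB 2>&1 | dfsio_metric "Throughput mb/sec"`. The `2>&1` is there on the assumption that the console log is written to stderr, which is the usual Hadoop default.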

