Docker Networking: Linux Network Namespaces

I. Introduction

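Namespaces and cgroups are the two main kernel technologies behind the trend toward software containerization (think Docker). In short, cgroups provide unified resource accounting and limiting for groups of processes: they control how much of the system's resources (CPU, memory, etc.) you can use. Namespaces, by contrast, wrap global system resources and isolate them: using the kernel's ability to isolate and virtualize system resources, they limit what you can see.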

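The Linux 3.8 kernel provides six types of namespaces: Process ID (pid), Mount (mnt), Network (net), InterProcess Communication (ipc), UTS, and User ID (user). For example, a process in a pid namespace can only see processes in the same namespace, and a mnt namespace gives a process its own set of mount points, somewhat like chroot. In this article I focus only on the network namespace, Network (net).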

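A network namespace gives all processes inside it a completely separate network stack, including network interfaces, routing tables, and iptables rules. Network namespaces make it possible to build virtual network environments that are isolated from one another, which is essential for tenant network isolation in cloud computing; Docker's network isolation is built on them as well. With that background, let's get to the main topic: how to use network namespaces.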
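The kernel exposes each namespace a process belongs to as a symbolic link under /proc/<pid>/ns/. For the network namespace the link target looks like `net:[4026531992]`, where the number is the namespace's inode; if two processes are in the same network namespace, these numbers match. The Python sketch below reads and parses that link. It only works on Linux, and the function names are illustrative, not from any library.

```python
from __future__ import annotations

import os
import re


def parse_ns_link(link: str) -> tuple[str, int]:
    """Split a namespace link target such as 'net:[4026531992]' into ('net', 4026531992)."""
    match = re.fullmatch(r"(\w+):\[(\d+)\]", link)
    if match is None:
        raise ValueError(f"unexpected namespace link target: {link!r}")
    return match.group(1), int(match.group(2))


def net_ns_inode(pid: str = "self") -> int:
    """Return the inode number of the network namespace `pid` belongs to (Linux only)."""
    _, inode = parse_ns_link(os.readlink(f"/proc/{pid}/ns/net"))
    return inode


if __name__ == "__main__":
    if os.path.exists("/proc/self/ns/net"):
        # Run once on the host and once under `ip netns exec <name>`:
        # the numbers differ because the processes are in different namespaces.
        print("network namespace inode:", net_ns_inode())
```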

II. Environment setup and status check

1. Create the test containers

# Create two test containers

[root@localhost ~]# docker run -d -it --name busybox_1 busybox /bin/sh -c "while true;do sleep 3600;done"

[root@localhost ~]# docker run -d -it --name busybox_2 busybox /bin/sh -c "while true;do sleep 3600;done"

[root@localhost ~]# docker ps -a

2. Check the container IPs and connectivity

# Check each container's IP address

[root@localhost ~]# docker exec -it busybox_1 ip a

[root@localhost ~]# docker exec -it busybox_2 ip a
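Each container's `ip a` output contains an `inet` line with its address; on Docker's default bridge network that is normally an address in 172.17.0.0/16, as in the ping below. If you want to pick the address out programmatically, here is a small Python sketch over the text of `ip a`. The function name is illustrative, and only `inet` line shapes like the ones in this article are handled.

```python
from __future__ import annotations

import ipaddress
import re

# e.g. "    inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0"
_INET = re.compile(r"^\s*inet (\d+\.\d+\.\d+\.\d+/\d+)\b.*\bscope \w+ (\S+)")


def ipv4_addresses(ip_a_output: str,
                   skip_loopback: bool = True) -> list[tuple[str, ipaddress.IPv4Interface]]:
    """Return (interface label, address/prefix) for every IPv4 `inet` line."""
    found: list[tuple[str, ipaddress.IPv4Interface]] = []
    for line in ip_a_output.splitlines():
        m = _INET.match(line)
        if m is None:
            continue  # header, link/ether, inet6 and valid_lft lines
        iface = ipaddress.IPv4Interface(m.group(1))
        if skip_loopback and iface.ip.is_loopback:
            continue  # drop 127.0.0.1/8 on lo
        found.append((m.group(2), iface))
    return found
```

Feeding it the output of `docker exec busybox_1 ip a` should yield the container's eth0 address, which is the one to ping from the other container.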

[root@localhost ~]# docker exec -it busybox_1 /bin/sh

/ # ping 172.17.0.3     # inside the container, ping busybox_2's address

PING 172.17.0.3 (172.17.0.3): 56 data bytes

64 bytes from 172.17.0.3: seq=0 ttl=64 time=0.133 ms

64 bytes from 172.17.0.3: seq=1 ttl=64 time=0.097 ms

64 bytes from 172.17.0.3: seq=2 ttl=64 time=0.124 ms

[root@localhost ~]# docker exec -it busybox_2 ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2): 56 data bytes

64 bytes from 172.17.0.2: seq=0 ttl=64 time=0.109 ms

64 bytes from 172.17.0.2: seq=1 ttl=64 time=0.152 ms

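Observation:

Containers created on the same host can communicate with each other by default (both are attached to Docker's default bridge, docker0).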

III. Simulating Docker container networking from the command line

1. Manually create two network namespaces

[root@localhost ~]# ip netns add test1

[root@localhost ~]# ip netns add test2

[root@localhost ~]# ip netns list

test2

test1
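`ip netns add <name>` creates a namespace and keeps it alive by bind-mounting it onto a file with that name under /var/run/netns (iproute2's default directory), and `ip netns list` essentially reports that directory's entries. This is also why the namespaces Docker creates for its containers do not appear in `ip netns list` by default: Docker keeps them elsewhere. A minimal Python sketch of the listing, assuming the default directory; note that it sorts the names, while `ip netns list` prints them in directory order (hence test2 before test1 above).

```python
from __future__ import annotations

import os

# Assumed default directory where iproute2 bind-mounts named network namespaces.
NETNS_RUN_DIR = "/var/run/netns"


def list_named_netns(run_dir: str = NETNS_RUN_DIR) -> list[str]:
    """Return the names of namespaces created with `ip netns add`, sorted.

    Roughly what `ip netns list` shows, without its "(id: N)" annotations.
    """
    try:
        return sorted(os.listdir(run_dir))
    except FileNotFoundError:
        # The directory may not exist before the first `ip netns add`.
        return []


if __name__ == "__main__":
    print(list_named_netns())  # prints the namespace names, if any
```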

2. Check the network state of the test1 namespace

[root@localhost ~]# ip netns exec test1 ip link

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000     # by default only the lo interface exists, and it is DOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

3. Check the host's network devices

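[root@localhost ~]# ip link     # besides the usual lo and eth0, three more interfaces show up: the docker0 bridge and the host ends of the two containers' veth pairs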

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state DOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 52:54:00:fd:34:4b brd ff:ff:ff:ff:ff:ff

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:23:c0:91:f9 brd ff:ff:ff:ff:ff:ff

105: veth0a87245@if104: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether f2:be:7e:3c:02:3c brd ff:ff:ff:ff:ff:ff link-netnsid 0

107: vethabf8073@if106: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether d6:dd:20:cf:84:4a brd ff:ff:ff:ff:ff:ff link-netnsid 1
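Each interface in `ip link` output starts with a header line of the form `index: name[@peer]: <FLAGS> ... [master BRIDGE] state STATE ...`. For the two veth interfaces above, `@if104` and `@if106` give the ifindex of the peer end, which lives in another namespace (a container's), and `master docker0` shows that the host end is attached to the docker0 bridge. The Python sketch below pulls those fields out of header lines. The `Link` record and function names are illustrative, and the regular expression only covers the header shapes seen in this article.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from typing import Optional

# Header line, e.g.
# "105: veth0a87245@if104: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 ... master docker0 state UP ..."
_HEADER = re.compile(
    r"^(?P<index>\d+): (?P<name>[^:@\s]+)(?:@(?P<peer>[^:\s]+))?: <(?P<flags>[^>]*)>(?P<rest>.*)$"
)


@dataclass
class Link:
    index: int
    name: str
    peer: Optional[str]    # text after '@': 'if104' (peer ifindex) or a peer name
    flags: list[str]
    master: Optional[str]  # bridge the interface is attached to, if any
    state: Optional[str]   # operational state: UP, DOWN, UNKNOWN, ...


def _value_after(tokens: list[str], key: str) -> Optional[str]:
    """Return the token following `key`, or None if `key` is absent or last."""
    if key not in tokens:
        return None
    i = tokens.index(key)
    return tokens[i + 1] if i + 1 < len(tokens) else None


def parse_link_header(line: str) -> Optional[Link]:
    """Parse one `ip link` header line; return None for continuation lines."""
    m = _HEADER.match(line.strip())
    if m is None:
        return None  # e.g. "link/ether ... brd ..."
    tokens = m.group("rest").split()
    flags = m.group("flags").split(",") if m.group("flags") else []
    return Link(
        index=int(m.group("index")),
        name=m.group("name"),
        peer=m.group("peer"),
        flags=flags,
        master=_value_after(tokens, "master"),
        state=_value_after(tokens, "state"),
    )
```

For example, running each line of `ip link` output through `parse_link_header` and keeping the results whose `master` is `"docker0"` lists the host ends of the container veth pairs.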

4. Create a veth pair

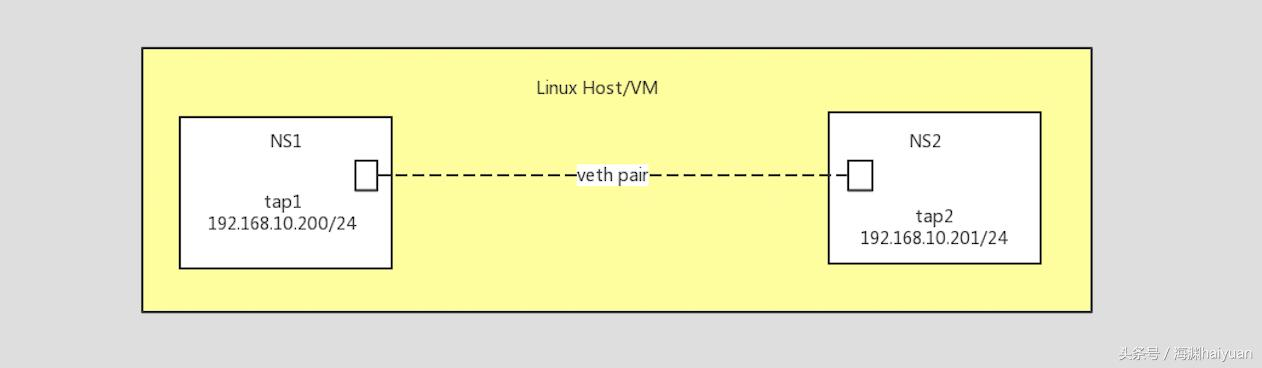

What is a veth pair?

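A veth pair is not a single device but a pair of virtual Ethernet devices connected to each other: packets sent into one end come out of the other. veth pairs are usually used together with network namespaces: put one end in namespace ns1 and the other in ns2, configure an IP address on each end, and the two namespaces can ping each other.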
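To make the "two connected ends" idea concrete, here is a toy Python model. It is not kernel code, and the class and function names are made up for illustration. A frame written into one end is delivered to the other regardless of which namespace each end sits in, but only when both ends are up; this mirrors why the ping in step 10 only works after both interfaces are brought up in step 9.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class VethEnd:
    """One end of a (toy) veth pair."""
    name: str
    netns: str = "host"   # namespace this end currently lives in
    up: bool = False
    # repr/compare disabled: the two ends reference each other (a cycle).
    peer: Optional[VethEnd] = field(default=None, repr=False, compare=False)
    received: list[bytes] = field(default_factory=list)

    def send(self, frame: bytes) -> bool:
        """Push a frame into this end; it appears on the peer only if both ends are up."""
        if self.peer is None or not (self.up and self.peer.up):
            return False
        self.peer.received.append(frame)
        return True


def veth_pair(name_a: str, name_b: str) -> tuple[VethEnd, VethEnd]:
    """Create two ends that point at each other, like `ip link add A type veth peer name B`."""
    a, b = VethEnd(name_a), VethEnd(name_b)
    a.peer, b.peer = b, a
    return a, b


if __name__ == "__main__":
    a, b = veth_pair("veth-test1", "veth-test2")
    a.netns, b.netns = "test1", "test2"   # moving the ends keeps the pair intact
    print(a.send(b"hello"))               # False: both ends are still down
    a.up = b.up = True
    print(a.send(b"hello"), b.received)   # True [b'hello']
```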

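A simple topology:

test1 (veth-test1, 192.168.1.1/24) <------ veth pair ------> (veth-test2, 192.168.1.2/24) test2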

Create a veth pair

[root@localhost ~]# ip link add veth-test1 type veth peer name veth-test2     # create the pair veth-test1 <-> veth-test2

[root@localhost ~]# ip link     # check that the pair was added (interfaces 108 and 109)

108: veth-test2@veth-test1: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether be:08:e6:25:be:01 brd ff:ff:ff:ff:ff:ff

109: veth-test1@veth-test2: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether da:97:e4:b1:cc:38 brd ff:ff:ff:ff:ff:ff

5. Move the veth-test1 interface into the test1 namespace

[root@localhost ~]# ip link set veth-test1 netns test1

[root@localhost ~]# ip netns exec test1 ip link     # check that it was moved; the interface is DOWN

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

109: veth-test1@if108: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether da:97:e4:b1:cc:38 brd ff:ff:ff:ff:ff:ff link-netnsid 0

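6. Check the host's interfaces again (109: veth-test1@veth-test2 is gone, and 108 is now listed as veth-test2@if109 because its peer lives in another namespace)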

[root@localhost ~]# ip link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 52:54:00:fd:34:4b brd ff:ff:ff:ff:ff:ff

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:23:c0:91:f9 brd ff:ff:ff:ff:ff:ff

105: veth0a87245@if104: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether f2:be:7e:3c:02:3c brd ff:ff:ff:ff:ff:ff link-netnsid 0

107: vethabf8073@if106: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether d6:dd:20:cf:84:4a brd ff:ff:ff:ff:ff:ff link-netnsid 1

108: veth-test2@if109: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether be:08:e6:25:be:01 brd ff:ff:ff:ff:ff:ff link-netnsid 2

7. Following the same steps, move the veth-test2 interface into the test2 namespace

[root@localhost ~]# ip link set veth-test2 netns test2

[root@localhost ~]# ip netns exec test2 ip link

8. Assign an IP address to the interface in each namespace

[root@localhost ~]# ip netns exec test1 ip addr add 192.168.1.1/24 dev veth-test1     # give veth-test1 in the test1 namespace the address 192.168.1.1

[root@localhost ~]# ip netns exec test2 ip addr add 192.168.1.2/24 dev veth-test2     # same for veth-test2 in test2

[root@localhost ~]# ip netns exec test1 ip a     # interfaces in test1: veth-test1 now has an IP address but is still DOWN

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

109: veth-test1@if108: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether da:97:e4:b1:cc:38 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet 192.168.1.1/24 scope global veth-test1

valid_lft forever preferred_lft forever

[root@localhost ~]# ip netns exec test2 ip a     # same as above

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

108: veth-test2@if109: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether be:08:e6:25:be:01 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.1.2/24 scope global veth-test2

valid_lft forever preferred_lft forever
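Both addresses use a /24 prefix, so they fall in the same subnet, 192.168.1.0/24. Once an interface with such an address is up, the kernel has a directly connected route for that subnet on it, which is why the two namespaces can reach each other without a gateway or any extra `ip route` command. A quick check with Python's standard `ipaddress` module (the helper name is illustrative):

```python
from __future__ import annotations

import ipaddress


def same_subnet(a: str, b: str) -> bool:
    """True if two interface addresses in CIDR form share the same network."""
    return ipaddress.ip_interface(a).network == ipaddress.ip_interface(b).network


if __name__ == "__main__":
    print(ipaddress.ip_interface("192.168.1.1/24").network)  # 192.168.1.0/24
    print(same_subnet("192.168.1.1/24", "192.168.1.2/24"))   # True
    print(same_subnet("192.168.1.1/24", "192.168.2.2/24"))   # False
```

If the second namespace had been given, say, 192.168.2.2/24, the check would fail and a route would be needed before the ping in step 10 could succeed.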

9. Bring both interfaces up

[root@localhost ~]# ip netns exec test1 ip link set dev veth-test1 up

[root@localhost ~]# ip netns exec test2 ip link set dev veth-test2 up

[root@localhost ~]# ip netns exec test1 ip link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

109: veth-test1@if108: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether da:97:e4:b1:cc:38 brd ff:ff:ff:ff:ff:ff link-netnsid 1

[root@localhost ~]# ip netns exec test2 ip link

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

108: veth-test2@if109: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether be:08:e6:25:be:01 brd ff:ff:ff:ff:ff:ff link-netnsid 0
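The commands from steps 1 to 9 can be collected into one script. The Python sketch below builds the `ip` command lines and, by default, only prints them; with `dry_run=False` it runs them through `subprocess`, which requires root. Two things are additions not found in the article: bringing `lo` up in each namespace (test2's lo is still DOWN above), and a teardown using `ip netns del`, which should also remove the veth ends inside the namespaces once no process is left in them. The function names are illustrative; the default names and addresses are the ones used in this walkthrough.

```python
from __future__ import annotations

import shlex
import subprocess


def veth_setup_commands(ns1: str = "test1", ns2: str = "test2",
                        dev1: str = "veth-test1", dev2: str = "veth-test2",
                        addr1: str = "192.168.1.1/24",
                        addr2: str = "192.168.1.2/24") -> list[list[str]]:
    """Build the `ip` commands of steps 1-9: two namespaces joined by a veth pair."""
    ends = [(ns1, dev1, addr1), (ns2, dev2, addr2)]
    cmds = [
        ["ip", "netns", "add", ns1],
        ["ip", "netns", "add", ns2],
        ["ip", "link", "add", dev1, "type", "veth", "peer", "name", dev2],
        ["ip", "link", "set", dev1, "netns", ns1],
        ["ip", "link", "set", dev2, "netns", ns2],
    ]
    # Step 8: one address per end, configured inside its namespace.
    cmds += [["ip", "netns", "exec", ns, "ip", "addr", "add", addr, "dev", dev]
             for ns, dev, addr in ends]
    # Step 9: bring both ends up.
    cmds += [["ip", "netns", "exec", ns, "ip", "link", "set", "dev", dev, "up"]
             for ns, dev, _ in ends]
    # Addition (not in the article): bring loopback up in each namespace too.
    cmds += [["ip", "netns", "exec", ns, "ip", "link", "set", "lo", "up"]
             for ns, _, _ in ends]
    return cmds


def veth_teardown_commands(ns1: str = "test1", ns2: str = "test2") -> list[list[str]]:
    """Addition: delete both namespaces created by the setup."""
    return [["ip", "netns", "del", ns1], ["ip", "netns", "del", ns2]]


def run(cmds: list[list[str]], dry_run: bool = True) -> None:
    """Print the commands (default) or execute them; executing requires root."""
    for cmd in cmds:
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(veth_setup_commands())  # dry run: prints one command per line
```

Keeping the commands as argument lists rather than shell strings avoids quoting problems and makes the dry run and the real run execute exactly the same thing.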

10. Test connectivity

# Connectivity test

[root@localhost ~]# ip netns exec test1 ping 192.168.1.2

PING 192.168.1.2 (192.168.1.2) 56(84) bytes of data.

64 bytes from 192.168.1.2: icmp_seq=1 ttl=64 time=0.056 ms

64 bytes from 192.168.1.2: icmp_seq=2 ttl=64 time=0.057 ms

[root@localhost ~]# ip netns exec test2 ping 192.168.1.1

PING 192.168.1.1 (192.168.1.1) 56(84) bytes of data.

64 bytes from 192.168.1.1: icmp_seq=1 ttl=64 time=0.039 ms

64 bytes from 192.168.1.1: icmp_seq=2 ttl=64 time=0.084 ms
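The replies in this article come from two ping implementations: BusyBox inside the containers prints `seq=`, while the host's ping prints `icmp_seq=`, but both end each reply with `time=<ms> ms`. A small Python sketch that extracts the round-trip times from either format and averages them; the function name is illustrative, and only reply lines shaped like the ones shown here are recognized.

```python
from __future__ import annotations

import re
from statistics import mean

# Matches BusyBox replies ("seq=0 ttl=64 time=0.133 ms") and the host's
# ("icmp_seq=1 ttl=64 time=0.056 ms"); group 1 is the round-trip time in ms.
_REPLY = re.compile(r"(?:icmp_)?seq=\d+ ttl=\d+ time=([\d.]+) ms")


def reply_times(output: str) -> list[float]:
    """Return the round-trip time (ms) of every echo reply found in ping output."""
    return [float(m.group(1)) for m in _REPLY.finditer(output)]


if __name__ == "__main__":
    sample = (
        "64 bytes from 192.168.1.2: icmp_seq=1 ttl=64 time=0.056 ms\n"
        "64 bytes from 192.168.1.2: icmp_seq=2 ttl=64 time=0.057 ms\n"
    )
    times = reply_times(sample)
    print(len(times), round(mean(times), 4))  # 2 0.0565
```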