Image Semantic Segmentation: Matting and Contouring


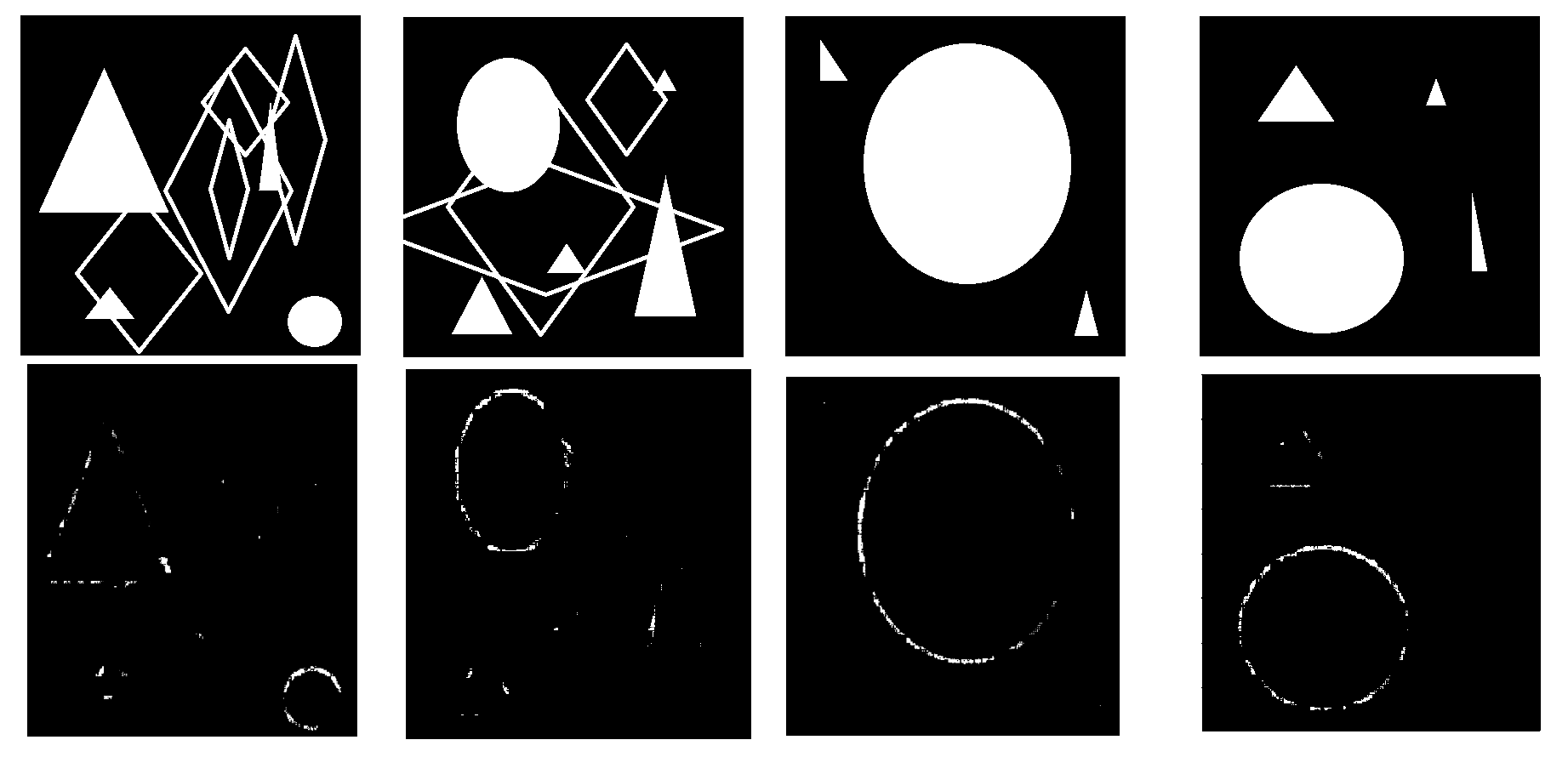

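The core product of the company I currently work for is automatic contouring of target regions in radiotherapy images.

Using deep learning, the model learns from radiotherapy samples and can then contour the radiotherapy target region for different organs.

We build an FCN/U-Net network structure out of CNN layers and train it so that the model acquires the ability to perform image semantic segmentation. (Autonomous driving and drone landing-spot detection also fall under semantic segmentation.)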
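To make "semantic segmentation" concrete: the model assigns a class label to every pixel rather than one label to the whole image. The sketch below is only an illustration in plain Python. The function name `segment`, the toy scores, and the two-class layout (0 = background, 1 = target region) are assumptions made for this example and are not part of the product described here.

```python
def segment(scores):
    """Turn per-pixel class scores into a label mask.

    scores[y][x] is a list with one score per class (hypothetical layout).
    Returns mask[y][x] = index of the highest-scoring class at that pixel.
    """
    return [
        [max(range(len(pixel)), key=pixel.__getitem__) for pixel in row]
        for row in scores
    ]


# A 2x3 toy "image" with two classes: 0 = background, 1 = target region.
toy_scores = [
    [[0.9, 0.1], [0.2, 0.8], [0.3, 0.7]],
    [[0.6, 0.4], [0.1, 0.9], [0.8, 0.2]],
]
toy_mask = segment(toy_scores)
```

The resulting mask has the same height and width as the input, which is what lets it be drawn as a contour on top of the original image.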

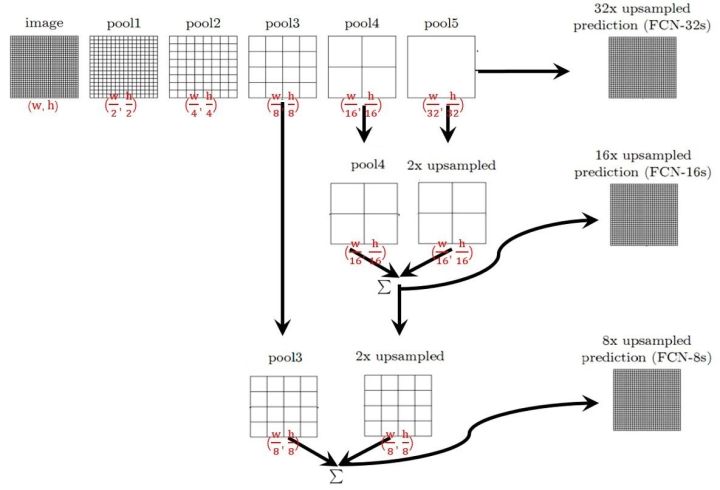

FCN model structure

The model structure is shown in the figure above.

Model workflow

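- Repeatedly apply convolution, an activation function, and pooling to the input image, producing five pooling results: pool1, pool2, pool3, pool4, and pool5.

- Upsample pool5 by a factor of 2 with a transposed convolution (deconvolution) to get result A.

- Add A to pool4 to get result B.

- Upsample B by a factor of 2 with a transposed convolution to get result C.

- Add C to pool3 to get result D.

- Pass D through an activation function to get the final image, result.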
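The fusion steps above can be sketched in plain Python on tiny 2D feature maps. This is only an illustration under stated assumptions: nearest-neighbour upsampling stands in for the learned transposed convolution, ReLU is assumed as the activation, and the helper names (`upsample2x`, `add`, `relu`, `fuse`) and the toy values are hypothetical.

```python
def upsample2x(fmap):
    """Nearest-neighbour 2x upsampling of a 2D list.

    Stand-in for the learned transposed convolution in the real model.
    """
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]  # repeat each column twice
        out.append(wide)
        out.append(list(wide))                     # repeat each row twice
    return out


def add(a, b):
    """Element-wise sum of two feature maps of identical size."""
    assert len(a) == len(b) and len(a[0]) == len(b[0]), "shape mismatch"
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def relu(fmap):
    """ReLU activation applied to every element."""
    return [[max(0.0, v) for v in row] for row in fmap]


def fuse(pool3, pool4, pool5):
    a = upsample2x(pool5)  # result A: pool5 upsampled 2x
    b = add(a, pool4)      # result B: A + pool4
    c = upsample2x(b)      # result C: B upsampled 2x
    d = add(c, pool3)      # result D: C + pool3
    return relu(d)         # final result


# Toy maps: pool5 is 1x1, pool4 is 2x2, pool3 is 4x4.
pool5 = [[1.0]]
pool4 = [[0.5, -2.0], [0.0, 1.0]]
pool3 = [[0.0] * 4 for _ in range(4)]
result = fuse(pool3, pool4, pool5)
```

Note that in this sketch the result has the spatial size of pool3, because only two 2x upsampling steps are applied.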

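Model code

Note that the code below uses four convolution-and-pooling stages followed by four transposed convolutions, with skip additions from pool3, pool2, and pool1, rather than the five-pool layout listed above.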

    import tensorflow as tf  # TensorFlow 1.x API (tf.random_normal, tf.layers)

    # Convolution filters ('sub') are [height, width, in_channels, out_channels].
    # Transposed-convolution filters ('up') are [height, width, out_channels, in_channels].
    weight = {
        'w1sub': tf.Variable(tf.random_normal([8, 8, 1, 8], stddev=0.1)),
        'w2sub': tf.Variable(tf.random_normal([4, 4, 8, 16], stddev=0.1)),
        'w3sub': tf.Variable(tf.random_normal([2, 2, 16, 32], stddev=0.1)),
        'w4sub': tf.Variable(tf.random_normal([2, 2, 32, 64], stddev=0.1)),
        'w1up': tf.Variable(tf.random_normal([2, 2, 32, 64], stddev=0.1)),
        'w2up': tf.Variable(tf.random_normal([2, 2, 16, 32], stddev=0.1)),
        'w3up': tf.Variable(tf.random_normal([2, 2, 8, 16], stddev=0.1)),
        'w4up': tf.Variable(tf.random_normal([2, 2, 1, 8], stddev=0.1)),
    }

    # One bias per output channel of the matching layer.
    biases = {
        'b1sub': tf.Variable(tf.random_normal([8], stddev=0.1)),
        'b2sub': tf.Variable(tf.random_normal([16], stddev=0.1)),
        'b3sub': tf.Variable(tf.random_normal([32], stddev=0.1)),
        'b4sub': tf.Variable(tf.random_normal([64], stddev=0.1)),
        'b1up': tf.Variable(tf.random_normal([32], stddev=0.1)),
        'b2up': tf.Variable(tf.random_normal([16], stddev=0.1)),
        'b3up': tf.Variable(tf.random_normal([8], stddev=0.1)),
        'b4up': tf.Variable(tf.random_normal([1], stddev=0.1)),
    }
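The two groups of filters follow different shape conventions: `tf.nn.conv2d` expects `[height, width, in_channels, out_channels]`, while `tf.nn.conv2d_transpose` expects `[height, width, out_channels, in_channels]`. The following plain-Python sketch checks that the channel counts in the weight dictionary chain together. The shapes are copied from the dictionary above, while the helper names (`down_channels`, `up_channels`, `trace_channels`) are hypothetical and exist only for this check.

```python
# Filter shapes copied from the weight dictionary.
DOWN = {
    "w1sub": (8, 8, 1, 8),
    "w2sub": (4, 4, 8, 16),
    "w3sub": (2, 2, 16, 32),
    "w4sub": (2, 2, 32, 64),
}
UP = {
    "w1up": (2, 2, 32, 64),
    "w2up": (2, 2, 16, 32),
    "w3up": (2, 2, 8, 16),
    "w4up": (2, 2, 1, 8),
}


def down_channels(shape):
    """conv2d filter layout: [h, w, in_channels, out_channels]."""
    _, _, c_in, c_out = shape
    return c_in, c_out


def up_channels(shape):
    """conv2d_transpose filter layout: [h, w, out_channels, in_channels]."""
    _, _, c_out, c_in = shape
    return c_in, c_out


def trace_channels(input_channels=1):
    """Walk through all eight layers and record the channel count after each."""
    channels = [input_channels]
    layers = [(n, down_channels(s)) for n, s in DOWN.items()]
    layers += [(n, up_channels(s)) for n, s in UP.items()]
    for name, (c_in, c_out) in layers:
        if c_in != channels[-1]:
            raise ValueError(f"{name} expects {c_in} channels, got {channels[-1]}")
        channels.append(c_out)
    return channels


channel_trace = trace_channels()
```

The trace is symmetric, which is what allows each upsampled map to be added to the pooling result with the same number of channels.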

    def ForwardProcess(inputBatch, w, b, num_size, istrain=False):
        """Build the forward graph. num_size is the batch size used in output_shape."""
        # Reshape the input to NHWC: 400x400 single-channel images.
        inputBatch_r = tf.reshape(inputBatch, shape=[-1, 400, 400, 1])
        if istrain:
            # Apply dropout to the input during training only.
            inputBatch_r = tf.nn.dropout(inputBatch_r, keep_prob=0.9)

        # Batch normalization uses batch statistics only when istrain is True.
        # During training, run the ops in tf.GraphKeys.UPDATE_OPS as well so
        # that the moving averages used at inference time are updated.

        # Stage 1: 400x400x1 -> 200x200x8
        conv1 = tf.nn.conv2d(inputBatch_r, w['w1sub'], strides=[1, 1, 1, 1], padding='SAME')
        conv1 = tf.layers.batch_normalization(conv1, training=istrain)
        conv1 = tf.nn.relu(tf.nn.bias_add(conv1, b['b1sub']))
        pool1 = tf.nn.max_pool(conv1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
        print('pool1 shape:', pool1.get_shape())

        # Stage 2: 200x200x8 -> 100x100x16
        conv2 = tf.nn.conv2d(pool1, w['w2sub'], strides=[1, 1, 1, 1], padding='SAME')
        conv2 = tf.layers.batch_normalization(conv2, training=istrain)
        conv2 = tf.nn.relu(tf.nn.bias_add(conv2, b['b2sub']))
        pool2 = tf.nn.max_pool(conv2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
        print('pool2 shape:', pool2.get_shape())

        # Stage 3: 100x100x16 -> 50x50x32
        conv3 = tf.nn.conv2d(pool2, w['w3sub'], strides=[1, 1, 1, 1], padding='SAME')
        conv3 = tf.layers.batch_normalization(conv3, training=istrain)
        conv3 = tf.nn.relu(tf.nn.bias_add(conv3, b['b3sub']))
        pool3 = tf.nn.max_pool(conv3, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
        print('pool3 shape:', pool3.get_shape())

        # Stage 4: 50x50x32 -> 25x25x64
        conv4 = tf.nn.conv2d(pool3, w['w4sub'], strides=[1, 1, 1, 1], padding='SAME')
        conv4 = tf.layers.batch_normalization(conv4, training=istrain)
        conv4 = tf.nn.relu(tf.nn.bias_add(conv4, b['b4sub']))
        pool4 = tf.nn.max_pool(conv4, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
        print('pool4 shape:', pool4.get_shape())

        # Transposed convolutions (deconvolution), each followed by a skip addition.
        print('Input shape of transposed convolution 1:', pool4.get_shape())
        d_conv1 = tf.nn.conv2d_transpose(value=pool4, filter=w['w1up'], output_shape=[num_size, 50, 50, 32],
                                         strides=[1, 2, 2, 1], padding='SAME')
        d_conv1 = tf.add(d_conv1, pool3)  # skip connection from pool3
        d_conv1 = tf.nn.bias_add(d_conv1, b['b1up'])
        d_conv1 = tf.layers.batch_normalization(d_conv1, training=istrain)
        d_conv1 = tf.nn.relu(d_conv1)

        print('Input shape of transposed convolution 2:', d_conv1.get_shape())
        d_conv2 = tf.nn.conv2d_transpose(value=d_conv1, filter=w['w2up'], output_shape=[num_size, 100, 100, 16],
                                         strides=[1, 2, 2, 1], padding='SAME')
        d_conv2 = tf.add(d_conv2, pool2)  # skip connection from pool2
        d_conv2 = tf.nn.bias_add(d_conv2, b['b2up'])
        d_conv2 = tf.layers.batch_normalization(d_conv2, training=istrain)
        d_conv2 = tf.nn.relu(d_conv2)

        print('Input shape of transposed convolution 3:', d_conv2.get_shape())
        d_conv3 = tf.nn.conv2d_transpose(value=d_conv2, filter=w['w3up'], output_shape=[num_size, 200, 200, 8],
                                         strides=[1, 2, 2, 1], padding='SAME')
        d_conv3 = tf.add(d_conv3, pool1)  # skip connection from pool1
        d_conv3 = tf.nn.bias_add(d_conv3, b['b3up'])
        d_conv3 = tf.layers.batch_normalization(d_conv3, training=istrain)
        d_conv3 = tf.nn.relu(d_conv3)

        print('Input shape of transposed convolution 4:', d_conv3.get_shape())
        d_conv4 = tf.nn.conv2d_transpose(value=d_conv3, filter=w['w4up'], output_shape=[num_size, 400, 400, 1],
                                         strides=[1, 2, 2, 1], padding='SAME')
        # d_conv4 = tf.add(d_conv4, inputBatch_r)
        d_conv4 = tf.nn.bias_add(d_conv4, b['b4up'])
        d_conv4 = tf.layers.batch_normalization(d_conv4, training=istrain)
        d_conv4 = tf.nn.relu(d_conv4)

        # Output: a 400x400 single-channel map with the same size as the input.
        return d_conv4
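The spatial sizes in the forward pass can be checked by hand. With `padding='SAME'`, a stride-s convolution or pooling maps a side length n to ceil(n / s). A transposed convolution reverses this, but more than one output size maps back to the same input size, which is why `output_shape` has to be passed explicitly. The sketch below traces the sizes in plain Python. The helper names (`same_out`, `transpose_output_ok`, `trace_forward`) are hypothetical and the trace is only a check of the arithmetic, not part of the model.

```python
import math


def same_out(size, stride):
    """Output side length of a conv/pool layer with padding='SAME'."""
    return math.ceil(size / stride)


def transpose_output_ok(in_size, out_size, stride):
    """A transposed conv's output_shape is valid if the forward
    stride-`stride` SAME operation maps out_size back to in_size."""
    return same_out(out_size, stride) == in_size


def trace_forward(size=400, levels=4, targets=(50, 100, 200, 400)):
    """Side length after the input, each pooling, and each transposed conv."""
    sizes = [size]
    for _ in range(levels):
        # The stride-1 convolution keeps the size; the stride-2 pooling halves it.
        sizes.append(same_out(same_out(sizes[-1], 1), 2))
    for target in targets:
        if not transpose_output_ok(sizes[-1], target, 2):
            raise ValueError(f"output size {target} is not valid for input {sizes[-1]}")
        sizes.append(target)
    return sizes


size_trace = trace_forward()
# Both 49 and 50 pool down to 25, so the target size is ambiguous without output_shape.
ambiguous = [n for n in (48, 49, 50, 51) if transpose_output_ok(25, n, 2)]
```

Each value in the second half of the trace matches the size of the pooling result it is added to (50 with pool3, 100 with pool2, 200 with pool1), so the skip additions line up.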

To be continued.

