Testing the 【Ziya-Writing】 Large Model

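Reference: Ziya LLM series | The writing model ziya-writing is now open source! Ready to use out of the box, come claim your own personal writing assistant

Fengshenbang: https://github.com/IDEA-CCNL/Fengshenbang-LM

Ziya LLM: https://huggingface.co/IDEA-CCNL/Ziya-LLaMA-13B-v1.1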

Ziya-Writing models:

https://huggingface.co/IDEA-CCNL/Ziya-Writing-LLaMa-13B-v1 【old version】

https://huggingface.co/IDEA-CCNL/Ziya-Writing-13B-v2 【latest】

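Environment: a single RTX 4090 (24 GB). Quantization is required, because in fp16 the weights of a 13B-parameter model alone take about 26 GB.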
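A rough, weights-only estimate shows why a 24 GB card needs quantization for a 13B model. This is a sketch under simple assumptions: "13B" is taken as exactly 13e9 parameters, and activations, the KV cache and CUDA/framework overhead are ignored, so real usage is higher.

```python
# Rough, weights-only GPU memory estimate.
# Assumptions: "13B" means exactly 13e9 parameters; activations, the KV cache
# and CUDA/framework overhead are NOT included, so real usage is higher.

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(n_params: float, precision: str) -> float:
    """Size of the raw weights in GiB (1 GiB = 2**30 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 2**30


if __name__ == "__main__":
    for precision in ("fp16", "int8", "int4"):
        print(f"{precision}: {weight_memory_gib(13e9, precision):.1f} GiB")
```

Run as a script it prints 24.2 GiB for fp16, 12.1 GiB for int8 and 6.1 GiB for int4: the fp16 weights alone already fill the 4090, while 8-bit leaves roughly half the card for activations and the KV cache.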

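Introduction

ziya-writing is built on the base model ziya-llama-13B-pretrain-v1. It was first supervised-fine-tuned (SFT) on high-quality Chinese writing instruction data, then trained with RLHF on a large set of human-annotated ranking data. These two carefully run training stages give ziya-writing strong writing ability.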

Installation

- Download the model

git lfs install

git clone https://huggingface.co/IDEA-CCNL/Ziya-Writing-13B-v2.git

- Install the dependencies

pip install -r requirement.txt

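# requirement.txt
transformers>=4.28.1
bitsandbytes>=0.39.0
torch>=1.12.1
numpy>=1.24.3
llama-cpp-python>=0.1.62
accelerate  # required by load_in_8bit=True
streamlit>=1.24.0  # only for the web demo (st.chat_message / st.chat_input)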
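Before loading a 13B model it can save time to confirm the environment matches requirement.txt. The helper below is a hypothetical sketch, not part of the original setup: it only understands `name>=version` lines and compares the leading numeric parts of each version, so it is a quick sanity check rather than a replacement for pip's resolver.

```python
# Rough check of installed packages against the minimum versions in requirement.txt.
# Assumptions: only "name>=version" lines are understood; versions are compared on
# their leading numeric parts (so "2.0.1+cu118" is treated as 2.0.1).
from importlib.metadata import PackageNotFoundError, version

REQUIREMENTS = """\
transformers>=4.28.1
bitsandbytes>=0.39.0
torch>=1.12.1
numpy>=1.24.3
llama-cpp-python>=0.1.62
"""


def to_tuple(ver: str) -> tuple:
    """Turn a version string such as '2.0.1+cu118' into (2, 0, 1)."""
    parts = []
    for piece in ver.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def parse_min_versions(text: str) -> dict:
    """Map each 'name>=x.y.z' line to name -> (x, y, z); other lines are skipped."""
    minimums = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop inline comments and whitespace
        if ">=" not in line:
            continue
        name, _, ver = line.partition(">=")
        minimums[name.strip()] = to_tuple(ver.strip())
    return minimums


def check(text: str = REQUIREMENTS) -> list:
    """Return (name, installed version or None, ok) for every pinned requirement."""
    results = []
    for name, minimum in parse_min_versions(text).items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            results.append((name, None, False))
            continue
        results.append((name, installed, to_tuple(installed) >= minimum))
    return results


if __name__ == "__main__":
    for name, installed, ok in check():
        print(f"{'ok     ' if ok else 'PROBLEM'} {name}: installed={installed}")
```

Unpinned lines such as a bare `accelerate` are skipped by design, since they have no minimum to compare against.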

Testing

Using 8-bit quantization

Simple script

from transformers import AutoTokenizer
from transformers import LlamaForCausalLM
import torch

# The 8-bit weights live on the GPU, so the input ids must be moved there too
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

path = '/home/trimps/llm_model/Ziya-Writing-13B-v2'
query = "帮我写一份去西安的旅游计划"  # "Help me write a travel plan for a trip to Xi'an"

# Quantization happens here: load_in_8bit=True (bitsandbytes) converts the weights
# to int8 while loading. LlamaForCausalLM has no .quantize() method.
model = LlamaForCausalLM.from_pretrained(path, load_in_8bit=True, torch_dtype=torch.float16, device_map="auto")
tokenizer = AutoTokenizer.from_pretrained(path, use_fast=False)

# Single-turn prompt template: "<human>:{query}\n<bot>:"
inputs = '<human>:' + query.strip() + '\n<bot>:'
input_ids = tokenizer(inputs, return_tensors="pt").input_ids.to(device)

generate_ids = model.generate(
    input_ids,
    max_new_tokens=2048,
    do_sample=True,
    top_p=0.85,
    temperature=0.85,
    repetition_penalty=1.,
    eos_token_id=2,
    bos_token_id=1,
    pad_token_id=0)

# The decoded text contains the prompt followed by the model's reply
output = tokenizer.batch_decode(generate_ids)[0]
print(output)

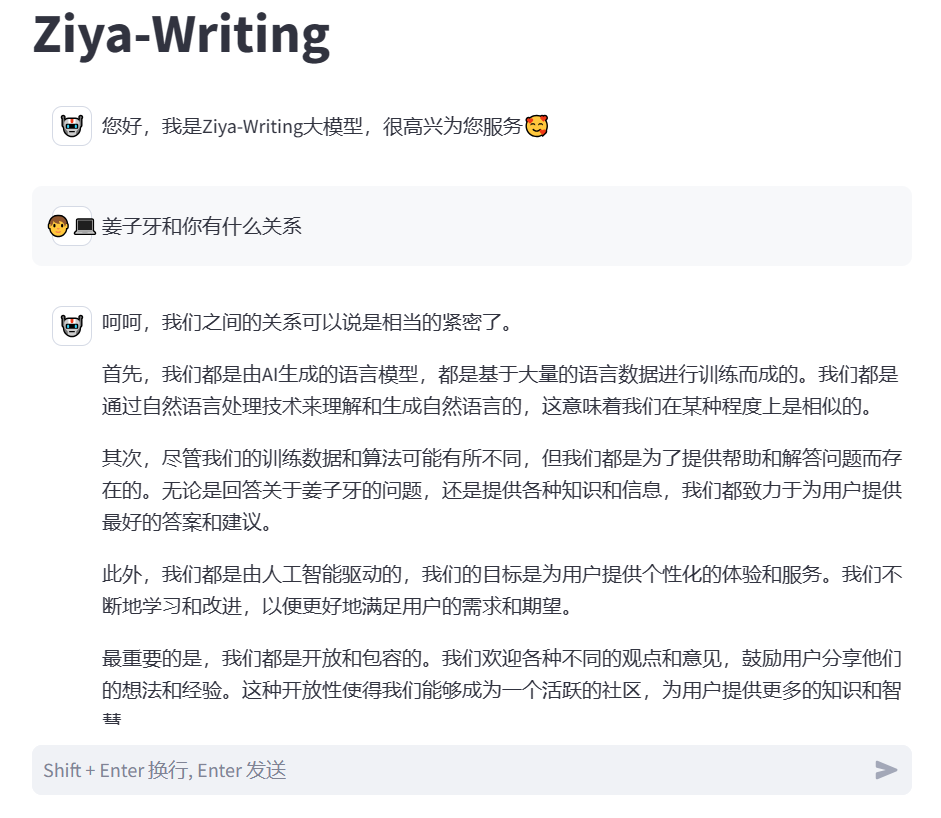
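The script prints the prompt together with the reply. The helpers below (`build_prompt` and `extract_reply` are hypothetical names, not part of Ziya or transformers) sketch one way to wrap a query in the `<human>:` / `<bot>:` template and cut the reply back out of the decoded text. They assume the decoded output reproduces the `<bot>:` tag verbatim and that the end-of-sequence token (id 2) decodes as `</s>`; slicing the generated ids past the prompt length before decoding avoids string parsing altogether.

```python
# Helpers for the single-turn template used in this post:
#   "<human>:" + query + "\n<bot>:"
# Assumptions: the decoded text reproduces "<bot>:" verbatim and the
# end-of-sequence token decodes as "</s>".
HUMAN_TAG = "<human>:"
BOT_TAG = "<bot>:"
EOS_TEXT = "</s>"


def build_prompt(query: str) -> str:
    """Wrap a user query in the single-turn template."""
    return HUMAN_TAG + query.strip() + "\n" + BOT_TAG


def extract_reply(decoded: str) -> str:
    """Return only the model's reply from a decoded 'prompt + completion' string."""
    reply = decoded.rsplit(BOT_TAG, 1)[-1]  # text after the last "<bot>:" tag
    reply = reply.split(EOS_TEXT, 1)[0]     # cut at the end-of-sequence marker, if present
    return reply.strip()


if __name__ == "__main__":
    decoded = "<s> " + build_prompt("Write a haiku") + " Autumn rains</s>"
    print(extract_reply(decoded))  # Autumn rains
    # str.strip treats its argument as a set of characters, not a substring:
    print("plans</s>".strip("</s>"))  # plan
```

Splitting on the literal `</s>` is the important design choice: `str.strip('</s>')` would also eat a trailing "s" or ">" that belongs to the reply.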

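Web version (Streamlit: save the script below as a .py file and launch it with streamlit run <file>.py)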

import time

import streamlit as st
import torch
from transformers import AutoTokenizer, LlamaForCausalLM

st.set_page_config(page_title="Ziya-Writing")
st.title("Ziya-Writing")

path = '/home/trimps/llm_model/Ziya-Writing-13B-v2'
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


@st.cache_resource  # load the model once and reuse it across Streamlit reruns
def init_model():
    model = LlamaForCausalLM.from_pretrained(path, load_in_8bit=True, torch_dtype=torch.float16, device_map="auto")
    tokenizer = AutoTokenizer.from_pretrained(path, use_fast=False)
    return model, tokenizer


# Run the model on one prompt and return only the generated reply
def exec_model(model, tokenizer, prompt):
    # Same template as the simple script
    inputs = '<human>:' + prompt.strip() + '\n<bot>:'
    input_ids = tokenizer(inputs, return_tensors="pt").input_ids.to(device)
    generate_ids = model.generate(
        input_ids,
        max_new_tokens=1024,
        do_sample=True,
        top_p=0.85,
        temperature=0.85,
        repetition_penalty=1.,
        eos_token_id=2,
        bos_token_id=1,
        pad_token_id=0)
    # Decode only the newly generated tokens; skip_special_tokens drops the trailing </s>.
    # (str.strip('</s>') would remove any of the characters <, /, s, > from both ends.)
    new_tokens = generate_ids[0][input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True).strip()


def main():
    model, tokenizer = init_model()

    with st.chat_message("assistant", avatar='🤖'):
        st.markdown("Hello, I'm the Ziya-Writing model, happy to help 🥰")

    if prompt := st.chat_input("Shift + Enter for a new line, Enter to send"):
        with st.chat_message("user", avatar='🧑‍💻'):
            st.markdown(prompt)
        print(f"[user] {prompt}", flush=True)
        start = time.time()
        with st.chat_message("assistant", avatar='🤖'):
            placeholder = st.empty()
            response = exec_model(model, tokenizer, prompt)
            # Free cached GPU memory between requests
            if torch.cuda.is_available():
                torch.cuda.empty_cache()
            placeholder.markdown(response)
        end = time.time()
        st.write("Time: {:.1f}s".format(end - start))
        print(response)


if __name__ == "__main__":
    main()
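Both `generate()` calls sample with `do_sample=True`, `temperature=0.85` and `top_p=0.85` (`repetition_penalty=1.` leaves the scores unchanged). The pure-Python sketch below illustrates what those two knobs do to one step's scores: temperature scaling first, then nucleus (top-p) filtering, mirroring the order transformers applies them. It is a simplified illustration on a made-up 4-token vocabulary; the real implementation works on tensors and differs in details such as tie handling and `min_tokens_to_keep`.

```python
# Simplified temperature + top-p (nucleus) sampling over a toy list of logits.
# Illustration only: not the transformers implementation.
import math
import random


def softmax_with_temperature(logits, temperature):
    """Divide logits by the temperature, then apply a numerically stable softmax."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]  # subtract the max to avoid overflow
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Indices of the smallest most-likely set whose total probability reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, mass = [], 0.0
    for i in order:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break
    return kept


def sample_next_token(logits, temperature=0.85, top_p=0.85, rng=random):
    """Temperature first, then top-p filtering, then draw from the remaining tokens."""
    probs = softmax_with_temperature(logits, temperature)
    kept = top_p_filter(probs, top_p)
    weights = [probs[i] for i in kept]  # random.choices renormalises the weights itself
    return rng.choices(kept, weights=weights, k=1)[0]


if __name__ == "__main__":
    toy_logits = [2.0, 1.0, 0.5, -1.0]  # made-up scores for a 4-token vocabulary
    print(top_p_filter(softmax_with_temperature(toy_logits, 0.85), 0.85))
    print(sample_next_token(toy_logits, rng=random.Random(0)))
```

A temperature below 1 sharpens the distribution, so with the same `top_p` fewer low-probability tokens survive the filter; raising either value makes the writing more varied and less predictable.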

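- PS:

- The writing quality feels roughly on par with general-purpose models of the same size (e.g., Baichuan2-13B).

- Running it on a single 4090 makes the fans so loud the card sounds ready to explode.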