Internet

0x01 URL的解析/反解析/连接

解析

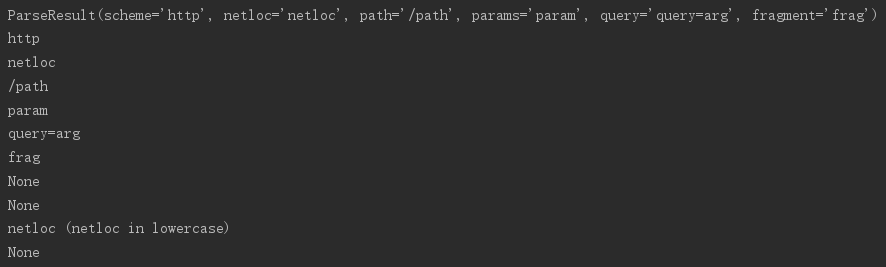

urlparse()--分解URL

# -*- coding: UTF-8 -*- from urlparse import urlparse url = 'http://user:pwd@NetLoc:80/p1;param/p2?query=arg#frag' parsed = urlparse(url) print parsed print parsed.scheme print parsed.netloc print parsed.path print parsed.params print parsed.query print parsed.fragment print parsed.username print parsed.password print parsed.hostname,'(netloc in lowercase)' print parsed.port

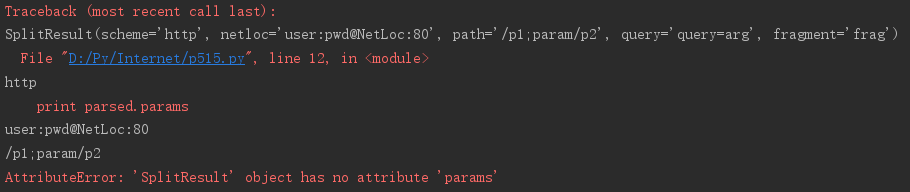

urlsplit()--替换urlparse(),但不会分解参数。(没有params属性)

# -*- coding: UTF-8 -*- from urlparse import urlsplit url = 'http://user:pwd@NetLoc:80/p1;param/p2?query=arg#frag' parsed = urlsplit(url) print parsed print parsed.scheme print parsed.netloc print parsed.path print parsed.params print parsed.query print parsed.fragment print parsed.username print parsed.password print parsed.hostname,'(netloc in lowercase)' print parsed.port

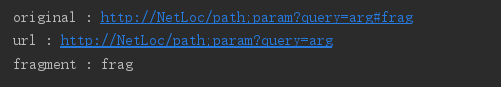

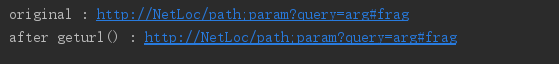

urldefrag()--从URL中剥离出片段标识符

# -*- coding: UTF-8 -*- from urlparse import urldefrag url = 'http://NetLoc/path;param?query=arg#frag' print 'original :',url url,fragment = urldefrag(url) print 'url :',url print 'fragment :',fragment

反解析

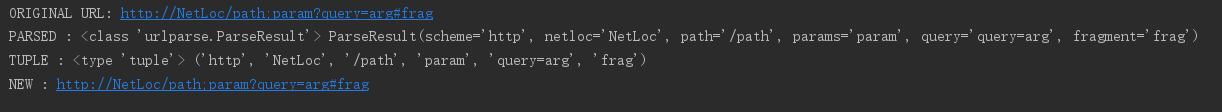

geturl()--只适用于urlparse()或urlsplit()返回的对象

# -*- coding: UTF-8 -*- from urlparse import urlparse url = 'http://NetLoc/path;param?query=arg#frag' print 'original :',url parsed = urlparse(url) print 'after geturl() :',parsed.geturl()

urlunparse()--将包含串的普通元组拼接成一个URL(如果输入URL包含多余部分,重新构造的URL可能会将其去除)

# -*- coding: UTF-8 -*- from urlparse import urlparse,urlunparse url = 'http://NetLoc/path;param?query=arg#frag' print 'ORIGINAL URL:',url parsed = urlparse(url) print 'PARSED :',type(parsed),parsed t = parsed[:] print 'TUPLE :',type(t),t print 'NEW :',urlunparse(t)

连接

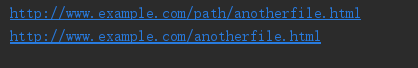

urljoin()--由相对片段构造绝对URL

# -*- coding: UTF-8 -*- from urlparse import urljoin print urljoin('http://www.example.com/path/file.html','anotherfile.html') print urljoin('http://www.example.com/path/file.html','../anotherfile.html')

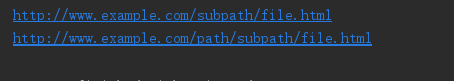

# -*- coding: UTF-8 -*- from urlparse import urljoin print urljoin('http://www.example.com/path/','/subpath/file.html') print urljoin('http://www.example.com/path/','subpath/file.html')

注:如果连接到URL的路径以斜线开头(/),这会将URL的路径重置为顶级路径。如果不是以一个斜线开头,则追加到当前URL路径的末尾。

0x02 BaseHTTPServer--实现web服务器的基类

HTTP GET

下面一个示例展示了一个请求处理器如何向客户返回一个响应

1 # -*- coding: UTF-8 -*- 2 3 from BaseHTTPServer import BaseHTTPRequestHandler 4 import urlparse 5 6 class GetHandler (BaseHTTPRequestHandler): 7 def do_GET(self): 8 parsed_path = urlparse.urlparse(self.path) 9 message_parts = [ 10 'CLIENT VALUES:', 11 'client_address=%s (%s)' % (self.client_address, 12 self.address_string()), 13 'command=%s' % self.command, 14 'path=%s' % self.path, 15 'real_path=%s' % parsed_path.path, 16 'query=%s' % self.request_version, 17 '', 18 'SERVER VALUES:', 19 'server_version=%s' % self.server_version, 20 'sys_version=%s' % self.sys_version, 21 'protocol_version=%s' % self.protocol_version, 22 '', 23 'HEADERS RECEIVED:', 24 ] 25 for name,value in sorted(self.headers.items()): 26 message_parts.append('%s=%s' % (name,value.rstrip())) 27 message_parts.append('') 28 message = '\r\n'.join(message_parts) 29 self.send_response(200) 30 self.end_headers() 31 self.wfile.write(message) 32 return 33 34 if __name__ == '__main__': 35 from BaseHTTPServer import HTTPServer 36 server = HTTPServer(('localhost',8080),GetHandler) 37 print 'Starting server,use <Ctrl+C> to stop' 38 server.serve_forever()

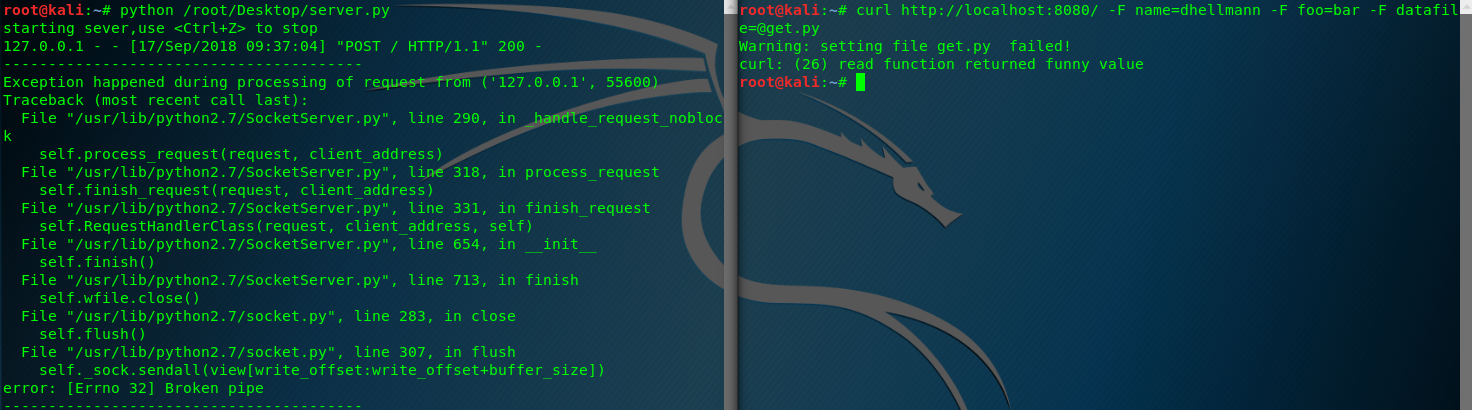

HTTP POST

支持POST请求需要多做一些工作,因为基类不会自动解析表单数据。cgi模块提供了FieldStorage类,如果给定了正确的输入,它知道如何解析表单。

1 # -*- coding: UTF-8 -*- 2 3 from BaseHTTPServer import BaseHTTPRequestHandler 4 import cgi 5 6 7 class PostHandler(BaseHTTPRequestHandler): 8 def do_POST(self): 9 # parse the form data posted 10 form = cgi.FieldStorage( 11 fp=self.rfile, 12 headers=self.headers, 13 environ={'REQUEST_METHOD': 'POST', 14 'CONTENT_TYPE': self.headers['Content-Type'], 15 }) 16 17 # begin the response 18 self.send_response(200) 19 self.end_headers() 20 self.wfile.write('Client:%s\n' % str(self.client_address)) 21 self.wfile.write('User-agent:%s\n' % str(self.headers['user-agent'])) 22 self.wfile.write('Path:%s\n' % self.path) 23 self.wfile.write('Form data:\n') 24 25 # Echo back information about what was posted in the form 26 for field in form.keys(): 27 field_item = form[field] 28 if field_item.filename: 29 # the field contains an uploaded file 30 file_data = field_item.file.read() 31 file_len = len(file_data) 32 del file_data 33 self.wfile.write( 34 '\tUpload %s as "%s" (%d bytes)\n' % (field, field_item.filename, file_len)) 35 else: 36 # regular form values 37 self.wfile.write('\t%s=%s\n' % (field, form[field].value)) 38 39 return 40 41 42 if __name__ == '__main__': 43 from BaseHTTPServer import HTTPServer 44 server = HTTPServer(('localhost', 8080), PostHandler) 45 print 'starting sever,use <Ctrl+Z> to stop' 46 server.serve_forever()

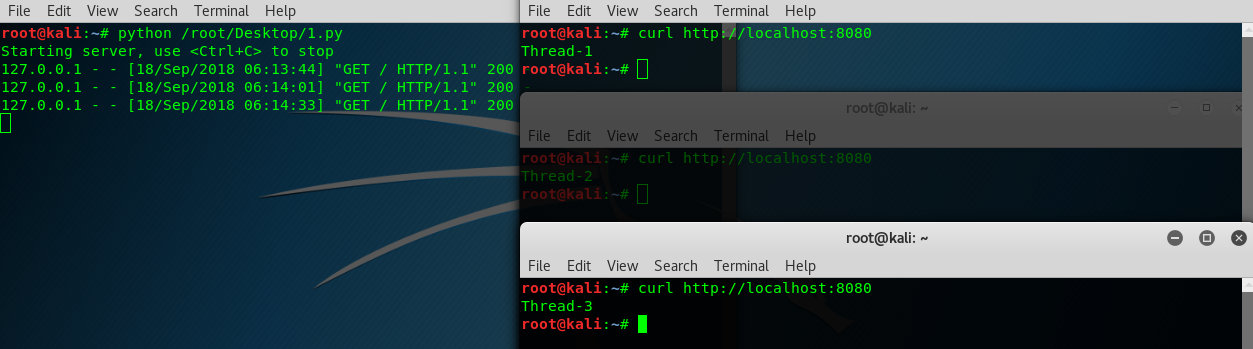

线程与进程

HTTPServer是SocketServer.TCPServer的一个子类,不使用多线程或者多进程来处理请求。要增加线程或进程,需要使用相应的mix-in技术从SocketServer创建一个新类。

1 # -*- coding: UTF-8 -*- 2 3 from BaseHTTPServer import HTTPServer, BaseHTTPRequestHandler 4 from SocketServer import ThreadingMixIn 5 import threading 6 7 class Handler(BaseHTTPRequestHandler): 8 def do_GET(self): 9 self.send_response(200) 10 self.end_headers() 11 message=threading.currentThread().getName() 12 self.wfile.write(message) 13 self.wfile.write('\n') 14 return 15 16 class ThreadedHTTPServer(ThreadingMixIn,HTTPServer): 17 """Handler requests in a separate thread.""" 18 19 if __name__ == '__main__': 20 server = ThreadedHTTPServer(('localhost',8080),Handler) 21 print 'Starting server, use <Ctrl+C> to stop' 22 server.serve_forever()

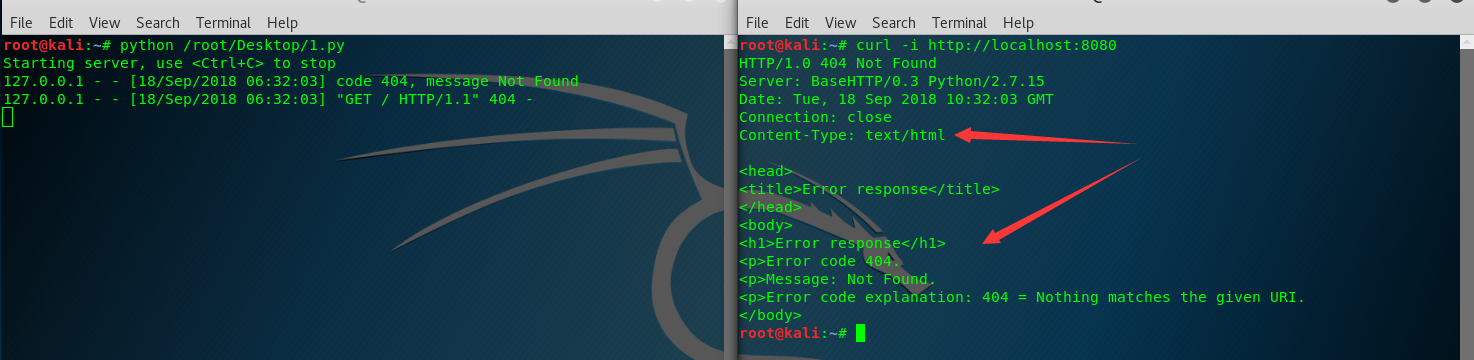

处理错误

1 # -*- coding: UTF-8 -*- 2 3 from BaseHTTPServer import BaseHTTPRequestHandler 4 5 class ErrorHandler(BaseHTTPRequestHandler): 6 def do_GET(self): 7 self.send_error(404) 8 return 9 10 if __name__ == '__main__' 11 from BaseHTTPServer import HTTPServer 12 server = HTTPServer(('localhost',8080),ErrorHandler) 13 print 'Starting server, use <Ctrl+C> to stop' 14 server.serve_forever()

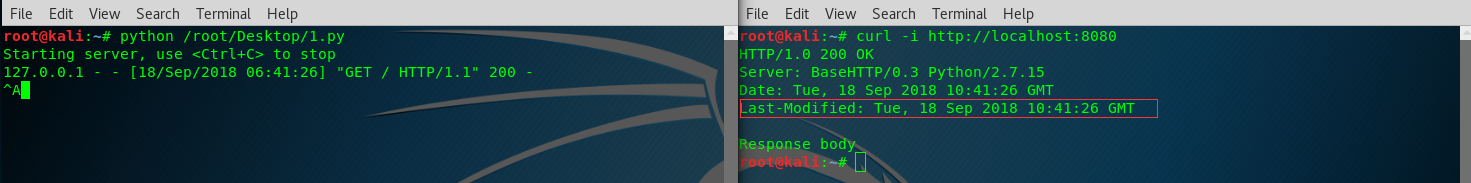

设置首部

send_header()方法将向HTTP响应添加首部数据。

1 # -*- coding: UTF-8 -*- 2 3 from BaseHTTPServer import BaseHTTPRequestHandler 4 import urlparse 5 import time 6 7 class GetHandler(BaseHTTPRequestHandler): 8 def do_GET(self): 9 self.send_response(200) 10 self.send_header('Last-Modified', 11 self.date_time_string(time.time())) 12 self.end_headers() 13 self.wfile.write('Response body \n') 14 return 15 16 if __name__ == '__main__': 17 from BaseHTTPServer import HTTPServer 18 server = HTTPServer(('localhost',8080),GetHandler) 19 print 'Starting server, use <Ctrl+C> to stop' 20 server.serve_forever()

0x03 urllib--网络资源访问

作用:访问不需要验证的远程资源/coocie等等。

利用缓存实现简单获取

urllib提供的urlretrieve()函数提供下载数据的功能。参数:1.URL 2.存放数据的一个临时文件和一个报告下载进度的函数。另外如果UTL指示一个表单,要求提交数据,那么urlretrieve()还有有一个参数表示要传递的数据。调用程序可以直接删除这个文件,或者将这个文件作为一个缓存,使用urlcleanup()将其删除。

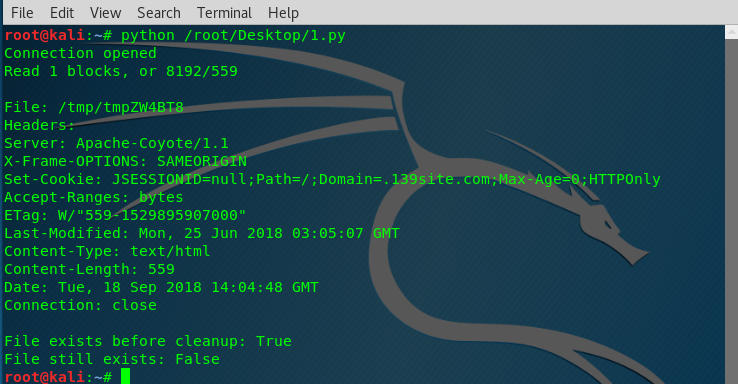

使用一个HTTP GET请求从一个web服务器获取数据的例子:

1 # -*- coding: UTF-8 -*- 2 3 import urllib 4 import os 5 6 def reporthook(blocks_read, block_size, total_size): 7 """total_size is reported in bytes, 8 block_size is the amount read each time. 9 blocks_read is the number of blocks successfully read. 10 """ 11 if not blocks_read: 12 print 'Connection opened' 13 return 14 if total_size < 0: 15 #unknown size 16 print 'Read %d blocks (%d bytes)' % (blocks_read,blocks_read* block_size) 17 else: 18 amount_read = blocks_read * block_size 19 print 'Read %d blocks, or %d/%d' % (blocks_read,amount_read,total_size) 20 return 21 22 try: 23 filename,msg = urllib.urlretrieve('http://blog.doughellmann.com/', reporthook=reporthook) 24 25 print 26 print 'File:',filename 27 print 'Headers:' 28 print msg 29 print 'File exists before cleanup:', os.path.exists(filename) 30 31 finally: 32 urllib.urlcleanup() 33 print 'File still exists:', os.path.exists(filename)

参数编码

对参数编码并追加到URL,从而将它们传递到服务器。(error)

1 # -*- coding: UTF-8 -*- 2 3 import urllib 4 5 query_args = {'q':'query string','foo':'bar'} 6 encoded_args = urllib.urlencode(query_args) 7 print 'Encoded:', encoded_args 8 9 url = 'http://localhost:8080/?' + encoded_args 10 print urllib.urlopen(url).read()

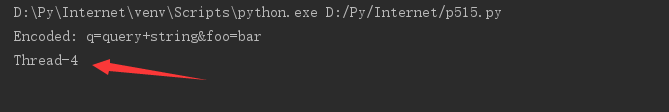

要使用变量的不同出现向查询串传入一个值序列,需要在调用urlencode()时将doseq设置为True。

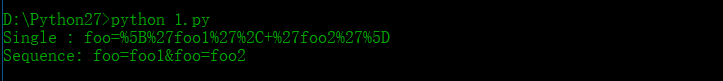

1 # -*- coding: UTF-8 -*- 2 3 import urllib 4 5 query_args = {'foo':['foo1','foo2']} 6 print 'Single :',urllib.urlencode(query_args) 7 print 'Sequence:',urllib.urlencode(query_args,doseq=True)

结果时一个查询串,同一个名称与多个值关联。

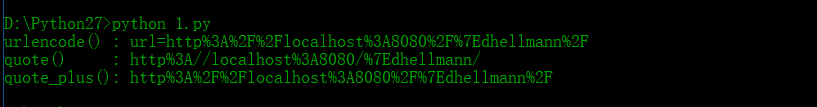

查询参数中可能有一些特殊字符,在服务器端对URL解析时这些字符会带来问题,所以在传递到urlencode()时要对这些特殊字符"加引号"。要在本地对特殊字符加引号从而得到字符串的“安全”版本。

直接使用quote()或quote_plus()函数。

1 # -*- coding: UTF-8 -*- 2 3 import urllib 4 5 url = 'http://localhost:8080/~dhellmann/' 6 print 'urlencode() :',urllib.urlencode({'url':url}) 7 print 'quote() :',urllib.quote(url) 8 print 'quote_plus():',urllib.quote_plus(url)

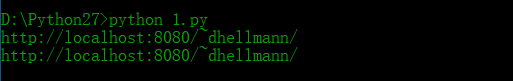

加引号的逆过程

相应的使用unquote()或unquote_plus()函数。

1 # -*- coding: UTF-8 -*- 2 3 import urllib 4 5 print urllib.unquote('http%3A//localhost%3A8080/%7Edhellmann/') 6 print urllib.unquote_plus('http%3A%2F%2Flocalhost%3A8080%2F%7Edhellmann%2F')

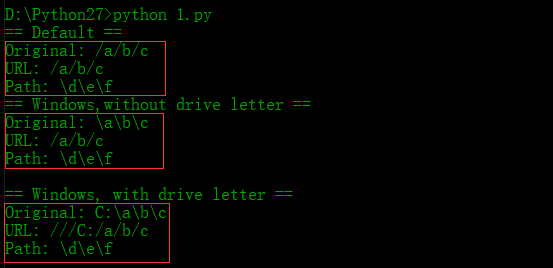

路径与URL

有些操作系统在本地文件和URL中使用不同的值分隔路径的不同部分。为了保证代码可移植,可以使用函数pathname2url()和url2pathname()来回转换。

1 # -*- coding: UTF-8 -*- 2 3 import os 4 from urllib import pathname2url,url2pathname 5 6 print '== Default ==' 7 path = '/a/b/c' 8 print 'Original:',path 9 print 'URL:',pathname2url(path) 10 print 'Path:',url2pathname('/d/e/f') 11 12 13 print '== Windows,without drive letter ==' 14 path = r'\a\b\c' 15 print 'Original:',path 16 print 'URL:',pathname2url(path) 17 print 'Path:',url2pathname('/d/e/f') 18 print 19 20 print '== Windows, with drive letter ==' 21 path = r'C:\a\b\c' 22 print 'Original:',path 23 print 'URL:',pathname2url(path) 24 print 'Path:',url2pathname('/d/e/f') 25 print

0x04 urllib2--网络资源访问

作用:用于打开扩展URL的库,这些URL可以通过定义定制协议处理器来扩展。

urllib2模块提供了一个更新的API来使用URL标识的Internet资源。

HTTP GET

...临场error

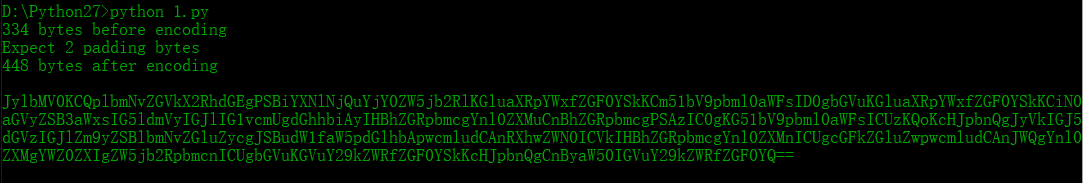

0x05 Base64--用ASCLL编码二进制数据

base64编码

1 # -*- coding: UTF-8 -*- 2 3 import base64 4 import textwrap 5 6 #load this sourse file and strip the header. 7 with open(__file__,'rt') as input: 8 raw = input.read() 9 initial_data = raw.split('#end_pymotw_headers')[1] 10 11 encoded_data = base64.b64encode(initial_data) 12 13 num_initial = len(initial_data) 14 15 #there will never be more than 2 padding bytes. 16 padding = 3 - (num_initial %3) 17 18 print '%d bytes before encoding' % num_initial 19 print 'Expect %d padding bytes' % padding 20 print '%d bytes after encoding' % len(encoded_data) 21 print 22 print encoded_data

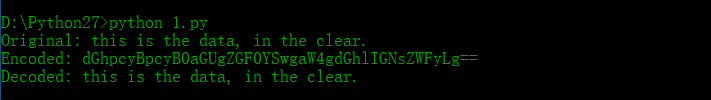

base64解码

1 # -*- coding: UTF-8 -*- 2 3 import base64 4 5 original_string = 'this is the data, in the clear.' 6 print 'Original:' , original_string 7 encoded_string = base64.b64encode(original_string) 8 9 print 'Encoded:',encoded_string 10 11 decoded_string = base64.b64decode(encoded_string) 12 print 'Decoded:',decoded_string

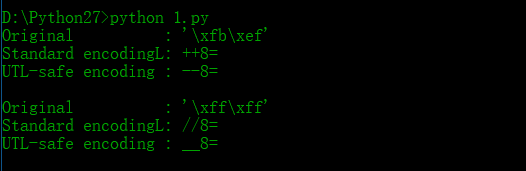

URL安全的变种

因为默认的Base64字母表可能使用+和/,这两个字符在URL中会用到,所以通常很必要使用一个候选编码来替换这些字符。+替换成-,/替换成下划线_

1 # -*- coding: UTF-8 -*- 2 3 import base64 4 5 encodes_with_pluses = chr(251) + chr(239) 6 encodes_with_slashes = chr(255) * 2 7 8 for original in [encodes_with_pluses,encodes_with_slashes]: 9 print 'Original :',repr(original) 10 print 'Standard encodingL:',base64.standard_b64encode(original) 11 print 'UTL-safe encoding :',base64.urlsafe_b64encode(original) 12 print

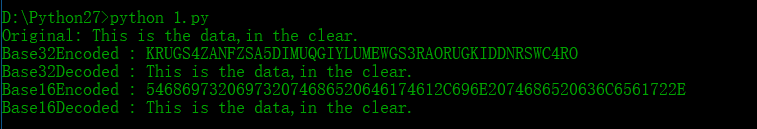

其他编码

1 # -*- coding: UTF-8 -*- 2 3 import base64 4 5 original_string = 'This is the data,in the clear.' 6 print 'Original:', original_string 7 8 #Base32字母表包括ASCLL集中的26个大写字母以及数字2~7 9 encoded_string = base64.b32encode(original_string) 10 print 'Base32Encoded :', encoded_string 11 decoded_string = base64.b32decode(encoded_string) 12 print 'Base32Decoded :', decoded_string 13 14 #Base16函数处理十六进制字母表 15 encoded_string = base64.b16encode(original_string) 16 print 'Base16Encoded :',encoded_string 17 encoded_string = base64.b16decode(encoded_string) 18 print 'Base16Decoded :',encoded_string

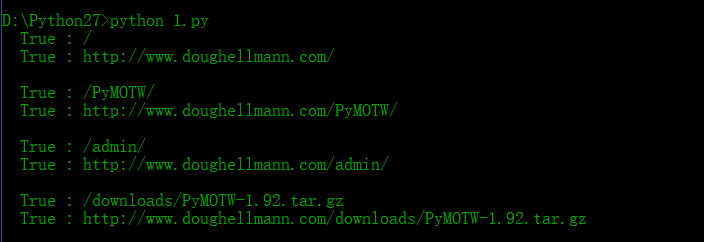

0x06 robotparser--网络蜘蛛访问控制

作用:解析用于控制网络蜘蛛的robots.txt文件

1 # -*- coding: UTF-8 -*- 2 3 import robotparser 4 import urlparse 5 6 AFENT_NAME = 'PyMOTW' 7 URL_BASE = 'http://www.doughellmann.com/' 8 parser = robotparser.RobotFileParser() 9 parser.set_url(urlparse.urljoin(URL_BASE,'robots.txt')) 10 parser.read() 11 12 PATHS = [ 13 '/', 14 '/PyMOTW/', 15 '/admin/', 16 '/downloads/PyMOTW-1.92.tar.gz', 17 ] 18 19 for path in PATHS: 20 print '%6s : %s' % (parser.can_fetch(AFENT_NAME,path),path) 21 url = urlparse.urljoin(URL_BASE,path) 22 print '%6s : %s' % (parser.can_fetch(AFENT_NAME,url),url) 23 print

can_fetch()的URL参数可以是一个相对于网站根目录的相对路径,也可以是一个完全URL。

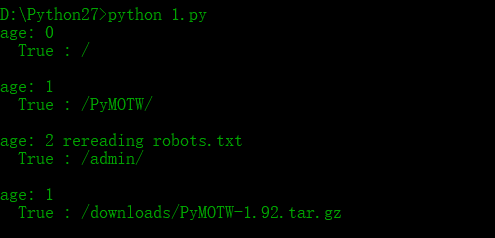

长久蜘蛛

如果一个应用需要花很长时间来处理它下载的资源,或者受到抑制,需要在很多次下载之间暂停,这样的移动应当以其下载内容的寿命为根据,定期检查新的robots.txt文件。这个寿命并不是自动管理的,不过模块提供了一些简便方法,利用这些方法可以更容易地跟踪文件的寿命。

1 # -*- coding: UTF-8 -*- 2 3 import robotparser 4 import urlparse 5 import time 6 7 AGENT_NAME = 'PyMOTW' 8 URL_BASE = 'http://www.doughellmann.com/' 9 parser = robotparser.RobotFileParser() 10 parser.set_url(urlparse.urljoin(URL_BASE,'robots.txt')) 11 parser.read() 12 parser.modified() 13 14 PATHS = [ 15 '/', 16 '/PyMOTW/', 17 '/admin/', 18 '/downloads/PyMOTW-1.92.tar.gz', 19 ] 20 21 for path in PATHS: 22 age = int(time.time() - parser.mtime()) 23 print 'age:',age, 24 if age>1: 25 print 'rereading robots.txt' 26 parser.read() 27 parser.modified() 28 else: 29 print 30 print '%6s : %s' % (parser.can_fetch(AGENT_NAME,path),path) 31 #Simulate delay in processing 32 time.sleep(1) 33 print

如果已下载的文件寿命超过了1秒,这个极端例子就会下载一个新的robots.txt文件。作为一个更好的长久应用,在下载整个文件之前可能会请求文件的修改世界。

0x07 Cookie--HTTP Cookie

创建和设置Cookie

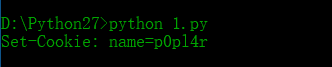

1 # -*- coding: UTF-8 -*- 2 3 import Cookie 4 5 c = Cookie.SimpleCookie() 6 c['name'] = 'p0pl4r' 7 print c

输出是一个合法的Set-Cookie首部,可以作为HTTP响应的一部分传递到客户。

Morsel

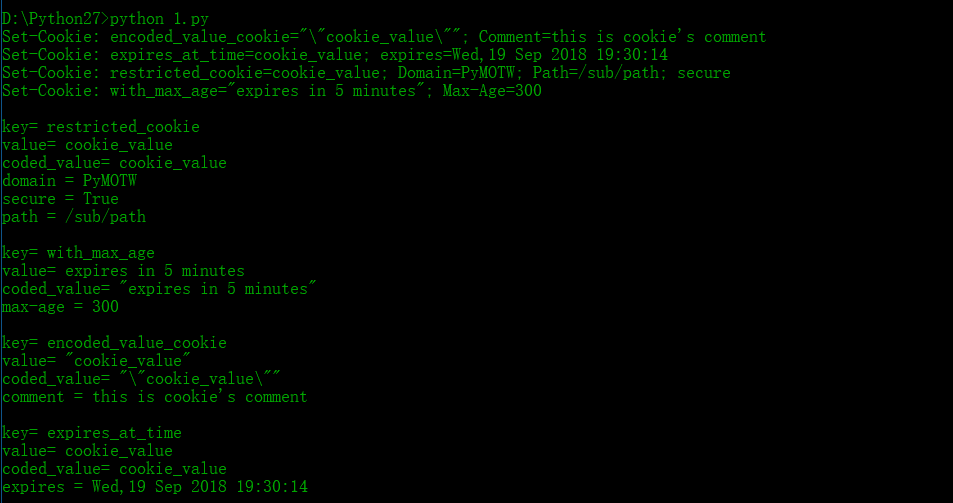

cookie的所有RFC属性都可以通过表示cookie值的Morsel对象来管理,如到期时间/路径/域。

1 # -*- coding: UTF-8 -*- 2 3 import Cookie 4 import datetime 5 6 def show_cookie(c): 7 print c 8 for key,morsel in c.iteritems(): 9 print 10 print 'key=',morsel.key 11 print 'value=',morsel.value 12 print 'coded_value=',morsel.coded_value 13 for name in morsel.keys(): 14 if morsel[name]: 15 print '%s = %s' % (name,morsel[name]) 16 17 c = Cookie.SimpleCookie() 18 19 #A cookie with a value that has to be encoded to fit into the headers 20 c['encoded_value_cookie'] = '"cookie_value"' 21 c['encoded_value_cookie']['comment'] = 'this is cookie\'s comment' 22 23 #A cookie that only applies to part of a site 24 c['restricted_cookie'] = 'cookie_value' 25 c['restricted_cookie']['path'] = '/sub/path' 26 c['restricted_cookie']['domain'] = 'PyMOTW' 27 c['restricted_cookie']['secure'] = 'True' 28 29 #A cookie that expires in 5 minutes 30 c['with_max_age'] = 'expires in 5 minutes' 31 c['with_max_age']['max-age'] = 300 # seconds 32 33 #A cookie that expires at a specific time 34 c['expires_at_time'] = 'cookie_value' 35 time_to_live = datetime.timedelta(hours = 1) 36 expires = datetime.datetime(2018,9,19,18,30,14)+time_to_live 37 38 #Date format:Wdy,DD-Mon-YY HH:MM:SS: GMT 39 expires_at_time = expires.strftime('%a,%d %b %Y %H:%M:%S') 40 c['expires_at_time']['expires'] = expires_at_time 41 show_cookie(c)