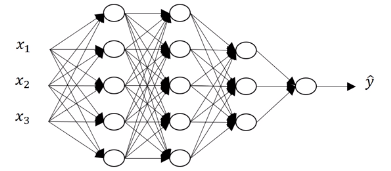

Neural Networks and Deep Learning (3): Deep Neural Networks

1. Forward propagation in a deep neural network:

(1) Single example:

z[1] = w[1]x + b[1]

a[1] = g[1](z[1])

z[2] = w[2]a[1] + b[2]

a[2] = g[2](z[2])

... ...

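z[L] = w[L]a[L-1] + b[L]

a[L] = g[L](z[L]) = ŷ

Here a[0] = x, L is the number of layers, and the same two steps z[l] = w[l]a[l-1] + b[l], a[l] = g[l](z[l]) are repeated for l = 1, ..., L.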
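To make the single-example recursion concrete, here is a minimal NumPy sketch. The notes do not fix g[l], so it assumes ReLU for the hidden layers and sigmoid for the output layer; the parameter dictionary keys (`W1`, `b1`, ...), the function name `forward_single` and the layer sizes are hypothetical choices for illustration.

```python
import numpy as np

def relu(z):
    return np.maximum(0, z)

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def forward_single(x, params, L):
    """Forward pass for one example x of shape (n[0], 1).

    Assumption: ReLU in hidden layers, sigmoid in the output layer.
    params holds hypothetical keys "W1", "b1", ..., "WL", "bL".
    """
    a = x  # a[0] = x
    for l in range(1, L + 1):
        z = params[f"W{l}"] @ a + params[f"b{l}"]   # z[l] = w[l] a[l-1] + b[l]
        a = sigmoid(z) if l == L else relu(z)        # a[l] = g[l](z[l])
    return a  # a[L] = y_hat

rng = np.random.default_rng(0)
layer_dims = [3, 4, 2, 1]   # hypothetical n[0], n[1], n[2], n[3]
L = len(layer_dims) - 1
params = {}
for l in range(1, L + 1):
    params[f"W{l}"] = rng.standard_normal((layer_dims[l], layer_dims[l - 1])) * 0.01
    params[f"b{l}"] = np.zeros((layer_dims[l], 1))

x = rng.standard_normal((3, 1))
y_hat = forward_single(x, params, L)
print(y_hat.shape)  # (1, 1)
```

The loop is just the two equations above applied layer by layer, starting from a[0] = x.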

(2) Vectorized over m examples (the columns of X are the training examples):

Z[1] = W[1]X + b[1]

A[1] = g[1](Z[1])

Z[2] = W[2]A[1] + b[2]

A[2] = g[2](Z[2])

... ...

Z[L] = W[L]A[L-1] + b[L]

A[L] = g[L](Z[L]) = Ŷ

Here A[0] = X, and b[l], of shape (n[l], 1), is broadcast across all m columns.
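The vectorized version replaces the column vector x with the matrix X. A minimal sketch, assuming ReLU hidden layers, a sigmoid output layer, and hypothetical dictionary keys (`W1`, `b1`, ...) and layer sizes; it also keeps A[l-1] and Z[l] for each layer, since backpropagation needs them:

```python
import numpy as np

def relu(Z):
    return np.maximum(0, Z)

def sigmoid(Z):
    return 1 / (1 + np.exp(-Z))

def forward(X, params, L):
    """Vectorized forward pass. X has shape (n[0], m): one example per column.

    Returns A[L] and a list of (A[l-1], Z[l]) pairs for later reuse.
    """
    A = X  # A[0] = X
    caches = []
    for l in range(1, L + 1):
        # b[l] has shape (n[l], 1) and is broadcast across the m columns
        Z = params[f"W{l}"] @ A + params[f"b{l}"]   # Z[l] = W[l] A[l-1] + b[l]
        caches.append((A, Z))
        A = sigmoid(Z) if l == L else relu(Z)       # A[l] = g[l](Z[l])
    return A, caches

rng = np.random.default_rng(0)
layer_dims = [3, 4, 2, 1]        # hypothetical n[0], ..., n[L]
L = len(layer_dims) - 1
params = {}
for l in range(1, L + 1):
    params[f"W{l}"] = rng.standard_normal((layer_dims[l], layer_dims[l - 1])) * 0.01
    params[f"b{l}"] = np.zeros((layer_dims[l], 1))

X = rng.standard_normal((3, 5))  # m = 5 examples stacked as columns
Y_hat, caches = forward(X, params, L)
print(Y_hat.shape)  # (1, 5)
```

Because each column is processed independently, column j of `Y_hat` should match a single-example pass on `X[:, [j]]`.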

2. Matrix dimensions in a neural network:

(1) Single example:

a[l], z[l], da[l], dz[l], b[l], db[l]: (n[l], 1)

w[l], dw[l]: (n[l], n[l-1])

(2) Vectorized:

A[l], Z[l], dA[l], dZ[l]: (n[l], m), where m is the number of training examples

b[l], db[l]: (n[l], 1) (still a single column; broadcast across the m examples)

W[l], dW[l]: (n[l], n[l-1])
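One way to sanity-check these dimension rules is to build parameters for a layer-size list and assert the shapes at every layer. A minimal sketch; the layer sizes, m = 10 and the `init_params` helper are illustrative assumptions, and the activation used does not affect the shapes:

```python
import numpy as np

def init_params(layer_dims, seed=0):
    """Hypothetical helper: W[l] of shape (n[l], n[l-1]), b[l] of shape (n[l], 1)."""
    rng = np.random.default_rng(seed)
    params = {}
    for l in range(1, len(layer_dims)):
        params[f"W{l}"] = rng.standard_normal((layer_dims[l], layer_dims[l - 1])) * 0.01
        params[f"b{l}"] = np.zeros((layer_dims[l], 1))
    return params

layer_dims = [5, 4, 3, 1]   # hypothetical n[0], ..., n[L]
m = 10
params = init_params(layer_dims)
A = np.random.default_rng(1).standard_normal((layer_dims[0], m))   # A[0] = X: (n[0], m)
for l in range(1, len(layer_dims)):
    W, b = params[f"W{l}"], params[f"b{l}"]
    assert W.shape == (layer_dims[l], layer_dims[l - 1])   # W[l]: (n[l], n[l-1])
    assert b.shape == (layer_dims[l], 1)                   # b[l]: (n[l], 1)
    Z = W @ A + b
    assert Z.shape == (layer_dims[l], m)                   # Z[l]: (n[l], m)
    A = np.maximum(0, Z)                                   # A[l]: same shape as Z[l]
print("all shapes OK")
```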

3. Neural network building blocks:

4. Backward propagation in a deep neural network:

(1) Single example:

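dz[l] = da[l] * g[l]'(z[l]), where * denotes element-wise multiplication (for the output layer with a sigmoid activation and the cross-entropy loss, this simplifies to dz[L] = a[L] - y)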

dw[l] = dz[l] a[l-1]^T

db[l] = dz[l]

da[l-1] = w[l]^T dz[l]

dz[l-1] = da[l-1] * g[l-1]'(z[l-1])

... ...
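The single-example backward recursion can be sketched as follows. It assumes ReLU hidden layers, a sigmoid output layer and the cross-entropy loss (the case in which dz[L] = a[L] - y holds); the function name `backward_single`, the dictionary keys and the layer sizes are hypothetical, and the ReLU derivative at exactly 0 is taken as 0.

```python
import numpy as np

def relu(z):
    return np.maximum(0, z)

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def backward_single(x, y, params, L):
    """Gradients for one example x of shape (n[0], 1) with a 0/1 label y.

    Assumes ReLU hidden layers, a sigmoid output layer and the loss
    -(y log a + (1 - y) log(1 - a)), so that dz[L] = a[L] - y.
    """
    # Forward pass, caching (a[l-1], z[l]) for each layer l
    a, cache = x, []
    for l in range(1, L + 1):
        z = params[f"W{l}"] @ a + params[f"b{l}"]
        cache.append((a, z))
        a = sigmoid(z) if l == L else relu(z)

    grads = {}
    dz = a - y                                   # dz[L] = a[L] - y
    for l in range(L, 0, -1):
        a_prev, _ = cache[l - 1]
        grads[f"dW{l}"] = dz @ a_prev.T          # dw[l] = dz[l] a[l-1]^T
        grads[f"db{l}"] = dz                     # db[l] = dz[l]
        if l > 1:
            da_prev = params[f"W{l}"].T @ dz     # da[l-1] = w[l]^T dz[l]
            _, z_prev = cache[l - 2]
            dz = da_prev * (z_prev > 0)          # dz[l-1] = da[l-1] * g[l-1]'(z[l-1]) for ReLU
    return grads

rng = np.random.default_rng(0)
layer_dims = [3, 4, 1]           # hypothetical n[0], n[1], n[2]
L = len(layer_dims) - 1
params = {}
for l in range(1, L + 1):
    params[f"W{l}"] = rng.standard_normal((layer_dims[l], layer_dims[l - 1])) * 0.5
    params[f"b{l}"] = rng.standard_normal((layer_dims[l], 1)) * 0.1

x = rng.standard_normal((3, 1))
y = 1.0
grads = backward_single(x, y, params, L)
print({k: v.shape for k, v in grads.items()})
```

Each gradient has the same shape as the parameter it belongs to, matching the dimension table in section 2.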

(2) Vectorized:

dZ[l] = dA[l] * g[l]'(Z[l]) (for the output layer with a sigmoid activation and the cross-entropy cost: dZ[L] = A[L] - Y)

dW[l] = 1/m * dZ[l] A[l-1]^T

db[l] = 1/m * np.sum(dZ[l], axis=1, keepdims=True)

dA[l-1] = W[l]^T dZ[l]

dZ[l-1] = dA[l-1] * g[l-1]'(Z[l-1])

... ...
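A vectorized backward pass following these formulas, together with a numerical gradient check on one weight, might look like the sketch below. It assumes ReLU hidden layers, a sigmoid output, the averaged cross-entropy cost J = -(1/m) Σ [y log a + (1 - y) log(1 - a)], and hypothetical helper names and layer sizes. The central-difference estimate (J(w + ε) - J(w - ε)) / 2ε is a standard way to catch indexing mistakes in backprop.

```python
import numpy as np

def relu(Z):
    return np.maximum(0, Z)

def sigmoid(Z):
    return 1 / (1 + np.exp(-Z))

def forward(X, params, L):
    A, caches = X, []
    for l in range(1, L + 1):
        Z = params[f"W{l}"] @ A + params[f"b{l}"]
        caches.append((A, Z))                    # keep A[l-1] and Z[l] for backprop
        A = sigmoid(Z) if l == L else relu(Z)
    return A, caches

def cost(AL, Y):
    m = Y.shape[1]
    return -np.sum(Y * np.log(AL) + (1 - Y) * np.log(1 - AL)) / m

def backward(AL, Y, params, caches, L):
    m = Y.shape[1]
    grads = {}
    dZ = AL - Y                                              # dZ[L] = A[L] - Y
    for l in range(L, 0, -1):
        A_prev, _ = caches[l - 1]
        grads[f"dW{l}"] = dZ @ A_prev.T / m                  # dW[l] = 1/m dZ[l] A[l-1]^T
        grads[f"db{l}"] = np.sum(dZ, axis=1, keepdims=True) / m
        if l > 1:
            dA_prev = params[f"W{l}"].T @ dZ                 # dA[l-1] = W[l]^T dZ[l]
            _, Z_prev = caches[l - 2]
            dZ = dA_prev * (Z_prev > 0)                      # dZ[l-1] = dA[l-1] * g[l-1]'(Z[l-1])
    return grads

rng = np.random.default_rng(0)
layer_dims = [3, 5, 4, 1]        # hypothetical n[0], ..., n[L]
L = len(layer_dims) - 1
params = {}
for l in range(1, L + 1):
    params[f"W{l}"] = rng.standard_normal((layer_dims[l], layer_dims[l - 1])) * 0.5
    params[f"b{l}"] = rng.standard_normal((layer_dims[l], 1)) * 0.1

X = rng.standard_normal((3, 8))                  # m = 8 toy examples
Y = (rng.random((1, 8)) > 0.5).astype(float)     # toy 0/1 labels
AL, caches = forward(X, params, L)
grads = backward(AL, Y, params, caches, L)

# Gradient check on W1[0, 0] with central differences
eps = 1e-6
def cost_with(w00):
    p = {k: v.copy() for k, v in params.items()}
    p["W1"][0, 0] = w00
    return cost(forward(X, p, L)[0], Y)

w = params["W1"][0, 0]
numeric = (cost_with(w + eps) - cost_with(w - eps)) / (2 * eps)
print(abs(numeric - grads["dW1"][0, 0]))  # should be close to 0
```

Note that the 1/m factor appears only in dW[l] and db[l]; dA[l-1] and dZ[l-1] are per-example quantities.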

5. Parameters and hyperparameters:

Parameters: W[1], b[1], W[2], b[2], ..., learned by gradient descent.

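Hyperparameters: values you must set yourself, and which in turn determine the W and b that training ends up with, e.g. the learning rate α; the number of iterations; the number of hidden layers L; the number of hidden units n[1], n[2], ...; the choice of activation function.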
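The split between the two shows up directly in code: in the sketch below, the hyperparameters are the arguments of a hypothetical `train` function, while the parameters W[l], b[l] are created and updated inside it by gradient descent. ReLU hidden layers, a sigmoid output, He-style initialization and the toy data are assumptions made for illustration; the activation function is also a hyperparameter but is fixed here to keep the sketch short.

```python
import numpy as np

def train(X, Y, hidden_units=(4,), learning_rate=0.1, num_iterations=1000, seed=0):
    """Hyperparameters are the arguments; parameters W[l], b[l] are learned."""
    rng = np.random.default_rng(seed)
    layer_dims = [X.shape[0], *hidden_units, 1]   # number of hidden layers = len(hidden_units)
    L = len(layer_dims) - 1
    params = {}
    for l in range(1, L + 1):
        # He-style scaling (an assumption); b[l] starts at zero
        params[f"W{l}"] = rng.standard_normal((layer_dims[l], layer_dims[l - 1])) * np.sqrt(2 / layer_dims[l - 1])
        params[f"b{l}"] = np.zeros((layer_dims[l], 1))
    m = X.shape[1]
    costs = []
    for _ in range(num_iterations):
        # Forward pass: ReLU hidden layers, sigmoid output
        A, caches = X, []
        for l in range(1, L + 1):
            Z = params[f"W{l}"] @ A + params[f"b{l}"]
            caches.append((A, Z))
            A = 1 / (1 + np.exp(-Z)) if l == L else np.maximum(0, Z)
        costs.append(-np.mean(Y * np.log(A) + (1 - Y) * np.log(1 - A)))
        # Backward pass with the gradient descent update applied layer by layer
        dZ = A - Y
        for l in range(L, 0, -1):
            A_prev, _ = caches[l - 1]
            dW = dZ @ A_prev.T / m
            db = np.sum(dZ, axis=1, keepdims=True) / m
            if l > 1:
                # uses W[l] before it is updated just below
                dZ = (params[f"W{l}"].T @ dZ) * (caches[l - 2][1] > 0)
            params[f"W{l}"] -= learning_rate * dW
            params[f"b{l}"] -= learning_rate * db
    return params, costs

rng = np.random.default_rng(1)
X = rng.standard_normal((2, 200))
Y = (X[0:1] + X[1:2] > 0).astype(float)   # hypothetical toy labels, shape (1, 200)
params, costs = train(X, Y, hidden_units=(4, 3), learning_rate=0.1, num_iterations=500)
print(costs[0], costs[-1])
```

Changing `learning_rate`, `num_iterations` or `hidden_units` changes the W and b that training produces, which is why these values are tuned by hand rather than learned.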