Crawling Hot Words with Python and Classifying Them for Data Analysis - [Simple Preparation] (2020 Winter Break Mini-Goal 05)

Date: 2020.01.27

Blog entry: 135

Monday

[If you want to use the code from this post, please leave a comment below before using it (that is, just let me know).]

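All posts in this series:

a. [Simple Preparation] (this post)

b. [Word Cloud + Data Import]

c. [Topology Data]

d. [Data Repair]

e. [Explanation Repair + Hot Word References]

f. [JSP Demo + Page Navigation]

g. [Hot Word Classification + Directory Generation]

h. [Hot Word Relationship Graph + Report Generation]

i. [App Development]

j. [Security Hardening]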

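Today I asked my teacher where to crawl hot words for the information technology field from. The answer was IT news sites. Hmm, that's interesting!

I found lots of IT websites, but most of them are full of ads, and their terms are not focused on the information field. So for now I'm using one relatively decent site as an example:

Data source: https://news.51cto.com/ (the final version may not use the data crawled from this site)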

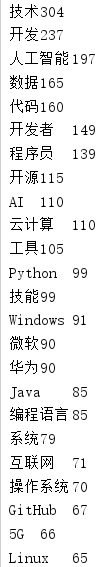

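Screenshot of where the hot words come from on the site:

As the screenshot shows, tags such as "智能" (intelligence), "技术" (technology), and "区块链" (blockchain) are the crawl targets.

The crawler loads more articles by repeatedly clicking the page's "load more" button, which runs the site's JS. Each click makes the crawl heavier: once you have clicked 100 times, the 101st run of the JS takes about 27 s! So with this method I stop after 100 clicks, which yields 5,607 records:

Crawler code: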

```python
import codecs
import time

import parsel
from selenium import webdriver
from selenium.webdriver.common.by import By


# [ Collection of special string-processing helpers ]
class StrSpecialDealer:
    @staticmethod
    def getReaction(stri):
        # Drop spaces, keep the text between the outer tags, then strip tabs and line breaks
        strs = str(stri).replace(" ", "")
        strs = strs[strs.find('>') + 1:strs.rfind('<')]
        strs = strs.replace("\t", "")
        strs = strs.replace("\r", "")
        strs = strs.replace("\n", "")
        return strs


class StringWriter:
    def __init__(self, path):
        self.filePath = path

    def makeFileNull(self):
        # Truncate (or create) the output file
        with codecs.open(self.filePath, "w", 'utf-8') as f:
            f.write("")

    def write(self, stri):
        # Append one line to the output file
        with codecs.open(self.filePath, "a", 'utf-8') as f:
            f.write(stri + "\n")


# [ Object for crawling a continuously loading page ]
class WebConnector:
    # ---[constructor: open the browser and the output file]
    def __init__(self):
        self.profile = webdriver.Firefox()
        self.profile.get('https://news.51cto.com/')
        self.sw = StringWriter("../testFile/info.txt")
        self.sw.makeFileNull()

    # ---[release the browser]
    def close(self):
        self.profile.quit()

    # Get the current HTML of the page, including content loaded by JS
    def getHTMLText(self):
        return self.profile.page_source

    # Extract every .tag element on the page and write its text to the file
    def getFirstChanel(self):
        index_sel = parsel.Selector(self.getHTMLText())
        links = index_sel.css('.tag').extract()
        print("Len=" + str(len(links)))
        for link in links:
            self.sw.write(StrSpecialDealer.getReaction(link))

    # Click the "load more" button once and give the new items time to load
    # (Selenium 4 API; Selenium 3 used find_element_by_css_selector)
    def getMore(self):
        self.profile.find_element(By.CSS_SELECTOR, ".listsmore").click()
        time.sleep(1)


def main():
    wc = WebConnector()
    try:
        for i in range(100):
            print(i)
            wc.getMore()
        # Read the tags once, after all articles have been loaded
        wc.getFirstChanel()
    finally:
        wc.close()


if __name__ == "__main__":
    main()
```
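To test the tag-extraction step without launching Firefox, here is a minimal sketch that uses only the standard library's `html.parser` in place of parsel's `.css('.tag')` plus `getReaction()`. The sample markup is hypothetical (the real 51cto page structure may differ), and the sketch assumes each tag element contains plain text rather than nested markup.

```python
from html.parser import HTMLParser


class TagTextParser(HTMLParser):
    """Collect the text of every element whose class list contains "tag".

    Hypothetical stdlib stand-in for parsel's .css('.tag') + getReaction();
    assumes tag elements hold plain text, not nested elements.
    """

    def __init__(self):
        super().__init__()
        self.in_tag = False
        self.tags = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value); value may be None
        classes = (dict(attrs).get("class") or "").split()
        if "tag" in classes:
            self.in_tag = True
            self.tags.append("")

    def handle_endtag(self, tag):
        self.in_tag = False

    def handle_data(self, data):
        if self.in_tag:
            # Same cleanup as getReaction: drop spaces, tabs and line breaks
            cleaned = (data.replace(" ", "").replace("\t", "")
                       .replace("\r", "").replace("\n", ""))
            self.tags[-1] += cleaned


def extract_tags(html):
    parser = TagTextParser()
    parser.feed(html)
    parser.close()
    return parser.tags


sample = '<div><a class="tag" href="#">区块链</a> <a class="tag other">  智能 </a><span>ad</span></div>'
print(extract_tags(sample))  # ['区块链', '智能']
```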

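After that, all that's left is counting the occurrences with MapReduce (you can also crawl Wikipedia and Baidu Baike to collect more information about each hot word).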
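As a rough picture of what the MapReduce count does, here is a single-process simulation of the map, shuffle, and reduce phases in plain Python. It is not Hadoop code; it only assumes, as the crawler above writes it, that `info.txt` holds one hot word per line.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    # Mapper: one hot word per line -> emit (word, 1); skip blank lines
    word = line.strip()
    return [(word, 1)] if word else []


def shuffle(pairs):
    # Shuffle: group every emitted value under its key
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    # Reducer: sum the counts for one word
    return key, sum(values)


def map_reduce_count(lines):
    pairs = chain.from_iterable(map_phase(line) for line in lines)
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


lines = ["区块链\n", "智能\n", "区块链\n", "\n"]
print(map_reduce_count(lines))  # {'区块链': 2, '智能': 1}
```

On a real cluster the mapper and reducer run as separate tasks and the framework performs the shuffle; the logic per phase stays the same.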

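Next comes the word-frequency count. Since this is only a test and the data set is small, I wrote a simple Python word-frequency program instead: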

```python
import codecs


class StringWriter:
    def __init__(self, path):
        self.filePath = path

    def makeFileNull(self):
        # Truncate (or create) the output file
        with codecs.open(self.filePath, "w", 'utf-8') as f:
            f.write("")

    def write(self, stri):
        # Append one line to the output file
        with codecs.open(self.filePath, "a", 'utf-8') as f:
            f.write(stri + "\n")


class Multi:
    def __init__(self, filepath):
        self.filePath = filepath

    def read(self):
        # Return all lines of the input file
        with open(self.filePath, mode='r', encoding='utf-8') as fr:
            return fr.readlines()


# One hot word and its occurrence count
class Bean:
    def __init__(self, name, num):
        self.name = name
        self.num = num

    def __addOne__(self):
        self.num = self.num + 1

    def __toString__(self):
        return self.name + "\t" + str(self.num)

    def __isName__(self, stri):
        return stri == self.name


# All distinct hot words seen so far
class BeanGroup:
    def __init__(self):
        self.data = []

    def __exist__(self, stri):
        for bean in self.data:
            if bean.__isName__(stri):
                return True
        return False

    def __addItem__(self, stri):
        if self.__exist__(stri):
            # Already present: increment its count
            for bean in self.data:
                if bean.__isName__(stri):
                    bean.__addOne__()
        else:
            # Not present yet: add it with a count of 1
            self.data.append(Bean(stri, 1))

    def __len__(self):
        return len(self.data)


def takenum(ele):
    return ele.num


def main():
    sw = StringWriter("../testFile/output.txt")
    sw.makeFileNull()
    bg = BeanGroup()
    m = Multi("../testFile/info.txt")
    for line in m.read():
        strs = str(line).replace("\n", "").replace("\r", "")
        bg.__addItem__(strs)
    # Most frequent first; write "word<TAB>count" lines
    bg.data.sort(key=takenum, reverse=True)
    for bean in bg.data:
        sw.write(bean.__toString__())


if __name__ == "__main__":
    main()
```
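`BeanGroup` scans the whole list for every line, so the count grows quadratically with the number of distinct words. For comparison, here is a sketch of the same count using the standard library's `collections.Counter`, which does one dictionary lookup per line. The helper names `count_words` and `to_report` are hypothetical; the output format mirrors `Bean.__toString__`.

```python
from collections import Counter


def count_words(lines):
    # Strip line endings exactly like the program above, then count
    return Counter(line.replace("\n", "").replace("\r", "") for line in lines)


def to_report(counter):
    # "word<TAB>count" lines, most frequent first; ties keep first-seen order
    return [f"{word}\t{num}" for word, num in counter.most_common()]


lines = ["区块链\n", "智能\n", "区块链\r\n"]
print(to_report(count_words(lines)))  # ['区块链\t2', '智能\t1']
```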

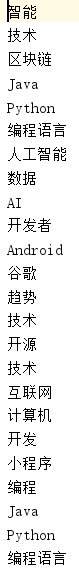

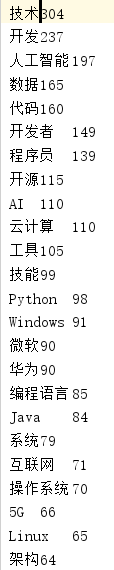

The counting results are as follows:

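Then I suddenly noticed that the results contained both "Github" and "GitHub". They are the same word, but I had counted them as two different ones! That caused a problem when I imported the data into the database, hahaha!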
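The post doesn't say which database was used or what exactly failed. One plausible cause, offered only as an assumption: if the word column is a case-insensitive unique key (MySQL's default collations compare case-insensitively, and SQLite offers `COLLATE NOCASE`), then "GitHub" and "Github" collide. The sketch below reproduces that with Python's built-in `sqlite3` and an in-memory database. The schema is hypothetical; only the table name `data` comes from the `__toSql__` method in the fixed code. It also uses `?` placeholders so the driver handles quoting instead of string-built SQL.

```python
import sqlite3


def import_counts(conn, rows):
    # Parameterized insert: the driver quotes the values, no string concatenation
    conn.executemany("INSERT INTO data VALUES (?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
# Hypothetical schema: the word is a case-insensitive primary key
conn.execute("CREATE TABLE data (name TEXT PRIMARY KEY COLLATE NOCASE, num INTEGER)")

try:
    import_counts(conn, [("GitHub", 30), ("Github", 12)])
except sqlite3.IntegrityError as e:
    # Under NOCASE the two spellings are equal, so the key is violated
    print("import failed:", e)
```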

The code after the fix:

```python
import codecs


class StringWriter:
    def __init__(self, path):
        self.filePath = path

    def makeFileNull(self):
        # Truncate (or create) the output file
        with codecs.open(self.filePath, "w", 'utf-8') as f:
            f.write("")

    def write(self, stri):
        # Append one line to the output file
        with codecs.open(self.filePath, "a", 'utf-8') as f:
            f.write(stri + "\n")


class Multi:
    def __init__(self, filepath):
        self.filePath = filepath

    def read(self):
        # Return all lines of the input file
        with open(self.filePath, mode='r', encoding='utf-8') as fr:
            return fr.readlines()


# One hot word and its occurrence count
class Bean:
    def __init__(self, name, num):
        self.name = name
        self.num = num

    def __addOne__(self):
        self.num = self.num + 1

    def __toString__(self):
        return self.name + "\t" + str(self.num)

    def __toSql__(self):
        # INSERT statement for the "data" table; single quotes in the word
        # are doubled so they cannot break the SQL string literal
        name = self.name.replace("'", "''")
        return "Insert into data VALUES ('" + name + "'," + str(self.num) + ");"

    def __isName__(self, stri):
        return compare(stri, self.name)


# All distinct hot words seen so far
class BeanGroup:
    def __init__(self):
        self.data = []

    def __exist__(self, stri):
        for bean in self.data:
            if bean.__isName__(stri):
                return True
        return False

    def __addItem__(self, stri):
        if self.__exist__(stri):
            # Already present (ignoring case): increment its count
            for bean in self.data:
                if bean.__isName__(stri):
                    bean.__addOne__()
        else:
            # Not present yet: add it with a count of 1 (first spelling is kept)
            self.data.append(Bean(stri, 1))

    def __len__(self):
        return len(self.data)


def takenum(ele):
    return ele.num


def compare(stri, dud):
    # Two words are the same if they match exactly or ignoring case (Github / GitHub)
    return stri == dud or stri.lower() == dud.lower()


def main():
    sw = StringWriter("../testFile/output.txt")
    sw.makeFileNull()
    bg = BeanGroup()
    m = Multi("../testFile/info.txt")
    for line in m.read():
        strs = str(line).replace("\n", "").replace("\r", "")
        bg.__addItem__(strs)
    # Most frequent first; write "word<TAB>count" lines
    bg.data.sort(key=takenum, reverse=True)
    for bean in bg.data:
        sw.write(bean.__toString__())


if __name__ == "__main__":
    main()
```
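The fix above compares lowercased names inside a linear scan of `BeanGroup`. The same rule can also be applied in a single pass by keying a dict on the lowercased word and remembering the first spelling seen, which is what `BeanGroup` ends up keeping. This is a sketch; `count_case_insensitive` is a hypothetical helper, not part of the original program.

```python
def count_case_insensitive(lines):
    counts = {}    # lowercased word -> count
    spelling = {}  # lowercased word -> first spelling seen
    for line in lines:
        word = line.replace("\n", "").replace("\r", "")
        key = word.lower()
        if key not in counts:
            counts[key] = 0
            spelling[key] = word
        counts[key] += 1
    # Most frequent first; sorted() is stable, so ties keep first-seen order
    ranked = sorted(counts.items(), key=lambda kv: kv[1], reverse=True)
    return [(spelling[key], num) for key, num in ranked]


print(count_case_insensitive(["GitHub\n", "区块链\n", "Github\n"]))
# [('GitHub', 2), ('区块链', 1)]
```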

Now it works!