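Python: Crawling the Letter List of the Beijing Municipal Government's 首都之窗 (Window of the Capital) Site [Follow-up]

Date: 2020.01.23

Blog issue: 131

Thursday

[If you want to use the code in this post, please leave a comment below first (just let me know).]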

// Overview of this blog series

1. Preparation

2. Crawling (this post)

3. Data processing

4. Presenting the results

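I rewrote the crawler script and ran it. The first run got stuck on page 27, because that page contains a letter of type "投诉" (complaint), which I had not anticipated! To solve this I rewrote the code. The new class differs from the previous overall class in the following ways: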

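1. Because the next page of data has to be fetched by calling JavaScript, I downloaded the Firefox driver from its website.

Installation reference: https://www.cnblogs.com/nuomin/p/8486963.html

This is Selenium + WebDriver put to use: it can simulate a person operating the browser (and of course it can execute JS code!).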

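# Import the package
from selenium import webdriver

# Use the driver to open the browser automatically and load the page
profile = webdriver.Firefox()
profile.get('http://www.beijing.gov.cn/hudong/hdjl/com.web.search.mailList.flow')

# Have the browser execute JS code
# ----[pageTurning(3) is the page's own "next page" JS call (no need to import any JS file or method)]
profile.execute_script("pageTurning(3);")

# Close the browser (a WebDriver object has no __close__ method; quit() ends the session)
profile.quit()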
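If an exception is raised between opening and closing the browser, the Firefox window stays open. One way to guard against that is a small context manager that always calls `quit()`. This is only a sketch: `managed_driver` and `FakeDriver` are hypothetical names introduced here, and the fake driver exists only so the example runs without a browser.

```python
from contextlib import contextmanager


@contextmanager
def managed_driver(factory):
    """Build a driver with `factory` and always call quit() on exit."""
    driver = factory()
    try:
        yield driver
    finally:
        # Runs even if the body of the with-block raises.
        driver.quit()


class FakeDriver:
    """Hypothetical stand-in for a WebDriver, used only for illustration."""

    def __init__(self):
        self.closed = False
        self.scripts = []

    def execute_script(self, js):
        # Record the script instead of running it in a browser.
        self.scripts.append(js)

    def quit(self):
        self.closed = True
```

With Selenium installed, the same helper would presumably be used as `with managed_driver(webdriver.Firefox) as profile: ...`, so that the browser is closed however the block ends.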

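2. Because pages have to be turned, the JS code must be executed every time the links on a page have been crawled!

3. Because a "咨询" (consultation) question may not have been answered by anyone yet, the corresponding fields are filled with a space instead (looking up the missing answer raises an error, which is caught).

4. The first record of the first page is written to the file in "w+" mode; everything after that is written in append mode, "a+".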
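Points 3 and 4 can be sketched together as one small helper. This is a minimal sketch rather than the code used in this post: `write_record` and `PLACEHOLDER` are hypothetical names, and it uses the built-in `open` with plain "w"/"a" modes instead of `codecs.open` with "w+"/"a+", since the file is never read back here.

```python
PLACEHOLDER = " "  # assumption: a single space stands in for a missing field


def write_record(path, fields, first):
    """Write one tab-separated record to `path`.

    The file is truncated only when `first` is True; every later
    record is appended. Empty fields become PLACEHOLDER.
    """
    mode = "w" if first else "a"
    cleaned = [field if field else PLACEHOLDER for field in fields]
    with open(path, mode, encoding="utf-8") as f:
        f.write("\t".join(cleaned) + "\n")
```

Called with `first=True` for the very first record and `first=False` afterwards, this keeps a single output file per run and never leaves a column empty.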

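First, the modified Bean class. (New: I need to record whether each letter is a "投诉" (complaint), a "建议" (suggestion), or a "咨询" (consultation), so I added a method that takes this type string as a parameter and writes it at the start of each line in the file.)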

import codecs


# [ Format of the saved data ]
class Bean:

    # Constructor
    def __init__(self, asker, responser, askTime, responseTime, title, questionSupport, responseSupport, responseUnsupport, questionText, responseText):
        self.asker = asker
        self.responser = responser
        self.askTime = askTime
        self.responseTime = responseTime
        self.title = title
        self.questionSupport = questionSupport
        self.responseSupport = responseSupport
        self.responseUnsupport = responseUnsupport
        self.questionText = questionText
        self.responseText = responseText

    # Print the result to the console (for testing)
    def display(self):
        print("Asker: " + self.asker)
        print("Responder: " + self.responser)
        print("Ask time: " + self.askTime)
        print("Response time: " + self.responseTime)
        print("Title: " + self.title)
        print("Question support count: " + self.questionSupport)
        print("Answer upvotes: " + self.responseSupport)
        print("Answer downvotes: " + self.responseUnsupport)
        print("Question text: " + self.questionText)
        print("Answer text: " + self.responseText)

    # Join all fields into one tab-separated line
    def toString(self):
        return "\t".join([
            self.asker,
            self.responser,
            self.askTime,
            self.responseTime,
            self.title,
            self.questionSupport,
            self.responseSupport,
            self.responseUnsupport,
            self.questionText,
            self.responseText,
        ])

    # Write the record to the file
    def addToFile(self, fpath, model):
        with codecs.open(fpath, model, 'utf-8') as f:
            f.write(self.toString() + "\n")

    # Write the record to the file, prefixed with the letter type
    def addToFile_s(self, fpath, model, kind):
        with codecs.open(fpath, model, 'utf-8') as f:
            f.write(kind + "\t" + self.toString() + "\n")

    # --------------------[Basic data]
    # Asker
    asker = ""
    # Responder
    responser = ""
    # Ask time
    askTime = ""
    # Response time
    responseTime = ""
    # Question title
    title = ""
    # Question support count
    questionSupport = ""
    # Answer upvotes
    responseSupport = ""
    # Answer downvotes
    responseUnsupport = ""
    # Question text
    questionText = ""
    # Answer text
    responseText = ""

Next is the overall processing class:

import time

import parsel
from selenium import webdriver

# DetailConnector is defined in the complete listing further below.


# [ Object that crawls consecutive pages ]
class WebPerConnector:
    profile = ""

    isAccess = True

    # ---[Constructor]
    def __init__(self):
        self.profile = webdriver.Firefox()
        self.profile.get('http://www.beijing.gov.cn/hudong/hdjl/com.web.search.mailList.flow')

    # ---[Release method]
    def __close__(self):
        self.profile.quit()

    # Get the HTML source of the current page
    def getHTMLText(self):
        return self.profile.page_source

    # Get the basic links on the first page
    def getFirstChanel(self):
        index_html = self.getHTMLText()
        index_sel = parsel.Selector(index_html)
        links = index_sel.css('div #mailul').css("a[onclick]").extract()
        inNum = len(links)
        for seat in range(0, inNum):
            # Get the corresponding <a> tag
            pe = links[seat]
            # Position of the first '>' character
            seat_turol = str(pe).find('>')
            # Position of the first '"' character
            seat_stnvs = str(pe).find('"')
            # Remove spaces
            pe = str(pe)[seat_stnvs:seat_turol].replace(" ", "")
            # Extract the arguments of the onclick call
            pe = pe[14:len(pe) - 2]
            pe = pe.replace("'", "")
            # Split into the pieces needed to build the URL
            mor = pe.split(",")
            # ---[ Build the URL ]
            url_get_item = ""
            # Decide by the first value (the letter type)
            if mor[0] == "咨询":
                url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId="
            elif mor[0] == "建议":
                url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.suggest.suggesDetail.flow?originalId="
            elif mor[0] == "投诉":
                url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.complain.complainDetail.flow?originalId="
            url_get_item = url_get_item + mor[1]

            model = "a+"

            if seat == 0:
                model = "w+"

            dc = DetailConnector(url_get_item)
            dc.getBean().addToFile_s("../testFile/emails.txt", model, mor[0])
        self.getChannel()

    # Get the basic links on every page after the first
    def getNoFirstChannel(self):
        index_html = self.getHTMLText()
        index_sel = parsel.Selector(index_html)
        links = index_sel.css('div #mailul').css("a[onclick]").extract()
        inNum = len(links)
        for seat in range(0, inNum):
            # Get the corresponding <a> tag
            pe = links[seat]
            # Position of the first '>' character
            seat_turol = str(pe).find('>')
            # Position of the first '"' character
            seat_stnvs = str(pe).find('"')
            # Remove spaces
            pe = str(pe)[seat_stnvs:seat_turol].replace(" ", "")
            # Extract the arguments of the onclick call
            pe = pe[14:len(pe) - 2]
            pe = pe.replace("'", "")
            # Split into the pieces needed to build the URL
            mor = pe.split(",")
            # ---[ Build the URL ]
            url_get_item = ""
            # Decide by the first value (the letter type)
            if mor[0] == "咨询":
                url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId="
            elif mor[0] == "建议":
                url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.suggest.suggesDetail.flow?originalId="
            elif mor[0] == "投诉":
                url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.complain.complainDetail.flow?originalId="
            url_get_item = url_get_item + mor[1]

            dc = DetailConnector(url_get_item)
            dc.getBean().addToFile_s("../testFile/emails.txt", "a+", mor[0])
        self.getChannel()

    # Turn to the next page, then crawl it (recursive)
    def getChannel(self):
        try:
            self.profile.execute_script("pageTurning(3);")
            time.sleep(1)
        except Exception:
            print("-# END #-")
            # Must be set on self; a bare "isAccess = False" only creates a local variable
            self.isAccess = False

        if self.isAccess:
            self.getNoFirstChannel()
        else:
            self.__close__()
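The fixed offsets used when slicing the `<a>` tag (14 characters from the first quote, 2 from the end) suggest that the onclick attribute has the shape `letterdetail('咨询','<id>')`. That shape is an inference from the slicing, not something confirmed against the site. Under that assumption, the extraction and the nested type checks could be sketched with a regular expression and a lookup table; `ONCLICK_RE`, `DETAIL_PATHS` and `build_detail_url` are hypothetical names introduced here.

```python
import re

# Assumption: the onclick attribute looks like letterdetail('咨询','<id>').
ONCLICK_RE = re.compile(r"letterdetail\(\s*'([^']*)'\s*,\s*'([^']*)'\s*\)")

BASE = "http://www.beijing.gov.cn/hudong/hdjl/"

# Letter type -> detail page, copied from the URLs used in the class above.
DETAIL_PATHS = {
    "咨询": "com.web.consult.consultDetail.flow",
    "建议": "com.web.suggest.suggesDetail.flow",
    "投诉": "com.web.complain.complainDetail.flow",
}


def build_detail_url(a_tag_html):
    """Return (kind, url) for one <a onclick=...> tag, or None if unrecognised."""
    match = ONCLICK_RE.search(a_tag_html)
    if match is None:
        return None
    kind, original_id = match.groups()
    path = DETAIL_PATHS.get(kind)
    if path is None:
        # Unknown letter type: report it instead of building a broken URL.
        return None
    return kind, BASE + path + "?originalId=" + original_id
```

Returning `None` for an unknown type makes a case like the unexpected "投诉" letters on page 27 visible to the caller, which can then skip or log the entry.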

The code that runs it (this time it is a real run, not a test):

wpc = WebPerConnector()
wpc.getFirstChanel()

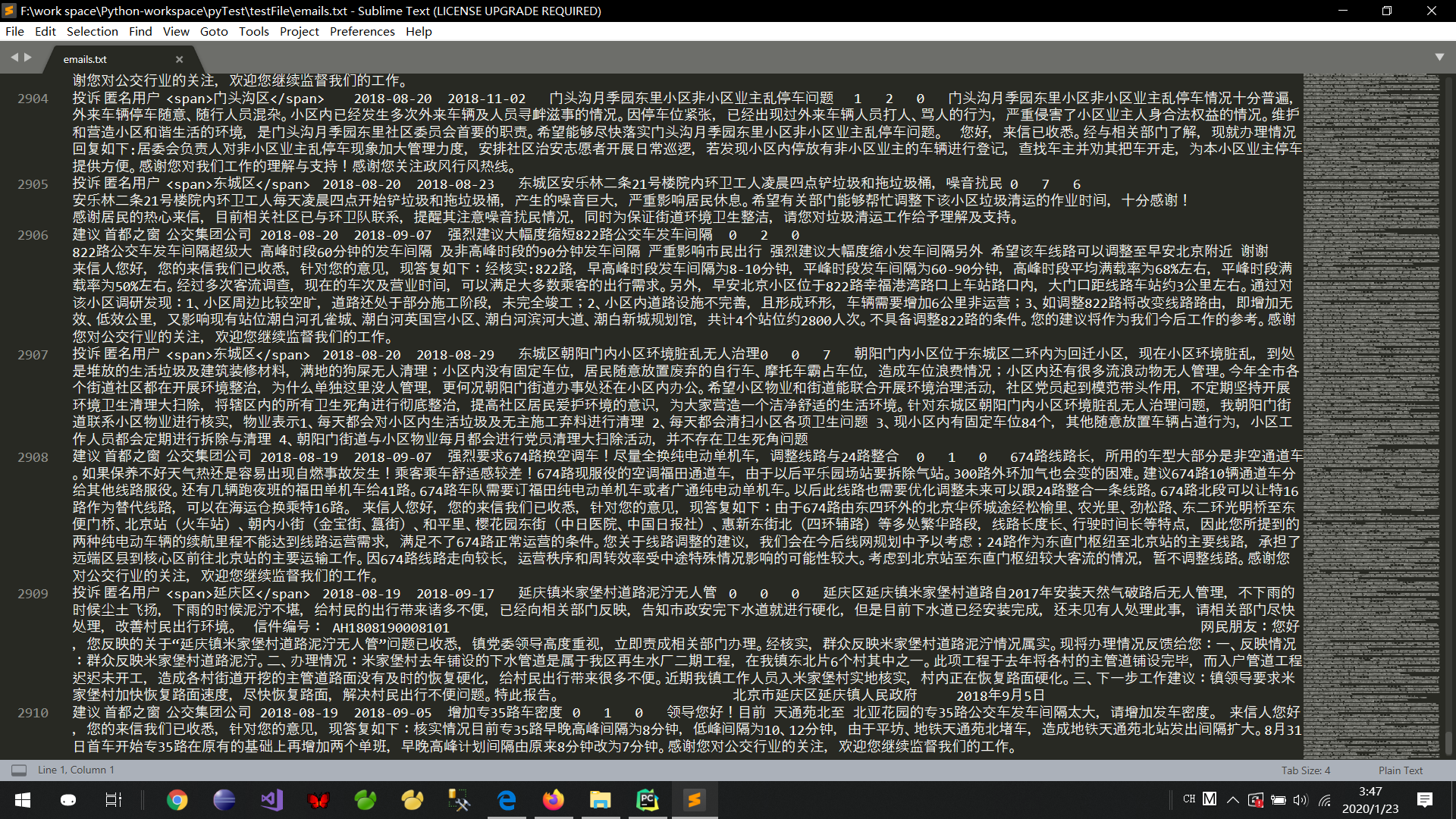

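In my own run it got as far as page 486 and then crashed; judging from the error message, it looks like an overflow... The data collected came to 2,910 records, with a file size of 3,553 KB.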
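One plausible reading of the overflow is Python's recursion limit. In the class above, getNoFirstChannel and getChannel call each other once per page, so the call stack grows by two frames per page; 486 pages is roughly 972 frames, close to CPython's default limit of 1000. The sketch below contrasts the two traversal styles on a stand-in `visit` callback. `crawl_recursive` and `crawl_iterative` are hypothetical names, and neither touches a browser.

```python
def crawl_recursive(page, last_page, visit):
    """Visit pages by recursion: one extra stack frame per page."""
    visit(page)
    if page < last_page:
        crawl_recursive(page + 1, last_page, visit)


def crawl_iterative(first_page, last_page, visit):
    """Visit the same pages with a loop: the stack depth stays constant."""
    for page in range(first_page, last_page + 1):
        visit(page)
```

With the default recursion limit, the recursive version raises RecursionError long before reaching page 5624, while the loop has no such ceiling.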

I will keep reworking the code...

Rework finished (the complete set of Python code that carries out the crawl):

import parsel
from urllib import request
import codecs
from selenium import webdriver
import time


# [ Special string-handling helpers ]
class StrSpecialDealer:
    @staticmethod
    def getReaction(stri):
        strs = str(stri).replace(" ", "")
        strs = strs[strs.find('>') + 1:strs.rfind('<')]
        strs = strs.replace("\t", "")
        strs = strs.replace("\r", "")
        strs = strs.replace("\n", "")
        return strs


# [ Format of the saved data ]
class Bean:

    # Constructor
    def __init__(self, asker, responser, askTime, responseTime, title, questionSupport, responseSupport, responseUnsupport, questionText, responseText):
        self.asker = asker
        self.responser = responser
        self.askTime = askTime
        self.responseTime = responseTime
        self.title = title
        self.questionSupport = questionSupport
        self.responseSupport = responseSupport
        self.responseUnsupport = responseUnsupport
        self.questionText = questionText
        self.responseText = responseText

    # Print the result to the console (for testing)
    def display(self):
        print("Asker: " + self.asker)
        print("Responder: " + self.responser)
        print("Ask time: " + self.askTime)
        print("Response time: " + self.responseTime)
        print("Title: " + self.title)
        print("Question support count: " + self.questionSupport)
        print("Answer upvotes: " + self.responseSupport)
        print("Answer downvotes: " + self.responseUnsupport)
        print("Question text: " + self.questionText)
        print("Answer text: " + self.responseText)

    # Join all fields into one tab-separated line
    def toString(self):
        return "\t".join([
            self.asker,
            self.responser,
            self.askTime,
            self.responseTime,
            self.title,
            self.questionSupport,
            self.responseSupport,
            self.responseUnsupport,
            self.questionText,
            self.responseText,
        ])

    # Write the record to the file
    def addToFile(self, fpath, model):
        with codecs.open(fpath, model, 'utf-8') as f:
            f.write(self.toString() + "\n")

    # Write the record to the file, prefixed with the letter type
    def addToFile_s(self, fpath, model, kind):
        with codecs.open(fpath, model, 'utf-8') as f:
            f.write(kind + "\t" + self.toString() + "\n")

    # --------------------[Basic data]
    # Asker
    asker = ""
    # Responder
    responser = ""
    # Ask time
    askTime = ""
    # Response time
    responseTime = ""
    # Question title
    title = ""
    # Question support count
    questionSupport = ""
    # Answer upvotes
    responseSupport = ""
    # Answer downvotes
    responseUnsupport = ""
    # Question text
    questionText = ""
    # Answer text
    responseText = ""


# [ Object that crawls consecutive pages ]
class WebPerConnector:
    profile = ""

    isAccess = True

    # ---[Constructor]
    def __init__(self):
        self.profile = webdriver.Firefox()
        self.profile.get('http://www.beijing.gov.cn/hudong/hdjl/com.web.search.mailList.flow')

    # ---[Release method]
    def __close__(self):
        self.profile.quit()

    # Get the HTML source of the current page
    def getHTMLText(self):
        return self.profile.page_source

    # Get the basic links on the first page
    def getFirstChanel(self):
        index_html = self.getHTMLText()
        index_sel = parsel.Selector(index_html)
        links = index_sel.css('div #mailul').css("a[onclick]").extract()
        inNum = len(links)
        for seat in range(0, inNum):
            # Get the corresponding <a> tag
            pe = links[seat]
            # Position of the first '>' character
            seat_turol = str(pe).find('>')
            # Position of the first '"' character
            seat_stnvs = str(pe).find('"')
            # Remove spaces
            pe = str(pe)[seat_stnvs:seat_turol].replace(" ", "")
            # Extract the arguments of the onclick call
            pe = pe[14:len(pe) - 2]
            pe = pe.replace("'", "")
            # Split into the pieces needed to build the URL
            mor = pe.split(",")
            # ---[ Build the URL ]
            url_get_item = ""
            # Decide by the first value (the letter type)
            if mor[0] == "咨询":
                url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId="
            elif mor[0] == "建议":
                url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.suggest.suggesDetail.flow?originalId="
            elif mor[0] == "投诉":
                url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.complain.complainDetail.flow?originalId="
            url_get_item = url_get_item + mor[1]

            model = "a+"

            if seat == 0:
                model = "w+"

            dc = DetailConnector(url_get_item)
            dc.getBean().addToFile_s("../testFile/emails.txt", model, mor[0])

    # Get the basic links on every page after the first
    def getNoFirstChannel(self):
        index_html = self.getHTMLText()
        index_sel = parsel.Selector(index_html)
        links = index_sel.css('div #mailul').css("a[onclick]").extract()
        inNum = len(links)
        for seat in range(0, inNum):
            # Get the corresponding <a> tag
            pe = links[seat]
            # Position of the first '>' character
            seat_turol = str(pe).find('>')
            # Position of the first '"' character
            seat_stnvs = str(pe).find('"')
            # Remove spaces
            pe = str(pe)[seat_stnvs:seat_turol].replace(" ", "")
            # Extract the arguments of the onclick call
            pe = pe[14:len(pe) - 2]
            pe = pe.replace("'", "")
            # Split into the pieces needed to build the URL
            mor = pe.split(",")
            # ---[ Build the URL ]
            url_get_item = ""
            # Decide by the first value (the letter type)
            if mor[0] == "咨询":
                url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId="
            elif mor[0] == "建议":
                url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.suggest.suggesDetail.flow?originalId="
            elif mor[0] == "投诉":
                url_get_item = "http://www.beijing.gov.cn/hudong/hdjl/com.web.complain.complainDetail.flow?originalId="
            url_get_item = url_get_item + mor[1]

            dc = DetailConnector(url_get_item)
            # Skip any letter whose detail page cannot be fetched or parsed
            try:
                bea = dc.getBean()
                bea.addToFile_s("../testFile/emails.txt", "a+", mor[0])
            except Exception:
                pass

    # Jump to a page (2-5624)
    def turn(self, seat):
        seat = seat - 1
        self.profile.execute_script("beforeTurning(" + str(seat) + ");")
        time.sleep(1)


# [ Node that crawls one detail page ]
class DetailConnector:
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.75 Safari/537.36'}
    basicURL = ""

    # ---[Constructor]
    def __init__(self, url):
        self.basicURL = url

    # Get the HTML source of the url
    def getHTMLText(self):
        req = request.Request(url=self.basicURL, headers=self.headers)
        r = request.urlopen(req).read().decode()
        return r

    # Get the basic data
    def getBean(self):
        index_html = self.getHTMLText()
        index_sel = parsel.Selector(index_html)
        container_div = index_sel.css('div .container')[0]
        container_strong = index_sel.css('div strong')[0]
        container_retire = index_sel.css('div div div div')[5]

        # Default values (a single space for anything that is missing)
        title = " "
        question_toBuilder = " "
        question_time = " "
        support_quert = " "
        quText = " "
        answer_name = " "
        answer_time = " "
        answer_text = " "
        num_supp = " "
        num_unsupp = " "

        # ------------------------------------------------------------ Question
        # Question title
        title = str(container_strong.extract())
        title = title.replace("<strong>", "")
        title = title.replace("</strong>", "")

        # Letter writer
        container_builder = container_retire.css("div div")
        question_toBuilder = str(container_builder.extract()[0])
        question_toBuilder = StrSpecialDealer.getReaction(question_toBuilder)
        question_toBuilder = question_toBuilder.replace("来信人:", "")

        # Ask time
        question_time = str(container_builder.extract()[1])
        question_time = StrSpecialDealer.getReaction(question_time)
        question_time = question_time.replace("时间:", "")

        # Support count from other users
        support_quert = str(container_builder.extract()[2])
        support_quert = support_quert[support_quert.find('>') + 1:support_quert.rfind('<')]
        support_quert = StrSpecialDealer.getReaction(support_quert)

        # Question text
        quText = str(index_sel.css('div div div div').extract()[9])
        if "input" in quText:
            quText = str(index_sel.css('div div div div').extract()[10])
        quText = quText.replace("<p>", "")
        quText = quText.replace("</p>", "")
        quText = StrSpecialDealer.getReaction(quText)

        # ------------------------------------------------------------ Answer
        # An unanswered letter has none of these elements; the defaults are kept
        try:
            # Answer upvotes
            num_supp = str(index_sel.css('div a span').extract()[0])
            num_supp = StrSpecialDealer.getReaction(num_supp)
            # Answer downvotes
            num_unsupp = str(index_sel.css('div a span').extract()[1])
            num_unsupp = StrSpecialDealer.getReaction(num_unsupp)
            # Responder
            answer_name = str(container_div.css("div div div div div div div").extract()[1])
            answer_name = answer_name.replace("<strong>", "")
            answer_name = answer_name.replace("</strong>", "")
            answer_name = answer_name.replace("</div>", "")
            answer_name = answer_name.replace(" ", "")
            answer_name = answer_name.replace("\t", "")
            answer_name = answer_name.replace("\r", "")
            answer_name = answer_name.replace("\n", "")
            answer_name = answer_name[answer_name.find('>') + 1:len(answer_name)]
            # --------------------- Remove these lines to keep the markers
            answer_name = answer_name.replace("[官方回答]:", "")
            answer_name = answer_name.replace("<span>", "")
            answer_name = answer_name.replace("</span>", "")

            # Response time
            answer_time = str(index_sel.css('div div div div div div div div')[2].extract())
            answer_time = StrSpecialDealer.getReaction(answer_time)
            answer_time = answer_time.replace("答复时间:", "")
            # Response text
            answer_text = str(index_sel.css('div div div div div div div')[4].extract())
            answer_text = StrSpecialDealer.getReaction(answer_text)
            answer_text = answer_text.replace("<p>", "")
            answer_text = answer_text.replace("</p>", "")
        except Exception:
            pass

        bean = Bean(question_toBuilder, answer_name, question_time, answer_time, title, support_quert, num_supp,
                    num_unsupp, quText, answer_text)

        return bean


wpc = WebPerConnector()
wpc.getFirstChanel()
for i in range(2, 5625):
    wpc.turn(i)
    wpc.getNoFirstChannel()
wpc.__close__()

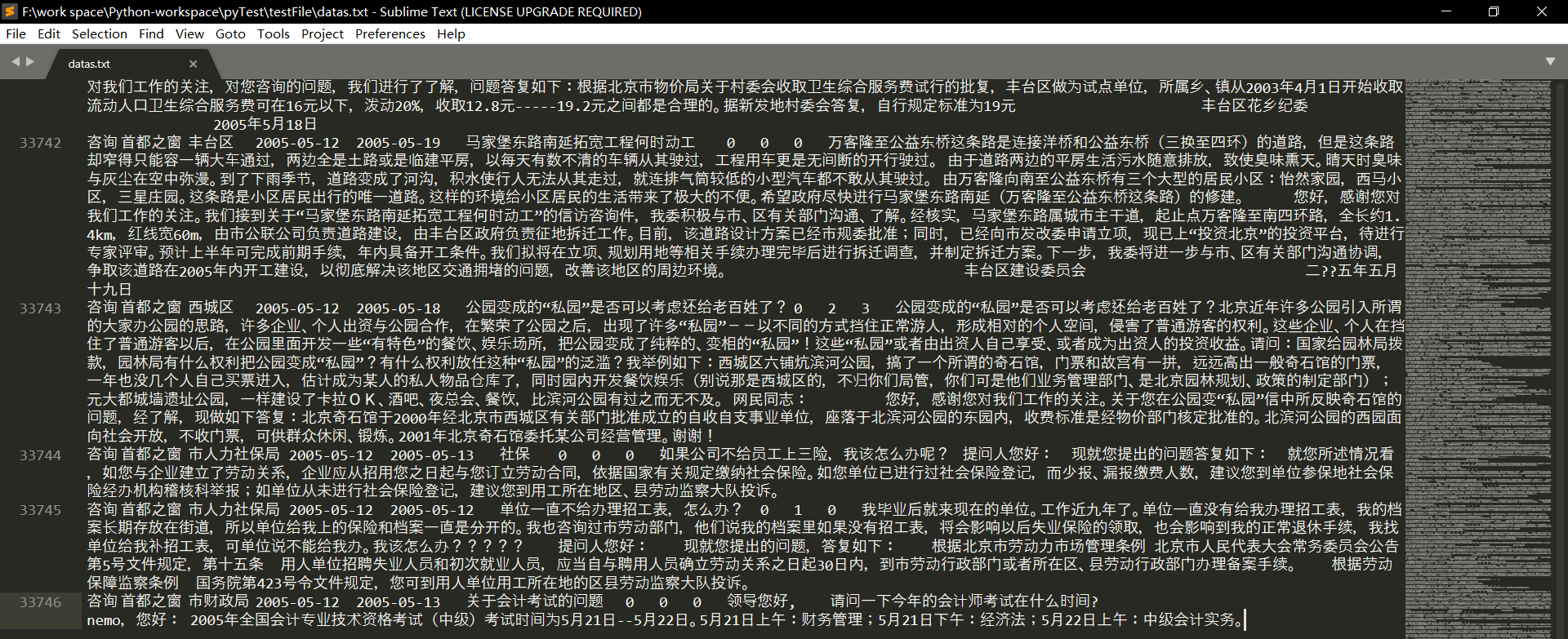

Result file

Size: 40,673 KB

Number of records: 33,746

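The crawl took quite a while! It ran from 2:30 a.m. until a little past 7:30, which is five hours!

The crawl stopped four times along the way:

1. Page 2565: when the process got here the browser froze and stopped responding. I don't know why; I adjusted the code a little and resumed crawling.

2. Page 3657: same cause as above.

3. Page 3761: the system reported a file read/write problem, saying that appended writes to the file had reached a limit, so I moved the data file out and created a new empty file to hold the data from page 3761 onward.

4. Page 4449: this page has an entry titled "关于亦庄轻轨的小红门站和旧宫东站" ("About Xiaohongmen Station and Jiugong East Station on the Yizhuang light rail", consultation type). If you open that link you get an HTTP 500 response; when the crawler reached it, the error made the program exit, as shown in the screenshot below: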