Getting Basic CUDA Device Information

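Before digging into how to write device code, we need a way to find out which devices are present in the machine and what each of them supports. Fortunately, a very simple interface provides this information. First, we want to know how many devices in the system support the CUDA architecture and can run kernels written in CUDA C. To get the number of CUDA devices, call cudaGetDeviceCount(); the name says exactly what it does.

    int count;
    HANDLE_ERROR(cudaGetDeviceCount(&count));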

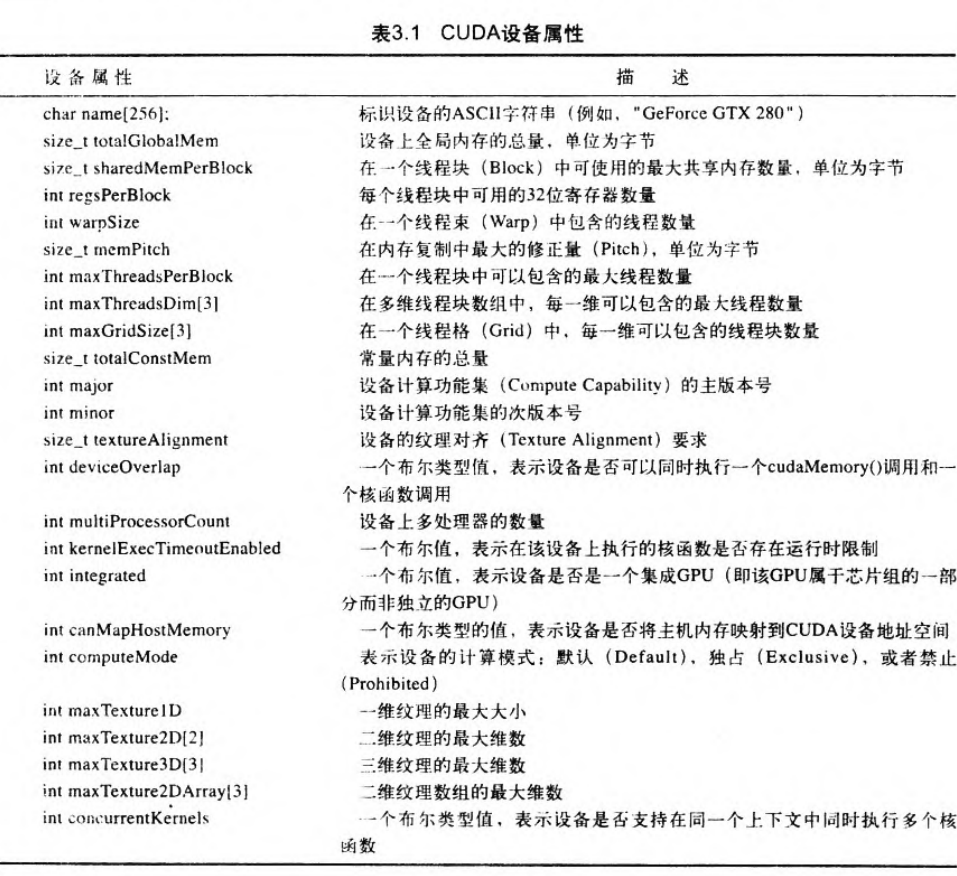
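HANDLE_ERROR comes from the book's helper header, so the snippet above does not compile on its own. The following is a minimal sketch of the same call without that header. The CHECK_CUDA macro is a hypothetical stand-in written for this example, not part of the CUDA toolkit.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical stand-in for the book's HANDLE_ERROR macro: print the CUDA
// error string together with the source location, then exit.
#define CHECK_CUDA(call)                                                 \
    do {                                                                 \
        cudaError_t err_ = (call);                                       \
        if (err_ != cudaSuccess) {                                       \
            std::fprintf(stderr, "%s at %s:%d\n",                        \
                         cudaGetErrorString(err_), __FILE__, __LINE__);  \
            std::exit(EXIT_FAILURE);                                     \
        }                                                                \
    } while (0)

int main() {
    int count = 0;
    // Ask the runtime how many CUDA-capable devices it can see.
    CHECK_CUDA(cudaGetDeviceCount(&count));
    std::printf("CUDA-capable devices: %d\n", count);
    return 0;
}
```

Built with nvcc, this prints the device count. On a machine without a usable device or driver, cudaGetDeviceCount() itself returns an error code, so the macro reports it and exits instead of printing 0.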

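After calling cudaGetDeviceCount(), we can iterate over the devices and query the properties of each one. The CUDA runtime returns them in a structure of type cudaDeviceProp. Which properties can we retrieve? As of CUDA 3.0, the cudaDeviceProp structure contains the following information: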

The structure shown below is taken from a newer version of CUDA, so it carries more information than the CUDA 3.0 one.

    /**
     * CUDA device properties
     */
    struct __device_builtin__ cudaDeviceProp
    {
        char         name[256];                  /**< ASCII string identifying device */
        cudaUUID_t   uuid;                       /**< 16-byte unique identifier */
        char         luid[8];                    /**< 8-byte locally unique identifier. Value is undefined on TCC and non-Windows platforms */
        unsigned int luidDeviceNodeMask;         /**< LUID device node mask. Value is undefined on TCC and non-Windows platforms */
        size_t       totalGlobalMem;             /**< Global memory available on device in bytes */
        size_t       sharedMemPerBlock;          /**< Shared memory available per block in bytes */
        int          regsPerBlock;               /**< 32-bit registers available per block */
        int          warpSize;                   /**< Warp size in threads */
        size_t       memPitch;                   /**< Maximum pitch in bytes allowed by memory copies */
        int          maxThreadsPerBlock;         /**< Maximum number of threads per block */
        int          maxThreadsDim[3];           /**< Maximum size of each dimension of a block */
        int          maxGridSize[3];             /**< Maximum size of each dimension of a grid */
        int          clockRate;                  /**< Clock frequency in kilohertz */
        size_t       totalConstMem;              /**< Constant memory available on device in bytes */
        int          major;                      /**< Major compute capability */
        int          minor;                      /**< Minor compute capability */
        size_t       textureAlignment;           /**< Alignment requirement for textures */
        size_t       texturePitchAlignment;      /**< Pitch alignment requirement for texture references bound to pitched memory */
        int          deviceOverlap;              /**< Device can concurrently copy memory and execute a kernel. Deprecated. Use instead asyncEngineCount. */
        int          multiProcessorCount;        /**< Number of multiprocessors on device */
        int          kernelExecTimeoutEnabled;   /**< Specified whether there is a run time limit on kernels */
        int          integrated;                 /**< Device is integrated as opposed to discrete */
        int          canMapHostMemory;           /**< Device can map host memory with cudaHostAlloc/cudaHostGetDevicePointer */
        int          computeMode;                /**< Compute mode (See ::cudaComputeMode) */
        int          maxTexture1D;               /**< Maximum 1D texture size */
        int          maxTexture1DMipmap;         /**< Maximum 1D mipmapped texture size */
        int          maxTexture1DLinear;         /**< Deprecated, do not use. Use cudaDeviceGetTexture1DLinearMaxWidth() or cuDeviceGetTexture1DLinearMaxWidth() instead. */
        int          maxTexture2D[2];            /**< Maximum 2D texture dimensions */
        int          maxTexture2DMipmap[2];      /**< Maximum 2D mipmapped texture dimensions */
        int          maxTexture2DLinear[3];      /**< Maximum dimensions (width, height, pitch) for 2D textures bound to pitched memory */
        int          maxTexture2DGather[2];      /**< Maximum 2D texture dimensions if texture gather operations have to be performed */
        int          maxTexture3D[3];            /**< Maximum 3D texture dimensions */
        int          maxTexture3DAlt[3];         /**< Maximum alternate 3D texture dimensions */
        int          maxTextureCubemap;          /**< Maximum Cubemap texture dimensions */
        int          maxTexture1DLayered[2];     /**< Maximum 1D layered texture dimensions */
        int          maxTexture2DLayered[3];     /**< Maximum 2D layered texture dimensions */
        int          maxTextureCubemapLayered[2];/**< Maximum Cubemap layered texture dimensions */
        int          maxSurface1D;               /**< Maximum 1D surface size */
        int          maxSurface2D[2];            /**< Maximum 2D surface dimensions */
        int          maxSurface3D[3];            /**< Maximum 3D surface dimensions */
        int          maxSurface1DLayered[2];     /**< Maximum 1D layered surface dimensions */
        int          maxSurface2DLayered[3];     /**< Maximum 2D layered surface dimensions */
        int          maxSurfaceCubemap;          /**< Maximum Cubemap surface dimensions */
        int          maxSurfaceCubemapLayered[2];/**< Maximum Cubemap layered surface dimensions */
        size_t       surfaceAlignment;           /**< Alignment requirements for surfaces */
        int          concurrentKernels;          /**< Device can possibly execute multiple kernels concurrently */
        int          ECCEnabled;                 /**< Device has ECC support enabled */
        int          pciBusID;                   /**< PCI bus ID of the device */
        int          pciDeviceID;                /**< PCI device ID of the device */
        int          pciDomainID;                /**< PCI domain ID of the device */
        int          tccDriver;                  /**< 1 if device is a Tesla device using TCC driver, 0 otherwise */
        int          asyncEngineCount;           /**< Number of asynchronous engines */
        int          unifiedAddressing;          /**< Device shares a unified address space with the host */
        int          memoryClockRate;            /**< Peak memory clock frequency in kilohertz */
        int          memoryBusWidth;             /**< Global memory bus width in bits */
        int          l2CacheSize;                /**< Size of L2 cache in bytes */
        int          persistingL2CacheMaxSize;   /**< Device's maximum l2 persisting lines capacity setting in bytes */
        int          maxThreadsPerMultiProcessor;/**< Maximum resident threads per multiprocessor */
        int          streamPrioritiesSupported;  /**< Device supports stream priorities */
        int          globalL1CacheSupported;     /**< Device supports caching globals in L1 */
        int          localL1CacheSupported;      /**< Device supports caching locals in L1 */
        size_t       sharedMemPerMultiprocessor; /**< Shared memory available per multiprocessor in bytes */
        int          regsPerMultiprocessor;      /**< 32-bit registers available per multiprocessor */
        int          managedMemory;              /**< Device supports allocating managed memory on this system */
        int          isMultiGpuBoard;            /**< Device is on a multi-GPU board */
        int          multiGpuBoardGroupID;       /**< Unique identifier for a group of devices on the same multi-GPU board */
        int          hostNativeAtomicSupported;  /**< Link between the device and the host supports native atomic operations */
        int          singleToDoublePrecisionPerfRatio; /**< Ratio of single precision performance (in floating-point operations per second) to double precision performance */
        int          pageableMemoryAccess;       /**< Device supports coherently accessing pageable memory without calling cudaHostRegister on it */
        int          concurrentManagedAccess;    /**< Device can coherently access managed memory concurrently with the CPU */
        int          computePreemptionSupported; /**< Device supports Compute Preemption */
        int          canUseHostPointerForRegisteredMem; /**< Device can access host registered memory at the same virtual address as the CPU */
        int          cooperativeLaunch;          /**< Device supports launching cooperative kernels via ::cudaLaunchCooperativeKernel */
        int          cooperativeMultiDeviceLaunch; /**< Deprecated, cudaLaunchCooperativeKernelMultiDevice is deprecated. */
        size_t       sharedMemPerBlockOptin;     /**< Per device maximum shared memory per block usable by special opt in */
        int          pageableMemoryAccessUsesHostPageTables; /**< Device accesses pageable memory via the host's page tables */
        int          directManagedMemAccessFromHost; /**< Host can directly access managed memory on the device without migration. */
        int          maxBlocksPerMultiProcessor; /**< Maximum number of resident blocks per multiprocessor */
        int          accessPolicyMaxWindowSize;  /**< The maximum value of ::cudaAccessPolicyWindow::num_bytes. */
        size_t       reservedSharedMemPerBlock;  /**< Shared memory reserved by CUDA driver per block in bytes */
    };
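Several fields in the structure above are marked deprecated in their own comments, and filling the whole structure is more than is needed when only one or two values matter. As a hedged alternative, the runtime also offers cudaDeviceGetAttribute(), which reads a single integer attribute. The sketch below assumes device 0 exists and queries three attributes that correspond to multiProcessorCount, warpSize and maxThreadsPerBlock.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

int main() {
    const int device = 0;  // assumption: the first device is the one of interest
    int smCount = 0;
    int warpSize = 0;
    int maxThreadsPerBlock = 0;

    // Each call fetches exactly one integer attribute of the given device.
    cudaError_t err = cudaDeviceGetAttribute(
        &smCount, cudaDevAttrMultiProcessorCount, device);
    if (err == cudaSuccess)
        err = cudaDeviceGetAttribute(&warpSize, cudaDevAttrWarpSize, device);
    if (err == cudaSuccess)
        err = cudaDeviceGetAttribute(
            &maxThreadsPerBlock, cudaDevAttrMaxThreadsPerBlock, device);

    if (err != cudaSuccess) {
        std::fprintf(stderr, "attribute query failed: %s\n",
                     cudaGetErrorString(err));
        return 1;
    }

    std::printf("Multiprocessor count  : %d\n", smCount);
    std::printf("Threads in warp       : %d\n", warpSize);
    std::printf("Max threads per block : %d\n", maxThreadsPerBlock);
    return 0;
}
```

This only covers attributes that are plain integers. Values such as the device name or the size_t memory totals still come from cudaGetDeviceProperties().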

The following program prints the properties of my P2200 graphics card.

    #include "cuda_runtime.h"
    #include "device_launch_parameters.h"
    #include <stdio.h>
    #include "book.h"   // supplies the HANDLE_ERROR macro

    void getDeviceProp()
    {
        cudaDeviceProp prop;
        int count;
        HANDLE_ERROR(cudaGetDeviceCount(&count));

        for (int i = 0; i < count; i++) {
            HANDLE_ERROR(cudaGetDeviceProperties(&prop, i));

            // General information
            printf("--- General Information for device %d ---\n", i);
            printf("Name:%s\n", prop.name);
            printf("Compute capability:%d.%d\n", prop.major, prop.minor);
            printf("clock rate:%d\n", prop.clockRate);   // in kilohertz
            printf("Device copy overlap:");
            if (prop.deviceOverlap)                      // deprecated field, see asyncEngineCount
                printf("Enabled\n");
            else
                printf("Disabled\n");
            printf("Kernel execution timeout ");
            if (prop.kernelExecTimeoutEnabled)
                printf("Enabled\n");
            else
                printf("Disabled\n\n");

            // Memory information. These fields are size_t, so they need %zu;
            // %d is the wrong format and can truncate values on 64-bit platforms.
            printf("--- Memory Information for device %d--- \n", i);
            printf("Total global mem : %zu\n", prop.totalGlobalMem);
            printf("Total constant Mem : %zu\n", prop.totalConstMem);
            printf("Max mem pitch : %zu\n", prop.memPitch);
            printf("Texture Alignment : %zu\n", prop.textureAlignment);

            // Multiprocessor information. The labels say "per mp", but
            // sharedMemPerBlock and regsPerBlock are per-block limits.
            printf("-- - MP Information for device %d-- - \n", i);
            printf("Multiprocessor count : %d\n", prop.multiProcessorCount);
            printf("Shared mem per mp : %zu\n", prop.sharedMemPerBlock);
            printf("Registers per mp : %d\n", prop.regsPerBlock);
            printf("Threads in warp : %d\n", prop.warpSize);
            printf("Max threads per block : %d\n", prop.maxThreadsPerBlock);
            printf("Max thread dimensions : (%d, %d, %d)\n",
                   prop.maxThreadsDim[0], prop.maxThreadsDim[1], prop.maxThreadsDim[2]);
            printf("Max grid dimensions : (%d, %d, %d)\n",
                   prop.maxGridSize[0], prop.maxGridSize[1], prop.maxGridSize[2]);
            printf("\n");
        }
    }

    int main()
    {
        getDeviceProp();
        return 0;
    }

The output is shown below. It was captured with the size_t fields printed through %d, so any value that does not fit in 32 bits (the total global memory of a card with more than 4 GB, for example) is likely shown truncated.

    Device copy overlap:Enabled
    Kernel execution timeout Enabled
    -- - Memory Information for device 0-- -
    Total global mem : 1073479680
    Total constant Mem : 65536
    Max mem pitch : 2147483647
    Texture Alignment : 512
    -- - MP Information for device 0-- -
    Multiprocessor count : 10
    Shared mem per mp : 49152
    Registers per mp : 65536
    Threads in warp : 32
    Max threads per block : 1024
    Max thread dimensions : (1024, 1024, 64)
    Max grid dimensions : (2147483647, 65535, 65535)
