Stage Assignment 1: Complete Word-Frequency Counting for English and Chinese Text

1. English novel: word-frequency count

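```python
# Read the whole file as one lowercase string
with open('nihao.txt', 'r', encoding='utf-8') as fo:
    text = fo.read().lower()

# Preprocessing: strip punctuation
sep = """,./?!";'"""
for ch in sep:
    text = text.replace(ch, "")
print(text)

# Split into words and drop meaningless (stop) words
words = text.split()
exclude = {'a', 'the', 'and', 'i', 'you', 'in'}
word_set = set(words) - exclude

# Count each distinct word (renamed from `dict` so the built-in is not shadowed)
counts = {}
for word in word_set:
    counts[word] = words.count(word)
print(len(counts), counts)

# Sort by frequency, highest first
wclist = list(counts.items())
wclist.sort(key=lambda x: x[1], reverse=True)

# Print the top 20; slicing avoids an IndexError when there are fewer than 20 words
for word, count in wclist[:20]:
    print(word, count)
```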

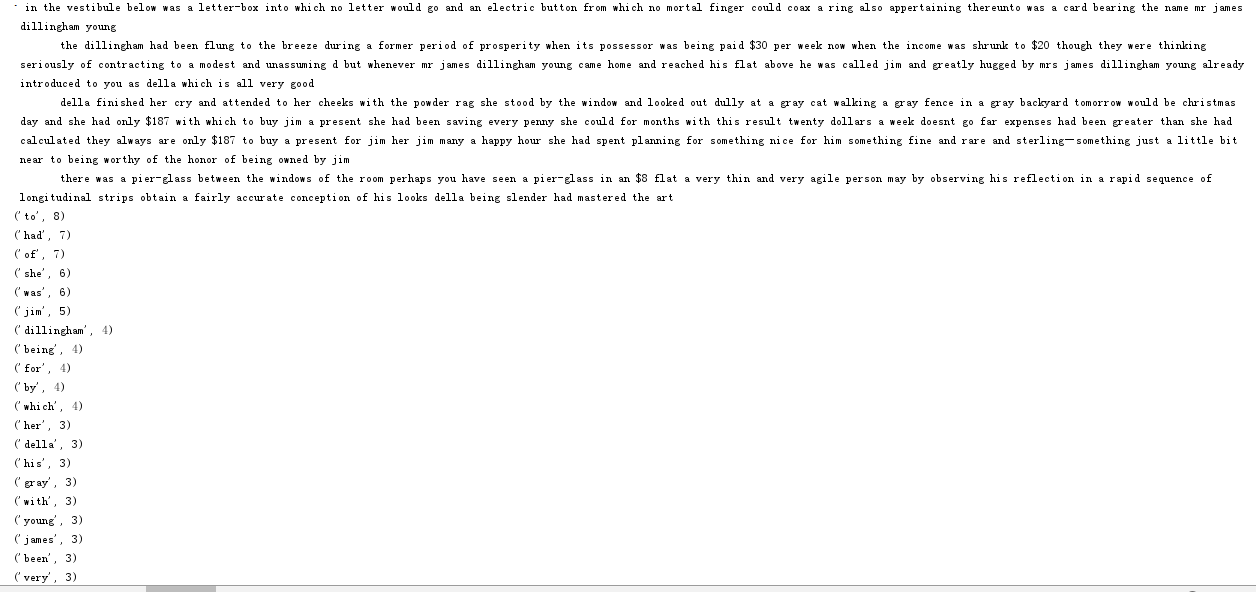

Screenshot of the run
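The counting loop above calls `words.count(word)` once per distinct word. Each call rescans the whole list, so the work grows roughly with (distinct words × total words). Below is a minimal sketch of the same idea using `collections.Counter`, which counts everything in a single pass. It also swaps the punctuation-stripping loop for a regular expression. Both of those choices are assumptions and not part of the assignment. The function name `top_words` is hypothetical. The pattern only recognises ASCII letters, with internal apostrophes allowed, so words with accented letters would need a wider pattern.

```python
import re
from collections import Counter

# Stop words copied from the assignment's `exclude` set
STOP_WORDS = {"a", "the", "and", "i", "you", "in"}


def top_words(text, n=20, stop_words=STOP_WORDS):
    """Return the n most frequent words in `text` as (word, count) pairs."""
    # Assumed word definition: runs of ASCII letters, optionally joined by
    # internal apostrophes (so "don't" stays one word); case-insensitive
    words = re.findall(r"[a-z]+(?:'[a-z]+)*", text.lower())
    # Counter tallies all words in one pass instead of one list.count() per word
    counts = Counter(w for w in words if w not in stop_words)
    # most_common() sorts by count, highest first, and keeps the first n
    return counts.most_common(n)


if __name__ == "__main__":
    sample = "The cat sat. The cat ran! A cat and a dog sat."
    print(top_words(sample, n=2))  # [('cat', 3), ('sat', 2)]
```

To apply it to the novel, pass the file contents instead of `sample`, for example `top_words(open('nihao.txt', encoding='utf-8').read())`.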

2. Chinese novel: word-frequency count

```python
import jieba
import jieba.posseg as psg

# Read the text file (an explicit encoding avoids platform-dependent defaults;
# the `with` block closes the file, so no fo.close() is needed)
with open("nihao.txt", 'r', encoding='utf-8') as fo:
    text = fo.read()

# Preprocessing: keep only letters. str.isalpha() is True for Chinese characters,
# so this removes punctuation, digits and whitespace
text = "".join(ch for ch in text if ch.isalpha())

# Segment with part-of-speech tags and keep only nouns (flags starting with 'n')
strList = [x.word for x in psg.cut(text) if x.flag.startswith('n')]

# Count each distinct noun and store the result in a dict
mySet = set(strList)
wordCount = {word: strList.count(word) for word in mySet}

# Sort the dict by count and keep the top 20
def sortDict(myDict):
    tempList = list(myDict.items())
    tempList.sort(key=lambda x: x[1], reverse=True)
    return dict(tempList[:20])  # the original slice [0:21] kept 21 entries

wordCount = sortDict(wordCount)

# Output
print("Word".center(13), "Count")
for word in wordCount.keys():
    print(word.center(13), wordCount[word])
```

Screenshot of the run:
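As in the English version, the counting half of the Chinese script does not actually depend on jieba. The following is a minimal sketch that assumes the segmenter's output has already been turned into `(word, flag)` tuples. `keep_letters` mirrors the `isalpha()` preprocessing. `top_nouns` replaces the set/`list.count` loop and `sortDict` with `collections.Counter.most_common`. Both function names are hypothetical. The sample pairs are hand-written, and their tags are illustrative rather than real jieba output.

```python
from collections import Counter


def keep_letters(text):
    """Drop every character that is not a letter.

    str.isalpha() is True for Chinese characters as well as Latin letters,
    so this removes punctuation, digits and whitespace but keeps the text.
    """
    return "".join(ch for ch in text if ch.isalpha())


def top_nouns(pairs, n=20):
    """Return the n most frequent nouns from (word, flag) pairs.

    With jieba the pairs could be built as
        [(p.word, p.flag) for p in jieba.posseg.cut(text)]
    here they are passed in directly so the logic runs without jieba.
    """
    # Same filter as the assignment: keep tags beginning with "n" (n, nr, ns, ...)
    nouns = [word for word, flag in pairs if flag.startswith("n")]
    return Counter(nouns).most_common(n)


if __name__ == "__main__":
    print(keep_letters("我爱北京，天安门！123"))  # 我爱北京天安门
    # Hand-written pairs standing in for jieba output (hypothetical tags)
    pairs = [("小明", "nr"), ("喜欢", "v"), ("北京", "ns"), ("小明", "nr"),
             ("天安门", "ns"), ("北京", "ns"), ("北京", "ns")]
    for word, count in top_nouns(pairs, n=2):
        print(word.center(13), count)
```

With the counting logic isolated this way, it can be checked on a handful of tuples before running it on the whole novel.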