Installing Oracle RAC 11.2.0.4 on CentOS 7.6

1. Environment

OS: CentOS 7.6

Software: Oracle 11.2.0.4

database: p13390677_112040_Linux-x86-64_1of7.zip p13390677_112040_Linux-x86-64_2of7.zip

Grid Infrastructure (clusterware): p13390677_112040_Linux-x86-64_3of7.zip

Shared disks: iSCSI has already been configured by the systems team.

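2. Preparation

2.1 IP address plan

Each node needs two network interfaces. Starting with 11.2, at least four kinds of IP address are required. The plan is: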

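rac01: 134.80.101.2 (public IP)

rac01-vip: 134.80.101.4 (virtual IP)

rac01-pip: 192.100.100.2 (private IP)

rac02: 134.80.101.3 (public IP)

rac02-vip: 134.80.101.5 (virtual IP)

rac02-pip: 192.100.100.3 (private IP)

scan-cluster: 134.80.101.6 (SCAN IP, the cluster entry point)

Note: the two public IPs, the two virtual IPs, and the SCAN IP must all be in the same subnet!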
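One way to check this rule before the addresses are configured is to compare the network part of each address. The sketch below is a hypothetical helper, not an Oracle tool. It assumes a /24 (255.255.255.0) netmask, because the source does not state the prefix length.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o=($1)
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# same_subnet PREFIX IP...  (hypothetical helper)
# Prints "yes" if every IP has the same network part as the first one; otherwise prints "no: <ip>".
# The prefix length (e.g. 24) is an assumption that you must supply.
same_subnet() {
  local prefix=$1; shift
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local net ip
  net=$(( $(ip_to_int "$1") & mask ))
  for ip in "$@"; do
    if (( ($(ip_to_int "$ip") & mask) != net )); then
      echo "no: $ip"
      return 1
    fi
  done
  echo "yes"
}
```

For example, run `same_subnet 24 134.80.101.2 134.80.101.3 134.80.101.4 134.80.101.5 134.80.101.6` with the planned public, virtual, and SCAN addresses. The private addresses (192.100.100.x) are on a separate network and are left out.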

In an Oracle RAC environment, each node has several IP addresses: a public IP, a private IP, and a virtual IP.

Private IP

The private IP address is used only for internal cluster processing (Cache Fusion). Examples are heartbeat detection and data synchronization between the servers.

Virtual IP (VIP)

Database applications use the virtual IP for failover. When a cluster node fails, applications (including database clients) are switched through the VIP to a surviving node. The VIP is also used for load balancing.

Public IP

The public IP is the node's normal (real) IP address. DBAs and system administrators typically use it to manage storage, the system, and the database.

SCAN IP (listener IP)

Oracle 11g R2 adds a special network requirement for Oracle RAC: a listener IP address, the SCAN IP. From 11g R2 onward, a RAC network therefore needs at least four kinds of IP address: the three described above plus the SCAN IP. Before 11g R2, a client of a RAC database had to list the connection information for every node in its tnsnames.ora to get RAC features such as load balancing and failover. Whenever nodes were added to or removed from the cluster, the tnsnames.ora on every client machine had to be updated promptly to avoid problems.

Oracle 11g R2 simplifies this by introducing SCAN (Single Client Access Name). SCAN adds a virtual service layer between the database and its clients, made up of the SCAN IP and the SCAN listener. Clients only need a TNS entry for the SCAN IP, and they reach the clustered database through the SCAN listener. As a result, adding or removing cluster nodes has no effect on clients.

2.2 Edit /etc/hosts

On both rac01 and rac02, edit /etc/hosts (vim /etc/hosts) as follows:

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

#rac01

134.80.101.2 rac01

134.80.101.4 rac01-vip

192.100.100.2 rac01-pip

#rac02

134.80.101.3 rac02

134.80.101.5 rac02-vip

192.100.100.3 rac02-pip

#scan cluster

134.80.101.6 scan-cluster
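A mistyped name or octet in /etc/hosts usually surfaces much later, as a SCAN or VIP error during installation. It is worth checking the file on each node first. The function below is a hypothetical helper that reports any required name with no entry in a hosts-format file. After that, `getent hosts NAME` shows which address a name actually resolves to.

```shell
#!/usr/bin/env bash
# missing_hosts FILE NAME...  (hypothetical helper)
# Prints each NAME that does not appear as a host name in FILE (hosts(5) format).
missing_hosts() {
  local file=$1; shift
  local name
  for name in "$@"; do
    # Ignore comment lines; a name may appear in any field after the IP address.
    if ! awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                           END { exit !found }' "$file"; then
      echo "$name"
    fi
  done
}
```

Usage: `missing_hosts /etc/hosts rac01 rac01-vip rac01-pip rac02 rac02-vip rac02-pip scan-cluster` should print nothing. After that, `getent hosts scan-cluster` should show 134.80.101.6.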

2.3 Create users and groups

On both rac01 and rac02, as root, create the groups and users and set the users' passwords:

groupadd -g 1000 oinstall

groupadd -g 1200 dba

groupadd -g 1201 oper

groupadd -g 1300 asmadmin

groupadd -g 1301 asmdba

groupadd -g 1302 asmoper

useradd -u 1100 -g oinstall -G asmadmin,asmdba,asmoper,oper,dba grid

useradd -u 1101 -g oinstall -G dba,oper,asmdba oracle

passwd grid

passwd oracle

2.4 Create directories and set ownership

Create the directories and set their ownership on both rac01 and rac02.

Run the following as root:

mkdir -p /u01/app/11.2.0/grid

mkdir -p /u01/app/grid

mkdir /u01/app/oracle

chown -R grid:oinstall /u01

chown oracle:oinstall /u01/app/oracle

chmod -R 775 /u01/

2.5 Configure user environment variables

2.5.1 Configure the grid user

rac01:

[grid@rac01 ~]$ vim .bash_profile

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_SID=+ASM1

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/11.2.0/grid

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

umask 022

[grid@rac01 ~]$ source .bash_profile

rac02:

[grid@rac02 ~]$ vim .bash_profile

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_SID=+ASM2

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/11.2.0/grid

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

umask 022

[grid@rac02 ~]$ source .bash_profile

2.5.2 Configure the oracle user

rac01:

[oracle@rac01 ~]$ vim .bash_profile

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_SID=orcl1

export ORACLE_UNQNAME=orcl

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/11.2.0/db_1

export TNS_ADMIN=$ORACLE_HOME/network/admin

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

[oracle@rac01 ~]$ source .bash_profile

rac02:

[oracle@rac02 ~]$ vim .bash_profile

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_SID=orcl2

export ORACLE_UNQNAME=orcl

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/11.2.0/db_1

export TNS_ADMIN=$ORACLE_HOME/network/admin

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

[oracle@rac02 ~]$ source .bash_profile

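2.6 Configure SSH user equivalence for the grid and oracle users

Run the steps below once as the grid user and once as the oracle user.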

rac01:

ssh-keygen -t rsa    # press Enter at every prompt

ssh-keygen -t dsa    # press Enter at every prompt

rac02:

ssh-keygen -t rsa    # press Enter at every prompt

ssh-keygen -t dsa    # press Enter at every prompt

Run the two commands above on rac01 and then on rac02. Then go back to rac01 and run:

cat ~/.ssh/id_rsa.pub >>~/.ssh/authorized_keys

cat ~/.ssh/id_dsa.pub >>~/.ssh/authorized_keys

ssh rac02 cat ~/.ssh/id_rsa.pub >>~/.ssh/authorized_keys

ssh rac02 cat ~/.ssh/id_dsa.pub >>~/.ssh/authorized_keys

scp ~/.ssh/authorized_keys rac02:~/.ssh/authorized_keys

chmod 600 .ssh/authorized_keys

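From each node, ssh once to every host name below. This accepts the host keys and confirms that the connections work: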

ssh rac01 date

ssh rac02 date

ssh rac01-pip date

ssh rac02-pip date
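Once the manual logins above have accepted the host keys, equivalence can be re-checked without interaction. In this hypothetical helper, `-o BatchMode=yes` makes ssh fail instead of prompting for a password, so any host that still asks for one is reported. The 5-second connect timeout is an arbitrary choice.

```shell
#!/usr/bin/env bash
# check_ssh HOST...  (hypothetical helper)
# Tries a non-interactive ssh to each host and prints "FAILED: <host>" for any that does not succeed.
check_ssh() {
  local h rc=0
  for h in "$@"; do
    # BatchMode=yes: never prompt for a password, so missing equivalence shows up as a failure.
    if ! ssh -o BatchMode=yes -o ConnectTimeout=5 "$h" date >/dev/null 2>&1; then
      echo "FAILED: $h"
      rc=1
    fi
  done
  return $rc
}
```

Run `check_ssh rac01 rac02 rac01-pip rac02-pip` as both grid and oracle on each node. It should print nothing.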

2.7 Configure system parameters

2.7.1 Disable SELinux

On both rac01 and rac02, make sure SELINUX=disabled:

[root@rac01 ~]# cat /etc/sysconfig/selinux

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

2.7.2 Edit /etc/sysctl.conf

On both rac01 and rac02, append the following to the end of /etc/sysctl.conf:

[root@rac01 ~]# vim /etc/sysctl.conf

kernel.msgmnb = 65536

kernel.msgmax = 65536

#shmmax: about half of physical RAM, in bytes

kernel.shmmax = 68719476736

#shmall: physical RAM size / page size (see getconf PAGESIZE), in pages

kernel.shmall = 4294967296

fs.aio-max-nr = 1048576

fs.file-max = 6815744

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048586

net.ipv4.tcp_wmem = 262144 262144 262144

net.ipv4.tcp_rmem = 4194304 4194304 4194304

[root@rac01 ~]# sysctl -p    # apply the settings immediately
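The two shared-memory annotations above are simple arithmetic: shmmax is half of physical RAM in bytes, and shmall is physical RAM divided by the page size. The hypothetical helper below does that calculation. The 128 GiB figure in the test is an assumption, chosen because it yields the shmmax value listed above.

```shell
#!/usr/bin/env bash
# shm_params RAM_BYTES PAGE_SIZE  (hypothetical helper)
# Prints kernel.shmmax (half of RAM, bytes) and kernel.shmall (RAM in pages).
shm_params() {
  local ram=$1 page=$2
  echo "kernel.shmmax = $(( ram / 2 ))"
  echo "kernel.shmall = $(( ram / page ))"
}
```

On a real node, the inputs can come from /proc/meminfo (in kB) and getconf: `shm_params "$(( $(awk '/^MemTotal:/ {print $2}' /proc/meminfo) * 1024 ))" "$(getconf PAGESIZE)"`. After `sysctl -p`, `sysctl -n kernel.shmmax kernel.shmall` shows the values in effect.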

2.7.3 Edit limits.conf

Run on both rac01 and rac02.

[root@rac01 ~]# vim /etc/security/limits.conf, and append the following to the end:

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft stack 10240

grid soft nproc 2047

grid hard nproc 16384

grid soft nofile 1024

grid hard nofile 65536

grid soft stack 10240
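The oracle and grid entries differ only in the user name, so they can be generated instead of typed twice. This hypothetical helper prints the same five lines as above for one account.

```shell
#!/usr/bin/env bash
# limits_for_user USER  (hypothetical helper)
# Prints the limits.conf entries used in this guide for one account.
limits_for_user() {
  local u=$1
  printf '%s soft nproc 2047\n'   "$u"
  printf '%s hard nproc 16384\n'  "$u"
  printf '%s soft nofile 1024\n'  "$u"
  printf '%s hard nofile 65536\n' "$u"
  printf '%s soft stack 10240\n'  "$u"
}
```

For example: `{ limits_for_user oracle; limits_for_user grid; } >> /etc/security/limits.conf`. After logging in again, check the effective values with `su - grid -c 'ulimit -Sn; ulimit -Hn; ulimit -u'`.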

2.7.4 Edit /etc/pam.d/login

Run on both rac01 and rac02.

[root@rac01 ~]# vim /etc/pam.d/login, and insert the following below the line session required pam_namespace.so:

session required pam_limits.so

2.7.5 Edit /etc/profile

Run on both rac01 and rac02.

[root@rac01 ~]# cp /etc/profile /etc/profile.bak

[root@rac01 ~]# vim /etc/profile, and append the following to the end of the file:

if [ "$USER" = "oracle" ] || [ "$USER" = "grid" ]; then

if [ "$SHELL" = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

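2.7.6 Stop and disable ntpd and remove its configuration

Run on both rac01 and rac02. Without an NTP configuration, Oracle's Cluster Time Synchronization Service (CTSS) runs in active mode and keeps the node clocks in sync.

[root@rac01 ~]# service ntpd status

[root@rac01 ~]# service ntpd stop

[root@rac01 ~]# chkconfig ntpd off

[root@rac01 ~]# cp /etc/ntp.conf /etc/ntp.conf.bak

[root@rac01 ~]# rm -f /etc/ntp.conf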

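2.8 Partition the shared disks

On rac01, run fdisk /dev/sdb.

At the prompts, enter n (new partition), p (primary), and so on. Finish with w to write the partition table.

Repeat the same steps on sdc, sdd, sde, and the other shared disks.

After partitioning, run the following on rac02:

[root@rac02 ~]# partprobe    # make the new partitions visible immediately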
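After `partprobe`, it is worth confirming that rac02 actually sees every partition before ASM disks are created. The hypothetical helper below prints any listed path that is not a block device on the current node. It assumes the device names are the same on both nodes, which iSCSI does not guarantee.

```shell
#!/usr/bin/env bash
# missing_partitions DEVICE...  (hypothetical helper)
# Prints each path that is not a block device on this node; returns 1 if any are missing.
missing_partitions() {
  local d rc=0
  for d in "$@"; do
    if [ ! -b "$d" ]; then
      echo "$d"
      rc=1
    fi
  done
  return $rc
}
```

The partitions used in 2.10.2 can be checked with: `missing_partitions /dev/sdc1 /dev/sdc2 /dev/sdc3 /dev/sdd1 /dev/sde1 /dev/sdf1 /dev/sdg1 /dev/sdh1 && echo "all partitions visible"`.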

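2.9 Install the ASMLib packages

The disks here are configured with ASMLib, so the oracleasm RPM packages must be installed on both nodes:

[root@rac01 ~]# yum install kmod-oracleasm

[root@rac01 ~]# rpm -ivh oracleasmlib-2.0.12-1.el6.x86_64.rpm

[root@rac01 ~]# rpm -ivh oracleasm-support-2.1.8-1.el6.x86_64.rpm

kmod-oracleasm can be installed directly with yum. The other two packages must be downloaded from Oracle's website.

Download page: https://www.oracle.com/linux/downloads/linux-asmlib-rhel7-downloads.html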

2.10 Create the ASM disk volumes

2.10.1 Configure and load the ASM kernel module

Run on both rac01 and rac02.

[root@rac01 ~]# oracleasm configure -i, and answer the prompts as follows:

Configuring the Oracle ASM library driver.

This will configure the on-boot properties of the Oracle ASM library

driver. The following questions will determine whether the driver is

loaded on boot and what permissions it will have. The current values

will be shown in brackets ('[]'). Hitting <ENTER> without typing an

answer will keep that current value. Ctrl-C will abort.

Default user to own the driver interface []: grid

Default group to own the driver interface []: asmadmin

Start Oracle ASM library driver on boot (y/n) [n]: y

Scan for Oracle ASM disks on boot (y/n) [y]: y

Writing Oracle ASM library driver configuration: done

[root@rac01 ~]# oracleasm init

Creating /dev/oracleasm mount point: /dev/oracleasm

Loading module "oracleasm": oracleasm

Mounting ASMlib driver filesystem: /dev/oracleasm

2.10.2 Create the ASM disks

Run on rac01 only, as root:

oracleasm createdisk CRSVOL1 /dev/sdc1

oracleasm createdisk FRAVOL1 /dev/sdc2

oracleasm createdisk ARCVOL1 /dev/sdc3

oracleasm createdisk DATAVOL1 /dev/sdd1

oracleasm createdisk DATAVOL2 /dev/sde1

oracleasm createdisk DATAVOL3 /dev/sdf1

oracleasm createdisk DATAVOL4 /dev/sdg1

oracleasm createdisk DATAVOL5 /dev/sdh1

[root@rac01 ~]# oracleasm listdisks

ARCVOL1

CRSVOL1

DATAVOL1

DATAVOL2

DATAVOL3

DATAVOL4

DATAVOL5

FRAVOL1

Run oracleasm-discover to check that the disks just created can be found:

[root@rac01 ~]# oracleasm-discover

Using ASMLib from /opt/oracle/extapi/64/asm/orcl/1/libasm.so

[ASM Library - Generic Linux, version 2.0.4 (KABI_V2)]

Discovered disk: ORCL:CRSVOL1 [2096753 blocks (1073537536 bytes), maxio 512]

Discovered disk: ORCL:DATAVOL1 [41940960 blocks (21473771520 bytes), maxio 512]

Discovered disk: ORCL:DATAVOL2 [41940960 blocks (21473771520 bytes), maxio 512]

Discovered disk: ORCL:FRAVOL1 [62912480 blocks (32211189760 bytes), maxio 512]

On rac02, scan for the ASM disks with oracleasm scandisks:

[root@rac02 ~]# oracleasm scandisks

Reloading disk partitions: done

Cleaning any stale ASM disks...

Scanning system for ASM disks...

[root@rac02 ~]# oracleasm listdisks

ARCVOL1

CRSVOL1

DATAVOL1

DATAVOL2

DATAVOL3

DATAVOL4

DATAVOL5

FRAVOL1
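Both nodes must see the same set of ASM disk labels. Rather than comparing the two listings by eye, the hypothetical helper below prints any label that appears on only one side.

```shell
#!/usr/bin/env bash
# diff_disk_lists "LIST_A" "LIST_B"  (hypothetical helper)
# Each argument is a newline-separated list of disk labels. Labels found on only one side are printed;
# labels that appear only in LIST_B are indented with a tab (comm's column 2).
diff_disk_lists() {
  # comm needs sorted input; -3 suppresses the lines common to both lists.
  comm -3 <(printf '%s\n' "$1" | sort) <(printf '%s\n' "$2" | sort)
}
```

For example, paste the `oracleasm listdisks` output from each node into the two arguments. If root can ssh to rac02 (root equivalence is not set up in this guide), you can use `diff_disk_lists "$(oracleasm listdisks)" "$(ssh rac02 oracleasm listdisks)"` instead. Empty output means both lists match.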

3. Install the RAC clusterware (Grid Infrastructure)

3.1 Install the packages Oracle depends on

3.1.1 Configure the yum repository

cd /etc/yum.repos.d/

Download the repo file:

wget http://mirrors.aliyun.com/repo/Centos-7.repo

mv CentOS-Base.repo CentOS-Base.repo.bak

mv Centos-7.repo CentOS-Base.repo

Rebuild the yum cache and update the system:

yum clean all

yum makecache

yum update

3.1.2 Install Oracle's dependencies

[root@rac01 ~]# yum -y install binutils compat-libstdc++ elfutils-libelf elfutils-libelf-devel gcc gcc-c++ glibc glibc-common glibc-devel glibc-headers kernel-headers ksh libaio libaio-devel libgcc libgomp libstdc++ libstdc++-devel numactl-devel sysstat unixODBC unixODBC-devel compat-libstdc++* libXp

[root@rac01 ~]# rpm -ivh pdksh-5.2.14-30.x86_64.rpm    # this package must be downloaded separately

[root@rac02 ~]# (same as on rac01)
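To confirm that nothing from the list was silently skipped, you can query rpm for each name. The helper below is hypothetical. It only reports what `rpm -q` says is not installed; cluvfy in the next step remains the authoritative check.

```shell
#!/usr/bin/env bash
# missing_pkgs PKG...  (hypothetical helper)
# Prints every package name that `rpm -q` reports as not installed.
missing_pkgs() {
  local p
  for p in "$@"; do
    rpm -q "$p" >/dev/null 2>&1 || echo "$p"
  done
}
```

For example, on each node: `missing_pkgs binutils elfutils-libelf-devel gcc gcc-c++ glibc-devel ksh libaio libaio-devel libstdc++-devel sysstat unixODBC unixODBC-devel`.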

3.2 Pre-installation checks

As the grid user, run this script on both rac01 and rac02.

[grid@rac01 ~]$ cd /opt/grid/    # the directory the software was uploaded to

[grid@rac01 grid]$ ./runcluvfy.sh stage -pre crsinst -n rac01,rac02 -fixup -verbose

The output looks like this:

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

Performing pre-checks for cluster services setup

Checking node reachability...

Check: Node reachability from node "rac01"

Destination Node Reachable?

------------------------------------ ------------------------

rac01 yes

rac02 yes

Result: Node reachability check passed from node "rac01"

Checking user equivalence...

Check: User equivalence for user "grid"

Node Name Status

------------------------------------ ------------------------

rac02 passed

rac01 passed

Result: User equivalence check passed for user "grid"

(part of the output omitted)

Checking the file "/etc/resolv.conf" to make sure only one of domain and search entries is defined

File "/etc/resolv.conf" does not have both domain and search entries defined

Checking if domain entry in file "/etc/resolv.conf" is consistent across the nodes...

domain entry in file "/etc/resolv.conf" is consistent across nodes

Checking if search entry in file "/etc/resolv.conf" is consistent across the nodes...

search entry in file "/etc/resolv.conf" is consistent across nodes

Checking DNS response time for an unreachable node

Node Name Status

------------------------------------ ------------------------

rac02 passed

rac01 passed

The DNS response time for an unreachable node is within acceptable limit on all nodes

File "/etc/resolv.conf" is consistent across nodes

Check: Time zone consistency

Result: Time zone consistency check passed

Pre-check for cluster services setup was successful.

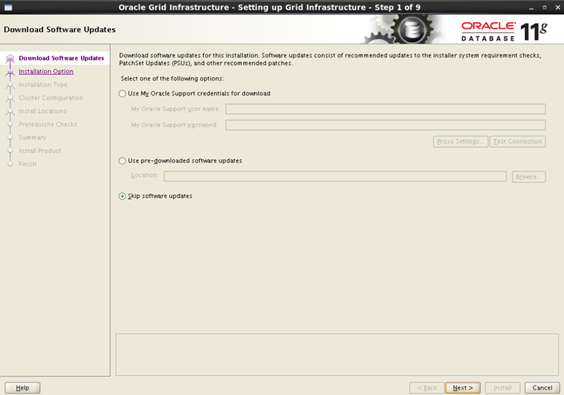

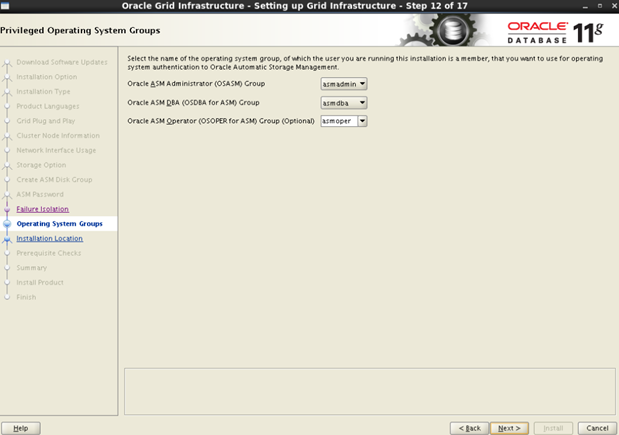

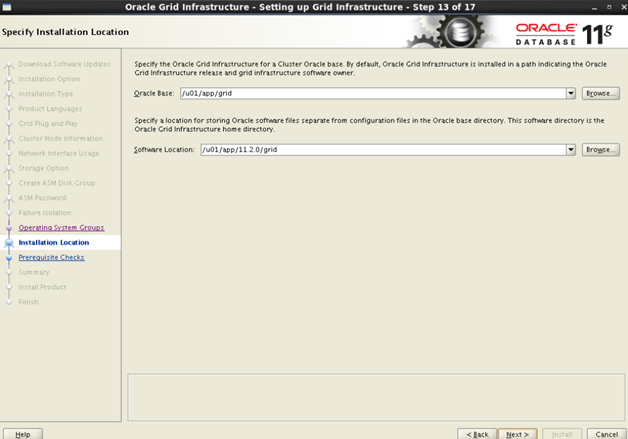

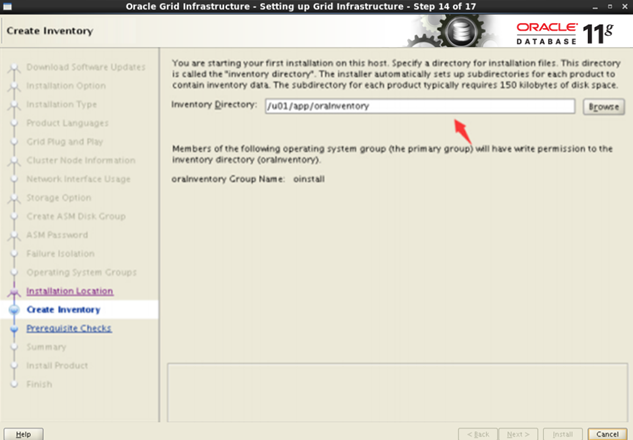

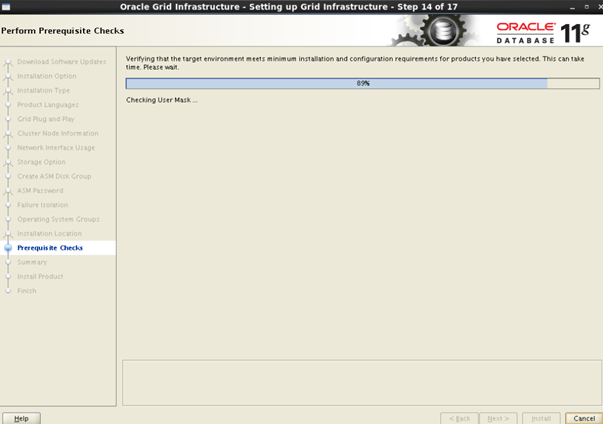
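The verbose cluvfy report is long, so failed checks are easy to miss. The hypothetical filter below keeps only lines that mention a failure or a PRVF- error code, which is how 11.2 cluvfy labels its errors. Saving the output with `tee` first is an assumption about how you capture it.

```shell
#!/usr/bin/env bash
# cluvfy_problems  (hypothetical helper)
# Reads cluvfy output on stdin and prints only lines that report a failure or a PRVF- error.
cluvfy_problems() {
  grep -Ei 'failed|PRVF-[0-9]+' || true   # "|| true": no matches is not an error here
}
```

For example: `./runcluvfy.sh stage -pre crsinst -n rac01,rac02 -fixup -verbose | tee /tmp/cluvfy_pre.log`, then `cluvfy_problems < /tmp/cluvfy_pre.log`.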

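3.3 Install the Grid software

Make sure both nodes, rac01 and rac02, are up. Then log in as the grid user and start the Oracle Grid Infrastructure installation. This must be done in a graphical session.

[grid@rac01 ~]$ cd /opt/grid/    # the directory the software was uploaded to

To display the installer GUI, you can install an X server such as Xmanager on your workstation.

[grid@rac01 grid]$ export DISPLAY=<your_workstation_ip>:0.0

[grid@rac01 grid]$ ./runInstaller

Select "Skip software updates".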

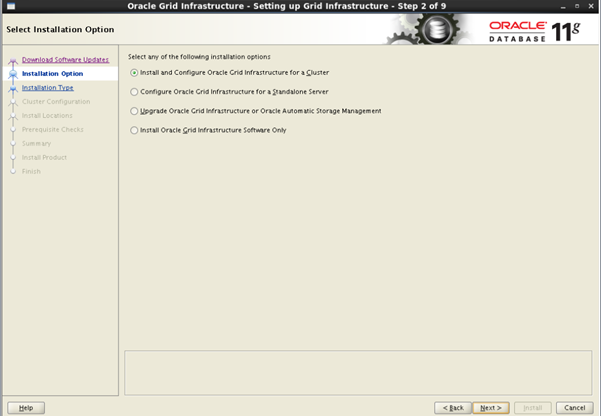

Keep the default selection, "Install and Configure Oracle Grid Infrastructure for a Cluster".

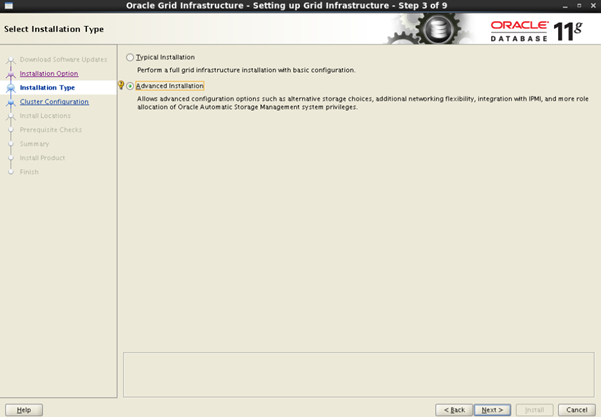

Select Advanced Installation.

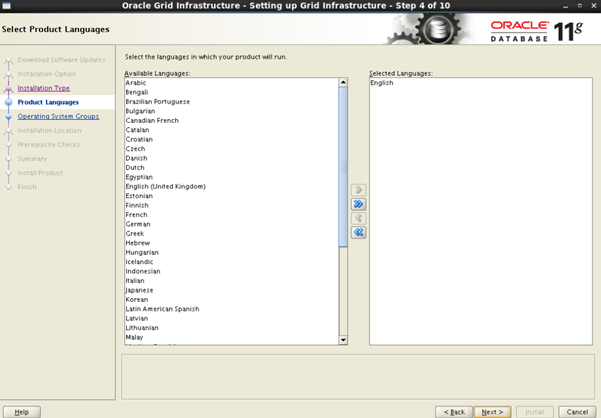

Select English.

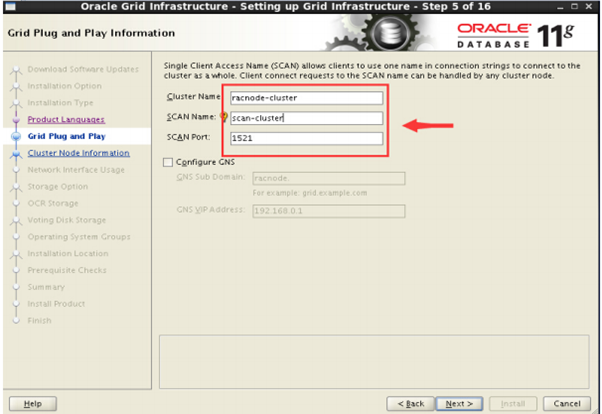

Clear Configure GNS, then set the Cluster Name, the SCAN Name, and the SCAN Port (1521).

Note: the SCAN Name must match /etc/hosts, so here it is scan-cluster. The Cluster Name can be anything you choose.

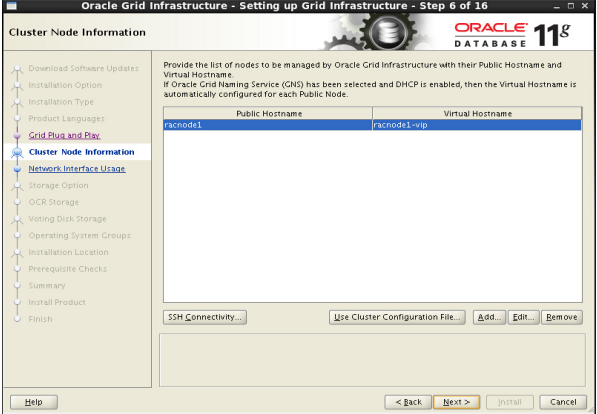

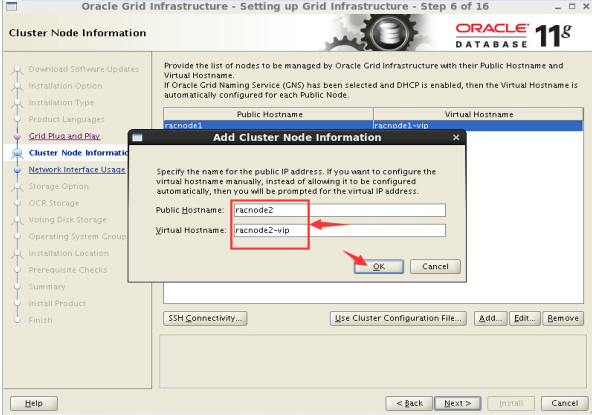

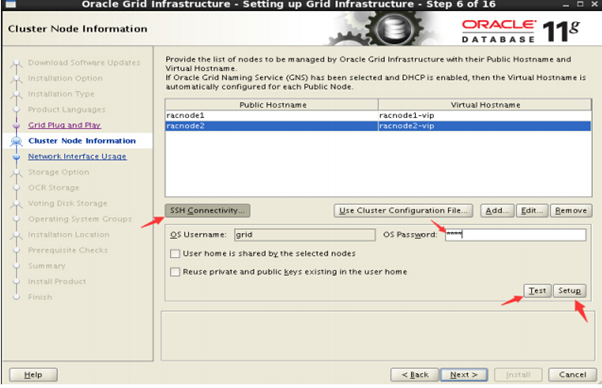

Click Add to add a node.

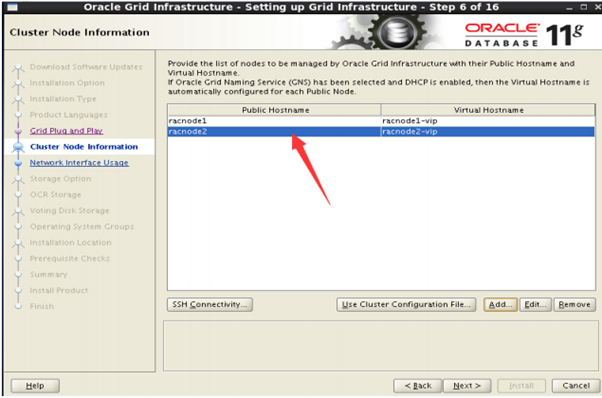

Enter rac02 as the Public Hostname and rac02-vip as the Virtual Hostname.

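The final result is as follows.

Verify SSH equivalence:

1) If SSH equivalence was not set up earlier: click SSH Connectivity, enter the grid user's OS password (here: grid), click Setup, and wait. When setup succeeds, click Test, then continue.

2) If SSH equivalence was already set up: click Test, or simply click Next.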

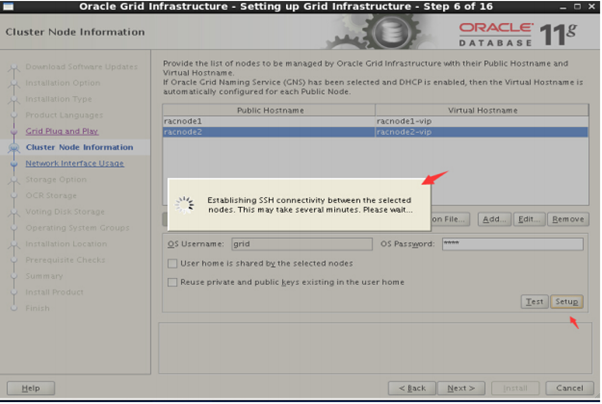

After clicking Setup:

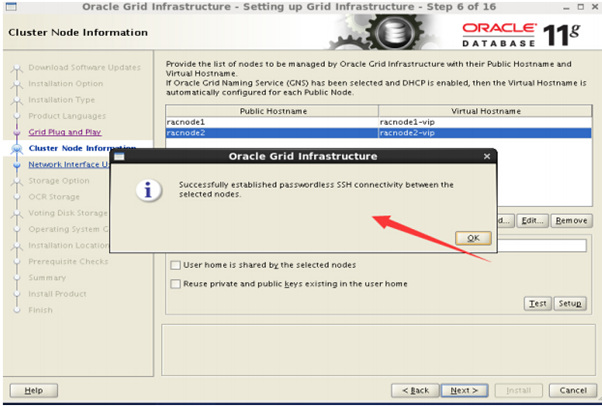

Click OK.

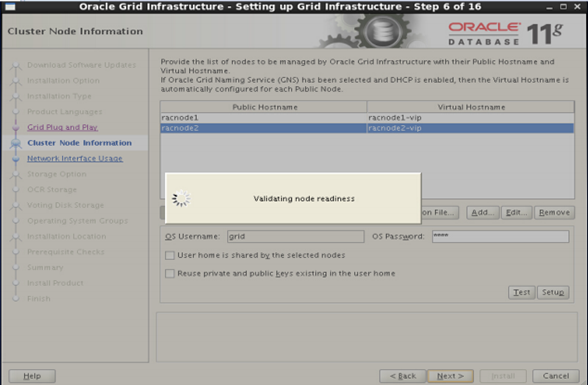

Click Next.

Accept the defaults on the following screens.

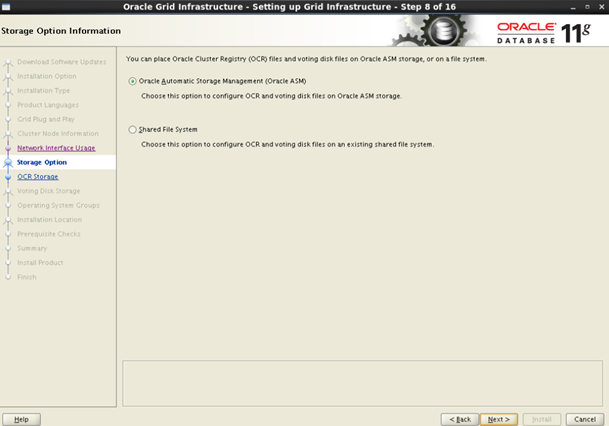

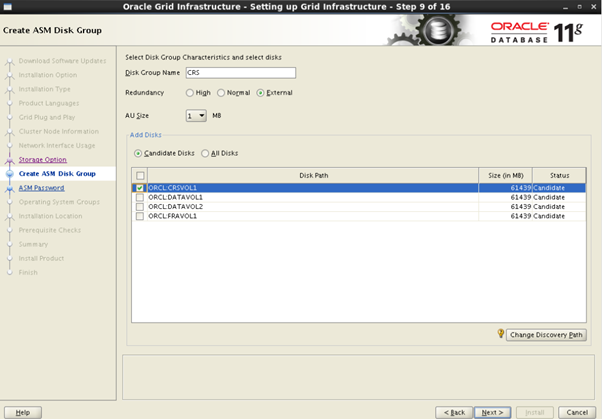

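Create the ASM disk group. If no disks are listed, click Change Discovery Path and enter the location of the disks (for ASMLib disks, for example /dev/oracleasm/disks/*).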

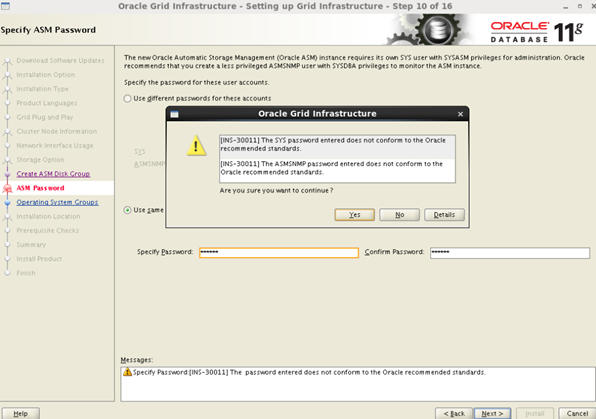

Set the passwords. If a password is fairly simple, a warning appears, and you can continue anyway.

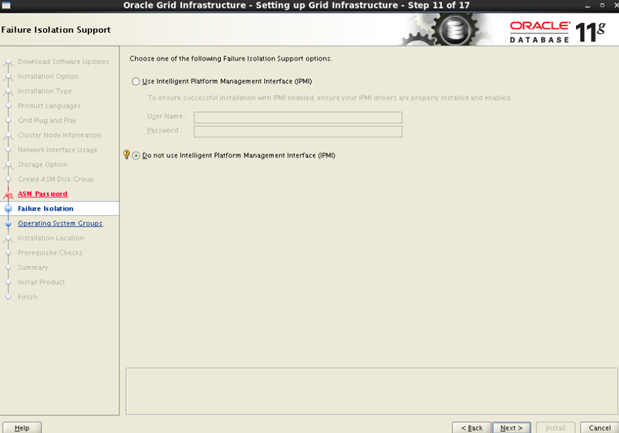

Accept the defaults on the following screens.

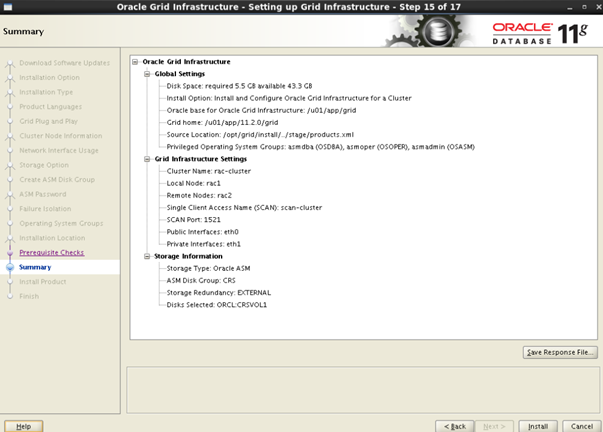

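The prerequisite checks run next. Some warnings can be ignored. For any other error, analyze and fix it based on the specific message, then click Next.

Accept the defaults and click Install to start the installation.

Installation in progress: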

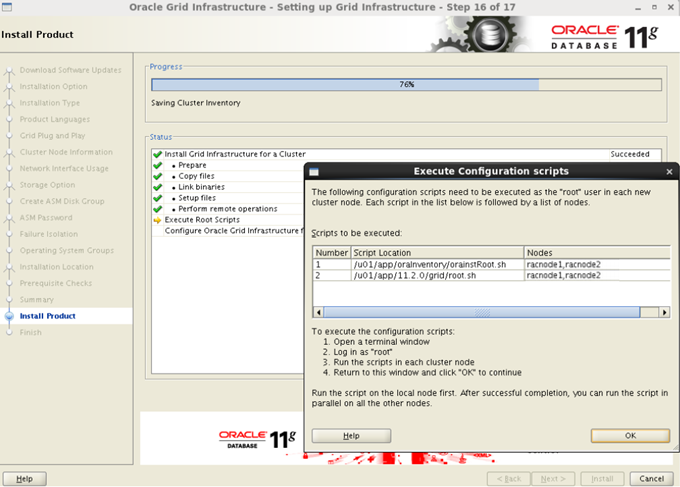

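You are then prompted to run the configuration scripts.

Run them as root, and never on both nodes at the same time.

First run /u01/app/oraInventory/orainstRoot.sh on rac01,

then /u01/app/oraInventory/orainstRoot.sh on rac02.

Next, run /u01/app/11.2.0/grid/root.sh on rac01,

then /u01/app/11.2.0/grid/root.sh on rac02.

When the installation finishes, click Close. This completes the clusterware installation. The next step is to verify it.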

Some of the screenshots above come from the internet and may not match your screens exactly. The steps are the same.

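3.4 Verify the Oracle Grid Infrastructure installation

The checks should produce output similar to the following.

The output shown is from the first node (named racnode1 in this pasted output). The second node's output is omitted.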

[root@racnode1 ~]# su - grid

[grid@racnode1 ~]$ crsctl check crs

CRS-4638: Oracle High Availability Services is online

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online
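In scripts or repeated checks, you can test for the four "is online" messages above instead of reading them. The helper below is hypothetical and matches the message codes exactly as they appear in this output.

```shell
#!/usr/bin/env bash
# crs_all_online  (hypothetical helper)
# Reads `crsctl check crs` output on stdin and succeeds only if
# CRS-4638, CRS-4537, CRS-4529 and CRS-4533 all report "is online".
crs_all_online() {
  local n
  n=$(grep -cE '^CRS-(4638|4537|4529|4533): .* is online$')
  [ "$n" -eq 4 ]
}
```

Usage on each node, as grid: `crsctl check crs | crs_all_online && echo "CRS stack healthy on $(hostname)"`.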

Check the Clusterware resources:

[grid@racnode1 ~]$ crs_stat -t -v

Name Type R/RA F/FT Target State Host

----------------------------------------------------------------------

ora.CRS.dg ora....up.type 0/5 0/ ONLINE ONLINE racnode1

ora.DATA.dg ora....up.type 0/5 0/ ONLINE ONLINE racnode1

ora.FRA.dg ora....up.type 0/5 0/ ONLINE ONLINE racnode1

ora....ER.lsnr ora....er.type 0/5 0/ ONLINE ONLINE racnode1

ora....N1.lsnr ora....er.type 0/5 0/0 ONLINE ONLINE racnode1

ora.asm ora.asm.type 0/5 0/ ONLINE ONLINE racnode1

ora.cvu ora.cvu.type 0/5 0/0 ONLINE ONLINE racnode2

ora.gsd ora.gsd.type 0/5 0/ OFFLINE OFFLINE

ora....network ora....rk.type 0/5 0/ ONLINE ONLINE racnode1

ora.oc4j ora.oc4j.type 0/1 0/2 ONLINE ONLINE racnode2

ora.ons ora.ons.type 0/3 0/ ONLINE ONLINE racnode1

ora....SM1.asm application 0/5 0/0 ONLINE ONLINE racnode1

ora....C1.lsnr application 0/5 0/0 ONLINE ONLINE racnode1

ora.racnode1.gsd application 0/5 0/0 OFFLINE OFFLINE

ora.racnode1.ons application 0/3 0/0 ONLINE ONLINE racnode1

ora.racnode1.vip ora....t1.type 0/0 0/0 ONLINE ONLINE racnode1

ora....SM2.asm application 0/5 0/0 ONLINE ONLINE racnode2

ora....C2.lsnr application 0/5 0/0 ONLINE ONLINE racnode2

ora.racnode2.gsd application 0/5 0/0 OFFLINE OFFLINE

ora.racnode2.ons application 0/3 0/0 ONLINE ONLINE racnode2

ora.racnode2.vip ora....t1.type 0/0 0/0 ONLINE ONLINE racnode2

ora.scan1.vip ora....ip.type 0/0 0/0 ONLINE ONLINE racnode1

Check the cluster nodes:

[grid@racnode1 ~]$ olsnodes -n

racnode1 1

racnode2 2

Check the Oracle TNS listener processes on both nodes:

[grid@racnode1 ~]$ ps -ef|grep lsnr|grep -v 'grep'

grid 94448 1 0 15:04 ? 00:00:00 /u01/app/11.2.0/grid/bin/tnslsnr LISTENER -inherit

grid 94485 1 0 15:04 ? 00:00:00 /u01/app/11.2.0/grid/bin/tnslsnr LISTENER_SCAN1 -inherit

[grid@racnode1 ~]$ ps -ef|grep lsnr|grep -v 'grep'|awk '{print $9}'

LISTENER

LISTENER_SCAN1

Confirm that Oracle ASM is running for the Oracle Clusterware files:

[grid@racnode1 ~]$ srvctl status asm -a

ASM is running on racnode1,racnode2

ASM is enabled.

Check the Oracle Cluster Registry (OCR):

[grid@racnode1 ~]$ ocrcheck

Status of Oracle Cluster Registry is as follows :

Version : 3

Total space (kbytes) : 262120

Used space (kbytes) : 2624

Available space (kbytes) : 259496

ID : 1555425658

Device/File Name : +CRS

Device/File integrity check succeeded

Device/File not configured

Device/File not configured

Device/File not configured

Device/File not configured

Cluster registry integrity check succeeded

Logical corruption check bypassed due to non-privileged user

Check the voting disk:

[grid@racnode1 ~]$ crsctl query css votedisk

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 0d90b0c368684ff5bff8f2094823b901 (ORCL:CRSVOL1) [CRS]

Located 1 voting disk(s).

3.5 Errors encountered while installing Grid

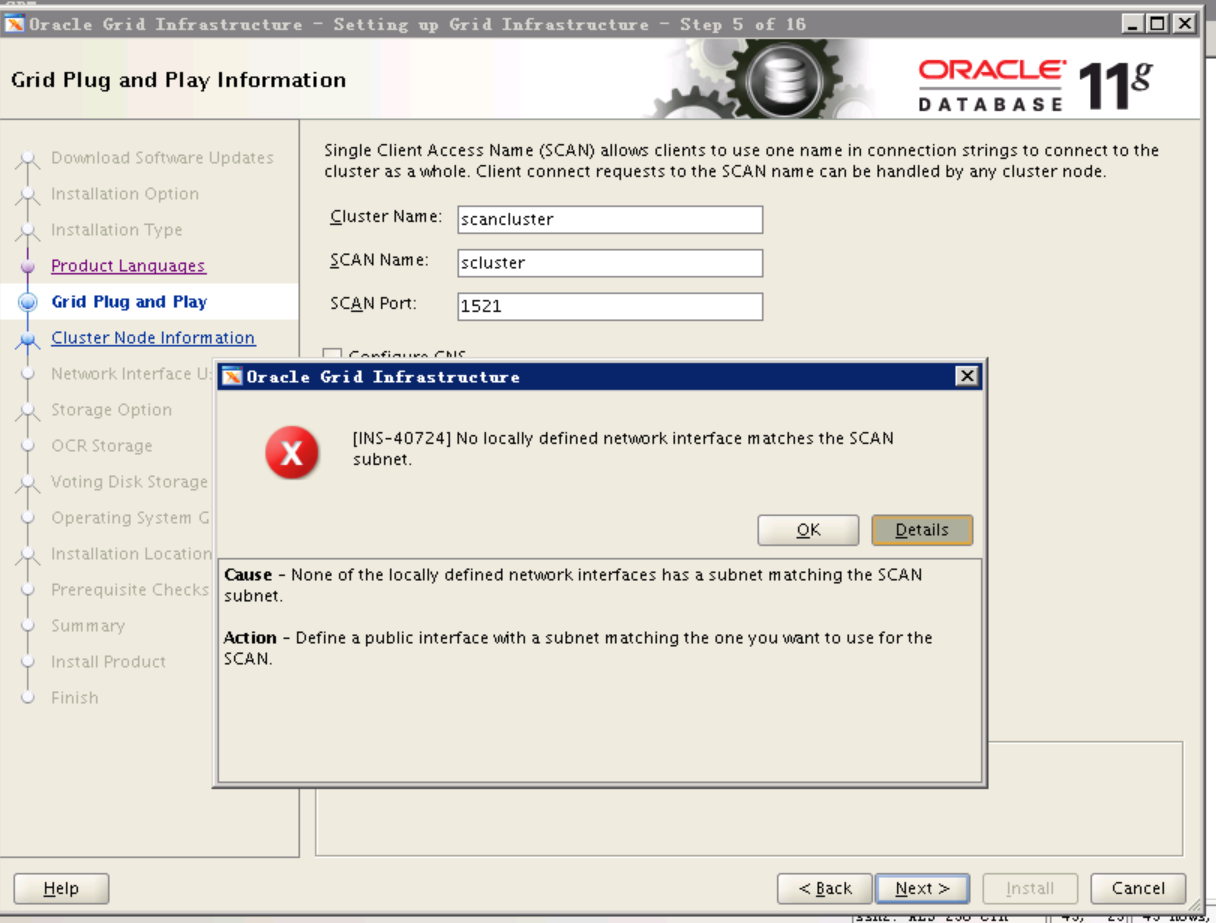

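3.5.1 Error: the SCAN IP is not in the same subnet

Cause: the SCAN IP, rac01-vip, and rac02-vip were not in the same subnet as rac01 and rac02.

Fix: ask the systems team to reassign the IPs within the same VLAN.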

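3.5.2 Error: not enough space in /tmp

/tmp did not have enough free space, so the filesystem had to be extended.

Fix: check the free space in the volume group with vgs. Then extend the root logical volume and grow the XFS filesystem: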

lvextend -L +100G /dev/mapper/centos-root

xfs_growfs /dev/mapper/centos-root
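You can check the space beforehand to avoid hitting this error mid-install. The 11.2 installation guide asks for at least 1 GB free in /tmp. The helper below is hypothetical and simply reads the "Available" column of POSIX-format `df` output in megabytes.

```shell
#!/usr/bin/env bash
# free_mb PATH  (hypothetical helper)
# Prints the free space, in MB, on the filesystem that holds PATH.
free_mb() {
  # -P: one line per filesystem (no wrapping); -m: sizes in MB; column 4 is "Available".
  df -Pm "$1" | awk 'NR == 2 { print $4 }'
}
```

For example: `[ "$(free_mb /tmp)" -ge 1024 ] || echo "/tmp has less than 1 GB free"`.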

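3.5.3 The check reports that the cvuqdisk-1.0.9-1 RPM is not installed

Fix (the package is in the rpm directory of the grid installation media):

1. cd grid/rpm

2. rpm -ivh cvuqdisk-1.0.9-1.rpm. Before installing it, make sure smartmontools is installed (yum install smartmontools). Do this on all nodes.

3. Click Check Again. The issue is resolved.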

3.5.4 ohasd failed to start

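When root.sh was run at the end, it failed with: ohasd failed to start

Cause: RHEL 7 uses systemd rather than init to start and restart processes, but root.sh starts the ohasd process through the traditional init mechanism (/etc/inittab).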

[root@rac01 grid]# ./root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u1/db/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u1/db/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

OLR initialization - successful

root wallet

root wallet cert

root cert export

peer wallet

profile reader wallet

pa wallet

peer wallet keys

pa wallet keys

peer cert request

pa cert request

peer cert

pa cert

peer root cert TP

profile reader root cert TP

pa root cert TP

peer pa cert TP

pa peer cert TP

profile reader pa cert TP

profile reader peer cert TP

peer user cert

pa user cert

Adding Clusterware entries to inittab

ohasd failed to start

Failed to start the Clusterware. Last 20 lines of the alert log follow:

2019-10-31 11:19:36.572

[client(33002)]CRS-2101:The OLR was formatted using version 3.

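Fix:

On RHEL 7, ohasd must be set up as a systemd service before root.sh is run.

Steps:

1. As root, create the service file:

[root@rac01 ~]# touch /usr/lib/systemd/system/ohas.service

[root@rac01 ~]# chmod 644 /usr/lib/systemd/system/ohas.service

2. Add the following content to the new ohas.service file: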

[root@rac1 init.d]# cat /usr/lib/systemd/system/ohas.service

[Unit]

Description=Oracle High Availability Services

After=syslog.target

[Service]

ExecStart=/etc/init.d/init.ohasd run

Type=simple

Restart=always

[Install]

WantedBy=multi-user.target

3. As root, run the following commands:

systemctl daemon-reload

systemctl enable ohas.service

systemctl start ohas.service

4. Check the status:

[root@rac1 init.d]# systemctl status ohas.service

ohas.service - Oracle High Availability Services

Loaded: loaded (/usr/lib/systemd/system/ohas.service; enabled)

Active: failed (Result: start-limit) since Fri 2015-09-11 16:07:32 CST; 1s ago

Process: 5734 ExecStart=/etc/init.d/init.ohasd run >/dev/null 2>&1 Type=simple (code=exited, status=203/EXEC)

Main PID: 5734 (code=exited, status=203/EXEC)

Sep 11 16:07:32 rac1 systemd[1]: Starting Oracle High Availability Services...

Sep 11 16:07:32 rac1 systemd[1]: Started Oracle High Availability Services.

Sep 11 16:07:32 rac1 systemd[1]: ohas.service: main process exited, code=exited, status=203/EXEC

Sep 11 16:07:32 rac1 systemd[1]: Unit ohas.service entered failed state.

Sep 11 16:07:32 rac1 systemd[1]: ohas.service holdoff time over, scheduling restart.

Sep 11 16:07:32 rac1 systemd[1]: Stopping Oracle High Availability Services...

Sep 11 16:07:32 rac1 systemd[1]: Starting Oracle High Availability Services...

Sep 11 16:07:32 rac1 systemd[1]: ohas.service start request repeated too quickly, refusing to start.

Sep 11 16:07:32 rac1 systemd[1]: Failed to start Oracle High Availability Services.

Sep 11 16:07:32 rac1 systemd[1]: Unit ohas.service entered failed state.

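At this point the status is failed, because /etc/init.d/init.ohasd does not exist yet. root.sh is what creates it.

rac01 had already run root.sh, so the service shows as active there, and running root.sh again succeeds.

On rac02, the first run of root.sh still fails. After it finishes, run systemctl restart ohas.service. The service then starts, and running root.sh again succeeds.

Part of the output after a successful run: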

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u1/db/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u1/db/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

OLR initialization - successful

Adding Clusterware entries to inittab

CRS-4402: The CSS daemon was started in exclusive mode but found an active CSS daemon on node crmtest1, number 1, and is terminating

An active cluster was found during exclusive startup, restarting to join the cluster

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

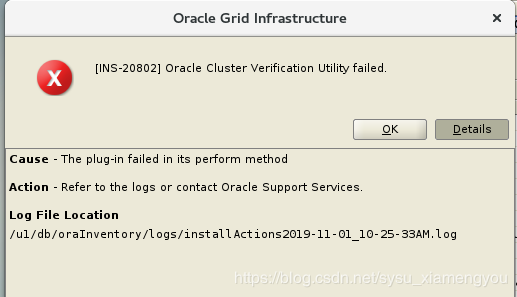
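The touch, chmod, and edit steps in this fix can be done in one step by writing the unit file from a script. The function below is a hypothetical helper. It takes the target directory as a parameter, defaulting to /usr/lib/systemd/system, so it can be tried on a scratch directory first. It puts Type=simple on its own line, because systemd treats the rest of an ExecStart= line as the command and its arguments and does not perform shell redirection.

```shell
#!/usr/bin/env bash
# write_ohas_unit [DIR]  (hypothetical helper)
# Writes ohas.service into DIR (default /usr/lib/systemd/system) with mode 644.
write_ohas_unit() {
  local dir=${1:-/usr/lib/systemd/system}
  cat > "$dir/ohas.service" <<'EOF'
[Unit]
Description=Oracle High Availability Services
After=syslog.target

[Service]
ExecStart=/etc/init.d/init.ohasd run
Type=simple
Restart=always

[Install]
WantedBy=multi-user.target
EOF
  chmod 644 "$dir/ohas.service"
}
```

As root, before running root.sh: `write_ohas_unit && systemctl daemon-reload && systemctl enable ohas.service`.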

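3.5.5 INS-20802: cluster verification fails at the last step

Fix:

Reports online attribute this error to a SCAN IP resolution failure. The cause is that the SCAN name is resolved only through /etc/hosts and not through DNS. The error can be ignored as long as rac01 and rac02 can both ping the SCAN IP.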

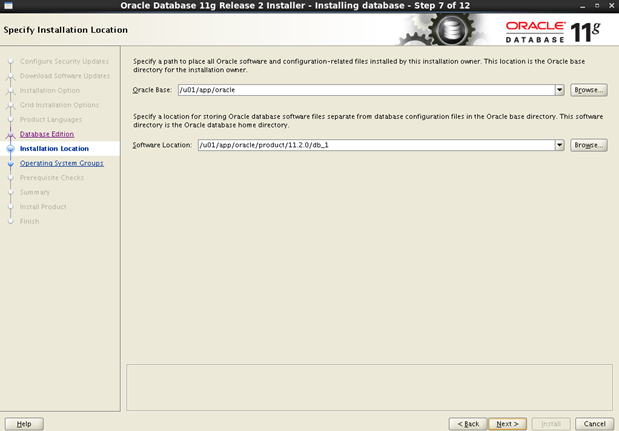

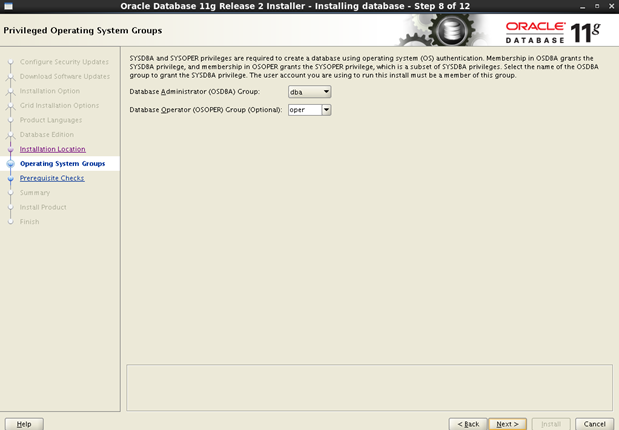

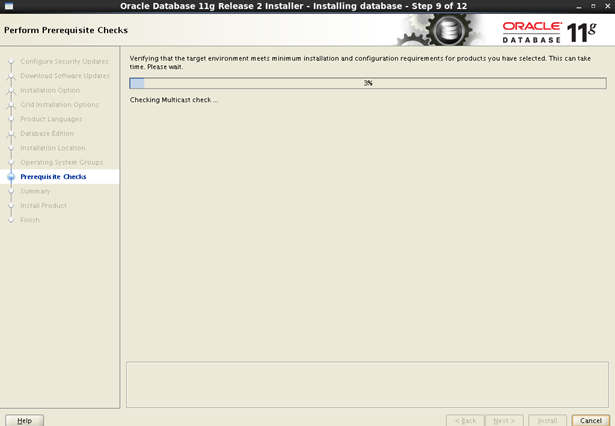

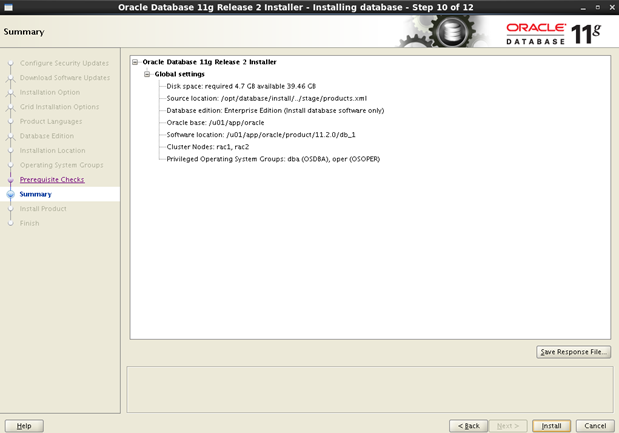
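The condition for ignoring INS-20802 can be checked on each node with a short function. The helper below is hypothetical. It resolves the name through the system resolver (`getent`, which reads /etc/hosts here) and then sends two pings. The 2-second timeout is an arbitrary choice.

```shell
#!/usr/bin/env bash
# check_scan NAME  (hypothetical helper)
# Resolves NAME via getent and pings the first address returned.
check_scan() {
  local ip
  ip=$(getent hosts "$1" | awk '{ print $1; exit }')
  if [ -z "$ip" ]; then
    echo "$1: does not resolve"
    return 1
  fi
  if ping -c 2 -W 2 "$ip" >/dev/null 2>&1; then
    echo "$1 -> $ip: reachable"
  else
    echo "$1 -> $ip: NOT reachable"
    return 1
  fi
}
```

Run `check_scan scan-cluster` on both rac01 and rac02. It should report 134.80.101.6 as reachable.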

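4. Install the Database software

4.1 Install the software

Make sure both nodes, rac01 and rac02, are up, then log in as the oracle user. The installation requires a graphical session.

Change to the directory of the installation media and run:

[oracle@rac01 database]$ ./runInstaller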

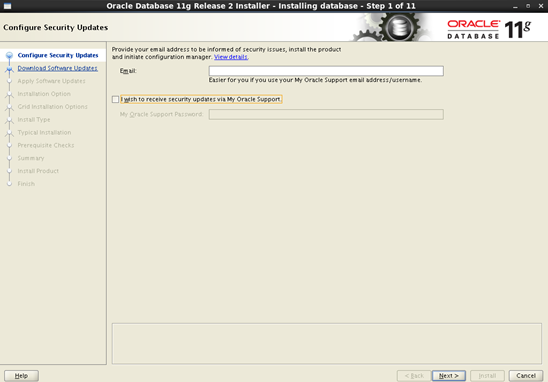

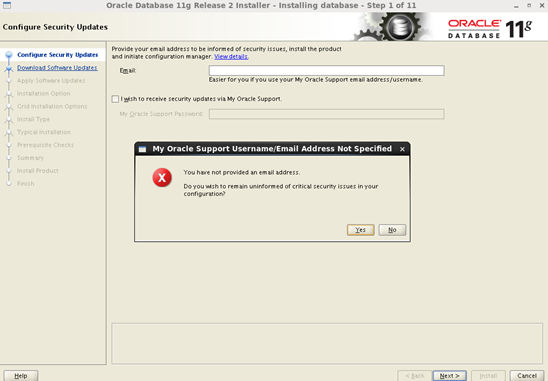

Step 1: leave the "I wish to ……" checkbox cleared, then click Next.

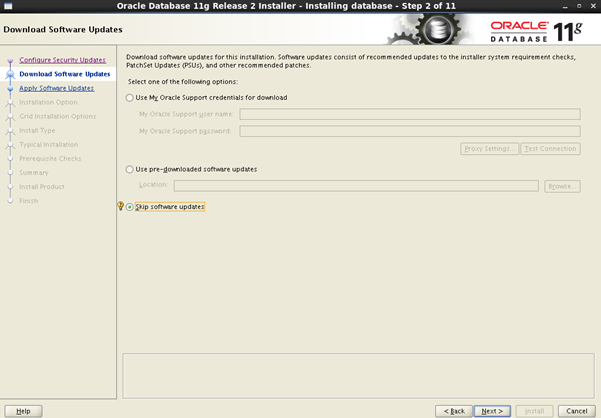

Select Skip software updates and click Next.

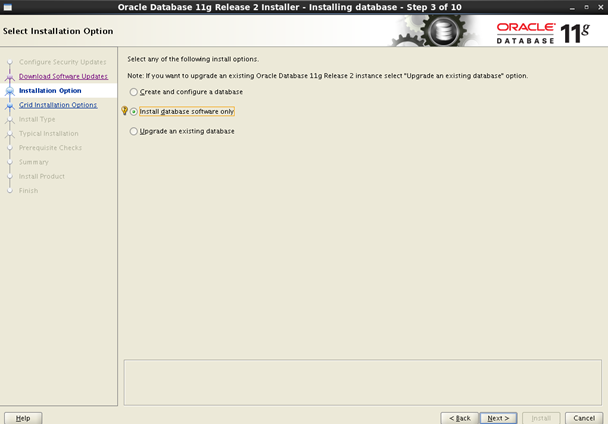

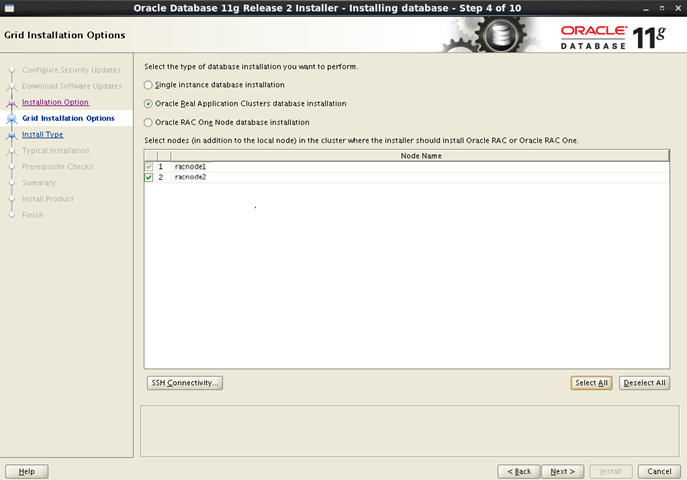

Select Install database software only and click Next.

Select all nodes and test SSH equivalence.

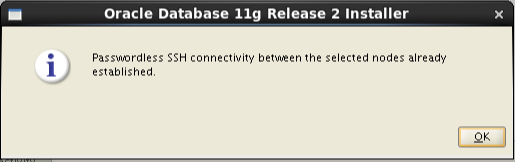

Click SSH Connectivity…, enter the oracle user's password, and click Test. When the test passes, a confirmation message appears.

Click OK, then click Next.

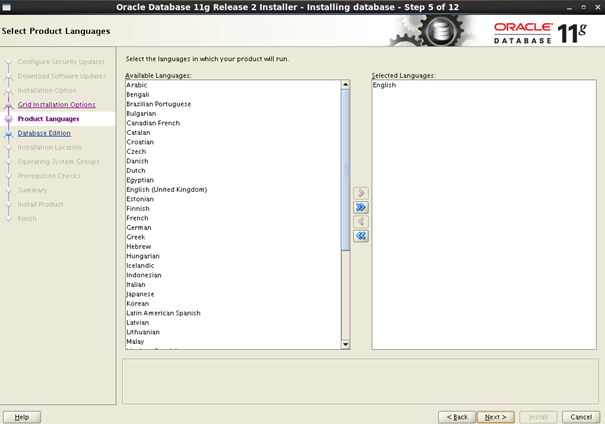

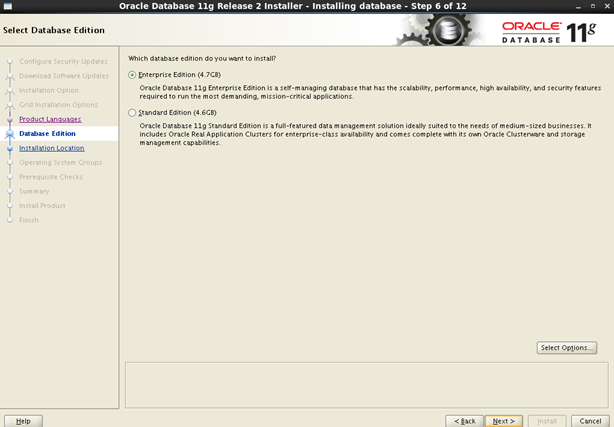

Select Enterprise Edition.

Accept the defaults on the following screens.

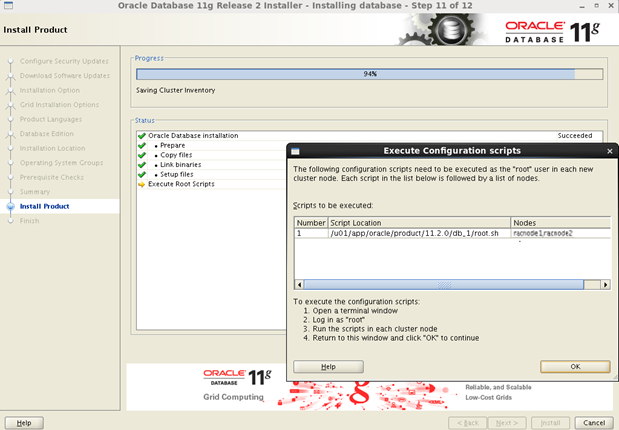

During the installation, you are prompted to run a script.

Run root.sh as root on rac01 first, then on rac02.

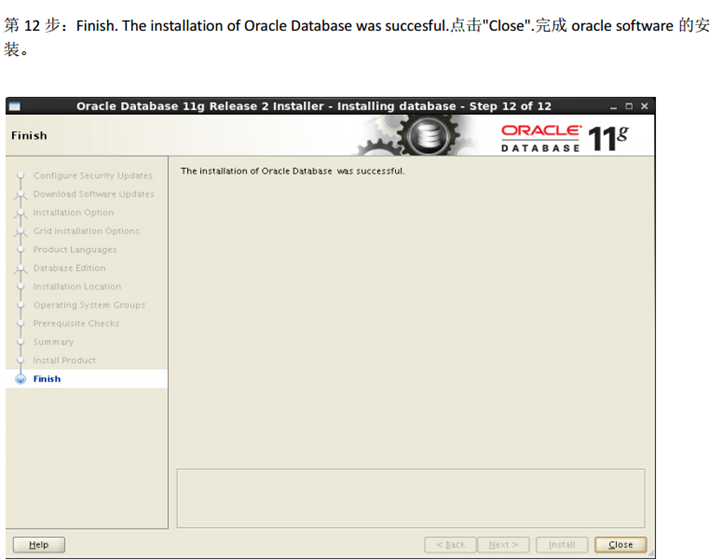

This completes the database software installation.

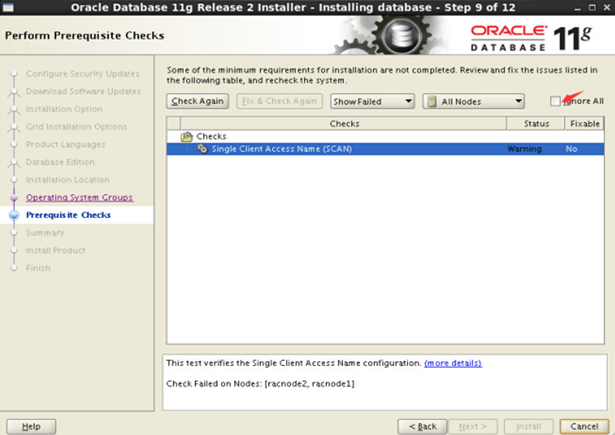

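4.2 Problems encountered

4.2.1 The prerequisite check reports that rac01-vip and rac02-vip are not on the same network interface

ifconfig showed that rac01's private IP was on eth4 while rac02's private IP was on eth5. The interface names must match on all nodes: both eth4, or both eth5.

The only fix was to ask the systems team to correct it again. The Grid installation had not reported this error; it only appeared when installing the database software. In the end, Grid had to be uninstalled and reinstalled.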

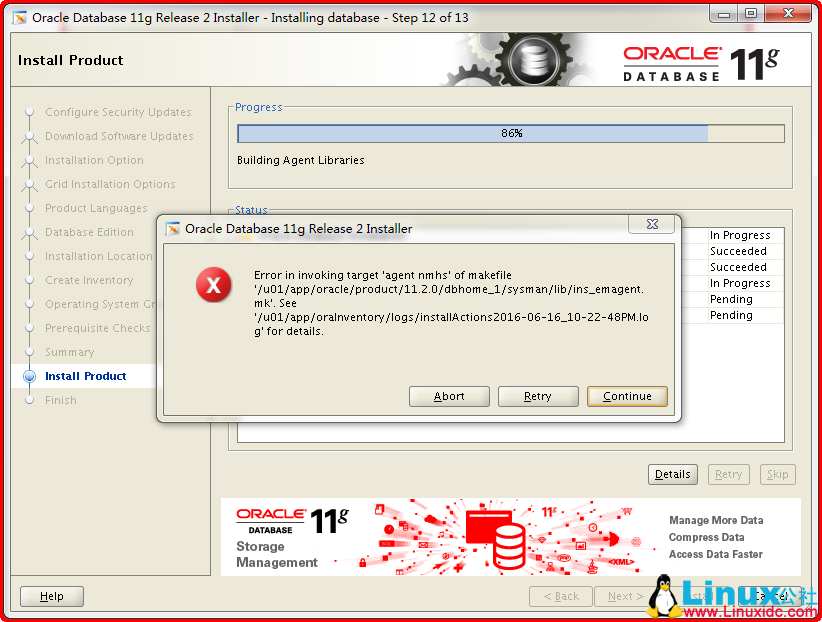

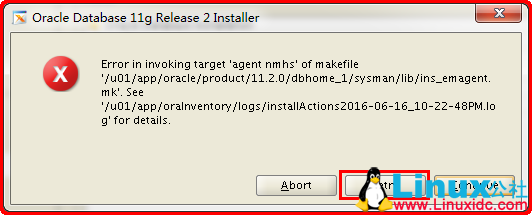

4.2.2 Installation error: Error in invoking target 'agent nmhs' of makefile

Fix:

Add a linker flag for the libnnz11 library to the makefile.

Edit $ORACLE_HOME/sysman/lib/ins_emagent.mk and change

$(MK_EMAGENT_NMECTL) to: $(MK_EMAGENT_NMECTL) -lnnz11

Back up the original file before editing it:

[oracle@rac01 ~]$ cd $ORACLE_HOME/sysman/lib

[oracle@rac01 lib]$ cp ins_emagent.mk ins_emagent.mk.bak

[oracle@rac01 lib]$ vim ins_emagent.mk

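In vim, type /NMECTL in command mode to jump to the line that needs changing.

Append the flag -lnnz11 at the end of that line. The first character after the hyphen is the letter l, and the last two characters are the digit 1.

Save the file, then click Retry in the installer.

The step now passes.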
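The same edit can be made non-interactively with sed. This is a sketch that assumes `$(MK_EMAGENT_NMECTL)` ends its line in ins_emagent.mk, as the manual edit above implies. The function name is hypothetical. Because a line that already ends in -lnnz11 no longer matches the pattern, running it twice does no harm.

```shell
#!/usr/bin/env bash
# patch_emagent_mk FILE  (hypothetical helper)
# Appends -lnnz11 after $(MK_EMAGENT_NMECTL) when that token ends a line; keeps FILE.bak.
patch_emagent_mk() {
  local f=$1
  # ERE: \$ \( \) are the literal characters of $(MK_EMAGENT_NMECTL); \1 re-inserts the token.
  sed -i.bak -E 's/(\$\(MK_EMAGENT_NMECTL\))[[:space:]]*$/\1 -lnnz11/' "$f"
}
```

Usage, as oracle: `patch_emagent_mk "$ORACLE_HOME/sysman/lib/ins_emagent.mk"`. Then click Retry in the installer.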

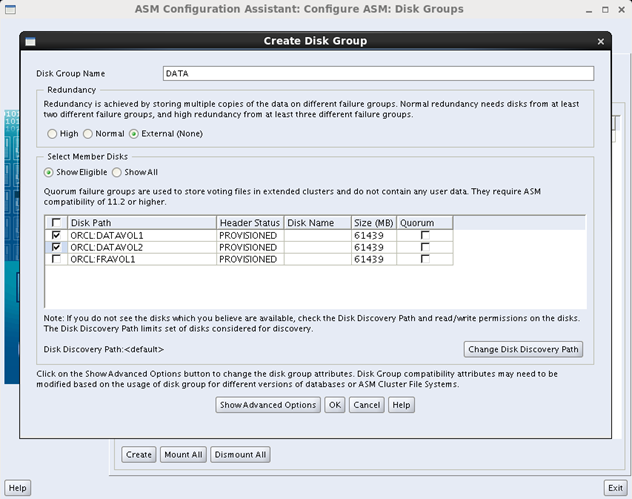

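5. Configure the ASM disk groups

The ASM disk groups can also be created before the Oracle database software is installed. Create them as follows:

su - grid

Run asmca to start the graphical configuration tool, then click Create to create a disk group.

Disk Group Name: DATA;

Redundancy: External (None);

Disks: select DATAVOL1, DATAVOL2, DATAVOL3, DATAVOL4, and DATAVOL5;

Click OK. A message confirms that the disk group was created.

Create the ARC and FRA disk groups the same way. Finally, click Mount All to mount all disk groups, then click Yes to confirm.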

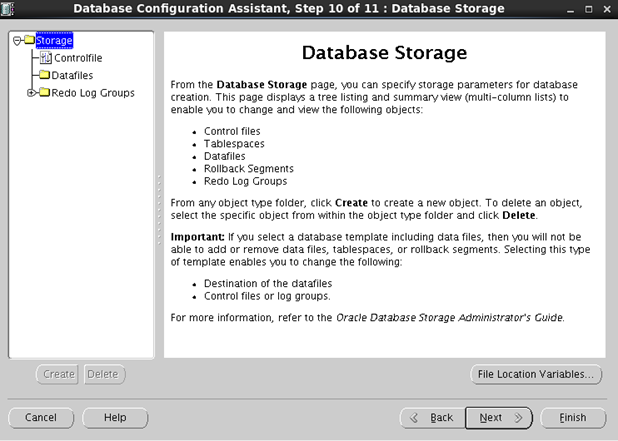
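asmca can also be replaced by SQL run against the ASM instance. ASMLib disks are addressed as ORCL:<label>, as the oracleasm-discover output earlier shows. The helper below is hypothetical. It only builds the CREATE DISKGROUP statement for external redundancy and does not run anything itself.

```shell
#!/usr/bin/env bash
# diskgroup_sql NAME DISK...  (hypothetical helper)
# Prints a CREATE DISKGROUP statement (external redundancy) for the given ASMLib disk labels.
diskgroup_sql() {
  local name=$1; shift
  local disks="" d
  for d in "$@"; do
    disks+="${disks:+, }'ORCL:$d'"   # add ", " before every entry except the first
  done
  echo "CREATE DISKGROUP $name EXTERNAL REDUNDANCY DISK $disks;"
}
```

For example, as grid on rac01: `diskgroup_sql DATA DATAVOL{1..5} | sqlplus -S "/ as sysasm"`. A disk group created this way is mounted only on the local instance, so it must also be mounted on rac02 (for example with `srvctl start diskgroup -g DATA`).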

6. Create the database instance

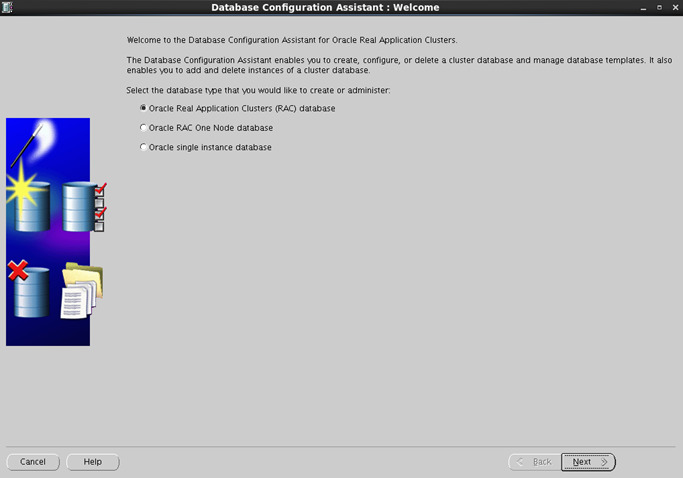

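Switch to the oracle user and run dbca. The welcome screen appears.

Select Oracle Real Application Clusters (RAC) database and click Next.

Select Create a Database and click Next.

Select Custom Database. These screenshots come from the internet and may not match exactly, so use them only as a reference.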

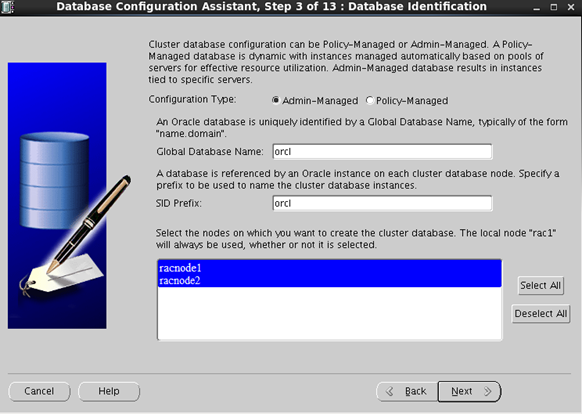

选择Configuration Type:Admin-Managed. Global Database Name:orcl. SID Prefix:orcl.

Click "Select All". Be sure to select all nodes.

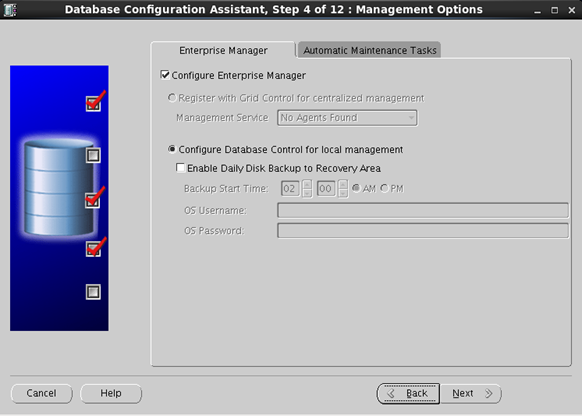

Configure Enterprise Manager and Automatic Maintenance Tasks.

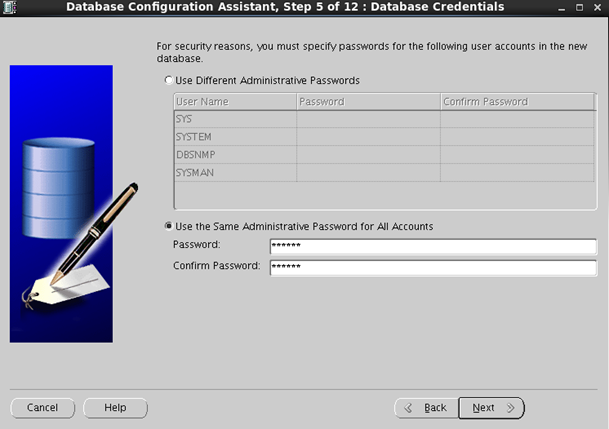

Set the passwords, for example with "Use the Same Administrative Password for All Accounts".

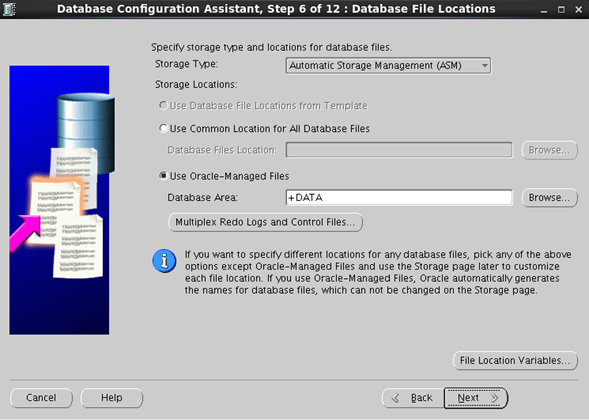

Under "Database Area", click "Browse" and select +DATA.

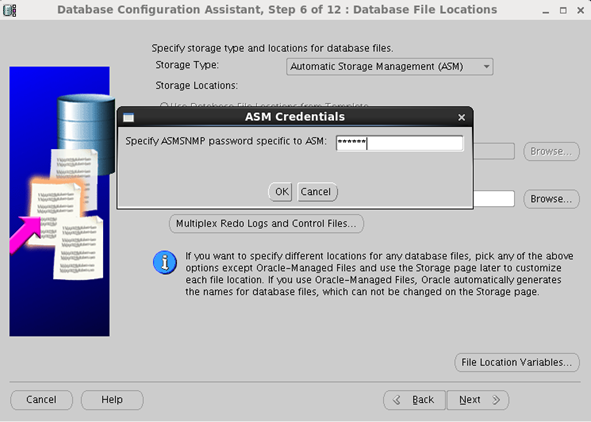

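You are prompted to set the ASMSNMP password.

Step 7: configure the FRA and archiving. When sizing the fast recovery area (FRA), 90% of the size of the whole volume is a common choice.

Click "Browse" and select FRA.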

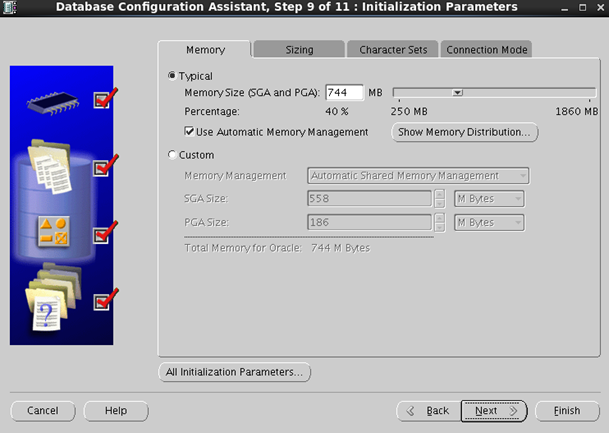

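Set the memory (SGA and PGA), character set, and connection mode.

Select Typical and keep the default of 40% of memory for the SGA and PGA for now. It can be adjusted later as needed.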

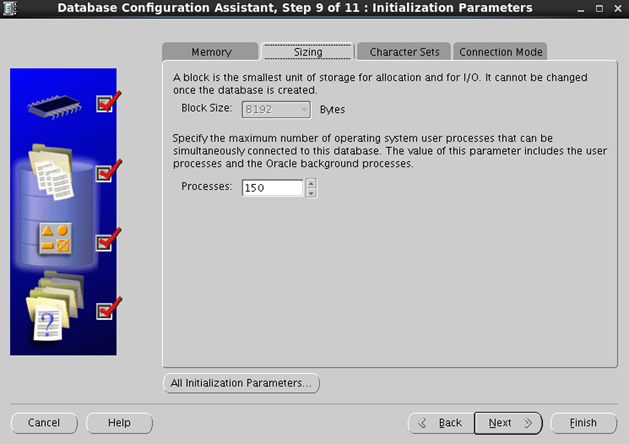

Adjust the processes parameter to match the expected number of concurrent connections.

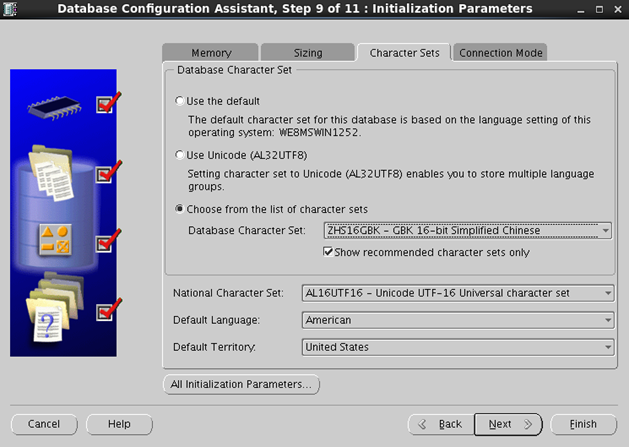
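In 11.2, changing PROCESSES also changes the derived default of SESSIONS, which is 1.1 * PROCESSES + 5. The hypothetical helper below does that arithmetic in integers and rounds down. How Oracle itself rounds non-integer results is not stated here, so treat the result as approximate.

```shell
#!/usr/bin/env bash
# derived_sessions PROCESSES  (hypothetical helper)
# Approximates the 11.2 default SESSIONS value: 1.1 * PROCESSES + 5 (integer math, rounded down).
derived_sessions() {
  echo $(( $1 * 11 / 10 + 5 ))
}
```

To change PROCESSES after the database exists (1000 is only an illustrative value): `echo "ALTER SYSTEM SET processes=1000 SCOPE=SPFILE SID='*';" | sqlplus -S "/ as sysdba"`. Then restart with `srvctl stop database -d orcl` and `srvctl start database -d orcl`, because PROCESSES is a static parameter.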

For the character set, UTF-8 (AL32UTF8) is the usual choice, but follow the project's actual requirements.

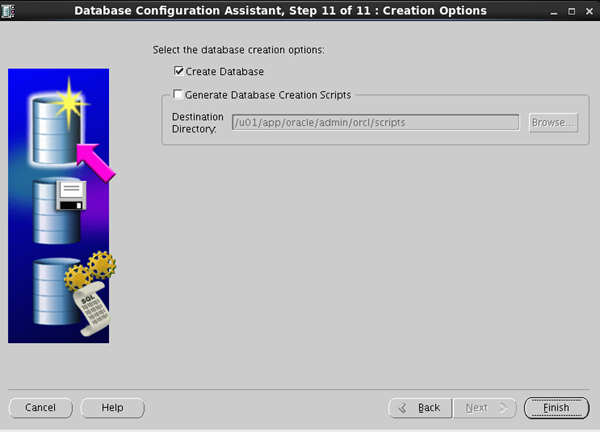

To start creating the database, select "Create Database".

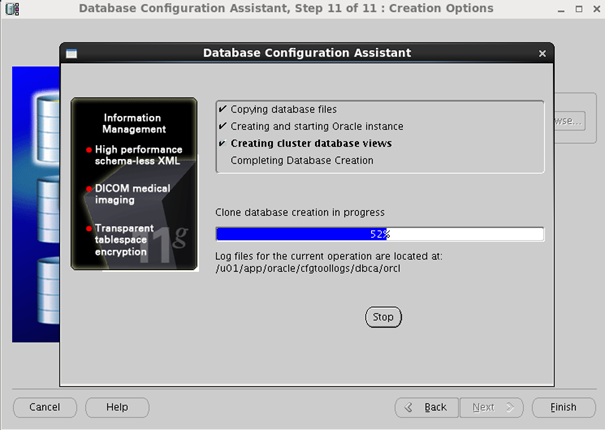

Creation in progress:

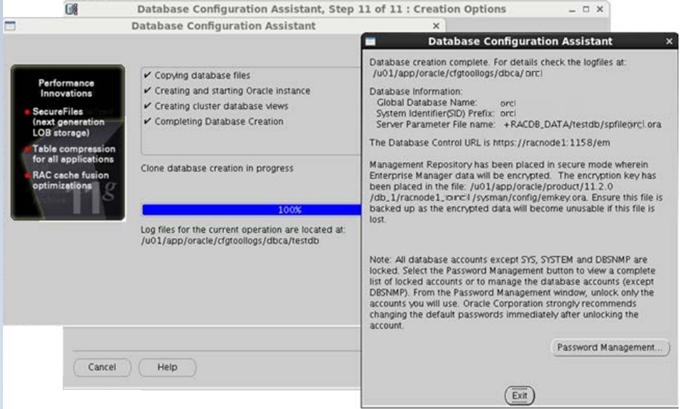

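A dialog then appears with the message "Database creation complete." and related information.

Click Exit. The database has been created.

This completes the RAC installation.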
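As a final check, both instances should be running. The hypothetical helper below counts the "Instance ... is running on node ..." lines that `srvctl status database` prints. The exact message wording is assumed from 11.2 srvctl output.

```shell
#!/usr/bin/env bash
# count_running  (hypothetical helper)
# Reads `srvctl status database -d <db>` output on stdin and prints the number of running instances.
count_running() {
  # grep -c prints 0 and exits 1 when nothing matches; "|| true" keeps the 0 without an error status.
  grep -c '^Instance .* is running on node ' || true
}
```

As oracle: `[ "$(srvctl status database -d orcl | count_running)" -eq 2 ] && echo "both instances up"`.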