Cannot initialize Cluster. Please check your configuration for mapreduce.framework.name and the cor

destination hdfs://ztcluster/warehouse/tablespace/managed/hive/ods_common.db/ods_common_logistics_track_d/p_day=20190321;
at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:106)
at org.apache.spark.sql.hive.HiveExternalCatalog.loadPartition(HiveExternalCatalog.scala:843)
at org.apache.spark.sql.hive.execution.InsertIntoHiveTable.processInsert(InsertIntoHiveTable.scala:249)
at org.apache.spark.sql.hive.execution.InsertIntoHiveTable.run(InsertIntoHiveTable.scala:99)
at org.apache.spark.sql.execution.command.DataWritingCommandExec.sideEffectResult$lzycompute(commands.scala:104)
at org.apache.spark.sql.execution.command.DataWritingCommandExec.sideEffectResult(commands.scala:102)
at org.apache.spark.sql.execution.command.DataWritingCommandExec.executeCollect(commands.scala:115)
at org.apache.spark.sql.Dataset$$anonfun$6.apply(Dataset.scala:190)
at org.apache.spark.sql.Dataset$$anonfun$6.apply(Dataset.scala:190)
at org.apache.spark.sql.Dataset$$anonfun$52.apply(Dataset.scala:3259)
at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:77)
at org.apache.spark.sql.Dataset.withAction(Dataset.scala:3258)
at org.apache.spark.sql.Dataset.<init>(Dataset.scala:190)
at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:75)
at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:642)
at zt.dc.bigdata.bp.process.warehouse.LogisticsTrackSourceProcess$.main(LogisticsTrackSourceProcess.scala:122)
at zt.dc.bigdata.bp.process.warehouse.LogisticsTrackSourceProcess.main(LogisticsTrackSourceProcess.scala)
at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
at java.lang.reflect.Method.invoke(Method.java:498)
at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$4.run(ApplicationMaster.scala:721)
Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Unable to move source hdfs://ztcluster/warehouse/tablespace/managed/hive/ods_common.db/ods_common_logistics_track_d/.hive-staging_hive_2021-01-09_23-05-39_625_275694820341612468-1/-ext-10000 to destination hdfs://ztcluster/warehouse/tablespace/managed/hive/ods_common.db/ods_common_logistics_track_d/p_day=20190321
at org.apache.hadoop.hive.ql.metadata.Hive.getHiveException(Hive.java:4057)
at org.apache.hadoop.hive.ql.metadata.Hive.getHiveException(Hive.java:4012)
at org.apache.hadoop.hive.ql.metadata.Hive.moveFile(Hive.java:4007)
at org.apache.hadoop.hive.ql.metadata.Hive.replaceFiles(Hive.java:4372)
at org.apache.hadoop.hive.ql.metadata.Hive.loadPartition(Hive.java:1962)
at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
at java.lang.reflect.Method.invoke(Method.java:498)
at org.apache.spark.sql.hive.client.Shim_v3_0.loadPartition(HiveShim.scala:1275)
at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$loadPartition$1.apply$mcV$sp(HiveClientImpl.scala:747)
at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$loadPartition$1.apply(HiveClientImpl.scala:745)
at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$loadPartition$1.apply(HiveClientImpl.scala:745)
at org.apache.spark.sql.hive.client.HiveClientImpl$$anonfun$withHiveState$1.apply(HiveClientImpl.scala:278)
at org.apache.spark.sql.hive.client.HiveClientImpl.liftedTree1$1(HiveClientImpl.scala:216)
at org.apache.spark.sql.hive.client.HiveClientImpl.retryLocked(HiveClientImpl.scala:215)
at org.apache.spark.sql.hive.client.HiveClientImpl.withHiveState(HiveClientImpl.scala:261)
at org.apache.spark.sql.hive.client.HiveClientImpl.loadPartition(HiveClientImpl.scala:745)
at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$loadPartition$1.apply$mcV$sp(HiveExternalCatalog.scala:855)
at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$loadPartition$1.apply(HiveExternalCatalog.scala:843)
at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$loadPartition$1.apply(HiveExternalCatalog.scala:843)
at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:97)
... 21 more
Caused by: java.io.IOException: Cannot execute DistCp process: java.io.IOException: Cannot initialize Cluster. Please check your configuration for mapreduce.framework.name and the correspond server addresses.
at org.apache.hadoop.hive.shims.Hadoop23Shims.runDistCp(Hadoop23Shims.java:1151)
at org.apache.hadoop.hive.common.FileUtils.distCp(FileUtils.java:643)
at org.apache.hadoop.hive.common.FileUtils.copy(FileUtils.java:625)
at org.apache.hadoop.hive.common.FileUtils.copy(FileUtils.java:600)
at org.apache.hadoop.hive.ql.metadata.Hive.moveFile(Hive.java:3921)
... 40 more
Caused by: java.io.IOException: Cannot initialize Cluster. Please check your configuration for mapreduce.framework.name and the correspond server addresses.
at org.apache.hadoop.mapreduce.Cluster.initialize(Cluster.java:116)
at org.apache.hadoop.mapreduce.Cluster.<init>(Cluster.java:109)
at org.apache.hadoop.mapreduce.Cluster.<init>(Cluster.java:102)
at org.apache.hadoop.tools.DistCp.createMetaFolderPath(DistCp.java:410)
at org.apache.hadoop.tools.DistCp.<init>(DistCp.java:116)
at org.apache.hadoop.hive.shims.Hadoop23Shims.runDistCp(Hadoop23Shims.java:1141)
... 44 more

ApplicationMaster host: 192.168.81.58
ApplicationMaster RPC port: 0
queue: default
start time: 1610204535333
final status: FAILED
tracking URL: http://szch-ztn-dc-bp-pro-192-168-81-57:8088/proxy/application_1610095260612_0149/
user: hive

Exception in thread "main" org.apache.spark.SparkException: Application application_1610095260612_0149 finished with failed status
at org.apache.spark.deploy.yarn.Client.run(Client.scala:1269)
at org.apache.spark.deploy.yarn.YarnClusterApplication.start(Client.scala:1627)
at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:904)
at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:198)
at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:228)
at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:137)
at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

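Solution

I tried the following fixes, all taken from solutions I found online.

Attempt 1 (did not solve it)

I added the following POM dependencies on the client side. This did not fix the problem: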

<dependency>
  <groupId>org.apache.hadoop</groupId>
  <artifactId>hadoop-mapreduce-client-common</artifactId>
  <version>${hadoop.version}</version>
</dependency>

<dependency>
  <groupId>org.apache.hadoop</groupId>
  <artifactId>hadoop-mapreduce-client-jobclient</artifactId>
  <version>${hadoop.version}</version>
  <scope>${scopetype}</scope>
</dependency>

<dependency>
  <groupId>org.apache.hadoop</groupId>
  <artifactId>hadoop-client</artifactId>
  <version>${hadoop.version}</version>
  <scope>${scopetype}</scope>
</dependency>

<dependency>
  <groupId>org.apache.hadoop</groupId>
  <artifactId>hadoop-mapreduce-client-core</artifactId>
  <version>${hadoop.version}</version>
  <scope>${scopetype}</scope>
</dependency>

<dependency>
  <groupId>org.apache.hadoop</groupId>
  <artifactId>hadoop-common</artifactId>
  <version>${hadoop.version}</version>
  <scope>${scopetype}</scope>
</dependency>

I also added mapreduce-jobclient, mapreduce and similar artifacts. Note that the scope must be compile, not test; otherwise the error still occurs.

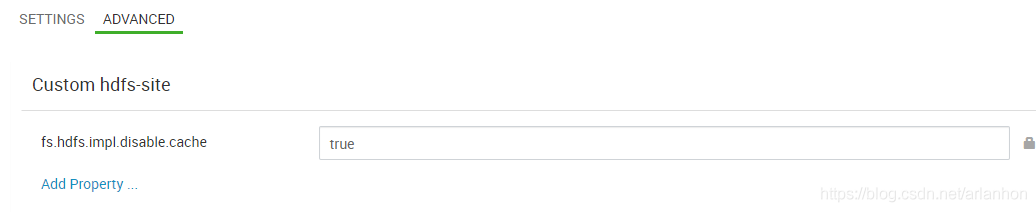
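One way to check whether these jars actually reach the driver at runtime is to try loading the classes that, as far as I understand, `org.apache.hadoop.mapreduce.Cluster` discovers through `java.util.ServiceLoader`: `org.apache.hadoop.mapred.YarnClientProtocolProvider` (from hadoop-mapreduce-client-jobclient) and `org.apache.hadoop.mapred.LocalClientProtocolProvider` (from hadoop-mapreduce-client-common). The `ClasspathCheck` object below is a hypothetical helper, not part of the original job. It is a minimal sketch that only reports which class names fail to load.

```scala
// Hypothetical diagnostic helper (not from the original post): reports which
// of the given class names cannot be loaded, without initializing them.
object ClasspathCheck {
  // Provider classes assumed to be looked up by Cluster.initialize():
  //   YarnClientProtocolProvider  -> hadoop-mapreduce-client-jobclient
  //   LocalClientProtocolProvider -> hadoop-mapreduce-client-common
  val mapReduceProviders: Seq[String] = Seq(
    "org.apache.hadoop.mapred.YarnClientProtocolProvider",
    "org.apache.hadoop.mapred.LocalClientProtocolProvider"
  )

  // Prefer the thread context class loader, as application frameworks usually set it
  private def defaultLoader: ClassLoader =
    Option(Thread.currentThread().getContextClassLoader)
      .getOrElse(getClass.getClassLoader)

  // Returns the subset of `names` that could not be loaded
  def missingClasses(names: Seq[String],
                     loader: ClassLoader = defaultLoader): Seq[String] =
    names.filterNot(name => loadable(name, loader))

  private def loadable(name: String, loader: ClassLoader): Boolean =
    try {
      // initialize = false: only check that the class can be found and linked
      Class.forName(name, false, loader)
      true
    } catch {
      case _: ClassNotFoundException => false
      case _: LinkageError           => false // e.g. a missing superclass
    }
}
```

For example, printing `ClasspathCheck.missingClasses(ClasspathCheck.mapReduceProviders)` at the start of `main` lists any provider that cannot be loaded. A non-empty result would be consistent with a dependency that was left in `test` scope.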

Attempt 2 (did not solve it)

In hdfs-site.xml, set the fs.hdfs.impl.disable.cache property to true.
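Instead of editing hdfs-site.xml for the whole cluster, you can probably limit the same property to a single Spark job. Spark copies every `spark.hadoop.<key>` setting into the Hadoop `Configuration` it creates. The hypothetical `SparkHadoopConf.submitArgs` helper below only renders such properties as spark-submit arguments. It is a sketch and not something the original author used.

```scala
// Hypothetical helper (not from the original post): renders Hadoop properties
// as spark-submit arguments. Spark copies "spark.hadoop.<key>" settings into
// its Hadoop Configuration, so the change applies only to this job.
object SparkHadoopConf {
  def submitArgs(props: Seq[(String, String)]): Seq[String] =
    props.flatMap { case (key, value) =>
      Seq("--conf", s"spark.hadoop.$key=$value")
    }
}
```

For example, `SparkHadoopConf.submitArgs(Seq("fs.hdfs.impl.disable.cache" -> "true")).mkString(" ")` yields `--conf spark.hadoop.fs.hdfs.impl.disable.cache=true`, which can be appended to the spark-submit command line.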

Attempt 3 (did not solve it)

Disable Hive's transaction support.

These parameters stop HDP 3.0 from creating tables as ACID tables by default:

hive.create.as.insert.only=false

metastore.create.as.acid=false

hive.strict.managed.tables=false

hive.txn.manager=org.apache.hadoop.hive.ql.lockmgr.DummyTxnManager

hive.support.concurrency=false

// hive.stats.autogather must be set to true; otherwise an exception is thrown when the SQL runs

hive.stats.autogather=true
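If you want to apply these overrides from the Spark job instead of in hive-site.xml, one option is to collect them in a `Map` and prefix each key with `spark.hadoop.`. A `Map` also removes duplicate keys automatically. The `HiveAcidOverrides` object is hypothetical. Server-side settings such as `metastore.create.as.acid` may only take effect when changed on the Hive metastore service itself. This sketch therefore assumes that only client-side behaviour is affected.

```scala
// Hypothetical sketch (not from the original post): the Attempt 3 overrides
// as a Map, plus a conversion to "spark.hadoop."-prefixed Spark settings.
object HiveAcidOverrides {
  val settings: Map[String, String] = Map(
    "hive.create.as.insert.only" -> "false",
    "metastore.create.as.acid"   -> "false", // may need to be set on the metastore itself
    "hive.strict.managed.tables" -> "false",
    "hive.txn.manager"           -> "org.apache.hadoop.hive.ql.lockmgr.DummyTxnManager",
    "hive.support.concurrency"   -> "false",
    "hive.stats.autogather"      -> "true"   // must stay true, per the note above
  )

  // Prefix every key so that Spark forwards it into its Hadoop/Hive configuration
  def asSparkConf(props: Map[String, String]): Map[String, String] =
    props.map { case (key, value) => s"spark.hadoop.$key" -> value }
}
```

You can then pass each entry of `HiveAcidOverrides.asSparkConf(HiveAcidOverrides.settings)` to `SparkSession.builder().config(key, value)` before calling `enableHiveSupport().getOrCreate()`.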

Attempt 4 (did not solve it)

Check whether the mapreduce.framework.name property in mapred-site.xml is set to yarn.

If it is not, change it to local and try again.
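To see which value a given mapred-site.xml actually contains, you can read the property with the JDK's DOM parser alone. `SiteXml.propertyValue` below is a hypothetical helper. It assumes the standard Hadoop layout of `<property>` elements with `<name>` and `<value>` children, and it ignores `<final>` flags and variable expansion.

```scala
import java.io.ByteArrayInputStream
import java.nio.charset.StandardCharsets
import javax.xml.parsers.DocumentBuilderFactory
import org.w3c.dom.Element

// Hypothetical helper (not from the original post): looks up a property in a
// Hadoop *-site.xml document and returns its trimmed value, or None if unset.
object SiteXml {
  def propertyValue(xml: String, key: String): Option[String] = {
    val doc = DocumentBuilderFactory.newInstance()
      .newDocumentBuilder()
      .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)))
    val props = doc.getElementsByTagName("property")
    (0 until props.getLength).iterator
      .map(i => props.item(i).asInstanceOf[Element])
      // first <property> whose <name> matches the key
      .collectFirst { case p if childText(p, "name").contains(key) => childText(p, "value") }
      .flatten
  }

  // Text of the first descendant element named `tag`, if any
  private def childText(parent: Element, tag: String): Option[String] = {
    val nodes = parent.getElementsByTagName(tag)
    if (nodes.getLength == 0) None else Some(nodes.item(0).getTextContent.trim)
  }
}
```

For example, `SiteXml.propertyValue(new String(java.nio.file.Files.readAllBytes(java.nio.file.Paths.get("/etc/hadoop/conf/mapred-site.xml")), "UTF-8"), "mapreduce.framework.name")` returns `Some("yarn")` when the file sets the property to yarn. The `/etc/hadoop/conf` path is an assumption and differs between installations. A `None` means the file does not set the property, and Hadoop then falls back to the default from mapred-default.xml, which is local.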

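Attempt 5 (solved)

None of the methods I found online worked. Then I remembered that with Hive 3.0, writing data through a third-party API or the Spark client had caused problems when the partition had been created in advance.

So I declared the partition creation explicitly in the code: the job drops the partition and adds it again before the insert. I reran the job and the problem was gone. I still do not know why this works.

The code: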

// `spark` (the SparkSession), `day` (the partition value) and `df` (the source DataFrame) are defined earlier in the job

// Drop the partition
spark.sql(s"alter table ods_common.ods_common_logistics_track_d drop if exists partition(p_day='$day')")

// Add the partition explicitly before writing to it
spark.sql(s"alter table ods_common.ods_common_logistics_track_d add if not exists partition (p_day='$day')")

// Preview the source data
df.show(10, truncate = false)

df.createOrReplaceTempView("tmp_logistics_track")

spark.sql("select * from tmp_logistics_track").show(20, truncate = false)

// Overwrite the partition from the temporary view
spark.sql(
  s"""insert overwrite table ods_common.ods_common_logistics_track_d PARTITION(p_day='$day')
     |select dc_id, source_order_id, order_id, tracking_number, warehouse_code, user_id, channel_name,
     |creation_time, update_time, sync_time, last_tracking_time, tracking_change_time, next_tracking_time,
     |tms_nti_time, tms_oc_time, tms_as_time, tms_pu_time, tms_it_time, tms_od_time, tms_wpu_time, tms_rt_time,
     |tms_excp_time, tms_fd_time, tms_df_time, tms_un_time, tms_np_time from tmp_logistics_track
     |""".stripMargin)
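The statement sequence in the code above can be factored into a small helper: drop the partition, add it back explicitly, then `insert overwrite` into it. `PartitionRewrite.statements` is a hypothetical sketch, not the author's code. It also checks that `day` is an eight-digit yyyyMMdd value before inserting it into SQL. That format is an assumption based on `p_day=20190321` in the log.

```scala
// Hypothetical sketch (not from the original post): the drop / add / overwrite
// sequence of Attempt 5, built as plain SQL strings.
object PartitionRewrite {
  def statements(table: String, partCol: String, day: String,
                 selectSql: String): Seq[String] = {
    // Assumed partition format yyyyMMdd; rejecting anything else also keeps
    // stray quotes out of the interpolated SQL
    require(day.matches("\\d{8}"), s"day must be yyyyMMdd, got: $day")
    Seq(
      s"alter table $table drop if exists partition($partCol='$day')",
      s"alter table $table add if not exists partition($partCol='$day')",
      s"insert overwrite table $table partition($partCol='$day') $selectSql"
    )
  }
}
```

In the job, you could run it as `PartitionRewrite.statements("ods_common.ods_common_logistics_track_d", "p_day", day, "select ... from tmp_logistics_track").foreach(stmt => spark.sql(stmt))`. The column list is elided here; in practice it is the same as in the original `select`.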

Reposted from: https://blog.csdn.net/arlanhon/article/details/112480999?spm=1001.2101.3001.6650.6&utm_medium=distribute.pc_relevant.none-task-blog-2%7Edefault%7EESLANDING%7Edefault-6-112480999-blog-98077988.pc_relevant_landingrelevant&depth_1-utm_source=distribute.pc_relevant.none-task-blog-2%7Edefault%7EESLANDING%7Edefault-6-112480999-blog-98077988.pc_relevant_landingrelevant&utm_relevant_index=7
