flink⼿手动维护kafka偏移量量

flink对接kafka,官方模式方式是自动维护偏移量

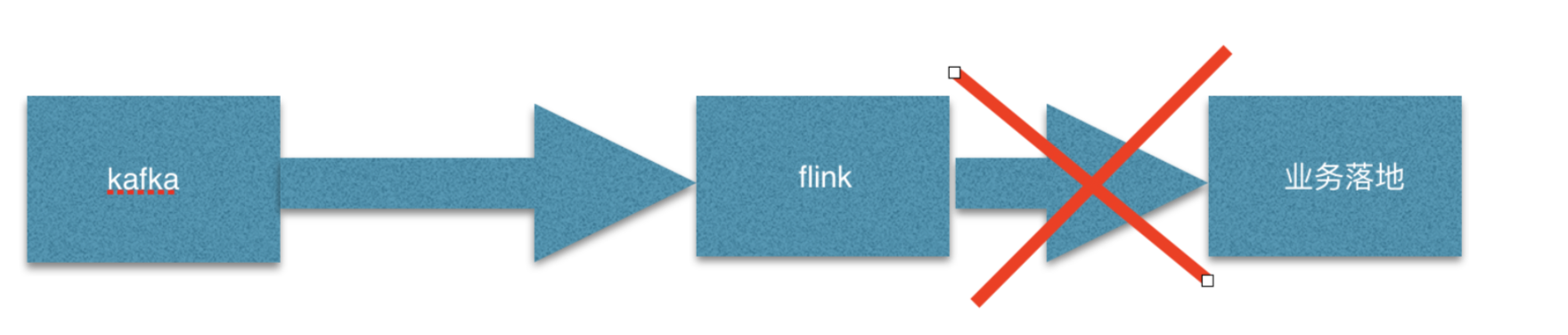

但并没有考虑到flink消费kafka过程中,如果出现进程中断后的事情! 如果此时,进程中段:

1:数据可能丢失

从获取了了数据,但是在执⾏行行业务逻辑过程中发⽣生中断,此时会出现丢失数据现象

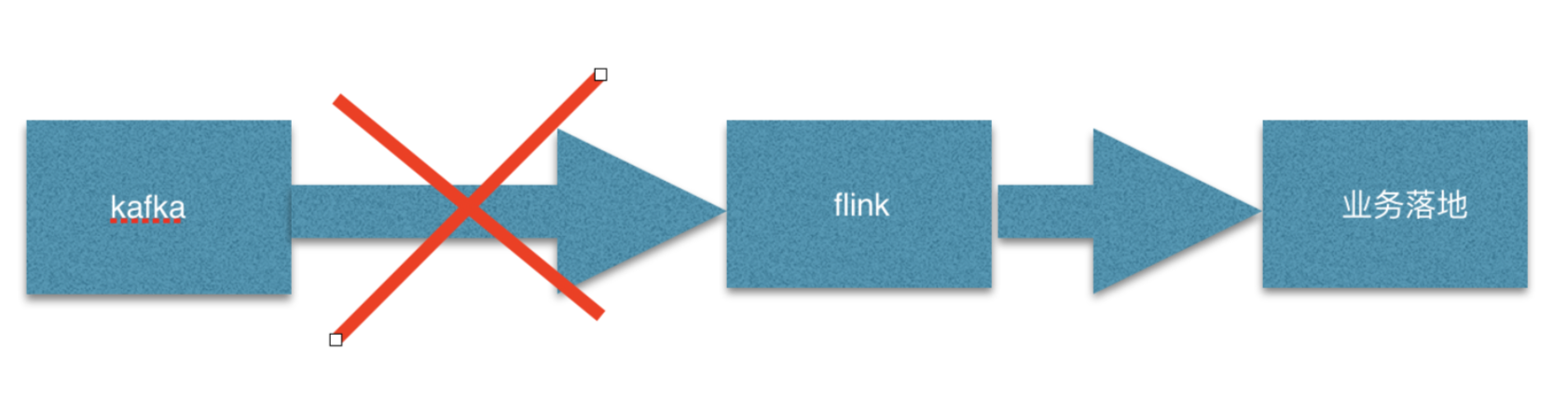

2:数据可能重复处理理

flink从kafka拉去数据过程中,如果此时flink进程挂掉,那么重启flink之后,会从当前Topic的 起始偏移量量开始消费

解决flink消费kafka的弊端

上述问题,在任何公司的实际⽣生产中,都会遇到,并且⽐比较头痛的事情,主要原因是因为上述的代码 是使⽤用flink⾃自动维护kafka的偏移量量,导致⼀一些实际⽣生产问题出现。~那么为了了解决这些问题,我们就 需要⼿手动维护kafka的偏移量量,并且保证kafka的偏移量量和flink的checkpoint的数据状态保持⼀一致 (最好是⼿手动维护偏移量量的同时,和现有业务做成事务放在⼀一起)~

1):offset和checkpoint绑定

//创建kafka数据流 val properties = new Properties() properties.setProperty("bootstrap.servers", GlobalConfigUtils.getBootstrap) properties.setProperty("zookeeper.connect", GlobalConfigUtils.getZk) properties.setProperty("group.id", GlobalConfigUtils.getConsumerGroup) properties.setProperty("enable.auto.commit" , "true")//TODO properties.setProperty("auto.commit.interval.ms" , "5000") properties.setProperty("auto.offset.reset" , "latest") properties.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); properties.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); val kafka09 = new FlinkKafkaConsumer09[String]( GlobalConfigUtils.getIntputTopic, new SimpleStringSchema(), properties ) /** * 如果checkpoint启⽤用,当checkpoint完成之后,Flink Kafka Consumer将会提交offset保存 到checkpoint State中, 这就保证了了kafka broker中的committed offset与 checkpoint stata中的offset相⼀一致。 ⽤用户可以在Consumer中调⽤用setCommitOffsetsOnCheckpoints(boolean) ⽅方法来选择启⽤用 或者禁⽤用offset committing(默认情况下是启⽤用的) * */ kafka09.setCommitOffsetsOnCheckpoints(true) kafka09.setStartFromLatest()//start from the latest record kafka09.setStartFromGroupOffsets() //添加数据源addSource(kafka09) val data: DataStream[String] = env.addSource(kafka09)

2):编写flink⼿手动维护kafka偏移量量

/** * ⼿手动维护kafka的偏移量量 */ object KafkaTools { var offsetClient: KafkaConsumer[Array[Byte], Array[Byte]] = null var standardProps:Properties = null def init():Properties = { standardProps = new Properties standardProps.setProperty("bootstrap.servers", GlobalConfigUtils.getBootstrap) standardProps.setProperty("zookeeper.connect", GlobalConfigUtils.getZk) standardProps.setProperty("group.id", GlobalConfigUtils.getConsumerGroup) standardProps.setProperty("enable.auto.commit" , "true")//TODO standardProps.setProperty("auto.commit.interval.ms" , "5000") standardProps.setProperty("auto.offset.reset" , "latest") standardProps.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); standardProps.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); standardProps } def getZkUtils():ZkUtils = { val zkClient = new ZkClient("hadoop01:2181") ZkUtils.apply(zkClient, false) } def createTestTopic(topic: String, numberOfPartitions: Int, replicationFactor: Int, topicConfig: Properties) = { val zkUtils = getZkUtils() try{ AdminUtils.createTopic(zkUtils, topic, numberOfPartitions, replicationFactor, topicConfig) }finally { zkUtils.close() } } def offsetHandler() = { val props = new Properties props.putAll(standardProps) props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.ByteArrayDeserializer") props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.ByteArrayDeserializer") offsetClient = new KafkaConsumer[Array[Byte], Array[Byte]](props) } def getCommittedOffset(topicName: String, partition: Int): Long = { init() offsetHandler() val committed = offsetClient.committed(new TopicPartition(topicName, partition)) println(topicName , partition , committed.offset()) if (committed != null){ committed.offset } else{ 0L } } def setCommittedOffset(topicName: String, partition: Int, offset: Long) { init() offsetHandler() var partitionAndOffset:util.Map[TopicPartition , OffsetAndMetadata] = new util.HashMap[TopicPartition , OffsetAndMetadata]() partitionAndOffset.put(new TopicPartition(topicName, partition), new OffsetAndMetadata(offset)) offsetClient.commitSync(partitionAndOffset) } def close() { offsetClient.close() } }