Downloading data from a web page

```cpp
#include "curl/curl.h"

#include <direct.h>
#include <stdio.h>
#include <string>
#include <fstream>

#pragma comment(lib, "libcurl.lib")
#pragma comment(lib, "wldap32.lib")
#pragma comment(lib, "ws2_32.lib")
#pragma comment(lib, "winmm.lib")

// libcurl write callback: append the received bytes to the open file.
// The return value must be the number of bytes actually handled.
size_t write_func(char *ptr, size_t size, size_t nmemb, void *userdata)
{
    FILE *stream = static_cast<FILE *>(userdata);
    return fwrite(ptr, 1, size * nmemb, stream);
}

// Filled in by get_name_url()
std::string analyze_name;
std::string analyze_url;

// Get the short (relative) address and the name of a link.
// Expects a line of the form  ... <a href="URL">NAME</a> ...
// Returns false if the line does not have that shape.
bool get_name_url(const std::string &line)
{
    const std::string head = "<a href=\"";
    std::string::size_type url_begin = line.find(head);
    if (url_begin == std::string::npos)
        return false;
    url_begin += head.size();

    const std::string::size_type url_end = line.find('"', url_begin);
    if (url_end == std::string::npos)
        return false;

    // Skip the closing quote and the '>' that ends the opening tag.
    const std::string::size_type name_begin = url_end + 2;
    const std::string::size_type name_end = line.find('<', name_begin);
    if (name_end == std::string::npos)
        return false;

    analyze_url = line.substr(url_begin, url_end - url_begin);
    analyze_name = line.substr(name_begin, name_end - name_begin);
    return true;
}

// Download the page at an HTTP URL to the given local path.
// Returns 0 on success, -1 on failure.
int http_get(const std::string &httpurl, const std::string &location)
{
    FILE *outfile = NULL;
    if (fopen_s(&outfile, location.c_str(), "wb") != 0 || outfile == NULL)
        return -1;

    CURL *curl = curl_easy_init();
    if (!curl)
    {
        fclose(outfile);
        return -1;
    }

    curl_easy_setopt(curl, CURLOPT_URL, httpurl.c_str());
    curl_easy_setopt(curl, CURLOPT_WRITEFUNCTION, write_func);
    curl_easy_setopt(curl, CURLOPT_WRITEDATA, outfile);
    const CURLcode res = curl_easy_perform(curl);

    curl_easy_cleanup(curl);
    fclose(outfile);
    return res == CURLE_OK ? 0 : -1;
}

// Read a downloaded index page line by line and download every matching link.
int linkextract(const std::string &httpfile, const std::string &httpurl,
                const std::string &cplocation)
{
    std::ifstream infile(httpfile.c_str());
    if (!infile)
        return -1;

    std::string line;
    while (std::getline(infile, line))
    {
        // Disabled: recurse into directory entries (lines with alt="[DIR]").
        // if (line.find("alt=\"[DIR]\"") != std::string::npos && get_name_url(line))
        // {
        //     // drop the last character of the name (presumably a trailing '/')
        //     std::string name = analyze_name.substr(0, analyze_name.length() - 1);
        //     std::string subdir = cplocation + "/" + name;
        //     std::string suburl = httpurl + analyze_url;
        //     _mkdir(subdir.c_str());
        //     http_get(suburl, subdir + "/" + name + ".txt");
        //     linkextract(subdir + "/" + name + ".txt", suburl, subdir);
        //     continue;
        // }

        // Files: only links whose target starts with "Tile" are downloaded.
        if (line.find("href=\"Tile") == std::string::npos)
            continue;
        if (!get_name_url(line))
            continue;

        http_get(httpurl + analyze_url, cplocation + "/" + analyze_name);
    }
    return 0;
}

int main()
{
    // Placeholder: replace with the real server address. Link targets are
    // appended to it directly, so it should end with '/'.
    std::string httpurl = "http://server-address";
    std::string content = "../DownloadDirectory";
    std::string localdir = "../DownloadDirectory/DownloadDirectory.txt";

    curl_global_init(CURL_GLOBAL_DEFAULT);
    _mkdir(content.c_str());

    int rc = http_get(httpurl, localdir);      // fetch the index page
    if (rc == 0)
        rc = linkextract(localdir, httpurl, content);

    curl_global_cleanup();
    return rc == 0 ? 0 : 1;
}
```
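
The listing builds each download URL with `httpurl + analyze_url`, which only gives a valid address when the base ends with `/` and the link target does not start with one. A minimal sketch of a more forgiving join follows. `join_url` is a hypothetical helper that is not part of the original program, and it only normalizes the slash between the two parts; it does not implement full URL reference resolution (absolute targets and `../` segments are not handled).

```cpp
#include <string>

// Hypothetical helper (not in the original program): join a base URL and a
// link target so that exactly one '/' separates them.
std::string join_url(const std::string &base, const std::string &target)
{
    if (base.empty())
        return target;
    if (target.empty())
        return base;

    const bool base_slash = base[base.size() - 1] == '/';
    const bool target_slash = target[0] == '/';

    if (base_slash && target_slash)
        return base + target.substr(1);   // drop the duplicate '/'
    if (!base_slash && !target_slash)
        return base + "/" + target;       // add the missing '/'
    return base + target;                 // exactly one '/' is already there
}
```

With such a helper, the download call could pass `join_url(httpurl, analyze_url)` instead of the plain concatenation, so a base address written without a trailing slash would still work.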

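This program can only download files that are linked through `href` attributes, and many web pages cannot be downloaded with it. As written, it only handles lines of the form `<a href="URL">NAME</a>` whose link target starts with `Tile`.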
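
Part of this limitation comes from the parsing step, which looks only at the first `<a href=` on a line. As a rough sketch of how the link extraction could be made less strict, the function below collects every double-quoted `href` value on a line. `extract_hrefs` is a hypothetical helper, not part of the original program; it still misses single-quoted or unquoted attributes and tags that span several lines, for which a real HTML parser would be the more robust choice.

```cpp
#include <string>
#include <vector>

// Hypothetical helper (not in the original program): return the value of
// every double-quoted href attribute found in one line of HTML.
std::vector<std::string> extract_hrefs(const std::string &line)
{
    const std::string key = "href=\"";
    std::vector<std::string> links;
    std::string::size_type pos = 0;

    while ((pos = line.find(key, pos)) != std::string::npos)
    {
        const std::string::size_type begin = pos + key.size();
        const std::string::size_type end = line.find('"', begin);
        if (end == std::string::npos)
            break;                        // unterminated attribute: stop scanning
        links.push_back(line.substr(begin, end - begin));
        pos = end + 1;                    // continue after the closing quote
    }
    return links;
}
```

Each returned string could then be filtered (for example by prefix, as the original does with `Tile`) before being passed to the download function.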

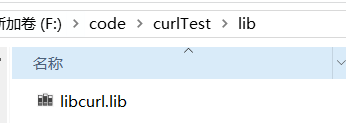

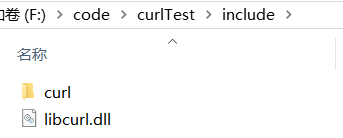

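Note that you need to download curl and configure its paths in your project before this will build. There is a good blog post on downloading and compiling curl, but I cannot find it at the moment.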