Android BPF: Per-App Traffic Statistics

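1. BPF and eBPF

BPF (Berkeley Packet Filter) was originally a mechanism for capturing and filtering network packets.

eBPF (extended Berkeley Packet Filter) is the newer design that replaced the original BPF. The name is used loosely: the new version is often still called BPF, and sometimes eBPF. It is considerably more capable. Besides capturing network packets, eBPF programs can trace kernel functions by attaching to hooks such as kprobes and tracepoints, the same instrumentation points that the kernel's built-in ftrace facility exposes.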

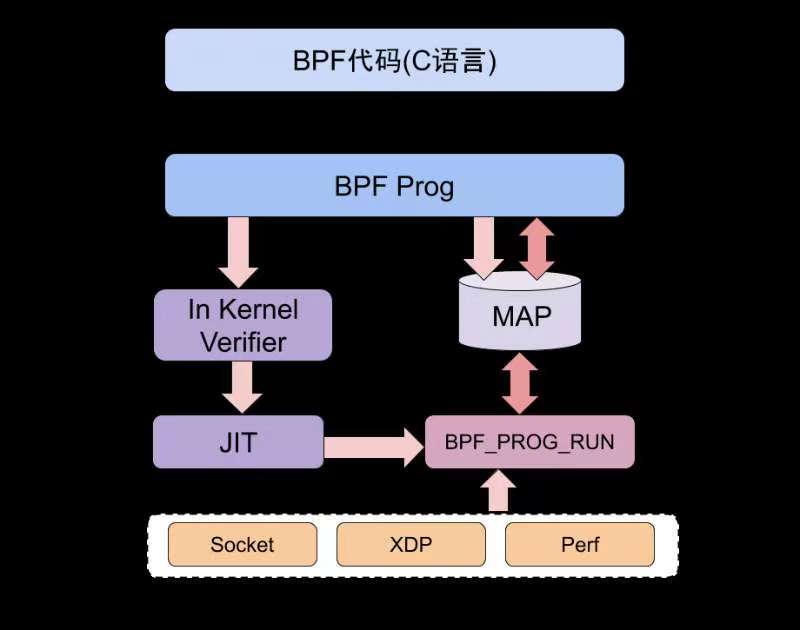

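As the figure above shows, the workflow is split into user space and kernel space. In user space, the BPF program is written (optionally with the help of the bcc library) and compiled by LLVM into an ELF object file. The BPF loader then loads it into the kernel, where the BPF verifier checks it for problems such as endless loops and unsafe calls. The program is then JIT-compiled to improve execution speed and is finally installed in the kernel.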
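To make the loop check more concrete, here is a toy sketch in C++. It is not the kernel verifier: `Insn` and `rejectsProgram` are hypothetical names, and the only rule modeled is that a jump may neither go backwards (a possible endless loop) nor leave the program.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical toy instruction: either a plain operation or a relative jump.
struct Insn {
    bool isJump;  // true if this instruction is a jump
    int offset;   // jump target relative to the next instruction; ignored otherwise
};

// Returns true if this toy check would reject the program: some jump either
// goes backwards (possible endless loop) or lands outside the program.
bool rejectsProgram(const std::vector<Insn>& prog) {
    const long size = static_cast<long>(prog.size());
    for (std::size_t i = 0; i < prog.size(); ++i) {
        if (!prog[i].isJump) continue;
        const long pc = static_cast<long>(i);
        const long target = pc + 1 + prog[i].offset;
        if (target <= pc) return true;    // backward or self jump
        if (target >= size) return true;  // jump outside the program
    }
    return false;
}
```

A program whose only jump skips forward over one instruction passes, while a jump with a negative offset that lands on an earlier instruction is rejected.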

readelf -S /system/etc/bpf/netd.o
There are 24 section headers, starting at offset 0x28a0:

Section Headers:
  [Nr] Name              Type             Address           Offset
       Size              EntSize          Flags  Link  Info  Align
  [ 0]                   NULL             0000000000000000  00000000
       0000000000000000  0000000000000000           0     0     0
  [ 1] .strtab           STRTAB           0000000000000000  00002402
       000000000000049b  0000000000000000           0     0     1
  [ 2] .text             PROGBITS         0000000000000000  00000040
       0000000000000000  0000000000000000  AX       0     0     4
  [ 3] cgroupskb/in[...] PROGBITS         0000000000000000  00000040
       00000000000009d8  0000000000000000  AX       0     0     8
  [ 4] .relcgroupsk[...] REL              0000000000000000  000020e0
       0000000000000140  0000000000000010          23     3     8
  [ 5] cgroupskb/eg[...] PROGBITS         0000000000000000  00000a18
       0000000000000950  0000000000000000  AX       0     0     8
  [ 6] .relcgroupsk[...] REL              0000000000000000  00002220
       0000000000000140  0000000000000010          23     5     8
  [ 7] skfilter/egr[...] PROGBITS         0000000000000000  00001368
       0000000000000110  0000000000000000  AX       0     0     8
  [ 8] .relskfilter[...] REL              0000000000000000  00002360
       0000000000000030  0000000000000010          23     7     8
  [ 9] skfilter/ing[...] PROGBITS         0000000000000000  00001478
       0000000000000110  0000000000000000  AX       0     0     8
  [10] .relskfilter[...] REL              0000000000000000  00002390
       0000000000000030  0000000000000010          23     9     8
  [11] skfilter/whi[...] PROGBITS         0000000000000000  00001588
       00000000000000d0  0000000000000000  AX       0     0     8
  [12] .relskfilter[...] REL              0000000000000000  000023c0
       0000000000000010  0000000000000010          23    11     8
  [13] skfilter/bla[...] PROGBITS         0000000000000000  00001658
       0000000000000070  0000000000000000  AX       0     0     8
  [14] .relskfilter[...] REL              0000000000000000  000023d0
       0000000000000010  0000000000000010          23    13     8
  [15] cgroupsock/i[...] PROGBITS         0000000000000000  000016c8
       00000000000000a0  0000000000000000  AX       0     0     8
  [16] .relcgroupso[...] REL              0000000000000000  000023e0
       0000000000000010  0000000000000010          23    15     8
  [17] .rodata           PROGBITS         0000000000000000  00001768
       0000000000000021  0000000000000000   A       0     0     4
  [18] maps              PROGBITS         0000000000000000  0000178c
       0000000000000118  0000000000000000  WA       0     0     4
  [19] license           PROGBITS         0000000000000000  000018a4
       000000000000000b  0000000000000000  WA       0     0     1
  [20] .BTF              PROGBITS         0000000000000000  000018af
       0000000000000019  0000000000000000           0     0     1
  [21] .BTF.ext          PROGBITS         0000000000000000  000018c8
       0000000000000020  0000000000000000           0     0     1
  [22] .llvm_addrsig     LOOS+0xfff4c03   0000000000000000  000023f0
       0000000000000012  0000000000000000   E      23     0     1
  [23] .symtab           SYMTAB           0000000000000000  000018e8
       00000000000007f8  0000000000000018           1    58     8

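bcc is a toolkit and library that simplifies the development of BPF object files (bpf.o). Early Android releases, such as Android 11, used a small part of what bcc provides. From Android 13 on, Android no longer uses bcc and relies on a libbpf-based implementation instead.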

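How to query traffic usage details on Android

The snippet below reads the device-wide Wi-Fi summary; NetworkStatsManager.queryDetailsForUid() is the per-UID variant of the same query.

import android.app.usage.NetworkStats;
import android.app.usage.NetworkStatsManager;
import android.content.Context;
import android.net.ConnectivityManager;
import android.os.RemoteException;
import android.util.Log;

NetworkStatsManager networkStatsManager = (NetworkStatsManager) getApplicationContext().getSystemService(Context.NETWORK_STATS_SERVICE);
// Network type: TYPE_WIFI or TYPE_MOBILE (mobile data). A null subscriber ID does not distinguish between SIM cards.
long endTime = System.currentTimeMillis();
long startTime = endTime - 7L * 24 * 60 * 60 * 1000; // last 7 days, in ms (long arithmetic)
try {
    // querySummaryForDevice() declares RemoteException, so the call has to be guarded.
    NetworkStats.Bucket bucket = networkStatsManager.querySummaryForDevice(ConnectivityManager.TYPE_WIFI, null, startTime, endTime);
    Log.d(TAG, "bucket uid:" + bucket.getUid());
    Log.d(TAG, "bucket getRxBytes:" + bucket.getRxBytes());
    Log.d(TAG, "bucket getTxBytes:" + bucket.getTxBytes());
} catch (RemoteException e) {
    Log.e(TAG, "querySummaryForDevice failed", e);
}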

1. Mount the bpf file system and cgroup2, then start bpfloader. cgroups are normally initialized from /system/etc/cgroups.json; here the cgroup is set up manually.

#bpf start
mount bpf bpf /sys/fs/bpf
mkdir /dev/cg2_bpf 0600 root root
chmod 0600 /dev/cg2_bpf
mount cgroup2 none /dev/cg2_bpf

on late-init
    trigger load_bpf_programs

on load_bpf_programs
    write /proc/sys/kernel/unprivileged_bpf_disabled 0
    write /proc/sys/net/core/bpf_jit_enable 1
    write /proc/sys/net/core/bpf_jit_kallsyms 1
    start bpfloader

service bpfloader /system/bin/bpf-loader
    user root
    group root
    disabled
    oneshot
#bpf end

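2. cgroup configuration

For BPF programs of the cgroupskb type, the program pinned under /sys/fs/bpf must also be attached to the corresponding cgroup with the BPF_PROG_ATTACH command. This is what makes it possible to monitor the network activity of the processes in that cgroup:

mount bpf bpf /sys/fs/bpf
mkdir /dev/cg2_bpf 0600 root root
chmod 0600 /dev/cg2_bpf
mount cgroup2 none /dev/cg2_bpf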

3. Android traffic collection and recording

TrafficController::start()
    initMaps()       # initialize the map files
    initPrograms()   # attach the programs to the cgroup
    addInterface()   # add the network interface configuration

4. Reading the traffic statistics and clearing the results

BpfNetworkStats.cpp
parseBpfNetworkStatsDetail()
    BpfMapRO<uint32_t, uint8_t> configurationMap(CONFIGURATION_MAP_PATH);
    auto configuration = configurationMap.readValue(CURRENT_STATS_MAP_CONFIGURATION_KEY);
    const char* statsMapPath = STATS_MAP_PATH[configuration.value()];

TrafficController.cpp
swapActiveStatsMap()  # switch between map A and map B
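The A/B map switch can be pictured with a small user-space model. This is only a sketch under assumptions: `DoubleBufferedStats`, `record`, and `swapAndDrain` are hypothetical names, and plain `std::map` objects stand in for the two BPF stats maps and the configuration entry that selects the active one. The writer always adds to the active map; the reader flips the selector first and then reads and clears the map that has just become inactive.

```cpp
#include <array>
#include <cstdint>
#include <map>

// Hypothetical user-space model of the two stats maps plus the selector.
class DoubleBufferedStats {
public:
    // Writer side (the BPF program in the real system): count bytes in the active map.
    void record(uint32_t uid, uint64_t bytes) { maps_[active_][uid] += bytes; }

    // Reader side: switch the active map, then return and clear the old one.
    std::map<uint32_t, uint64_t> swapAndDrain() {
        const int inactive = active_;
        active_ = 1 - active_;          // new traffic now lands in the other map
        std::map<uint32_t, uint64_t> drained;
        drained.swap(maps_[inactive]);  // read and clear in one step
        return drained;
    }

private:
    std::array<std::map<uint32_t, uint64_t>, 2> maps_;
    int active_ = 0;
};
```

Because the reader only ever touches the inactive map, counting can continue in the other map while the old values are read and cleared.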

5. Android's implementation of the statistics recording, for reference:

# Android 11 code related to traffic statistics
NetworkStatsService.java

# Create the four recorder files
// create data recorders along with historical rotators
mDevRecorder = buildRecorder(PREFIX_DEV, mSettings.getDevConfig(), false);
mXtRecorder = buildRecorder(PREFIX_XT, mSettings.getXtConfig(), false);
mUidRecorder = buildRecorder(PREFIX_UID, mSettings.getUidConfig(), false);
mUidTagRecorder = buildRecorder(PREFIX_UID_TAG, mSettings.getUidTagConfig(), true);

# Create the Handler that calls performPoll() to update the traffic statistics
private final class NetworkStatsHandler extends Handler {
    NetworkStatsHandler(@NonNull Looper looper) {
        super(looper);
    }

    @Override
    public void handleMessage(Message msg) {
        switch (msg.what) {
            case MSG_PERFORM_POLL: {
                performPoll(FLAG_PERSIST_ALL);
                break;
            }

# Register the broadcast receiver that triggers the update
private BroadcastReceiver mPollReceiver = new BroadcastReceiver() {
    @Override
    public void onReceive(Context context, Intent intent) {
        // on background handler thread, and verified UPDATE_DEVICE_STATS
        // permission above.
        performPoll(FLAG_PERSIST_ALL);

        // verify that we're watching global alert
        registerGlobalAlert();
    }
};

# The collection/update function, protected by synchronized
private void performPoll(int flags) {
    synchronized (mStatsLock) {
        mWakeLock.acquire();

        try {
            performPollLocked(flags);
        } finally {
            mWakeLock.release();
        }
    }
}

# Update the four recorder files
private void performPollLocked(int flags) {
    try {
        recordSnapshotLocked(currentTime);
    } catch (IllegalStateException e) {
        Log.wtf(TAG, "problem reading network stats", e);
        return;
    }

# Pull the latest records
private void recordSnapshotLocked(long currentTime) throws RemoteException {
    // For xt/dev, we pass a null VPN array because usage is aggregated by UID, so VPN traffic
    // can't be reattributed to responsible apps.
    Trace.traceBegin(TRACE_TAG_NETWORK, "recordDev");
    mDevRecorder.recordSnapshotLocked(devSnapshot, mActiveIfaces, currentTime);
    Trace.traceEnd(TRACE_TAG_NETWORK);
    Trace.traceBegin(TRACE_TAG_NETWORK, "recordXt");
    mXtRecorder.recordSnapshotLocked(xtSnapshot, mActiveIfaces, currentTime);
    Trace.traceEnd(TRACE_TAG_NETWORK);

    // For per-UID stats, pass the VPN info so VPN traffic is reattributed to responsible apps.
    Trace.traceBegin(TRACE_TAG_NETWORK, "recordUid");
    mUidRecorder.recordSnapshotLocked(uidSnapshot, mActiveUidIfaces, currentTime);
    Trace.traceEnd(TRACE_TAG_NETWORK);
    Trace.traceBegin(TRACE_TAG_NETWORK, "recordUidTag");
    mUidTagRecorder.recordSnapshotLocked(uidSnapshot, mActiveUidIfaces, currentTime);
    Trace.traceEnd(TRACE_TAG_NETWORK);

# Call chain that produces uidSnapshot
final NetworkStats uidSnapshot = getNetworkStatsUidDetail(INTERFACES_ALL);
NetworkStats uidSnapshot = readNetworkStatsUidDetail(UID_ALL, ifaces, TAG_ALL);
mStatsFactory.readNetworkStatsDetail(uid, ifaces, tag);

public NetworkStats readNetworkStatsDetail(
        int limitUid, String[] limitIfaces, int limitTag) throws IOException {
    // In order to prevent deadlocks, anything protected by this lock MUST NOT call out to other
    // code that will acquire other locks within the system server. See b/134244752.
    synchronized (mPersistentDataLock) {
        // Take a reference. If this gets swapped out, we still have the old reference.
        final VpnInfo[] vpnArray = mVpnInfos;
        // Take a defensive copy. mPersistSnapshot is mutated in some cases below
        final NetworkStats prev = mPersistSnapshot.clone();

        if (USE_NATIVE_PARSING) {
            final NetworkStats stats =
                    new NetworkStats(SystemClock.elapsedRealtime(), 0 /* initialSize */);
            // Read the BPF data
            if (mUseBpfStats) {
                try {
                    requestSwapActiveStatsMapLocked();
                } catch (RemoteException e) {
                    throw new IOException(e);
                }
                // Stats are always read from the inactive map, so they must be read after the
                // swap
                if (nativeReadNetworkStatsDetail(stats, mStatsXtUid.getAbsolutePath(), UID_ALL,
                        INTERFACES_ALL, TAG_ALL, mUseBpfStats) != 0) {
                    throw new IOException("Failed to parse network stats");
                }

                // BPF stats are incremental; fold into mPersistSnapshot.
                mPersistSnapshot.setElapsedRealtime(stats.getElapsedRealtime());
                mPersistSnapshot.combineAllValues(stats);
            }
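Because each read drains the inactive map, a BPF read yields only the bytes counted since the previous read, and the persistent snapshot has to accumulate them. A minimal model of that fold, assuming a hypothetical `Snapshot` type keyed by UID only (the real `NetworkStats` entries also carry interface, set and tag); the function name mirrors the Java method purely for readability:

```cpp
#include <cstdint>
#include <map>

// Hypothetical stand-in for a stats snapshot: UID -> total bytes.
using Snapshot = std::map<uint32_t, uint64_t>;

// Adds every delta entry to the running total, creating entries as needed.
void combineAllValues(Snapshot& persistent, const Snapshot& delta) {
    for (const auto& entry : delta) {
        persistent[entry.first] += entry.second;
    }
}
```

Folding a delta of 100 bytes and then a delta of 20 bytes for the same UID leaves a running total of 120 bytes for that UID.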

# JNI side of nativeReadNetworkStatsDetail()
base\services\core\jni\com_android_server_net_NetworkStatsFactory.cpp:

static const JNINativeMethod gMethods[] = {
        { "nativeReadNetworkStatsDetail",
                "(Landroid/net/NetworkStats;Ljava/lang/String;I[Ljava/lang/String;IZ)I",
                (void*) readNetworkStatsDetail },

static int readNetworkStatsDetail(JNIEnv* env, jclass clazz, jobject stats, jstring path,
                                  jint limitUid, jobjectArray limitIfacesObj, jint limitTag,
                                  jboolean useBpfStats) {
    std::vector<std::string> limitIfaces;
    if (limitIfacesObj != NULL && env->GetArrayLength(limitIfacesObj) > 0) {
        int num = env->GetArrayLength(limitIfacesObj);
        for (int i = 0; i < num; i++) {
            jstring string = (jstring)env->GetObjectArrayElement(limitIfacesObj, i);
            ScopedUtfChars string8(env, string);
            if (string8.c_str() != NULL) {
                limitIfaces.push_back(std::string(string8.c_str()));
            }
        }
    }
    std::vector<stats_line> lines;

    if (useBpfStats) {
        if (parseBpfNetworkStatsDetail(&lines, limitIfaces, limitTag, limitUid) < 0)
            return -1;
    }

int parseBpfNetworkStatsDetail(std::vector<stats_line>* lines,
                               const std::vector<std::string>& limitIfaces, int limitTag,
                               int limitUid) {
    BpfMapRO<uint32_t, IfaceValue> ifaceIndexNameMap(IFACE_INDEX_NAME_MAP_PATH);
    if (!ifaceIndexNameMap.isValid()) {
        int ret = -errno;
        ALOGE("get ifaceIndexName map fd failed: %s", strerror(errno));
        return ret;
    }

    BpfMapRO<uint32_t, uint8_t> configurationMap(CONFIGURATION_MAP_PATH);
    if (!configurationMap.isValid()) {
        int ret = -errno;
        ALOGE("get configuration map fd failed: %s", strerror(errno));
        return ret;
    }
    auto configuration = configurationMap.readValue(CURRENT_STATS_MAP_CONFIGURATION_KEY);
    if (!configuration.ok()) {
        ALOGE("Cannot read the old configuration from map: %s",
              configuration.error().message().c_str());
        return -configuration.error().code();
    }
    const char* statsMapPath = STATS_MAP_PATH[configuration.value()];
    BpfMap<StatsKey, StatsValue> statsMap(statsMapPath);
    if (!statsMap.isValid()) {
        int ret = -errno;
        ALOGE("get stats map fd failed: %s, path: %s", strerror(errno), statsMapPath);
        return ret;
    }

    // It is safe to read and clear the old map now since the
    // networkStatsFactory should call netd to swap the map in advance already.
    int ret = parseBpfNetworkStatsDetailInternal(lines, limitIfaces, limitTag, limitUid, statsMap,
                                                 ifaceIndexNameMap);
    if (ret) {
        ALOGE("parse detail network stats failed: %s", strerror(errno));
        return ret;
    }

    Result<void> res = statsMap.clear();
    if (!res.ok()) {
        ALOGE("Clean up current stats map failed: %s", strerror(res.error().code()));
        return -res.error().code();
    }

    return 0;
}

# Read the data collected by BPF
int parseBpfNetworkStatsDetailInternal(std::vector<stats_line>* lines,
                                       const std::vector<std::string>& limitIfaces, int limitTag,
                                       int limitUid, const BpfMap<StatsKey, StatsValue>& statsMap,
                                       const BpfMap<uint32_t, IfaceValue>& ifaceMap) {
    int64_t unknownIfaceBytesTotal = 0;
    const auto processDetailUidStats =
            [lines, &limitIfaces, &limitTag, &limitUid, &unknownIfaceBytesTotal, &ifaceMap](
                    const StatsKey& key,
                    const BpfMap<StatsKey, StatsValue>& statsMap) -> Result<void> {
        char ifname[IFNAMSIZ];
        if (getIfaceNameFromMap(ifaceMap, statsMap, key.ifaceIndex, ifname, key,
                                &unknownIfaceBytesTotal)) {
            return Result<void>();
        }
        std::string ifnameStr(ifname);
        if (limitIfaces.size() > 0 &&
            std::find(limitIfaces.begin(), limitIfaces.end(), ifnameStr) == limitIfaces.end()) {
            // Nothing matched; skip this line.
            return Result<void>();
        }
        if (limitTag != TAG_ALL && uint32_t(limitTag) != key.tag) {
            return Result<void>();
        }
        if (limitUid != UID_ALL && uint32_t(limitUid) != key.uid) {
            return Result<void>();
        }
        Result<StatsValue> statsEntry = statsMap.readValue(key);
        if (!statsEntry.ok()) {
            return base::ResultError(statsEntry.error().message(), statsEntry.error().code());
        }
        lines->push_back(populateStatsEntry(key, statsEntry.value(), ifname));

        // Added logging: print the statistics collected by BPF. The counters are
        // unsigned (64-bit for the byte counts), so they are printed without an int cast.
        ALOGD("network bpf ifname:%s", ifname);
        ALOGD("network bpf key.tag:%u", key.tag);
        ALOGD("network bpf key.uid:%u", key.uid);
        ALOGD("network bpf rxBytes:%llu", (unsigned long long)(statsEntry.value().rxBytes));
        ALOGD("network bpf txBytes:%llu", (unsigned long long)(statsEntry.value().txBytes));

        return Result<void>();
    };

    Result<void> res = statsMap.iterate(processDetailUidStats);
    if (!res.ok()) {
        ALOGE("failed to iterate per uid Stats map for detail traffic stats: %s",
              strerror(res.error().code()));
        return -res.error().code();
    }

    // Since eBPF use hash map to record stats, network stats collected from
    // eBPF will be out of order. And the performance of findIndexHinted in
    // NetworkStats will also be impacted.
    //
    // Furthermore, since the StatsKey contains iface index, the network stats
    // reported to framework would create items with the same iface, uid, tag
    // and set, which causes NetworkStats maps wrong item to subtract.
    //
    // Thus, the stats needs to be properly sorted and grouped before reported.
    groupNetworkStats(lines);
    return 0;
}
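The sorting and grouping step described in the closing comment can be sketched as follows. This is not the AOSP `groupNetworkStats` implementation; `Line`, `keyOf`, and `groupLines` are hypothetical names, and the struct carries only the fields needed to show the idea: sort by (iface, uid, set, tag), then merge neighbours that share the same key.

```cpp
#include <algorithm>
#include <cstdint>
#include <string>
#include <tuple>
#include <vector>

// Hypothetical, trimmed-down stats line.
struct Line {
    std::string iface;
    uint32_t uid;
    uint32_t set;
    uint32_t tag;
    uint64_t rxBytes;
    uint64_t txBytes;
};

// Key used for both ordering and equality.
static auto keyOf(const Line& l) { return std::tie(l.iface, l.uid, l.set, l.tag); }

// Sorts the lines and merges entries whose (iface, uid, set, tag) match.
void groupLines(std::vector<Line>& lines) {
    std::sort(lines.begin(), lines.end(),
              [](const Line& a, const Line& b) { return keyOf(a) < keyOf(b); });
    std::vector<Line> grouped;
    for (const Line& l : lines) {
        if (!grouped.empty() && keyOf(grouped.back()) == keyOf(l)) {
            grouped.back().rxBytes += l.rxBytes;  // same key: accumulate counters
            grouped.back().txBytes += l.txBytes;
        } else {
            grouped.push_back(l);
        }
    }
    lines.swap(grouped);
}
```

Sorting first keeps the merge a single linear pass, and the caller receives entries in a stable, predictable order.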

Download a large file in the browser and watch the data being recorded in logcat.

# Look up the browser's UID: 10125
/ # cat data/system/packages.list | grep browser
org.chromium.browser 10125 0 /data/user/0/org.chromium.browser default:targetSdkVersion=29 3002,3003,3001 0 398713230
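The same lookup can be done in code. A minimal sketch, assuming only what the output above shows (the package name is the first whitespace-separated field and the UID is the second); `uidFromPackagesLine` is a hypothetical helper, not an Android API:

```cpp
#include <sstream>
#include <string>

// Returns the UID from one packages.list line, or -1 if the line does not
// belong to `package` or cannot be parsed.
int uidFromPackagesLine(const std::string& line, const std::string& package) {
    std::istringstream in(line);
    std::string name;
    int uid = -1;
    if (!(in >> name >> uid)) return -1;  // need at least "<name> <uid>"
    return name == package ? uid : -1;
}
```

Matching on the exact package name avoids the partial matches that a plain `grep browser` can return.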

# Records in logcat: key.uid, rxBytes, txBytes
09-07 10:41:12.281 5020 5326 D BpfNetworkStats: network bpf key.tag:0
09-07 10:41:12.281 5020 5326 D BpfNetworkStats: network bpf key.uid:10125
09-07 10:41:12.281 5020 5326 D BpfNetworkStats: network bpf rxBytes:3900464
09-07 10:41:12.281 5020 5326 D BpfNetworkStats: network bpf txBytes:207692
09-07 10:41:14.449 5020 5326 D BpfNetworkStats: network bpf ifname:wlan0

References:

eBPF traffic monitoring
https://source.android.google.cn/devices/tech/datausage/ebpf-traffic-monitor?hl=zh-cn&skip_cache=true

Extending the kernel with eBPF
https://source.android.google.cn/docs/core/architecture/kernel/bpf?hl=zh-cn

Understanding android-ebpf
https://sniffer.site/2021/11/26/理解android-ebpf/
