Elasticsearch+Fluentd+Kibana+docker-compose: Container Log Monitoring

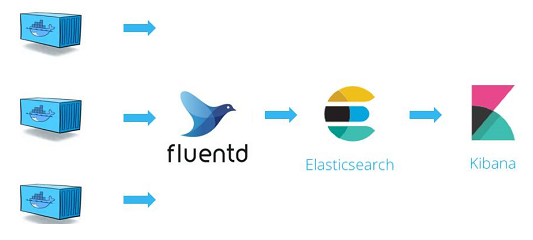

Core idea:

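Elasticsearch and Kibana are deployed on a separate server, while Fluentd runs on the server that hosts the Docker containers. The containers ship their logs to Fluentd through Docker's built-in `logging` driver option.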

docker-elastickibana.yml

```yaml
version: '2'
services:
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch-oss:6.1.4
    container_name: elasticsearch
    environment:
      - discovery.type=single-node
    volumes:
      - ./esdata:/usr/share/elasticsearch/data
    networks:
      - efknet
    ports:
      - "9200:9200"
  kibana:
    image: docker.elastic.co/kibana/kibana-oss:6.1.4
    container_name: kibana
    networks:
      - efknet
    ports:
      - "5601:5601"
networks:
  efknet:
```

docker-fluentd.yml

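```yaml
version: '2'
services:
  fluentd:
    # `build: .` needs a Dockerfile next to this file (not shown in this post);
    # because fluent.conf uses `@type elasticsearch`, that image must include
    # the fluent-plugin-elasticsearch plugin.
    build: .
    volumes:
      - ./conf:/fluentd/etc
      - ./log:/fluentd/log
    container_name: fluentd
    networks:
      - fluent_net
    ports:
      - "24224:24224"
      - "24224:24224/udp"
networks:
  fluent_net:
```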

fluent.conf

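```
# fluentd/conf/fluent.conf
<source>
  @type forward
  port 24224
  bind 0.0.0.0
</source>

<match *.**>
  @type copy
  <store>
    @type elasticsearch
    # The name `elasticsearch` only resolves if Fluentd shares a Docker network
    # with the Elasticsearch container; with Elasticsearch on a separate
    # server, put that server's address here.
    host elasticsearch
    port 9200
    logstash_format true
    logstash_prefix fluentd
    logstash_dateformat %Y%m%d
    include_tag_key true
    type_name access_log
    tag_key @log_name
    flush_interval 1s
  </store>
  <store>
    @type stdout
  </store>
</match>
```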
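With `logstash_format true`, the Elasticsearch output writes to one index per day, named from `logstash_prefix` and `logstash_dateformat`. The sketch below reproduces that naming in Python. It assumes two plugin defaults: `logstash_prefix_separator` is `-`, and `utc_index` is true, so the date part is taken from the event time in UTC. `es_index_name` is a hypothetical helper for illustration and is not part of the plugin.

```python
from datetime import datetime, timedelta, timezone


def es_index_name(event_time: datetime,
                  prefix: str = "fluentd",
                  dateformat: str = "%Y%m%d",
                  separator: str = "-",
                  utc_index: bool = True) -> str:
    """Build a daily index name the way logstash_format is assumed to:
    <prefix><separator><event date formatted with dateformat>."""
    if utc_index:
        # Assumed plugin default: the date part is computed in UTC.
        event_time = event_time.astimezone(timezone.utc)
    return f"{prefix}{separator}{event_time.strftime(dateformat)}"


if __name__ == "__main__":
    china = timezone(timedelta(hours=8))           # UTC+8
    t = datetime(2018, 3, 15, 7, 0, tzinfo=china)  # 07:00 local = 23:00 UTC the previous day
    print(es_index_name(t))                   # fluentd-20180314
    print(es_index_name(t, utc_index=False))  # fluentd-20180315
```

In Kibana, an index pattern such as `fluentd-*` covers all of these daily indices. If the UTC default holds, logs written before 08:00 in UTC+8 land in the previous day's index.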

Test container `http_server`. The key part is the `logging` section:

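```yaml
version: '3'
services:
  http_server:
    image: httpd
    container_name: http_server
    ports:
      - "80:80"
    networks:
      - efknet
    logging:
      driver: "fluentd"
      options:
        # The Docker daemon on the host (not the container) connects here,
        # so localhost means the host that publishes Fluentd's port 24224.
        fluentd-address: localhost:24224
        tag: httpd_server
networks:
  efknet:
```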
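Once `http_server` is serving requests, its access logs should appear in Elasticsearch with the tag stored in `@log_name` (because of `tag_key @log_name` in fluent.conf). Below is a minimal standard-library sketch for checking this from Python. It assumes that Elasticsearch is reachable at a URL you supply and that the indices match `fluentd-*`. `build_tag_search` and `search_logs` are hypothetical helper names.

```python
import json
import urllib.request


def build_tag_search(tag: str, size: int = 5) -> dict:
    """Query body: the newest `size` documents whose @log_name matches `tag`."""
    return {
        "size": size,
        # @timestamp is added to each record when logstash_format is true.
        "sort": [{"@timestamp": {"order": "desc"}}],
        "query": {"match": {"@log_name": tag}},
    }


def search_logs(es_url: str, tag: str, index: str = "fluentd-*") -> list:
    """POST the query to <es_url>/<index>/_search and return the _source dicts."""
    body = json.dumps(build_tag_search(tag)).encode("utf-8")
    req = urllib.request.Request(
        f"{es_url}/{index}/_search",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        hits = json.load(resp)["hits"]["hits"]
    return [hit["_source"] for hit in hits]
```

For example, `search_logs("http://<es-server>:9200", "httpd_server")`, where `<es-server>` is a placeholder for the Elasticsearch server's address. Docker's fluentd driver normally puts the raw log line in a `log` field, alongside `container_id`, `container_name` and `source`.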

Things to watch out for:

On the Elasticsearch host, append the line `vm.max_map_count=655360` to the end of `/etc/sysctl.conf`, then reload the settings:

```
[root@localhost efk]# sysctl -p
vm.max_map_count = 655360
```
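To confirm that the setting took effect, you can read the current value from `/proc/sys/vm/max_map_count` and compare it with 262144, the minimum that Elasticsearch's documentation asks for. The value 655360 used above comfortably exceeds it. The following is a minimal sketch; the function names are illustrative.

```python
from pathlib import Path

# Minimum vm.max_map_count required by Elasticsearch.
ES_MIN_MAX_MAP_COUNT = 262144


def parse_sysctl_value(text: str) -> int:
    """Accept either the raw /proc value ("655360\n") or a sysctl output
    line ("vm.max_map_count = 655360") and return the integer."""
    # int() tolerates the surrounding whitespace and trailing newline.
    return int(text.split("=")[-1])


def max_map_count_ok(path: str = "/proc/sys/vm/max_map_count") -> bool:
    """True if the kernel's current setting meets Elasticsearch's minimum."""
    value = parse_sysctl_value(Path(path).read_text())
    return value >= ES_MIN_MAX_MAP_COUNT
```

Run `max_map_count_ok()` on the Elasticsearch host. Containers share the host kernel's value, so it is the host setting that matters.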

Raise the system `ulimit` values, for example the maximum number of open files (`nofile`).
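Elasticsearch's documentation asks for an open-file limit of at least 65536. Below is a small check using Python's `resource` module, which is Unix only. It reports the limits of the process that runs it, so run it in the environment you care about. A container gets its limits from the Docker daemon or from its `ulimits` settings, not from your login shell. The helper names are hypothetical.

```python
import resource

# Minimum open-file limit recommended by Elasticsearch's documentation.
ES_MIN_NOFILE = 65536


def nofile_limits() -> tuple:
    """(soft, hard) open-file limits of the current process."""
    return resource.getrlimit(resource.RLIMIT_NOFILE)


def nofile_ok(soft: int, minimum: int = ES_MIN_NOFILE) -> bool:
    """RLIM_INFINITY means unlimited, which always satisfies the minimum."""
    return soft == resource.RLIM_INFINITY or soft >= minimum
```

Typical use: `soft, hard = nofile_limits()` followed by `nofile_ok(soft)`.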

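By default, Elasticsearch starts in cluster mode, so the compose file must explicitly start it as a single node (`discovery.type=single-node`). This setup is generally used for development environments.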

If Fluentd running in Docker cannot connect to Elasticsearch, firewalld may be the cause. Stop it and restart Docker: `systemctl stop firewalld && systemctl restart docker`.
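Before changing the firewall, it helps to confirm whether the Elasticsearch port can be reached at all from the Fluentd host. Below is a minimal TCP reachability sketch. Substitute the real address of the Elasticsearch server; `es-server` in the usage line is only a placeholder, and `port_reachable` is a hypothetical helper name.

```python
import socket


def port_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS failures.
        return False
```

For example, `port_reachable("es-server", 9200)`. A `False` result from the Fluentd host, combined with `True` on the Elasticsearch server itself, points toward a firewall or network issue rather than Elasticsearch.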

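Inside the container, Elasticsearch runs as the user with UID 1000 by default. If you bind-mount (`-v`) a log directory or file to the host, make sure that host directory or file is writable by UID 1000.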
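The following host-side sketch applies the standard Unix owner/group/other permission rules to decide whether UID 1000 can write to a path. For a directory it also requires the execute bit, which is needed to create files inside it. The group set `(1000,)` is an assumption about the container user's groups; you can check it with `docker exec elasticsearch id`. ACLs and SELinux labels are not considered. Both function names are hypothetical.

```python
import os
import stat

ES_UID = 1000        # UID of the Elasticsearch process in the container
ES_GIDS = (1000,)    # assumption: the groups that user belongs to


def mode_allows_write(owner: int, group: int, mode: int,
                      uid: int = ES_UID, gids: tuple = ES_GIDS) -> bool:
    """Unix rule: only the first matching class (owner, group, other) counts."""
    if owner == uid:
        w, x = stat.S_IWUSR, stat.S_IXUSR
    elif group in gids:
        w, x = stat.S_IWGRP, stat.S_IXGRP
    else:
        w, x = stat.S_IWOTH, stat.S_IXOTH
    # Creating files in a directory needs both write and search (x) permission.
    needed = (w | x) if stat.S_ISDIR(mode) else w
    return (mode & needed) == needed


def writable_by(path: str, uid: int = ES_UID, gids: tuple = ES_GIDS) -> bool:
    st = os.stat(path)
    return mode_allows_write(st.st_uid, st.st_gid, st.st_mode, uid, gids)
```

For example, `writable_by("./esdata")`. If it returns `False`, one option is to change the owner of the directory to UID 1000 on the host.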

For time zone problems in the Fluentd container, mount the host's `/etc/localtime` into the container.

That's all I can think of for now; I'll keep updating this post.

The tortoise may run slowly, but it outlives the hare.

Zhejiang Public Security Network Record No. 33010602011771
