Highly Available OpenStack (Queens) Cluster - 9. Cinder Controller Node Cluster

References:

- Install-guide:https://docs.openstack.org/install-guide/

- OpenStack High Availability Guide:https://docs.openstack.org/ha-guide/index.html

- Understanding Pacemaker: http://www.cnblogs.com/sammyliu/p/5025362.html

XIII. Cinder Controller Node Cluster

1. Create the cinder database

    # Create the database on any one controller node; the data is replicated to the other nodes automatically. controller01 is used as the example.
    [root@controller01 ~]# mysql -uroot -pmysql_pass
    MariaDB [(none)]> CREATE DATABASE cinder;
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'cinder_dbpass';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'cinder_dbpass';
    MariaDB [(none)]> flush privileges;
    MariaDB [(none)]> exit;
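The statements above can also be produced by a small helper, which keeps the two grants consistent if the step has to be repeated. This is only a sketch: the function name `cinder_db_sql` is hypothetical, `IF NOT EXISTS` is an addition that does not appear in the original commands, and the password defaults to the `cinder_dbpass` placeholder used in this article.

```shell
# Hypothetical helper (not part of the original procedure): prints the SQL for the cinder database.
# $1 = password for the cinder database user; defaults to the placeholder used in this article.
cinder_db_sql() {
    db_pass="${1:-cinder_dbpass}"
    # IF NOT EXISTS is an assumption added here so that a re-run does not fail.
    printf 'CREATE DATABASE IF NOT EXISTS cinder;\n'
    # One grant for local connections and one for connections from any host.
    for db_host in localhost '%'; do
        printf "GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%s' IDENTIFIED BY '%s';\n" "$db_host" "$db_pass"
    done
    printf 'FLUSH PRIVILEGES;\n'
}

# Print the statements for review.
cinder_db_sql
```

After reviewing the output, it could be piped to the client, for example `cinder_db_sql | mysql -uroot -p`.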

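2. Create cinder-api

    # Perform on any one controller node; controller01 is used as the example.
    # Calling the cinder service requires credentials; loading the environment variable script is enough.
    [root@controller01 ~]# . admin-openrc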

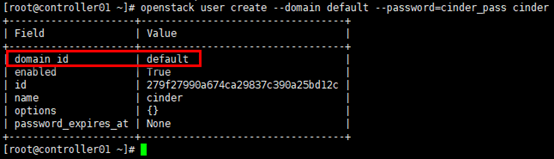

1) Create the cinder user

    # The service project was already created in the glance chapter.
    # The cinder user lives in the "default" domain.
    [root@controller01 ~]# openstack user create --domain default --password=cinder_pass cinder

2) Grant the cinder user a role

    # Give the cinder user the admin role.
    [root@controller01 ~]# openstack role add --project service --user cinder admin

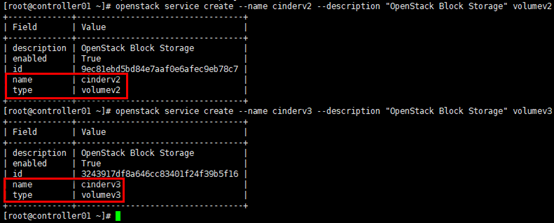

3) Create the cinder service entities

    # The cinder service entities use the volume service types.
    # Create two service entities, v2 and v3 (types volumev2 and volumev3).
    [root@controller01 ~]# openstack service create --name cinderv2 --description "OpenStack Block Storage" volumev2
    [root@controller01 ~]# openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3

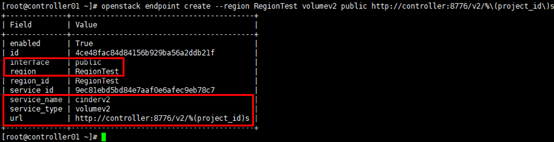

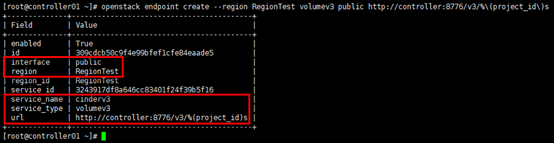

4) Create the cinder-api endpoints

    # Note that --region must match the region generated when the admin user was initialized.
    # All API addresses use the VIP; if public/internal/admin use different VIPs, take care to distinguish them.
    # The cinder-api service type is volume (volumev2/volumev3 here).
    # The cinder-api URL ends with the project ID, which can be looked up with "openstack project list".
    # v2 public api
    [root@controller01 ~]# openstack endpoint create --region RegionTest volumev2 public http://controller:8776/v2/%\(project_id\)s

    # v2 internal api
    [root@controller01 ~]# openstack endpoint create --region RegionTest volumev2 internal http://controller:8776/v2/%\(project_id\)s

    # v2 admin api
    [root@controller01 ~]# openstack endpoint create --region RegionTest volumev2 admin http://controller:8776/v2/%\(project_id\)s

    # v3 public api
    [root@controller01 ~]# openstack endpoint create --region RegionTest volumev3 public http://controller:8776/v3/%\(project_id\)s

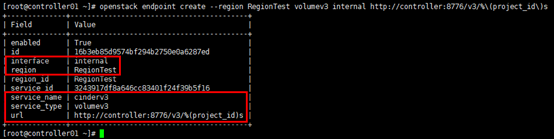

    # v3 internal api
    [root@controller01 ~]# openstack endpoint create --region RegionTest volumev3 internal http://controller:8776/v3/%\(project_id\)s

    # v3 admin api
    [root@controller01 ~]# openstack endpoint create --region RegionTest volumev3 admin http://controller:8776/v3/%\(project_id\)s
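The six endpoint commands differ only in API version and interface, so they can be generated by two nested loops. The following is a minimal sketch rather than part of the original procedure: the function name `cinder_endpoint_cmds` is hypothetical, and it only prints the commands (a dry run) so they can be checked before anything is created. The region and base URL default to the `RegionTest` and `http://controller:8776` values used above.

```shell
# Hypothetical helper: prints (does not run) the six "openstack endpoint create" commands.
# $1 = region (default: RegionTest), $2 = base URL (default: http://controller:8776)
cinder_endpoint_cmds() {
    region="${1:-RegionTest}"
    base_url="${2:-http://controller:8776}"
    for version in v2 v3; do
        for interface in public internal admin; do
            # The URL is single-quoted so %(project_id)s stays literal when the line is executed.
            printf "openstack endpoint create --region %s volume%s %s '%s/%s/%%(project_id)s'\n" \
                "$region" "$version" "$interface" "$base_url" "$version"
        done
    done
}

# Print the commands for review.
cinder_endpoint_cmds
```

Once the printed commands look right, they could be executed on a controller node (after loading `admin-openrc`) with `cinder_endpoint_cmds | sh`.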

3. Install cinder

    # Install the cinder service on all controller nodes; controller01 is used as the example.
    [root@controller01 ~]# yum install openstack-cinder -y

4. Configure cinder.conf

    # Perform on all controller nodes; controller01 is used as the example.
    # Note the "my_ip" parameter; change it to match each node.
    # Note the ownership of cinder.conf: root:cinder
    [root@controller01 ~]# cp /etc/cinder/cinder.conf /etc/cinder/cinder.conf.bak
    [root@controller01 ~]# egrep -v "^$|^#" /etc/cinder/cinder.conf
    [DEFAULT]
    state_path = /var/lib/cinder
    my_ip = 172.30.200.31
    glance_api_servers = http://controller:9292
    auth_strategy = keystone
    osapi_volume_listen = $my_ip
    osapi_volume_listen_port = 8776
    log_dir = /var/log/cinder
    # When haproxy sits in front of rabbitmq, services connecting to rabbitmq hit connection timeouts and reconnects; this can be seen in the logs of each service and of rabbitmq.
    # transport_url = rabbit://openstack:rabbitmq_pass@controller:5673
    # rabbitmq has its own clustering mechanism, and the official documentation recommends connecting to the rabbitmq cluster directly. With this approach the services sometimes report errors at startup, for reasons unknown; if that does not occur, connecting directly to the rabbitmq cluster rather than through the haproxy front end is strongly recommended.
    transport_url=rabbit://openstack:rabbitmq_pass@controller01:5672,controller02:5672,controller03:5672
    [backend]
    [backend_defaults]
    [barbican]
    [brcd_fabric_example]
    [cisco_fabric_example]
    [coordination]
    [cors]
    [database]
    connection = mysql+pymysql://cinder:cinder_dbpass@controller/cinder
    [fc-zone-manager]
    [healthcheck]
    [key_manager]
    [keystone_authtoken]
    www_authenticate_uri = http://controller:5000
    auth_url = http://controller:35357
    memcached_servers = controller01:11211,controller02:11211,controller03:11211
    auth_type = password
    project_domain_id = default
    user_domain_id = default
    project_name = service
    username = cinder
    password = cinder_pass
    [matchmaker_redis]
    [nova]
    [oslo_concurrency]
    lock_path = $state_path/tmp
    [oslo_messaging_amqp]
    [oslo_messaging_kafka]
    [oslo_messaging_notifications]
    [oslo_messaging_rabbit]
    [oslo_messaging_zmq]
    [oslo_middleware]
    [oslo_policy]
    [oslo_reports]
    [oslo_versionedobjects]
    [profiler]
    [service_user]
    [ssl]
    [vault]
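Because `my_ip` has to differ on every controller node, it can help to read the value back after copying the file around. The following is a rough sketch of a reader for a single key in a given section, written with plain `awk`; the function name `ini_get` is hypothetical and it handles only simple `key = value` lines like the ones shown above.

```shell
# Hypothetical helper: ini_get FILE SECTION KEY
# Prints the value of KEY inside [SECTION]; prints nothing if the key is absent.
ini_get() {
    awk -v section="[$2]" -v key="$3" '
        # A line starting with "[" is a section header; remember whether it is the wanted one.
        /^\[/ { in_section = ($0 == section); next }
        # Inside the wanted section, skip comment lines and split on the first "=".
        in_section && /^[^#;]/ {
            pos = index($0, "=")
            if (pos == 0) next
            name = substr($0, 1, pos - 1)
            value = substr($0, pos + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", name)
            gsub(/^[ \t]+|[ \t]+$/, "", value)
            if (name == key) { print value; exit }
        }
    ' "$1"
}

# Example, on a controller node:
#   ini_get /etc/cinder/cinder.conf DEFAULT my_ip
```

On controller01 the example call would be expected to print the address configured above; on the other nodes it should print that node's own address.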

5. Configure nova.conf

    # Perform on all controller nodes; controller01 is used as the example.
    # Only the "[cinder]" section of nova.conf is involved.
    # Add the matching region.
    [root@controller01 ~]# vim /etc/nova/nova.conf
    [cinder]
    os_region_name=RegionTest

6. Sync the cinder database

    # Perform on any one controller node.
    # Some "deprecation" messages can be ignored.
    [root@controller01 ~]# su -s /bin/sh -c "cinder-manage db sync" cinder
    # Verify
    [root@controller01 ~]# mysql -h controller -ucinder -pcinder_dbpass -e "use cinder;show tables;"

7. Start the services

    # Perform on all controller nodes.
    # The nova configuration file was changed, so restart the nova service first.
    [root@controller01 ~]# systemctl restart openstack-nova-api.service
    # Enable at boot
    [root@controller01 ~]# systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service
    # Start
    [root@controller01 ~]# systemctl restart openstack-cinder-api.service
    [root@controller01 ~]# systemctl restart openstack-cinder-scheduler.service

8. Verify

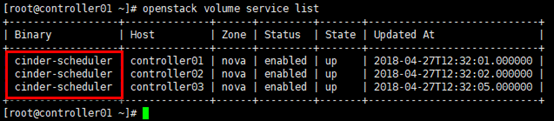

    [root@controller01 ~]# . admin-openrc
    # List the volume services.
    # Alternatively: cinder service-list
    [root@controller01 ~]# openstack volume service list
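Reading the service table by eye works for three nodes, but the check can also be scripted. The sketch below assumes the listing is produced with `-f value -c Binary -c Host -c State`, so that each row is three space-separated fields; the function name `check_cinder_services` is hypothetical. It prints every row whose state is not `up` and returns a non-zero status if any such row exists or if no rows were read at all.

```shell
# Hypothetical helper: reads "Binary Host State" rows on stdin.
# Prints rows whose last field is not "up"; exit status is 0 only if all rows are up.
check_cinder_services() {
    awk '
        NF >= 3 {
            seen++
            if ($NF != "up") { print; down++ }
        }
        # Fail when nothing was read (for example, an authentication error) or a service is down.
        END { exit ((seen == 0 || down > 0) ? 1 : 0) }
    '
}

# Example, on a controller node after ". admin-openrc":
#   openstack volume service list -f value -c Binary -c Host -c State | check_cinder_services
```

Treating empty input as a failure is a deliberate choice here, so that a failed CLI call is not mistaken for a healthy cluster.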

9. Configure pcs resources

    # Perform on any one controller node.
    # Add the cinder-api and cinder-scheduler resources.
    [root@controller01 ~]# pcs resource create openstack-cinder-api systemd:openstack-cinder-api --clone interleave=true
    [root@controller01 ~]# pcs resource create openstack-cinder-scheduler systemd:openstack-cinder-scheduler --clone interleave=true
    # cinder-api and cinder-scheduler run in active/active mode.
    # openstack-cinder-volume runs in active/passive mode.
    # List the resources
    [root@controller01 ~]# pcs resource
