40. Chapter 34: kubeadm

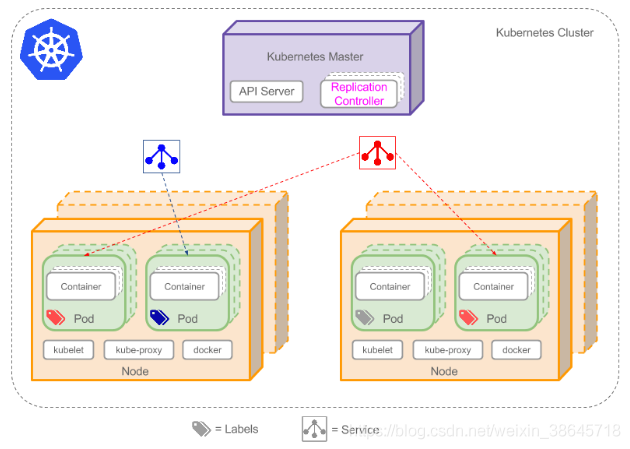

I. Basic roles of the components on Kubernetes Master and Node nodes

A Kubernetes cluster consists of one or more Master nodes and a number of Node (worker) nodes.

Master

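The Master is the control-plane node of Kubernetes.

Master components can run on any node in the cluster. For simplicity, however, all Master components are usually started on a single machine (VM), and no user containers are run on that machine.

All cluster control commands are sent to the Master components through the API server. kubectl works on any machine that has a kubeconfig with access to the API server; because kubeadm writes such a file (/etc/kubernetes/admin.conf) only on the Master, kubectl does not work on the Node nodes out of the box.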

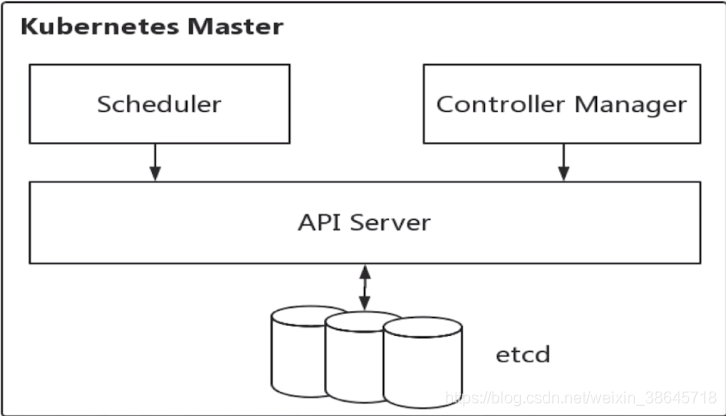

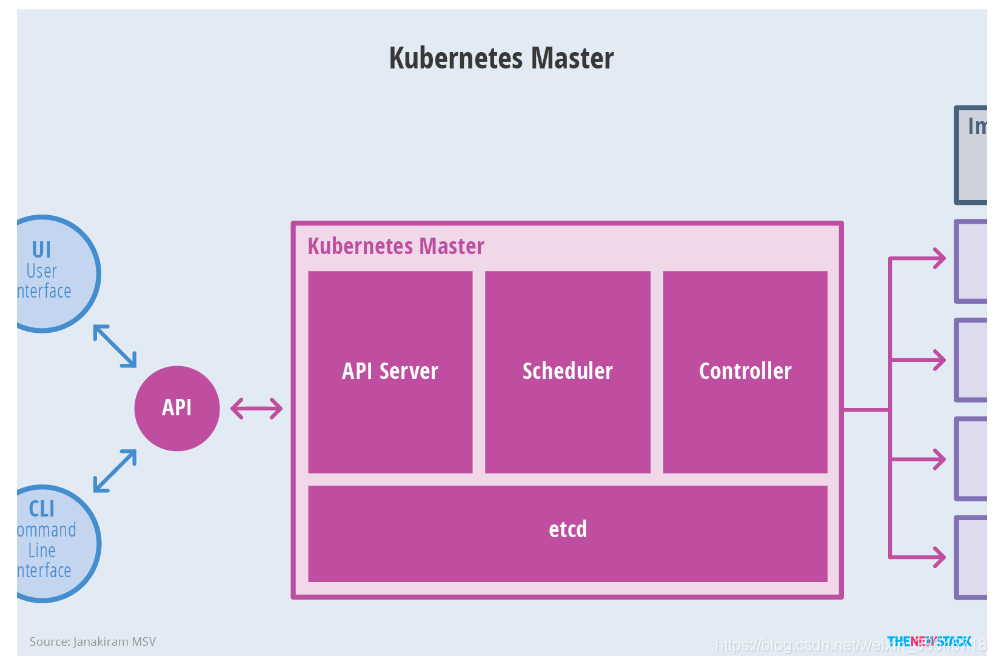

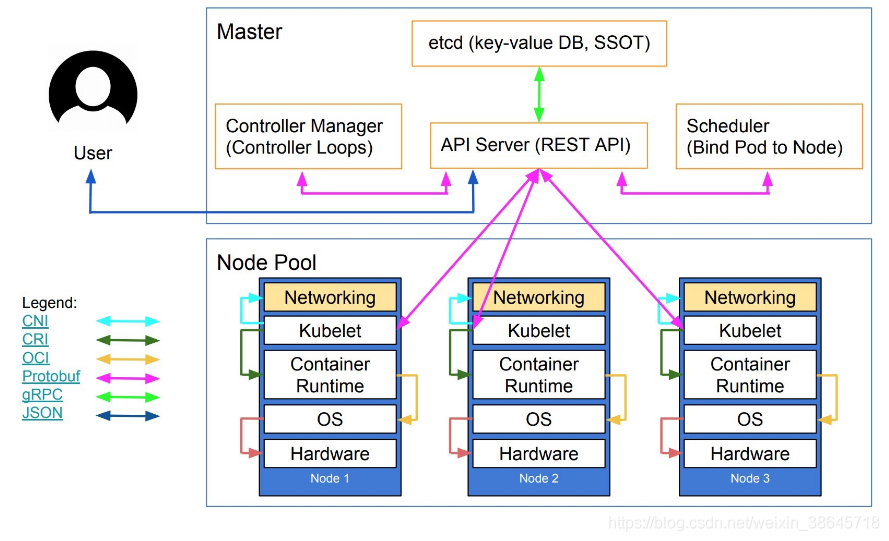

The Master node is made up mainly of four components: etcd, the API server, the controller manager and the scheduler.

Their main functions can be summarized as follows:

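API server: exposes the Kubernetes API as a RESTful service. The other Master components all do their work by calling the REST interface the API server provides; for example, the controllers watch the state of each resource in real time through the API server.

etcd: a highly available key-value store that Kubernetes uses to save the network configuration and the state of all resource objects, that is, the state of the entire cluster. Kubernetes components do not write to etcd directly; all changes to cluster data go through the API server. Two kinds of services in a Kubernetes system use etcd for coordination and configuration storage:

1) the flannel network plugin, when it is configured with an etcd backend (some other network plugins can also keep their network configuration in etcd);

2) Kubernetes itself, including the state and metadata of all resource objects.

scheduler: watches for newly created Pods and uses its scheduling algorithm to choose the most suitable Node for each one. It first finds every Node that satisfies the Pod's requirements, then runs the scheduling logic to pick one of them. On success it binds the Pod to the target Node, and the binding is persisted to etcd through the API server. Once the Pod is bound, the kubelet on that Node takes over the rest of the Pod's lifecycle. Seen as a black box, the scheduler takes a Pod and a list of Nodes as input and outputs a binding of the Pod to one Node, i.e., the decision to run this Pod on this Node. Kubernetes ships with default scheduling algorithms but also exposes interfaces so that users can plug in their own.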
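The filter-then-pick idea can be illustrated with a toy Bash function. This is only a sketch under simplifying assumptions: each node is described by a hypothetical `name:free_cpu_millicores` pair, only CPU is considered, and the node with the most free CPU wins. The real kube-scheduler runs many filter and score plugins.

```bash
#!/bin/bash
# Toy model of scheduling: filter out nodes that cannot fit the pod, then pick the best one.
# Usage (hypothetical format): pick_node <pod_cpu_millicores> <node:free_millicores>...
pick_node() {
    local request=$1; shift
    local best="" best_free=-1 entry name free
    for entry in "$@"; do
        name=${entry%%:*}
        free=${entry##*:}
        # Filter phase: skip nodes without enough free CPU
        (( free < request )) && continue
        # Score phase: prefer the node with the most free CPU
        if (( free > best_free )); then
            best=$name
            best_free=$free
        fi
    done
    # No feasible node: the pod would stay Pending
    [[ -n $best ]] || { echo "Pending"; return 1; }
    echo "$best"
}

pick_node 500 node1:200 node2:1500 node3:800   # prints node2
```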

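controller manager: maintains the state of the cluster, handling tasks such as failure detection, autoscaling and rolling updates. Each type of resource generally has its own controller; these controllers watch the state of their resources in real time through the API server, and the controller manager runs and manages them. When a resource's state changes, for example because of a failure, its controller tries to bring the system from the "current state" back to the "desired state", so that every resource it manages stays in the desired state. For example, when we create a Deployment through the API server, the API server's job is done once the object has been stored; keeping the expected number of Pods running from then on is the job of the controllers in the controller manager.

The controller manager comes in two parts: kube-controller-manager, which runs the core controllers, and cloud-controller-manager, which runs the controllers that interact with the underlying cloud provider.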
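The "current state to desired state" loop can be sketched as follows. This is a simplified illustration, not controller-manager code: the hypothetical `reconcile` function compares a desired replica count with the current one and reports the action a ReplicaSet-style controller would take.

```bash
#!/bin/bash
# Toy reconcile step: compare desired vs. current replicas and decide what to do.
reconcile() {
    local desired=$1 current=$2
    if (( current < desired )); then
        echo "create $(( desired - current ))"
    elif (( current > desired )); then
        echo "delete $(( current - desired ))"
    else
        echo "in sync"
    fi
}

# A real controller repeats this whenever it observes a change through the API server.
reconcile 3 1   # prints "create 2"
reconcile 3 3   # prints "in sync"
```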

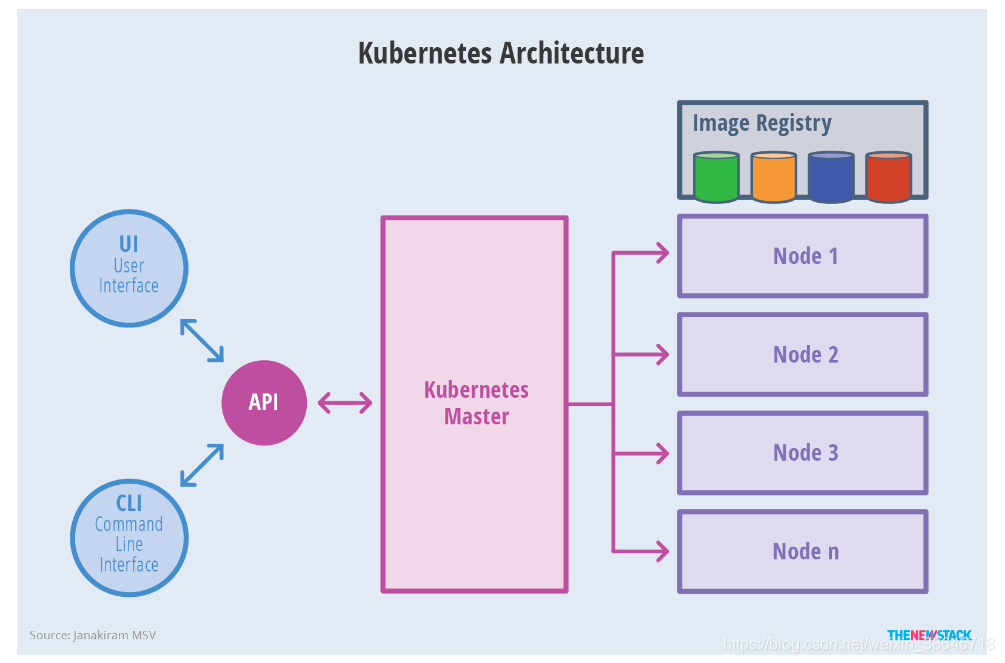

The architecture of the Kubernetes Master:

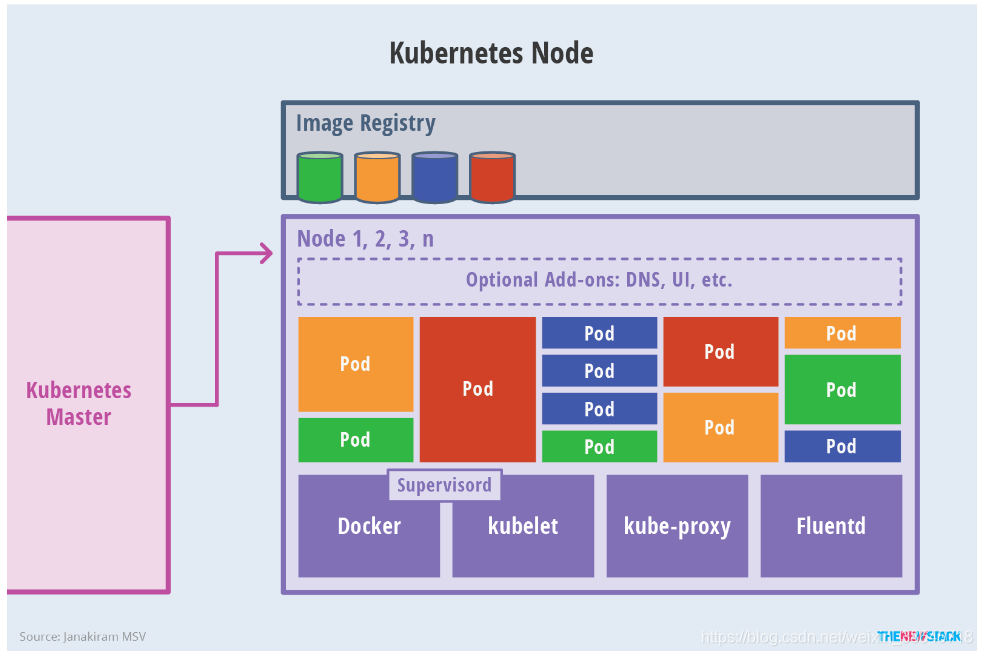

Node

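A Node runs the Pods that the Master assigns to it. When a Node goes down, Pods managed by a controller (for example a Deployment) are recreated on other Nodes.

Every Node runs the Node components: kubelet, kube-proxy and a container runtime.

kubelet: watches the Pods assigned to its Node and manages their lifecycle, working closely with the Master to maintain all containers on the Node and provide the basic cluster-management functions there. Nodes interact with the Master components through the kubelet, so the kubelet can be thought of as the Master's agent on each Node. In essence, it makes the actual state of the Pods match their desired state.

kube-proxy: the key component that implements Service communication and load balancing; it forwards requests sent to a Service to the backend Pods.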
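In iptables mode, kube-proxy spreads Service traffic over the endpoints with a chain of rules that use the iptables `statistic` match in random mode: for n endpoints, rule i (counting from 0) matches with probability 1/(n-i), and the last rule has no probability, so it always matches. Each endpoint therefore receives a 1/n share. The sketch below only computes those probabilities; it does not generate real iptables rules, and kube-proxy itself writes them with more decimal places.

```bash
#!/bin/bash
# Print the per-rule match probability kube-proxy (iptables mode) uses
# for a Service with n endpoints. The last rule always matches.
endpoint_probabilities() {
    local n=$1 i
    for (( i = 0; i < n; i++ )); do
        if (( i < n - 1 )); then
            awk -v d=$(( n - i )) 'BEGIN { printf "%.5f\n", 1 / d }'
        else
            echo "1 (fallthrough)"
        fi
    done
}

# prints 0.33333, 0.50000 and "1 (fallthrough)" on separate lines
endpoint_probabilities 3
```

With 3 endpoints the first gets 1/3, the second 2/3 x 1/2 = 1/3, and the third the remaining 1/3.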

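Container runtime: the software that actually runs the containers. The kubelet talks to it through the Container Runtime Interface (CRI); common runtimes are Docker (through dockershim, deprecated in v1.20 and removed in v1.24), containerd and CRI-O. rkt, which Kubernetes once supported, has been discontinued.

Node architecture:

User containers are usually not run on the Master node; the Node (worker) nodes are the ones that actually run the workloads.

Overall architecture:

Abstracted architecture:

Besides the core components, several add-ons are recommended:

- kube-dns: provides DNS for the whole cluster (CoreDNS has replaced it as the default since v1.11)

- Ingress Controller: provides external entry points for Services

- Heapster: resource monitoring (retired; metrics-server is its replacement)

- Dashboard: a web GUI

- Federation: clusters spanning multiple availability zones (later superseded by KubeFed)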

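II. How requests reach Pods in a Kubernetes environment

A request first passes through the firewall to the load balancer, which forwards it to a NodePort on one of the nodes. The NodePort belongs to a Service, and the Service forwards the request to one of its backend Pods, which it selects by their labels (label selector).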
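The last hop, where a Service picks its backend Pods by label, can be illustrated with a small Bash function. It is a sketch only: the `name key=value,key=value` input format is an assumption made for this example, and in a real cluster the selection is done by Kubernetes' endpoints controllers, not by a script.

```bash
#!/bin/bash
# Print the pods whose labels contain every key=value pair of the selector.
# Usage: select_pods <selector> < pods.txt   (each line: "<pod-name> <k=v,k=v,...>")
select_pods() {
    local selector=$1 name labels pair ok
    local -a pairs
    IFS=',' read -ra pairs <<< "$selector"
    while read -r name labels; do
        ok=1
        for pair in "${pairs[@]}"; do
            # ",$labels," guards against partial matches such as app=web vs app=web2
            [[ ",$labels," == *",$pair,"* ]] || { ok=0; break; }
        done
        if (( ok )); then echo "$name"; fi
    done
}

printf '%s\n' "web-1 app=web,tier=frontend" "web-2 app=web,tier=frontend" "db-1 app=db" |
    select_pods "app=web"   # prints web-1 and web-2
```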

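III. The kubeadm init workflow

kubeadm init bootstraps a Kubernetes control-plane node by executing the following steps:

- Runs a series of pre-flight checks to validate the system state before making changes. Some checks only trigger warnings; others are considered errors and make kubeadm exit until the problem is corrected or the user specifies --ignore-preflight-errors=<list-of-errors>.

- Generates a self-signed CA to set up identities for each component in the cluster. The user can provide their own CA certificate and/or key by placing it in the certificate directory configured via --cert-dir (/etc/kubernetes/pki by default). The API server certificate gets additional SAN entries for any --apiserver-cert-extra-sans arguments, lowercased if necessary.

- Writes kubeconfig files to /etc/kubernetes/ for the kubelet, the controller manager and the scheduler to connect to the API server, each with its own identity, plus a standalone kubeconfig file named admin.conf for administration.

- Generates static Pod manifests for the API server, controller manager and scheduler. If no external etcd is provided, an additional static Pod manifest is generated for etcd. The static Pod manifests are written to /etc/kubernetes/manifests; the kubelet watches this directory for Pods to create on startup. Once the control-plane Pods are up and running, kubeadm init continues.

- Applies labels and taints to the control-plane node so that no other workloads run on it.

- Generates the token that other nodes can later use to register themselves with the control plane. As described in the kubeadm token documentation, the user can optionally provide a token with --token.

- Makes all the configuration needed for nodes to join the cluster through the mechanisms described in the Bootstrap Tokens and TLS bootstrapping documents:

  - Creates a ConfigMap holding the information required to join the cluster and sets up the related RBAC access rules.

  - Lets bootstrap tokens access the CSR signing API.

  - Configures automatic approval of new CSR requests.

  See kubeadm join for more information.

- Installs a DNS server (CoreDNS) and the kube-proxy add-on through the API server. In Kubernetes 1.11 and later, CoreDNS is the default DNS server. Note that although the DNS server is deployed, it is not scheduled until a CNI plugin is installed.

Warning: kube-dns support in kubeadm was deprecated in v1.18 and removed in v1.21.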
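kubeadm bootstrap tokens have the documented form `[a-z0-9]{6}.[a-z0-9]{16}`: a 6-character token ID, a dot, and a 16-character secret. The sketch below generates and validates strings of that shape for illustration only; in practice you would use `kubeadm token generate` or `kubeadm token create`. The helper names are hypothetical.

```bash
#!/bin/bash
# Print $1 random characters from [a-z0-9]
random_chars() {
    LC_ALL=C tr -dc 'a-z0-9' < /dev/urandom | head -c "$1"
}

# Generate a string in bootstrap-token format: <6 chars>.<16 chars>
gen_token() {
    echo "$(random_chars 6).$(random_chars 16)"
}

# Return 0 if the argument has the bootstrap-token format
is_valid_token() {
    local re='^[a-z0-9]{6}\.[a-z0-9]{16}$'
    [[ $1 =~ $re ]]
}

token=$(gen_token)
echo "$token"
is_valid_token "$token" && echo "valid"
```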

IV. Deploying k8s (based on kubeadm)

1. Basic environment preparation

Note: on every host, disable SELinux and the firewall.
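A minimal sketch of these two steps, assuming CentOS-style paths (`/etc/selinux/config`, firewalld) or Ubuntu's ufw. The `disable_selinux_config` and `disable_firewall` helpers are hypothetical; the config-file change takes full effect after a reboot, while `setenforce 0` switches SELinux to permissive mode immediately.

```bash
#!/bin/bash
# Set SELINUX=disabled in the given SELinux config file (default: /etc/selinux/config)
disable_selinux_config() {
    local conf=${1:-/etc/selinux/config}
    [[ -f $conf ]] || return 0          # e.g. Ubuntu without SELinux installed
    sed -ri 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Stop and disable whichever firewall front end is present
disable_firewall() {
    if command -v firewall-cmd &> /dev/null; then
        systemctl disable --now firewalld      # CentOS
    elif command -v ufw &> /dev/null; then
        ufw disable                            # Ubuntu
    fi
}

# Run as root on each host:
#   setenforce 0 2> /dev/null
#   disable_selinux_config
#   disable_firewall
```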

2. Installing and configuring keepalived

Install and configure keepalived on node 1 (k8s-ha1):

root@k8s-ha1:~# cat install_keepalived_for_ha1.sh

#!/bin/bash

#

#******************************************************************************

#Author: zhanghui

#QQ: 19661891

#Date: 2021-03-31

#FileName: install_keepalived.sh

#URL: www.cnblogs.com/neteagles

#Description: install_keepalived for centos 7/8 & ubuntu 18.04/20.04

#Copyright (C): 2021 All rights reserved

#******************************************************************************

SRC_DIR=/usr/local/src

COLOR="echo -e \\033[01;31m"

END='\033[0m'

KEEPALIVED_URL=https://keepalived.org/software/

KEEPALIVED_FILE=keepalived-2.2.2.tar.gz

KEEPALIVED_INSTALL_DIR=/apps/keepalived

CPUS=`lscpu |awk '/^CPU\(s\)/{print $2}'`

NET_NAME=`ip a |awk -F"[: ]" '/^2/{print $3}'`

STATE=MASTER

PRIORITY=100

VIP=10.0.0.188

os(){

if grep -Eqi "CentOS" /etc/issue || grep -Eq "CentOS" /etc/*-release;then

rpm -q redhat-lsb-core &> /dev/null || { ${COLOR}"Installing the lsb_release tool"${END};yum -y install redhat-lsb-core &> /dev/null; }

fi

OS_ID=`lsb_release -is`

OS_RELEASE_VERSION=`lsb_release -rs |awk -F'.' '{print $1}'`

}

check_file (){

cd ${SRC_DIR}

if [ ${OS_ID} == "CentOS" ] &> /dev/null;then

rpm -q wget &> /dev/null || yum -y install wget &> /dev/null

fi

if [ ! -e ${KEEPALIVED_FILE} ];then

${COLOR}"Missing file ${KEEPALIVED_FILE}"${END}

${COLOR}'Downloading the keepalived source package'${END}

wget ${KEEPALIVED_URL}${KEEPALIVED_FILE} || { ${COLOR}"Failed to download the keepalived source package"${END}; exit 1; }

else

${COLOR}"Required files are ready"${END}

fi

}

install_keepalived(){

${COLOR}"Installing keepalived"${END}

${COLOR}"Installing keepalived build dependencies"${END}

if [[ ${OS_RELEASE_VERSION} == 8 ]] &> /dev/null;then

cat > /etc/yum.repos.d/PowerTools.repo <<-EOF

[PowerTools]

name=PowerTools

baseurl=https://mirrors.aliyun.com/centos/8/PowerTools/x86_64/os/

https://mirrors.huaweicloud.com/centos/8/PowerTools/x86_64/os/

https://mirrors.cloud.tencent.com/centos/8/PowerTools/x86_64/os/

https://mirrors.tuna.tsinghua.edu.cn/centos/8/PowerTools/x86_64/os/

http://mirrors.163.com/centos/8/PowerTools/x86_64/os/

http://mirrors.sohu.com/centos/8/PowerTools/x86_64/os/

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

EOF

yum -y install make gcc ipvsadm autoconf automake openssl-devel libnl3-devel iptables-devel ipset-devel file-devel net-snmp-devel glib2-devel pcre2-devel libnftnl-devel libmnl-devel systemd-devel &> /dev/null

elif [[ ${OS_RELEASE_VERSION} == 7 ]] &> /dev/null;then

yum -y install make gcc libnfnetlink-devel libnfnetlink ipvsadm libnl libnl-devel libnl3 libnl3-devel lm_sensors-libs net-snmp-agent-libs net-snmp-libs openssh-server openssh-clients openssl openssl-devel automake iproute &> /dev/null

elif [[ ${OS_RELEASE_VERSION} == 20 ]] &> /dev/null;then

apt update &> /dev/null;apt -y install make gcc ipvsadm build-essential pkg-config automake autoconf libipset-dev libnl-3-dev libnl-genl-3-dev libssl-dev libxtables-dev libip4tc-dev libip6tc-dev libipset-dev libmagic-dev libsnmp-dev libglib2.0-dev libpcre2-dev libnftnl-dev libmnl-dev libsystemd-dev

else

apt update &> /dev/null;apt -y install make gcc ipvsadm build-essential pkg-config automake autoconf iptables-dev libipset-dev libnl-3-dev libnl-genl-3-dev libssl-dev libxtables-dev libip4tc-dev libip6tc-dev libipset-dev libmagic-dev libsnmp-dev libglib2.0-dev libpcre2-dev libnftnl-dev libmnl-dev libsystemd-dev &> /dev/null

fi

tar xf ${KEEPALIVED_FILE}

KEEPALIVED_DIR=`echo ${KEEPALIVED_FILE} | sed -nr 's/^(.*[0-9]).*/\1/p'`

cd ${KEEPALIVED_DIR}

./configure --prefix=${KEEPALIVED_INSTALL_DIR} --disable-fwmark

make -j $CPUS && make install

[ $? -eq 0 ] && $COLOR"keepalived built and installed successfully"$END || { $COLOR"keepalived build/install failed, exiting!"$END;exit 1; }

[ -d /etc/keepalived ] || mkdir -p /etc/keepalived &> /dev/null

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state ${STATE}

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority ${PRIORITY}

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

${VIP} dev eth0 label eth0:1

}

}

EOF

cp ./keepalived/keepalived.service /lib/systemd/system/

echo "PATH=${KEEPALIVED_INSTALL_DIR}/sbin:${PATH}" > /etc/profile.d/keepalived.sh

systemctl daemon-reload

systemctl enable --now keepalived &> /dev/null

systemctl is-active keepalived &> /dev/null || { ${COLOR}"keepalived failed to start, exiting!"${END} ; exit 1; }

${COLOR}"keepalived installation complete"${END}

}

main(){

os

check_file

install_keepalived

}

main

root@k8s-ha1:/usr/local/src# bash install_keepalived_for_ha1.sh

[C:\~]$ ping 10.0.0.188

Pinging 10.0.0.188 with 32 bytes of data:

Reply from 10.0.0.188: bytes=32 time<1ms TTL=64

Reply from 10.0.0.188: bytes=32 time<1ms TTL=64

Reply from 10.0.0.188: bytes=32 time<1ms TTL=64

Reply from 10.0.0.188: bytes=32 time<1ms TTL=64

Ping statistics for 10.0.0.188:

Packets: Sent = 4, Received = 4, Lost = 0 (0% loss),

Approximate round trip times in milli-seconds:

Minimum = 0ms, Maximum = 0ms, Average = 0ms

root@k8s-ha1:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:b9:92:79 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.104/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet 10.0.0.188/32 scope global eth0:0 # the VIP is visible here

valid_lft forever preferred_lft forever

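Install and configure keepalived on node 2 (k8s-ha2). The script is the same as on ha1 except for STATE=BACKUP and PRIORITY=80: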

root@k8s-ha2:~# cat install_keepalived_for_ha2.sh

#!/bin/bash

#

#******************************************************************************

#Author: zhanghui

#QQ: 19661891

#Date: 2021-03-31

#FileName: install_keepalived.sh

#URL: www.cnblogs.com/neteagles

#Description: install_keepalived for centos 7/8 & ubuntu 18.04/20.04

#Copyright (C): 2021 All rights reserved

#******************************************************************************

SRC_DIR=/usr/local/src

COLOR="echo -e \\033[01;31m"

END='\033[0m'

KEEPALIVED_URL=https://keepalived.org/software/

KEEPALIVED_FILE=keepalived-2.2.2.tar.gz

KEEPALIVED_INSTALL_DIR=/apps/keepalived

CPUS=`lscpu |awk '/^CPU\(s\)/{print $2}'`

NET_NAME=`ip a |awk -F"[: ]" '/^2/{print $3}'`

STATE=BACKUP

PRIORITY=80

VIP=10.0.0.188

os(){

if grep -Eqi "CentOS" /etc/issue || grep -Eq "CentOS" /etc/*-release;then

rpm -q redhat-lsb-core &> /dev/null || { ${COLOR}"Installing the lsb_release tool"${END};yum -y install redhat-lsb-core &> /dev/null; }

fi

OS_ID=`lsb_release -is`

OS_RELEASE_VERSION=`lsb_release -rs |awk -F'.' '{print $1}'`

}

check_file (){

cd ${SRC_DIR}

if [ ${OS_ID} == "CentOS" ] &> /dev/null;then

rpm -q wget &> /dev/null || yum -y install wget &> /dev/null

fi

if [ ! -e ${KEEPALIVED_FILE} ];then

${COLOR}"Missing file ${KEEPALIVED_FILE}"${END}

${COLOR}'Downloading the keepalived source package'${END}

wget ${KEEPALIVED_URL}${KEEPALIVED_FILE} || { ${COLOR}"Failed to download the keepalived source package"${END}; exit 1; }

else

${COLOR}"Required files are ready"${END}

fi

}

install_keepalived(){

${COLOR}"Installing keepalived"${END}

${COLOR}"Installing keepalived build dependencies"${END}

if [[ ${OS_RELEASE_VERSION} == 8 ]] &> /dev/null;then

cat > /etc/yum.repos.d/PowerTools.repo <<-EOF

[PowerTools]

name=PowerTools

baseurl=https://mirrors.aliyun.com/centos/8/PowerTools/x86_64/os/

https://mirrors.huaweicloud.com/centos/8/PowerTools/x86_64/os/

https://mirrors.cloud.tencent.com/centos/8/PowerTools/x86_64/os/

https://mirrors.tuna.tsinghua.edu.cn/centos/8/PowerTools/x86_64/os/

http://mirrors.163.com/centos/8/PowerTools/x86_64/os/

http://mirrors.sohu.com/centos/8/PowerTools/x86_64/os/

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

EOF

yum -y install make gcc ipvsadm autoconf automake openssl-devel libnl3-devel iptables-devel ipset-devel file-devel net-snmp-devel glib2-devel pcre2-devel libnftnl-devel libmnl-devel systemd-devel &> /dev/null

elif [[ ${OS_RELEASE_VERSION} == 7 ]] &> /dev/null;then

yum -y install make gcc libnfnetlink-devel libnfnetlink ipvsadm libnl libnl-devel libnl3 libnl3-devel lm_sensors-libs net-snmp-agent-libs net-snmp-libs openssh-server openssh-clients openssl openssl-devel automake iproute &> /dev/null

elif [[ ${OS_RELEASE_VERSION} == 20 ]] &> /dev/null;then

apt update &> /dev/null;apt -y install make gcc ipvsadm build-essential pkg-config automake autoconf libipset-dev libnl-3-dev libnl-genl-3-dev libssl-dev libxtables-dev libip4tc-dev libip6tc-dev libipset-dev libmagic-dev libsnmp-dev libglib2.0-dev libpcre2-dev libnftnl-dev libmnl-dev libsystemd-dev

else

apt update &> /dev/null;apt -y install make gcc ipvsadm build-essential pkg-config automake autoconf iptables-dev libipset-dev libnl-3-dev libnl-genl-3-dev libssl-dev libxtables-dev libip4tc-dev libip6tc-dev libipset-dev libmagic-dev libsnmp-dev libglib2.0-dev libpcre2-dev libnftnl-dev libmnl-dev libsystemd-dev &> /dev/null

fi

tar xf ${KEEPALIVED_FILE}

KEEPALIVED_DIR=`echo ${KEEPALIVED_FILE} | sed -nr 's/^(.*[0-9]).*/\1/p'`

cd ${KEEPALIVED_DIR}

./configure --prefix=${KEEPALIVED_INSTALL_DIR} --disable-fwmark

make -j $CPUS && make install

[ $? -eq 0 ] && $COLOR"keepalived built and installed successfully"$END || { $COLOR"keepalived build/install failed, exiting!"$END;exit 1; }

[ -d /etc/keepalived ] || mkdir -p /etc/keepalived &> /dev/null

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state ${STATE}

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority ${PRIORITY}

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

${VIP} dev eth0 label eth0:1

}

}

EOF

cp ./keepalived/keepalived.service /lib/systemd/system/

echo "PATH=${KEEPALIVED_INSTALL_DIR}/sbin:${PATH}" > /etc/profile.d/keepalived.sh

systemctl daemon-reload

systemctl enable --now keepalived &> /dev/null

systemctl is-active keepalived &> /dev/null || { ${COLOR}"keepalived failed to start, exiting!"${END} ; exit 1; }

${COLOR}"keepalived installation complete"${END}

}

main(){

os

check_file

install_keepalived

}

main

root@k8s-ha2:/usr/local/src# bash install_keepalived_for_ha2.sh

root@k8s-ha2:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:d7:1e:39 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.105/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fed7:1e39/64 scope link

valid_lft forever preferred_lft forever

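Verify keepalived high availability: stop keepalived on ha1 and check that the VIP moves to ha2, then start it again and check that the VIP returns to ha1: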

root@k8s-ha1:~# systemctl stop keepalived

root@k8s-ha2:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:03:34:7d brd ff:ff:ff:ff:ff:ff

inet 10.0.0.105/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet 10.0.0.188/32 scope global eth0:1 # the VIP has floated to ha2

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe03:347d/64 scope link

valid_lft forever preferred_lft forever

root@k8s-ha1:~# systemctl start keepalived

root@k8s-ha1:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:26:08:f4 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.104/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet 10.0.0.188/32 scope global eth0:1 # the VIP has floated back to ha1

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe26:8f4/64 scope link

valid_lft forever preferred_lft forever
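The failover above follows the VRRP rule that the running node with the highest priority becomes MASTER and, because preemption is on by default, takes the VIP back when it returns. A toy sketch of that election; the hypothetical `vrrp_master` function ignores tie-breaking by IP address and all timing details:

```bash
#!/bin/bash
# Print the name of the node that would become VRRP MASTER.
# Arguments: <name:priority> for every node whose keepalived is running.
vrrp_master() {
    local best="" best_prio=-1 entry
    for entry in "$@"; do
        if (( ${entry##*:} > best_prio )); then
            best=${entry%%:*}
            best_prio=${entry##*:}
        fi
    done
    echo "${best:-none}"
}

vrrp_master k8s-ha1:100 k8s-ha2:80   # both running: prints k8s-ha1
vrrp_master k8s-ha2:80               # keepalived stopped on ha1: prints k8s-ha2
```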

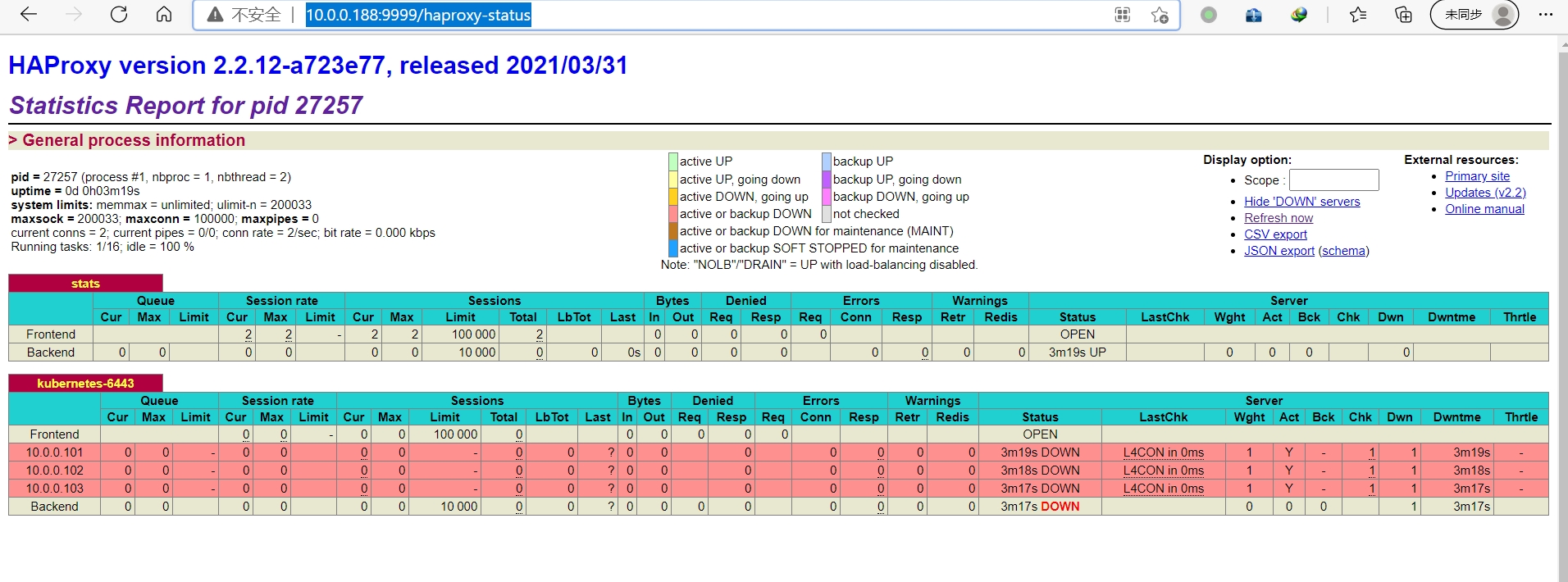

3. Installing and configuring haproxy

Install and configure haproxy on node 1 (k8s-ha1):

root@k8s-ha1:~# cat install_haproxy.sh

#!/bin/bash

#

#******************************************************************************

#Author: zhanghui

#QQ: 19661891

#Date: 2021-04-03

#FileName: install_haproxy.sh

#URL: www.cnblogs.com/neteagles

#Description: install_haproxy for centos 7/8 & ubuntu 18.04/20.04

#Copyright (C): 2021 All rights reserved

#******************************************************************************

SRC_DIR=/usr/local/src

COLOR="echo -e \\033[01;31m"

END='\033[0m'

CPUS=`lscpu |awk '/^CPU\(s\)/{print $2}'`

LUA_FILE=lua-5.4.3.tar.gz

HAPROXY_FILE=haproxy-2.2.12.tar.gz

HAPROXY_INSTALL_DIR=/apps/haproxy

STATS_AUTH_USER=admin

STATS_AUTH_PASSWORD=123456

VIP=10.0.0.188

MASTER1=10.0.0.101

MASTER2=10.0.0.102

MASTER3=10.0.0.103

os(){

if grep -Eqi "CentOS" /etc/issue || grep -Eq "CentOS" /etc/*-release;then

rpm -q redhat-lsb-core &> /dev/null || { ${COLOR}"Installing the lsb_release tool"${END};yum -y install redhat-lsb-core &> /dev/null; }

fi

OS_ID=`lsb_release -is`

}

check_file (){

cd ${SRC_DIR}

${COLOR}'Checking the haproxy source packages'${END}

if [ ! -e ${LUA_FILE} ];then

${COLOR}"Missing file ${LUA_FILE}"${END}

exit 1

elif [ ! -e ${HAPROXY_FILE} ];then

${COLOR}"Missing file ${HAPROXY_FILE}"${END}

exit 1

else

${COLOR}"Required files are ready"${END}

fi

}

install_haproxy(){

${COLOR}"Installing haproxy"${END}

${COLOR}"Installing haproxy build dependencies"${END}

if [ ${OS_ID} == "CentOS" ] &> /dev/null;then

yum -y install gcc make gcc-c++ glibc glibc-devel pcre pcre-devel openssl openssl-devel systemd-devel libtermcap-devel ncurses-devel libevent-devel readline-devel &> /dev/null

else

apt update &> /dev/null;apt -y install gcc make openssl libssl-dev libpcre3 libpcre3-dev zlib1g-dev libreadline-dev libsystemd-dev &> /dev/null

fi

tar xf ${LUA_FILE}

LUA_DIR=`echo ${LUA_FILE} | sed -nr 's/^(.*[0-9]).*/\1/p'`

cd ${LUA_DIR}

make all test

cd ${SRC_DIR}

tar xf ${HAPROXY_FILE}

HAPROXY_DIR=`echo ${HAPROXY_FILE} | sed -nr 's/^(.*[0-9]).*/\1/p'`

cd ${HAPROXY_DIR}

make -j ${CPUS} ARCH=x86_64 TARGET=linux-glibc USE_PCRE=1 USE_OPENSSL=1 USE_ZLIB=1 USE_SYSTEMD=1 USE_CPU_AFFINITY=1 USE_LUA=1 LUA_INC=/usr/local/src/${LUA_DIR}/src/ LUA_LIB=/usr/local/src/${LUA_DIR}/src/ PREFIX=${HAPROXY_INSTALL_DIR}

make install PREFIX=${HAPROXY_INSTALL_DIR}

[ $? -eq 0 ] && $COLOR"haproxy built and installed successfully"$END || { $COLOR"haproxy build/install failed, exiting!"$END;exit 1; }

cat > /lib/systemd/system/haproxy.service <<-EOF

[Unit]

Description=HAProxy Load Balancer

After=syslog.target network.target

[Service]

ExecStartPre=/usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -c -q

ExecStart=/usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /var/lib/haproxy/haproxy.pid

ExecReload=/bin/kill -USR2 \$MAINPID

[Install]

WantedBy=multi-user.target

EOF

[ -L /usr/sbin/haproxy ] || ln -s ../../apps/haproxy/sbin/haproxy /usr/sbin/ &> /dev/null

[ -d /etc/haproxy ] || mkdir /etc/haproxy &> /dev/null

[ -d /var/lib/haproxy/ ] || mkdir -p /var/lib/haproxy/ &> /dev/null

cat > /etc/haproxy/haproxy.cfg <<-EOF

global

maxconn 100000

chroot /apps/haproxy

stats socket /var/lib/haproxy/haproxy.sock mode 600 level admin

uid 99

gid 99

daemon

#nbproc 4

#cpu-map 1 0

#cpu-map 2 1

#cpu-map 3 2

#cpu-map 4 3

pidfile /var/lib/haproxy/haproxy.pid

log 127.0.0.1 local3 info

defaults

option http-keep-alive

option forwardfor

maxconn 100000

mode http

timeout connect 300000ms

timeout client 300000ms

timeout server 300000ms

listen stats

mode http

bind 0.0.0.0:9999

stats enable

log global

stats uri /haproxy-status

stats auth ${STATS_AUTH_USER}:${STATS_AUTH_PASSWORD}

listen kubernetes-6443

bind ${VIP}:6443

mode tcp

log global

server ${MASTER1} ${MASTER1}:6443 check inter 3000 fall 2 rise 5

server ${MASTER2} ${MASTER2}:6443 check inter 3000 fall 2 rise 5

server ${MASTER3} ${MASTER3}:6443 check inter 3000 fall 2 rise 5

EOF

cat >> /etc/sysctl.conf <<-EOF

net.ipv4.ip_nonlocal_bind = 1

EOF

sysctl -p &> /dev/null

echo "PATH=${HAPROXY_INSTALL_DIR}/sbin:${PATH}" > /etc/profile.d/haproxy.sh

systemctl daemon-reload

systemctl enable --now haproxy &> /dev/null

systemctl is-active haproxy &> /dev/null || { ${COLOR}"haproxy failed to start, exiting!"${END} ; exit 1; }

${COLOR}"haproxy installation complete"${END}

}

main(){

os

check_file

install_haproxy

}

main

root@k8s-ha1:/usr/local/src# bash install_haproxy.sh

root@k8s-ha1:~# ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 10.0.0.188:6443 0.0.0.0:*

LISTEN 0 128 0.0.0.0:9999 0.0.0.0:*

LISTEN 0 128 127.0.0.53%lo:53 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 127.0.0.1:6010 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 128 [::1]:6010 [::]:*

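Install and configure haproxy on node 2 (k8s-ha2) with the same script. Because the script sets net.ipv4.ip_nonlocal_bind = 1, haproxy on ha2 can bind 10.0.0.188:6443 even while the VIP is held by ha1: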

root@k8s-ha2:/usr/local/src# bash install_haproxy.sh

root@k8s-ha2:~# ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 20480 0.0.0.0:9999 0.0.0.0:*

LISTEN 0 128 127.0.0.53%lo:53 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 127.0.0.1:6010 0.0.0.0:*

LISTEN 0 20480 10.0.0.188:6443 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 128 [::1]:6010 [::]:*

Open the stats page at http://10.0.0.188:9999/haproxy-status

Username: admin  Password: 123456
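The backend lines `check inter 3000 fall 2 rise 5` mean: health-check each master's port 6443 every 3000 ms, mark it DOWN after 2 consecutive failed checks, and mark it UP again only after 5 consecutive successful checks. A failed master therefore leaves the pool after roughly 6 seconds and rejoins after roughly 15 seconds. The hypothetical `health_state` function replays a sequence of check results through that rule; it is a simplified model, not HAProxy's code.

```bash
#!/bin/bash
# Replay check results (1 = success, 0 = failure) through rise/fall rules.
# Usage: health_state <initial UP|DOWN> <rise> <fall> <result>...
health_state() {
    local state=$1 rise=$2 fall=$3 ok=0 ko=0 r
    shift 3
    for r in "$@"; do
        if (( r )); then
            ko=0; ok=$(( ok + 1 ))
            [[ $state == DOWN ]] && (( ok >= rise )) && state=UP
        else
            ok=0; ko=$(( ko + 1 ))
            [[ $state == UP ]] && (( ko >= fall )) && state=DOWN
        fi
    done
    echo "$state"
}

health_state UP 5 2 1 0 1 0     # failures never consecutive: prints UP
health_state UP 5 2 0 0         # 2 failures in a row: prints DOWN
health_state DOWN 5 2 1 1 1 1   # only 4 successes: prints DOWN
```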

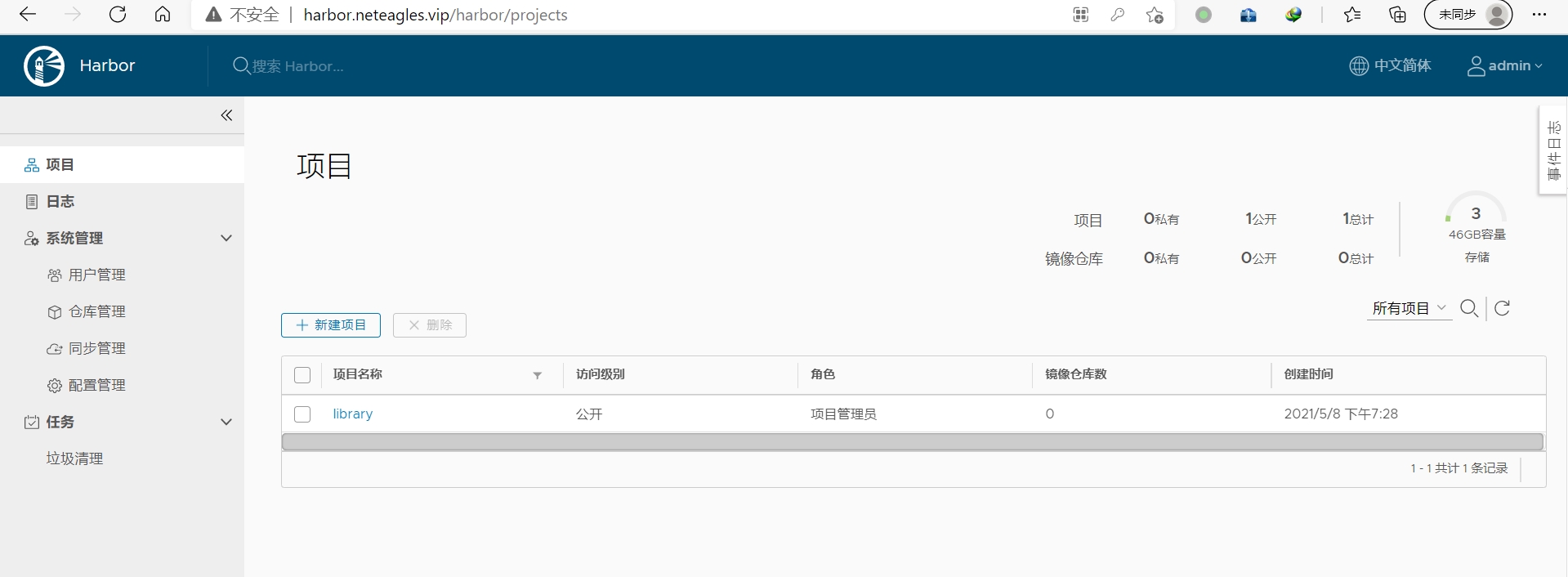

4. Installing Harbor

root@k8s-harbor:/usr/local/src# cat install_docker_compose_harbor1.8.6.sh

#!/bin/bash

#

#****************************************************************************************

#Author: zhanghui

#QQ: 19661891

#Date: 2021-04-30

#FileName: install_docker_compose_harbor1.8.6.sh

#URL: www.cnblogs.com/neteagles

#Description: install_docker_compose_harbor1.8.6 for centos 7/8 & ubuntu 18.04/20.04

#Copyright (C): 2021 All rights reserved

#****************************************************************************************

SRC_DIR=/usr/local/src

COLOR="echo -e \\033[01;31m"

END='\033[0m'

URL='https://download.docker.com/linux/static/stable/x86_64/'

DOCKER_FILE=docker-19.03.9.tgz

DOCKER_COMPOSE_FILE=docker-compose-Linux-x86_64-

DOCKER_COMPOSE_VERSION=1.27.4

HARBOR_FILE=harbor-offline-installer-v

HARBOR_VERSION=1.8.6

TAR=.tgz

HARBOR_INSTALL_DIR=/apps

IPADDR=`hostname -I|awk '{print $1}'`

HOSTNAME=harbor.neteagles.vip

HARBOR_ADMIN_PASSWORD=123456

os(){

if grep -Eqi "CentOS" /etc/issue || grep -Eq "CentOS" /etc/*-release;then

rpm -q redhat-lsb-core &> /dev/null || { ${COLOR}"Installing the lsb_release tool"${END};yum -y install redhat-lsb-core &> /dev/null; }

fi

OS_ID=`lsb_release -is`

}

check_file (){

cd ${SRC_DIR}

command -v wget &> /dev/null || { yum -y install wget || apt -y install wget; } &> /dev/null

if [ ! -e ${DOCKER_FILE} ];then

${COLOR}"Missing file ${DOCKER_FILE}"${END}

${COLOR}'Downloading the Docker binary package'${END}

wget ${URL}${DOCKER_FILE} || { ${COLOR}"Failed to download the Docker binary package"${END}; exit 1; }

fi

# Check each remaining file separately so that downloading Docker does not skip these checks

if [ ! -e ${DOCKER_COMPOSE_FILE}${DOCKER_COMPOSE_VERSION} ];then

${COLOR}"Missing file ${DOCKER_COMPOSE_FILE}${DOCKER_COMPOSE_VERSION}"${END}

exit 1

fi

if [ ! -e ${HARBOR_FILE}${HARBOR_VERSION}${TAR} ];then

${COLOR}"Missing file ${HARBOR_FILE}${HARBOR_VERSION}${TAR}"${END}

exit 1

fi

${COLOR}"Required files are ready"${END}

}

install_docker(){

tar xf ${DOCKER_FILE}

mv docker/* /usr/bin/

cat > /lib/systemd/system/docker.service <<-EOF

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

ExecStart=/usr/bin/dockerd -H unix://var/run/docker.sock

ExecReload=/bin/kill -s HUP \$MAINPID

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Uncomment TasksMax if your systemd version supports it.

# Only systemd 226 and above support this version.

#TasksMax=infinity

TimeoutStartSec=0

# set delegate yes so that systemd does not reset the cgroups of docker containers

Delegate=yes

# kill only the docker process, not all processes in the cgroup

KillMode=process

# restart the docker process if it exits prematurely

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

EOF

mkdir -p /etc/docker

tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://si7y70hh.mirror.aliyuncs.com"]

}

EOF

echo 'alias rmi="docker images -qa|xargs docker rmi -f"' >> ~/.bashrc

echo 'alias rmc="docker ps -qa|xargs docker rm -f"' >> ~/.bashrc

systemctl daemon-reload

systemctl enable --now docker &> /dev/null

systemctl is-active docker &> /dev/null && ${COLOR}"Docker service started"${END} || { ${COLOR}"Docker failed to start"${END};exit 1; }

docker version && ${COLOR}"Docker installed successfully"${END} || ${COLOR}"Docker installation failed"${END}

}

install_docker_compose(){

${COLOR}"Installing Docker Compose....."${END}

sleep 1

mv ${SRC_DIR}/${DOCKER_COMPOSE_FILE}${DOCKER_COMPOSE_VERSION} /usr/bin/docker-compose

chmod +x /usr/bin/docker-compose

docker-compose --version && ${COLOR}"Docker Compose installed"${END} || ${COLOR}"Docker Compose installation failed"${END}

}

install_harbor(){

${COLOR}"Installing Harbor....."${END}

sleep 1

[ -d ${HARBOR_INSTALL_DIR} ] || mkdir ${HARBOR_INSTALL_DIR}

tar -xvf ${SRC_DIR}/${HARBOR_FILE}${HARBOR_VERSION}${TAR} -C ${HARBOR_INSTALL_DIR}/

sed -i.bak -e 's/^hostname: .*/hostname: '''${HOSTNAME}'''/' -e 's/^harbor_admin_password: .*/harbor_admin_password: '''${HARBOR_ADMIN_PASSWORD}'''/' -e 's/^https:/#https:/' -e 's/ port: 443/ #port: 443/' -e 's@ certificate: /your/certificate/path@ #certificate: /your/certificate/path@' -e 's@ private_key: /your/private/key/path@ #private_key: /your/private/key/path@' ${HARBOR_INSTALL_DIR}/harbor/harbor.yml

if [ ${OS_ID} == "CentOS" ] &> /dev/null;then

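yum -y install python &> /dev/null || { ${COLOR}"Failed to install packages, please check the network configuration"${END}; exit 1; }

else

apt -y install python &> /dev/null || { ${COLOR}"Failed to install packages, please check the network configuration"${END}; exit 1; }

fi

${HARBOR_INSTALL_DIR}/harbor/install.sh && ${COLOR}"Harbor installation complete"${END} || ${COLOR}"Harbor installation failed"${END}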

cat > /lib/systemd/system/harbor.service <<-EOF

[Unit]

Description=Harbor

After=docker.service systemd-networkd.service systemd-resolved.service

Requires=docker.service

Documentation=http://github.com/vmware/harbor

[Service]

Type=simple

Restart=on-failure

RestartSec=5

ExecStart=/usr/bin/docker-compose -f /apps/harbor/docker-compose.yml up

ExecStop=/usr/bin/docker-compose -f /apps/harbor/docker-compose.yml down

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable harbor &>/dev/null && ${COLOR}"Harbor is enabled to start at boot"${END}

}

set_swap_limit(){

${COLOR}'Fixing the Docker "WARNING: No swap limit support" warning'${END}

chmod u+w /etc/default/grub

sed -i.bak 's/GRUB_CMDLINE_LINUX=.*/GRUB_CMDLINE_LINUX=" net.ifnames=0 cgroup_enable=memory swapaccount=1"/' /etc/default/grub

chmod u-w /etc/default/grub ;update-grub

${COLOR}"The machine will reboot automatically in 10 seconds"${END}

sleep 10

reboot

}

main(){

os

check_file

command -v dockerd &> /dev/null && ${COLOR}"Docker is already installed"${END} || install_docker

docker-compose --version &> /dev/null && ${COLOR}"Docker Compose is already installed"${END} || install_docker_compose

install_harbor

set_swap_limit

}

main

root@k8s-harbor:/usr/local/src# bash install_docker_compose_harbor1.8.6.sh

On Windows, add the following line to C:\Windows\System32\drivers\etc\hosts:

10.0.0.106 harbor.neteagles.vip

Then open http://harbor.neteagles.vip/

Username: admin  Password: 123456
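Because this Harbor instance is served over plain HTTP (the script comments out the https section of harbor.yml), Docker hosts that push to or pull from it generally need it listed under `insecure-registries` in /etc/docker/daemon.json, and each host must resolve harbor.neteagles.vip, for example through /etc/hosts. A sketch, assuming you want to keep the Aliyun mirror used by the install scripts; the `write_daemon_json` helper is hypothetical:

```bash
#!/bin/bash
# Write a daemon.json that keeps the registry mirror and trusts the HTTP Harbor.
# Usage: write_daemon_json [path]   (default: /etc/docker/daemon.json)
write_daemon_json() {
    local path=${1:-/etc/docker/daemon.json}
    mkdir -p "$(dirname "$path")"
    cat > "$path" <<'EOF'
{
  "registry-mirrors": ["https://si7y70hh.mirror.aliyuncs.com"],
  "insecure-registries": ["harbor.neteagles.vip"]
}
EOF
}

# On each master/node, as root:
#   write_daemon_json
#   grep -q harbor.neteagles.vip /etc/hosts || echo "10.0.0.106 harbor.neteagles.vip" >> /etc/hosts
#   systemctl restart docker
#   docker login harbor.neteagles.vip
```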

5. Installing Docker

Install Docker on master1, master2, master3, node1, node2 and node3:

root@k8s-master1:/usr/local/src# cat install_docker_binary.sh

#!/bin/bash

#

#****************************************************************************************

#Author: zhanghui

#QQ: 19661891

#Date: 2021-04-30

#FileName: install_docker_binary.sh

#URL: www.cnblogs.com/neteagles

#Description: install_docker_binary for centos 7/8 & ubuntu 18.04/20.04

#Copyright (C): 2021 All rights reserved

#****************************************************************************************

SRC_DIR=/usr/local/src

COLOR="echo -e \\033[01;31m"

END='\033[0m'

URL='https://download.docker.com/linux/static/stable/x86_64/'

DOCKER_FILE=docker-19.03.9.tgz

check_file (){

cd ${SRC_DIR}

command -v wget &> /dev/null || { yum -y install wget || apt -y install wget; } &> /dev/null

if [ ! -e ${DOCKER_FILE} ];then

${COLOR}"Missing file ${DOCKER_FILE}"${END}

${COLOR}'Downloading the Docker binary package'${END}

wget ${URL}${DOCKER_FILE} || { ${COLOR}"Failed to download the Docker binary package"${END}; exit 1; }

else

${COLOR}"Required files are ready"${END}

fi

}

install(){

tar xf ${DOCKER_FILE}

mv docker/* /usr/bin/

cat > /lib/systemd/system/docker.service <<-EOF

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

ExecStart=/usr/bin/dockerd -H unix://var/run/docker.sock

ExecReload=/bin/kill -s HUP \$MAINPID

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Uncomment TasksMax if your systemd version supports it.

# Only systemd 226 and above support this version.

#TasksMax=infinity

TimeoutStartSec=0

# set delegate yes so that systemd does not reset the cgroups of docker containers

Delegate=yes

# kill only the docker process, not all processes in the cgroup

KillMode=process

# restart the docker process if it exits prematurely

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

EOF

mkdir -p /etc/docker

tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://si7y70hh.mirror.aliyuncs.com"]

}

EOF

systemctl daemon-reload

systemctl enable --now docker &> /dev/null

systemctl is-active docker &> /dev/null && ${COLOR}"Docker service started"${END} || { ${COLOR}"Docker failed to start"${END};exit 1; }

docker version && ${COLOR}"Docker installed successfully"${END} || ${COLOR}"Docker installation failed"${END}

}

set_alias(){

echo 'alias rmi="docker images -qa|xargs docker rmi -f"' >> ~/.bashrc

echo 'alias rmc="docker ps -qa|xargs docker rm -f"' >> ~/.bashrc

}

set_swap_limit(){

${COLOR}'Fixing the Docker "WARNING: No swap limit support" warning'${END}

chmod u+w /etc/default/grub

sed -i.bak 's/GRUB_CMDLINE_LINUX=.*/GRUB_CMDLINE_LINUX=" net.ifnames=0 cgroup_enable=memory swapaccount=1"/' /etc/default/grub

chmod u-w /etc/default/grub ;update-grub

${COLOR}"The machine will reboot automatically in 10 seconds"${END}

sleep 10

reboot

}

main(){

check_file

install

set_alias

set_swap_limit

}

main

6. Installing kubeadm, kubelet and kubectl on all master nodes

root@k8s-master1:~# cat install_kubeadm_for_master.sh

#!/bin/bash

#

#********************************************************************

#Author: zhanghui

#QQ: 19661891

#Date: 2021-05-07

#FileName: install_kubeadm_for_master.sh

#URL: www.cnblogs.com/neteagles

#Description: install kubeadm, kubelet and kubectl on master nodes (ubuntu)

#Copyright (C): 2021 All rights reserved

#********************************************************************

COLOR="echo -e \\033[01;31m"

END='\033[0m'

VERSION=1.20.5-00

os(){

if grep -Eqi "CentOS" /etc/issue || grep -Eq "CentOS" /etc/*-release;then

rpm -q redhat-lsb-core &> /dev/null || { ${COLOR}"Installing the lsb_release tool"${END};yum -y install redhat-lsb-core &> /dev/null; }

fi

OS_ID=`lsb_release -is`

OS_RELEASE=`lsb_release -rs`

}

install_kubeadm(){

${COLOR}"Installing kubeadm dependencies"${END}

apt update &> /dev/null && apt install -y apt-transport-https &> /dev/null

curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add - &> /dev/null

echo "deb https://mirrors.tuna.tsinghua.edu.cn/kubernetes/apt kubernetes-xenial main" >> /etc/apt/sources.list.d/kubernetes.list

apt update &> /dev/null

${COLOR}"Kubeadm有以下版本"${END}

apt-cache madison kubeadm

${COLOR}"10秒后即将安装:Kubeadm-"${VERSION}"版本......"${END}

${COLOR}"如果想安装其它Kubeadm版本,请按Ctrl+c键退出,修改版本再执行"${END}

sleep 10

${COLOR}"开始安装Kubeadm"${END}

apt -y install kubelet=${VERSION} kubeadm=${VERSION} kubectl=${VERSION} &> /dev/null

${COLOR}"Kubeadm安装完成"${END}

}

images_download(){

${COLOR}"Downloading Kubeadm images"${END}

# Image tags carry no Debian package revision: 1.20.5-00 -> v1.20.5

IMAGE_TAG=v${VERSION%-*}

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:${IMAGE_TAG}

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:${IMAGE_TAG}

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:${IMAGE_TAG}

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:${IMAGE_TAG}

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0

${COLOR}"Kubeadm images downloaded"${END}

}

set_swap(){

sed -ri 's/.*swap.*/#&/' /etc/fstab

swapoff -a

${COLOR}"${OS_ID} ${OS_RELEASE} swap disabled!"${END}

}

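set_kernel(){

# Load br_netfilter so the net.bridge.* keys exist, and load it again at boot

modprobe br_netfilter

echo br_netfilter > /etc/modules-load.d/k8s.conf

# Use a drop-in file instead of overwriting /etc/sysctl.conf

cat > /etc/sysctl.d/k8s.conf <<-EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl --system &> /dev/null

${COLOR}"${OS_ID} ${OS_RELEASE} kernel parameters tuned!"${END}

}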

set_limits(){

cat >> /etc/security/limits.conf <<-EOF

root soft core unlimited

root hard core unlimited

root soft nproc 1000000

root hard nproc 1000000

root soft nofile 1000000

root hard nofile 1000000

root soft memlock 32000

root hard memlock 32000

root soft msgqueue 8192000

root hard msgqueue 8192000

EOF

${COLOR}"${OS_ID} ${OS_RELEASE} resource limits tuned!"${END}

}

main(){

os

install_kubeadm

images_download

set_swap

set_kernel

set_limits

}

main

#Reboot the system after the installation
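After the reboot, the two prerequisites this script sets up, no active swap and the bridge netfilter sysctls, can be re-checked. This is a minimal sketch with hypothetical helper names; both take the file or directory to read as an argument (normally /proc/swaps and /proc/sys) so they can also be exercised on test files.

```shell
#!/bin/bash
# swap_is_off [FILE]: FILE uses the /proc/swaps format; a header line and
# nothing else means no swap device is active (hypothetical helper)
swap_is_off() {
    local swaps=${1:-/proc/swaps}
    [ "$(wc -l < "$swaps")" -le 1 ]
}

# sysctl_is DIR KEY VALUE: compare the dotted sysctl KEY, read from DIR
# (normally /proc/sys), with VALUE (hypothetical helper)
sysctl_is() {
    local dir=$1 key=$2 want=$3 path
    path="$dir/$(printf '%s' "$key" | tr . /)"   # net.ipv4.ip_forward -> net/ipv4/ip_forward
    [ -r "$path" ] && [ "$(cat "$path")" = "$want" ]
}
```

For example: swap_is_off /proc/swaps && sysctl_is /proc/sys net.bridge.bridge-nf-call-iptables 1 && echo "prerequisites OK".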

7. Install kubeadm and kubelet on all worker nodes

#!/bin/bash

#

#********************************************************************

#Author: zhanghui

#QQ: 19661891

#Date: 2021-05-07

#FileName: install_kubeadm_for_node.sh

#URL: www.cnblogs.com/neteagles

#Description: The test script

#Copyright (C): 2021 All rights reserved

#********************************************************************

COLOR="echo -e \\033[01;31m"

END='\033[0m'

VERSION=1.20.5-00

os(){

if grep -Eqi "CentOS" /etc/issue || grep -Eq "CentOS" /etc/*-release;then

rpm -q redhat-lsb-core &> /dev/null || { ${COLOR}"Installing the lsb_release tool"${END};yum -y install redhat-lsb-core &> /dev/null; }

fi

OS_ID=`lsb_release -is`

OS_RELEASE=`lsb_release -rs`

}

install_kubeadm(){

${COLOR}"Installing Kubeadm dependencies"${END}

apt update &> /dev/null && apt install -y apt-transport-https &> /dev/null

curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add - &> /dev/null

echo "deb https://mirrors.tuna.tsinghua.edu.cn/kubernetes/apt kubernetes-xenial main" > /etc/apt/sources.list.d/kubernetes.list

apt update &> /dev/null

${COLOR}"Available Kubeadm versions:"${END}

apt-cache madison kubeadm

${COLOR}"Installing Kubeadm-"${VERSION}" in 10 seconds......"${END}

${COLOR}"To install a different Kubeadm version, press Ctrl+C to abort, change VERSION and run the script again"${END}

sleep 10

${COLOR}"Installing Kubeadm"${END}

apt -y install kubelet=${VERSION} kubeadm=${VERSION} &> /dev/null

${COLOR}"Kubeadm installed"${END}

}

set_swap(){

sed -ri 's/.*swap.*/#&/' /etc/fstab

swapoff -a

${COLOR}"${OS_ID} ${OS_RELEASE} swap disabled!"${END}

}

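set_kernel(){

# Load br_netfilter so the net.bridge.* keys exist, and load it again at boot

modprobe br_netfilter

echo br_netfilter > /etc/modules-load.d/k8s.conf

# Use a drop-in file instead of overwriting /etc/sysctl.conf

cat > /etc/sysctl.d/k8s.conf <<-EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl --system &> /dev/null

${COLOR}"${OS_ID} ${OS_RELEASE} kernel parameters tuned!"${END}

}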

set_limits(){

cat >> /etc/security/limits.conf <<-EOF

root soft core unlimited

root hard core unlimited

root soft nproc 1000000

root hard nproc 1000000

root soft nofile 1000000

root hard nofile 1000000

root soft memlock 32000

root hard memlock 32000

root soft msgqueue 8192000

root hard msgqueue 8192000

EOF

${COLOR}"${OS_ID} ${OS_RELEASE} resource limits tuned!"${END}

}

main(){

os

install_kubeadm

set_swap

set_kernel

set_limits

}

main

#Reboot the system after the installation
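The set_swap function in both scripts comments out every /etc/fstab line that merely contains the string swap, and each rerun adds another #. A stricter variant, sketched below as a hypothetical helper, only comments active entries whose third field (the filesystem type) is swap, so running it twice leaves the file unchanged. It assumes GNU sed (-r, -i) and fstab entries that do not begin with whitespace.

```shell
#!/bin/bash
# comment_swap_entries [FILE]: prefix "#" to uncommented fstab lines whose
# third field is "swap"; commented lines are skipped, so a second run is a
# no-op (hypothetical helper, FILE defaults to /etc/fstab)
comment_swap_entries() {
    local fstab=${1:-/etc/fstab}
    sed -ri 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]].*)?)$/#\1/' "$fstab"
}
```

Unlike the original pattern, this also leaves alone an ext4 mount whose path happens to contain "swap".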

8. Initialize the highly available master

root@k8s-master1:~# kubeadm init --apiserver-advertise-address=10.0.0.101 --control-plane-endpoint=10.0.0.188 --apiserver-bind-port=6443 --kubernetes-version=v1.20.5 --pod-network-cidr=10.100.0.0/16 --service-cidr=10.200.0.0/16 --service-dns-domain=neteagles.local --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --ignore-preflight-errors=swap

[init] Using Kubernetes version: v1.20.5

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master1.example.local kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.neteagles.local] and IPs [10.200.0.1 10.0.0.101 10.0.0.188]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master1.example.local localhost] and IPs [10.0.0.101 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master1.example.local localhost] and IPs [10.0.0.101 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[apiclient] All control plane components are healthy after 77.536995 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.20" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master1.example.local as control-plane by adding the labels "node-role.kubernetes.io/master=''" and "node-role.kubernetes.io/control-plane='' (deprecated)"

[mark-control-plane] Marking the node k8s-master1.example.local as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 9enh01.q35dsb6rdin4hbuj

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

#The output asks you to deploy a pod network add-on

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

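#Use the following command to add masters; it only works after the certificates have been uploaded and a --certificate-key is appended (step 10)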

kubeadm join 10.0.0.188:6443 --token 9enh01.q35dsb6rdin4hbuj \

--discovery-token-ca-cert-hash sha256:e3bb6181f455ad018d2e13b4881ab35fc85332f4e020149aed7cac21023e2760 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

#Use the following command to add worker nodes

kubeadm join 10.0.0.188:6443 --token 9enh01.q35dsb6rdin4hbuj \

--discovery-token-ca-cert-hash sha256:e3bb6181f455ad018d2e13b4881ab35fc85332f4e020149aed7cac21023e2760
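The token printed above expires after 24 hours by default; kubeadm token create --print-join-command prints a fresh worker join command. The --discovery-token-ca-cert-hash value is the SHA-256 digest of the cluster CA's public key, so it can also be recomputed on a master from /etc/kubernetes/pki/ca.crt. The sketch below wraps the openssl pipeline shown in the kubeadm join documentation in a hypothetical ca_cert_hash function; it assumes an RSA CA key (the kubeadm default) and openssl on PATH.

```shell
#!/bin/bash
# ca_cert_hash [CERT]: print "sha256:<hex>" for the public key of CERT, the
# format used by kubeadm join --discovery-token-ca-cert-hash.
# Assumes an RSA key; CERT defaults to the kubeadm CA (hypothetical helper).
ca_cert_hash() {
    local cert=${1:-/etc/kubernetes/pki/ca.crt} hex
    hex=$(openssl x509 -pubkey -noout -in "$cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex | sed 's/^.* //')
    # sed keeps the last field of "(stdin)= <hex>" / "SHA2-256(stdin)= <hex>"
    [ -n "$hex" ] && echo "sha256:$hex"
}
```

On k8s-master1, ca_cert_hash should print the same value as the join commands above.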

9. Install the Calico network add-on

root@k8s-master1:~# mkdir -p $HOME/.kube

root@k8s-master1:~# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

root@k8s-master1:~# sudo chown $(id -u):$(id -g) $HOME/.kube/config

#Deploy the Calico network add-on

root@k8s-master1:~# wget https://docs.projectcalico.org/v3.14/getting-started/kubernetes/installation/hosted/kubernetes-datastore/calico-networking/1.7/calico.yaml

root@k8s-master1:~# vim calico.yaml

#Change the following (the CIDR must match the --pod-network-cidr given to kubeadm init)

# - name: CALICO_IPV4POOL_CIDR

#   value: "192.168.0.0/16"

#to

- name: CALICO_IPV4POOL_CIDR

  value: "10.100.0.0/16"

:wq
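The same edit can be made without vim. This sketch assumes the two commented lines in calico.yaml look exactly like the ones above (a "# " prefix, and "#   value:" with three spaces); set_calico_pool_cidr is a hypothetical name, and grep -A1 CALICO_IPV4POOL_CIDR calico.yaml is a quick way to check the result.

```shell
#!/bin/bash
# set_calico_pool_cidr FILE CIDR: uncomment the CALICO_IPV4POOL_CIDR entry and
# set its value, keeping the original indentation (hypothetical helper; assumes
# the commented layout "# - name: ..." / "#   value: \"192.168.0.0/16\"")
set_calico_pool_cidr() {
    local file=$1 cidr=$2
    sed -i \
        -e 's@^\([[:space:]]*\)# \(- name: CALICO_IPV4POOL_CIDR\)@\1\2@' \
        -e 's@^\([[:space:]]*\)#   value: "192\.168\.0\.0/16"@\1  value: "'"$cidr"'"@' \
        "$file"
}
```

For example: set_calico_pool_cidr calico.yaml 10.100.0.0/16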

root@k8s-master1:~# kubectl apply -f calico.yaml

configmap/calico-config created

Warning: apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

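10. On the current master, upload the certificates to get a certificate key for adding new control-plane nodes

#The uploaded certificates (Secret "kubeadm-certs") are deleted after two hours; rerun this command if the key has expired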

root@k8s-master1:~# kubeadm init phase upload-certs --upload-certs

I0607 19:41:57.980206 21674 version.go:254] remote version is much newer: v1.21.1; falling back to: stable-1.20

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

ac019c228f4b7dc7d2358092eef57dbab00ab480670657f43d1438b97ef7a9b7
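The control-plane join command is simply the worker join command plus --control-plane and --certificate-key. The hypothetical helper below assembles it and rejects anything that is not a 64-character hex key, the format shown above.

```shell
#!/bin/bash
# cp_join_cmd JOIN_CMD CERT_KEY: turn a worker join command into a
# control-plane join command (hypothetical helper)
cp_join_cmd() {
    local join=$1 key=$2
    # the certificate key printed by upload-certs is 64 hex characters
    if ! [[ $key =~ ^[0-9a-f]{64}$ ]]; then
        echo "invalid certificate key: $key" >&2
        return 1
    fi
    # drop one trailing space, if any, before appending the flags
    printf '%s --control-plane --certificate-key %s\n' "${join% }" "$key"
}
```

For example, assuming the key is the last line of the upload-certs output as it is above: cp_join_cmd "$(kubeadm token create --print-join-command)" "$(kubeadm init phase upload-certs --upload-certs | tail -n 1)"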

11. Add master nodes

#Add master2

root@k8s-master2:~# kubeadm join 10.0.0.188:6443 --token 9enh01.q35dsb6rdin4hbuj \

--discovery-token-ca-cert-hash sha256:e3bb6181f455ad018d2e13b4881ab35fc85332f4e020149aed7cac21023e2760 \

--control-plane --certificate-key ac019c228f4b7dc7d2358092eef57dbab00ab480670657f43d1438b97ef7a9b7

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master2.example.local localhost] and IPs [10.0.0.102 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master2.example.local localhost] and IPs [10.0.0.102 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master2.example.local kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.neteagles.local] and IPs [10.200.0.1 10.0.0.102 10.0.0.188]

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[check-etcd] Checking that the etcd cluster is healthy

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[etcd] Announced new etcd member joining to the existing etcd cluster

[etcd] Creating static Pod manifest for "etcd"

[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[mark-control-plane] Marking the node k8s-master2.example.local as control-plane by adding the labels "node-role.kubernetes.io/master=''" and "node-role.kubernetes.io/control-plane='' (deprecated)"

[mark-control-plane] Marking the node k8s-master2.example.local as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

#Add master3

root@k8s-master3:~# kubeadm join 10.0.0.188:6443 --token 9enh01.q35dsb6rdin4hbuj \

--discovery-token-ca-cert-hash sha256:e3bb6181f455ad018d2e13b4881ab35fc85332f4e020149aed7cac21023e2760 \

--control-plane --certificate-key ac019c228f4b7dc7d2358092eef57dbab00ab480670657f43d1438b97ef7a9b7

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master3.example.local localhost] and IPs [10.0.0.103 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master3.example.local localhost] and IPs [10.0.0.103 127.0.0.1 ::1]

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master3.example.local kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.neteagles.local] and IPs [10.200.0.1 10.0.0.103 10.0.0.188]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[check-etcd] Checking that the etcd cluster is healthy

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[etcd] Announced new etcd member joining to the existing etcd cluster

[etcd] Creating static Pod manifest for "etcd"

[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[mark-control-plane] Marking the node k8s-master3.example.local as control-plane by adding the labels "node-role.kubernetes.io/master=''" and "node-role.kubernetes.io/control-plane='' (deprecated)"

[mark-control-plane] Marking the node k8s-master3.example.local as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

12. Add worker nodes

#Add node1

root@k8s-node1:~# kubeadm join 10.0.0.188:6443 --token 9enh01.q35dsb6rdin4hbuj \

--discovery-token-ca-cert-hash sha256:e3bb6181f455ad018d2e13b4881ab35fc85332f4e020149aed7cac21023e2760

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

#Add node2

root@k8s-node2:~# kubeadm join 10.0.0.188:6443 --token 9enh01.q35dsb6rdin4hbuj \

--discovery-token-ca-cert-hash sha256:e3bb6181f455ad018d2e13b4881ab35fc85332f4e020149aed7cac21023e2760

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

#Add node3

root@k8s-node3:~# kubeadm join 10.0.0.188:6443 --token 9enh01.q35dsb6rdin4hbuj \

--discovery-token-ca-cert-hash sha256:e3bb6181f455ad018d2e13b4881ab35fc85332f4e020149aed7cac21023e2760

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

13. Verify node status

root@k8s-master1:~# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master1.example.local Ready control-plane,master 37m v1.20.5

k8s-master2.example.local Ready control-plane,master 15m v1.20.5

k8s-master3.example.local Ready control-plane,master 14m v1.20.5

k8s-node1.example.local Ready <none> 12m v1.20.5

k8s-node2.example.local Ready <none> 11m v1.20.5

k8s-node3.example.local Ready <none> 108s v1.20.5
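In a script, this check can be automated by parsing the same output. This is a minimal sketch with a hypothetical helper name; note that a cordoned node shows Ready,SchedulingDisabled and is reported as well.

```shell
#!/bin/bash
# not_ready_nodes: read "kubectl get node" output on stdin, print every node
# whose STATUS is not exactly "Ready", and return 1 if there is any
not_ready_nodes() {
    awk 'NR > 1 && NF && $2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}
```

For example: kubectl get node | not_ready_nodes && echo "all nodes Ready"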

14. Verify certificate signing request (CSR) status

root@k8s-master1:~# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

csr-4fg8m 18m kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:9enh01 Approved,Issued

csr-h8xk4 14m kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:9enh01 Approved,Issued

csr-jbznh 15m kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:9enh01 Approved,Issued

csr-qdvr2 4m20s kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:9enh01 Approved,Issued

csr-tzf4p 17m kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:9enh01 Approved,Issued
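All requests here are Approved,Issued. If a request stays Pending, it can be listed and, after checking its REQUESTOR, approved by hand with kubectl certificate approve. A minimal sketch with a hypothetical helper that reads the condition from the last column:

```shell
#!/bin/bash
# pending_csrs: read "kubectl get csr" output on stdin and print the names of
# requests whose CONDITION (last column) is "Pending" (hypothetical helper)
pending_csrs() {
    awk 'NR > 1 && NF && $NF == "Pending" { print $1 }'
}
```

For example, only when every listed requestor is trusted: kubectl get csr | pending_csrs | xargs -r kubectl certificate approve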

15. Deploy the Dashboard

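#Run this script on all masters and nodes: it maps the Harbor hostname in /etc/hosts and lets Docker use Harbor as an insecure registry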

#!/bin/bash

#

#********************************************************************

#Author: zhanghui

#QQ: 19661891

#Date: 2021-05-10

#FileName: set_harbor_hostname_service.sh

#URL: www.cnblogs.com/neteagles

#Description: The test script

#Copyright (C): 2021 All rights reserved

#********************************************************************

HOSTNAME=harbor.neteagles.vip

echo "10.0.0.106 ${HOSTNAME}" >> /etc/hosts

sed -i.bak 's@ExecStart=.*@ExecStart=/usr/bin/dockerd -H unix:///var/run/docker.sock --insecure-registry '${HOSTNAME}'@' /lib/systemd/system/docker.service

systemctl daemon-reload

systemctl restart docker
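Because the script appends to /etc/hosts with >>, running it twice leaves a duplicate entry. A hypothetical idempotent replacement for that echo line, sketched below, appends the mapping only when the hostname is not already present on a non-comment line.

```shell
#!/bin/bash
# add_host_entry IP NAME [FILE]: append "IP NAME" unless NAME is already
# mapped in FILE (default /etc/hosts); hypothetical helper
add_host_entry() {
    local ip=$1 name=$2 file=${3:-/etc/hosts}
    # look for NAME as a whole field on non-comment lines
    if awk -v n="$name" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
        return 0
    fi
    echo "$ip $name" >> "$file"
}
```

For example: add_host_entry 10.0.0.106 harbor.neteagles.vip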

root@k8s-master1:~# docker pull kubernetesui/dashboard:v2.2.0

v2.2.0: Pulling from kubernetesui/dashboard

7cccffac5ec6: Pull complete

7f06704bb864: Pull complete

Digest: sha256:148991563e374c83b75e8c51bca75f512d4f006ddc791e96a91f1c7420b60bd9

Status: Downloaded newer image for kubernetesui/dashboard:v2.2.0

docker.io/kubernetesui/dashboard:v2.2.0

In the Harbor web UI at harbor.neteagles.vip, first create a project named hf

root@k8s-master1:~# docker login harbor.neteagles.vip

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

root@k8s-master1:~# docker tag kubernetesui/dashboard:v2.2.0 harbor.neteagles.vip/hf/dashboard:v2.2.0

root@k8s-master1:~# docker push harbor.neteagles.vip/hf/dashboard:v2.2.0

The push refers to repository [harbor.neteagles.vip/hf/dashboard]

8ba672b77b05: Pushed

77842fd4992b: Pushed

v2.2.0: digest: sha256:b9217b835cdcb33853f50a9cf13617ee0f8b887c508c5ac5110720de154914e4 size: 736

root@k8s-master1:~# docker pull kubernetesui/metrics-scraper:v1.0.6

v1.0.6: Pulling from kubernetesui/metrics-scraper

47a33a630fb7: Pull complete

62498b3018cb: Pull complete

Digest: sha256:1f977343873ed0e2efd4916a6b2f3075f310ff6fe42ee098f54fc58aa7a28ab7

Status: Downloaded newer image for kubernetesui/metrics-scraper:v1.0.6

docker.io/kubernetesui/metrics-scraper:v1.0.6

root@k8s-master1:~# docker tag kubernetesui/metrics-scraper:v1.0.6 harbor.neteagles.vip/hf/metrics-scraper:v1.0.6

root@k8s-master1:~# docker push harbor.neteagles.vip/hf/metrics-scraper:v1.0.6

The push refers to repository [harbor.neteagles.vip/hf/metrics-scraper]

a652c34ae13a: Pushed

6de384dd3099: Pushed

v1.0.6: digest: sha256:c09adb7f46e1a9b5b0bde058713c5cb47e9e7f647d38a37027cd94ef558f0612 size: 736

root@k8s-master1:~# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.2.0/aio/deploy/recommended.yaml

root@k8s-master1:~# vim recommended.yaml

#Change the following

image: kubernetesui/dashboard:v2.2.0

image: kubernetesui/metrics-scraper:v1.0.6

#to

image: harbor.neteagles.vip/hf/dashboard:v2.2.0

image: harbor.neteagles.vip/hf/metrics-scraper:v1.0.6

:wq
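The image edit can also be scripted. This sketch assumes every image in recommended.yaml starts with kubernetesui/, which holds for the two images listed above; use_private_registry is a hypothetical name, and REGISTRY must not contain @ or &, which are special in the sed expression.

```shell
#!/bin/bash
# use_private_registry FILE REGISTRY: rewrite "image: kubernetesui/<name>:<tag>"
# to "image: REGISTRY/<name>:<tag>", keeping indentation (hypothetical helper)
use_private_registry() {
    local file=$1 registry=$2
    sed -ri "s@^([[:space:]]*image:[[:space:]]*)kubernetesui/@\1${registry}/@" "$file"
}
```

For example: use_private_registry recommended.yaml harbor.neteagles.vip/hf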

root@k8s-master1:~# vim recommended.yaml

#kubernetes-dashboard Service (lines 39-46 of recommended.yaml v2.2.0)

spec:

  type: NodePort #add this line

  ports:

    - port: 443

      targetPort: 8443

      nodePort: 30005 #add this line

  selector:

    k8s-app: kubernetes-dashboard

:wq

root@k8s-master1:~# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

root@k8s-master1:~# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-6dfcd885bf-grqw9 1/1 Running 2 63m

kube-system calico-node-9rc68 1/1 Running 1 46m

kube-system calico-node-9s5xq 1/1 Running 1 50m

kube-system calico-node-9x8sz 1/1 Running 1 47m

kube-system calico-node-nhh9v 1/1 Running 2 63m

kube-system calico-node-qn7gv 1/1 Running 1 49m

kube-system calico-node-r6ndl 1/1 Running 1 36m

kube-system coredns-54d67798b7-9dz9c 1/1 Running 2 72m

kube-system coredns-54d67798b7-d4rpn 1/1 Running 2 72m

kube-system etcd-k8s-master1.example.local 1/1 Running 2 72m

kube-system etcd-k8s-master2.example.local 1/1 Running 1 50m

kube-system etcd-k8s-master3.example.local 1/1 Running 1 48m

kube-system kube-apiserver-k8s-master1.example.local 1/1 Running 3 72m

kube-system kube-apiserver-k8s-master2.example.local 1/1 Running 1 50m

kube-system kube-apiserver-k8s-master3.example.local 1/1 Running 1 48m

kube-system kube-controller-manager-k8s-master1.example.local 1/1 Running 3 72m

kube-system kube-controller-manager-k8s-master2.example.local 1/1 Running 1 50m

kube-system kube-controller-manager-k8s-master3.example.local 1/1 Running 1 48m

kube-system kube-proxy-27pt2 1/1 Running 1 50m

kube-system kube-proxy-5tx7f 1/1 Running 1 47m

kube-system kube-proxy-frzf4 1/1 Running 1 46m

kube-system kube-proxy-qxzff 1/1 Running 2 49m

kube-system kube-proxy-stvzj 1/1 Running 1 36m

kube-system kube-proxy-w7pjr 1/1 Running 3 72m

kube-system kube-scheduler-k8s-master1.example.local 1/1 Running 4 72m

kube-system kube-scheduler-k8s-master2.example.local 1/1 Running 1 50m

kube-system kube-scheduler-k8s-master3.example.local 1/1 Running 1 48m

kubernetes-dashboard dashboard-metrics-scraper-ccf6d4787-vft8f 1/1 Running 0 36s

kubernetes-dashboard kubernetes-dashboard-76ffb47755-n4pvv 1/1 Running 0 36s
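Scanning a long pod list by eye is error-prone. The helper below is a minimal sketch that reads `kubectl get pod -A` output and prints every pod whose STATUS is not `Running` or whose READY count is incomplete. It assumes the default column layout shown above (NAMESPACE, NAME, READY, STATUS, ...). The function name `not_ready_pods` is hypothetical.

```shell
# not_ready_pods (hypothetical helper): reads `kubectl get pod -A` output on
# stdin and prints namespace/name for every pod that is not fully ready.
# Assumes the default columns: NAMESPACE NAME READY STATUS RESTARTS AGE.
not_ready_pods() {
  awk 'NR > 1 && NF >= 4 {
    split($3, ready, "/")              # READY column, e.g. "1/1"
    if ($4 != "Running" || ready[1] != ready[2])
      print $1 "/" $2
  }'
}
```

Running `kubectl get pod -A | not_ready_pods` prints nothing when every pod is healthy, as in the listing above. Pods of finished Jobs (STATUS `Completed`) would also be reported, so filter them out if the cluster runs Jobs.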

root@k8s-master1:~# ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 127.0.0.1:44257 0.0.0.0:*

LISTEN 0 128 127.0.0.1:10248 0.0.0.0:*

LISTEN 0 128 127.0.0.1:10249 0.0.0.0:*

LISTEN 0 128 127.0.0.1:9099 0.0.0.0:*

LISTEN 0 128 127.0.0.1:2379 0.0.0.0:*

LISTEN 0 128 10.0.0.101:2379 0.0.0.0:*

LISTEN 0 128 10.0.0.101:2380 0.0.0.0:*

LISTEN 0 128 127.0.0.1:2381 0.0.0.0:*

LISTEN 0 128 127.0.0.1:10257 0.0.0.0:*

LISTEN 0 128 127.0.0.1:10259 0.0.0.0:*

LISTEN 0 8 0.0.0.0:179 0.0.0.0:*

LISTEN 0 128 0.0.0.0:30005 0.0.0.0:* # port 30005 (the dashboard NodePort) is listening on every node

LISTEN 0 128 127.0.0.53%lo:53 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 127.0.0.1:6010 0.0.0.0:*

LISTEN 0 128 *:10250 *:*

LISTEN 0 128 *:6443 *:*

LISTEN 0 128 *:10256 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 128 [::1]:6010 [::]:*
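The claim that port 30005 listens on every node can be checked node by node. A minimal sketch, assuming the `ss -ntl` layout above (local address in the fourth column). The function name `is_listening` is hypothetical.

```shell
# is_listening PORT (hypothetical helper): reads `ss -ntl` output on stdin and
# exits 0 if some LISTEN socket uses PORT as its local port.
is_listening() {
  awk -v port="$1" '
    $1 == "LISTEN" {
      n = split($4, parts, ":")        # "0.0.0.0:30005", "[::]:22", "*:6443"
      if (parts[n] == port) found = 1
    }
    END { exit (found ? 0 : 1) }'
}
```

For example, `ss -ntl | is_listening 30005 && echo "30005 is listening"` can be run on each node (for instance over ssh) to confirm that the NodePort is open cluster-wide.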

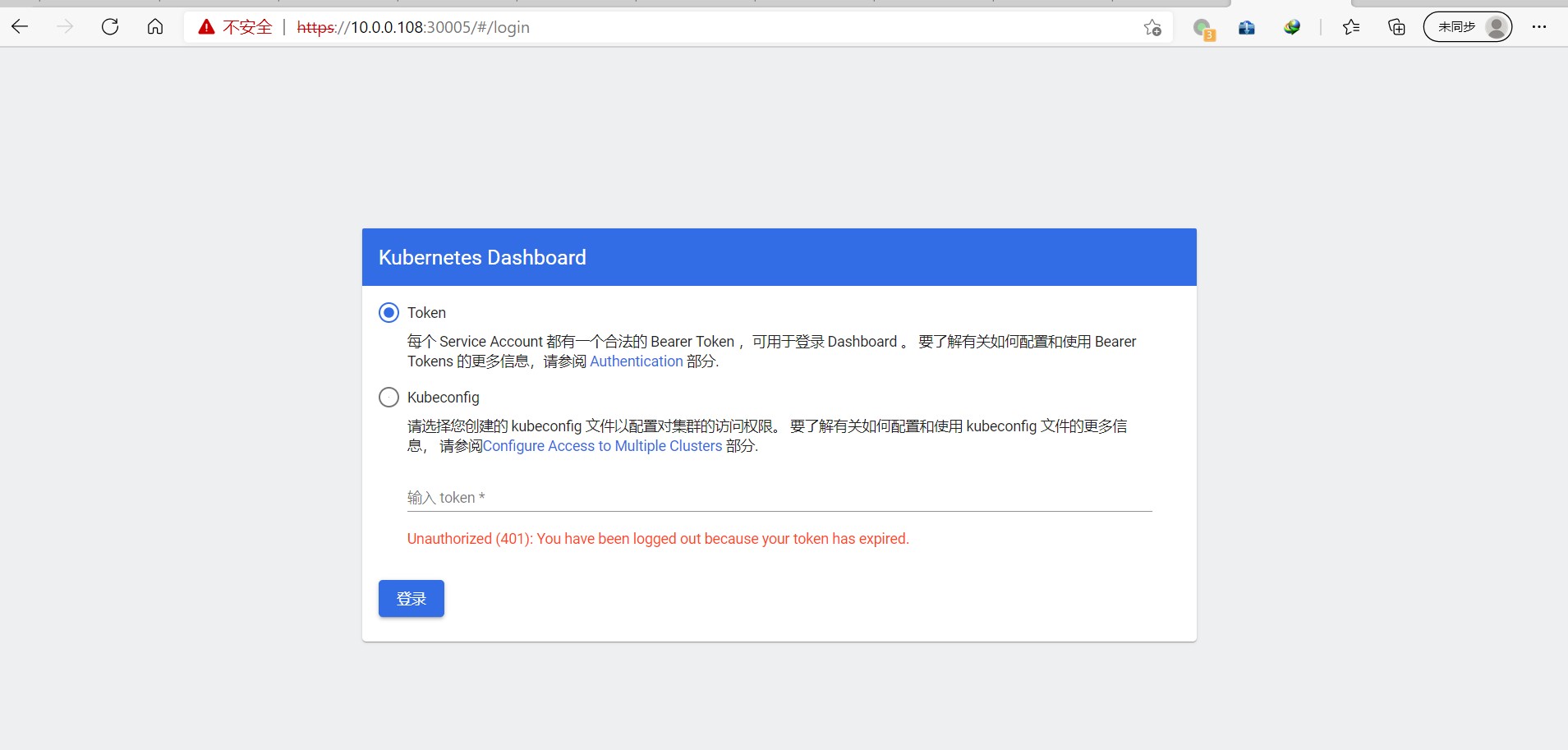

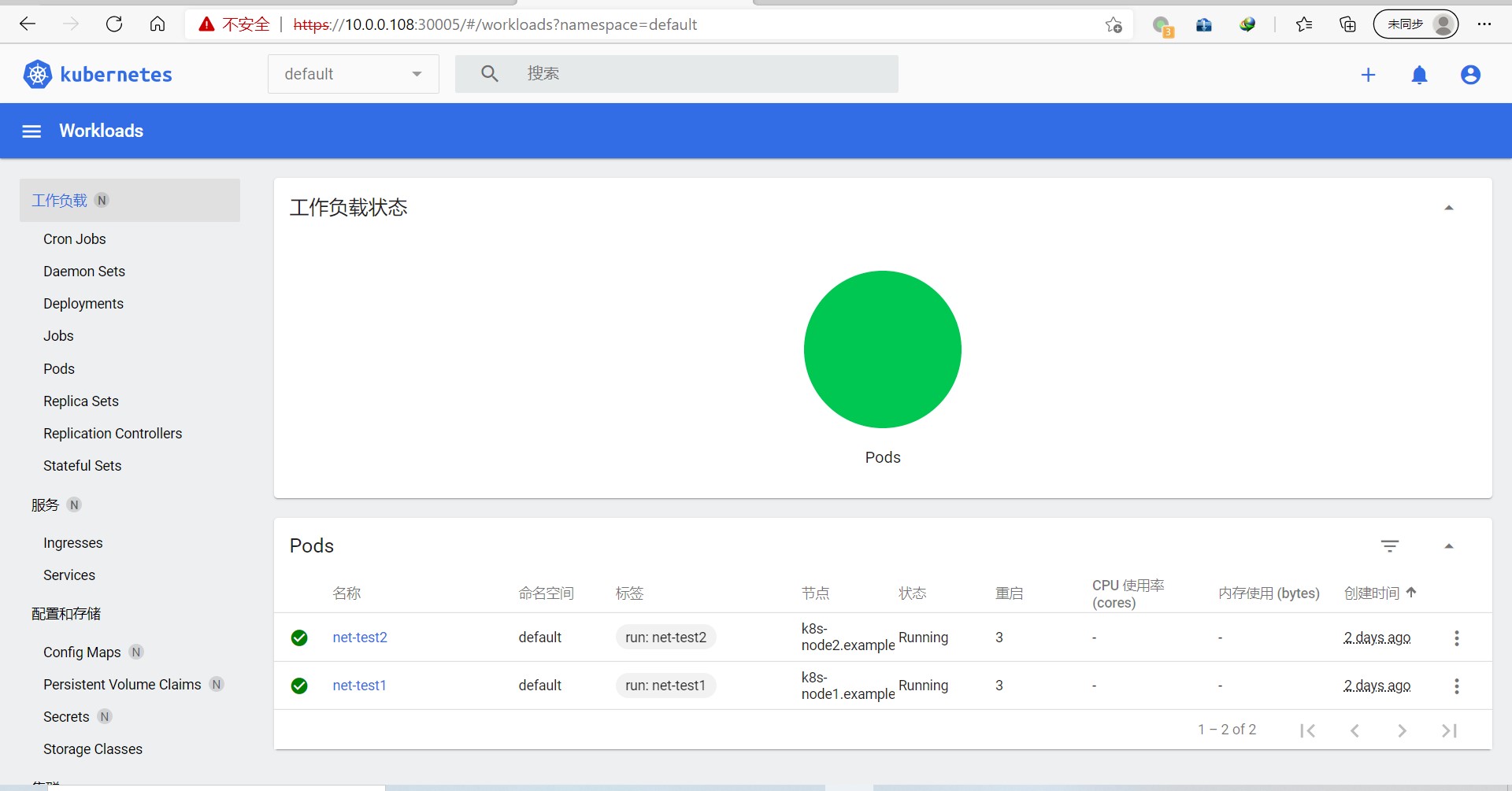

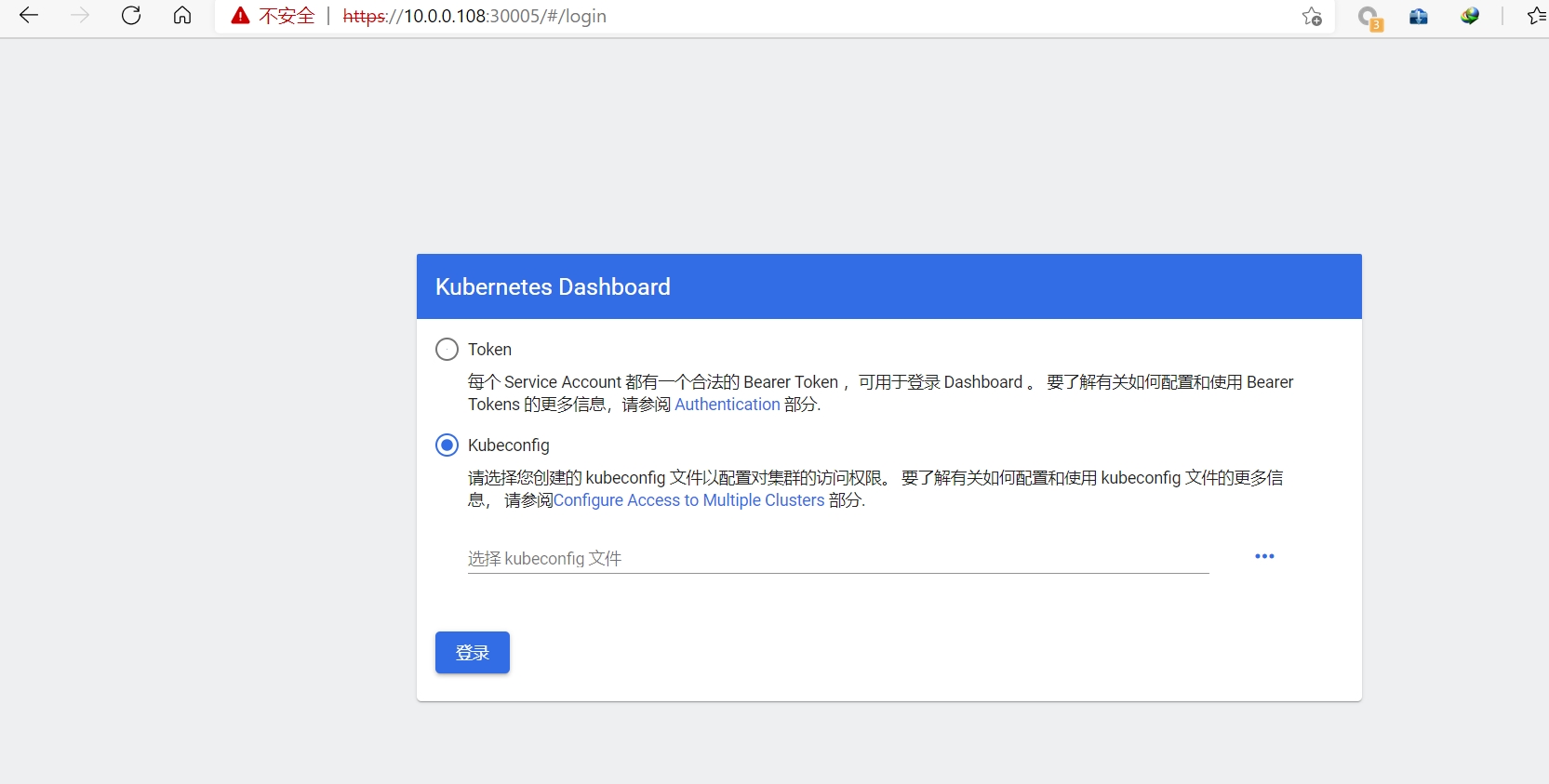

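16. Access the dashboard

Open 10.0.0.108:30005 in a browser (the NodePort listens on every node, so any node IP works).

The page asks for HTTPS, so open https://10.0.0.108:30005 instead.

Obtain a login token: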

root@k8s-master1:~# mkdir dashboard-2.2.0

root@k8s-master1:~# mv recommended.yaml dashboard-2.2.0/

root@k8s-master1:~# cd dashboard-2.2.0/

root@k8s-master1:~/dashboard-2.2.0# vim admin-user.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

:wq

root@k8s-master1:~/dashboard-2.2.0# kubectl apply -f admin-user.yaml

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

root@k8s-master1:~/dashboard-2.2.0# kubectl get secret -A |grep admin

kubernetes-dashboard admin-user-token-sfsnw kubernetes.io/service-account-token 3 38s

root@k8s-master1:~/dashboard-2.2.0# kubectl describe secret admin-user-token-sfsnw -n kubernetes-dashboard

Name: admin-user-token-sfsnw

Namespace: kubernetes-dashboard

Labels: <none>

Annotations:  kubernetes.io/service-account.name: admin-user
              kubernetes.io/service-account.uid: 54ff5070-778b-490e-83f8-4e27f63919f6

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1066 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkVtZE1yT2puOGtDbmxpRTk5ejF5YTJ1TDVNbTJJUjhtWUs1Wm9feWQyTDAifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXNmc253Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI1NGZmNTA3MC03NzhiLTQ5MGUtODNmOC00ZTI3ZjYzOTE5ZjYiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.UteOOUZJ-K6dgkL-BQaxQUfTboLOlEKLqbWDVJwLD6-5WZo6tC4wq1Ovn-Zu5xwIYXr3lm1i9_OrS0rkWVsv5Iof1bXarMfLuD-PbdCAwX43zOTm91nKvPEI1MEbnVmcz5AlHLM6Q4t4SklHQ8v6iH5pGKESTcXRCBTm3WzuHBCf0sUzhLos4nQY10LvaKAs3BU31INY04IxvvV7wph8sX5bomWFjBjYgpYNkXhWx5BL8QvELvASRfzDSFIX79_IrjD6Jk4H2CQRb_ndaj34oUNtwu5V6254fbF0jntQGMEcqSWon-uKlBtgmJefxlD9ptYspvBXv1aSNg7WmRwg-Q
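The token is a JWT, so its middle segment can be decoded to confirm which service account it belongs to before it is used. A minimal sketch, assuming GNU coreutils `base64`. `jwt_payload` is a hypothetical helper name, and it only decodes the payload; it does not verify the signature.

```shell
# jwt_payload TOKEN (hypothetical helper): prints the JSON payload of a JWT.
# JWT segments are base64url without '=' padding, so map the URL-safe
# characters back to standard base64 and pad to a multiple of 4 first.
# No signature verification is done.
jwt_payload() {
  local p
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  while [ $(( ${#p} % 4 )) -ne 0 ]; do
    p="$p="
  done
  printf '%s' "$p" | base64 -d
}
```

For example, `TOKEN=$(kubectl -n kubernetes-dashboard get secret admin-user-token-sfsnw -o jsonpath='{.data.token}' | base64 -d)` extracts just the token, and `jwt_payload "$TOKEN"` should then show `"sub":"system:serviceaccount:kubernetes-dashboard:admin-user"`. Token Secrets such as `admin-user-token-sfsnw` are only created automatically on older releases like the 1.20 cluster used here; from Kubernetes 1.24 on, `kubectl -n kubernetes-dashboard create token admin-user` is the usual way to get a token.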

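Log in to the dashboard with a kubeconfig file: copy the admin kubeconfig, add the token above to its user entry (at the same indentation as client-certificate-data and client-key-data), then select the file under the Kubeconfig option on the dashboard login page.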

root@k8s-master1:~/dashboard-2.2.0# cd

root@k8s-master1:~# cp .kube/config /opt/kubeconfig

root@k8s-master1:~# vim /opt/kubeconfig

    token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkVtZE1yT2puOGtDbmxpRTk5ejF5YTJ1TDVNbTJJUjhtWUs1Wm9feWQyTDAifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXNmc253Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI1NGZmNTA3MC03NzhiLTQ5MGUtODNmOC00ZTI3ZjYzOTE5ZjYiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.UteOOUZJ-K6dgkL-BQaxQUfTboLOlEKLqbWDVJwLD6-5WZo6tC4wq1Ovn-Zu5xwIYXr3lm1i9_OrS0rkWVsv5Iof1bXarMfLuD-PbdCAwX43zOTm91nKvPEI1MEbnVmcz5AlHLM6Q4t4SklHQ8v6iH5pGKESTcXRCBTm3WzuHBCf0sUzhLos4nQY10LvaKAs3BU31INY04IxvvV7wph8sX5bomWFjBjYgpYNkXhWx5BL8QvELvASRfzDSFIX79_IrjD6Jk4H2CQRb_ndaj34oUNtwu5V6254fbF0jntQGMEcqSWon-uKlBtgmJefxlD9ptYspvBXv1aSNg7WmRwg-Q

:wq
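The manual vim edit can also be scripted. A minimal sketch, assuming a kubeadm-generated kubeconfig in which the credentials sit under a bare `user:` key. `add_kubeconfig_token` is a hypothetical helper that simply inserts a `token:` line one indentation level below that key.

```shell
# add_kubeconfig_token FILE TOKEN (hypothetical helper): inserts
# "token: TOKEN" under the first bare "user:" key of FILE. Lines such as
# "user: kubernetes-admin" in the contexts section are not matched.
add_kubeconfig_token() {
  local file=$1 token=$2
  awk -v tok="$token" '
    { print }
    !done && /^[ ]*user:[ ]*$/ {
      match($0, /^[ ]*/)                 # indentation of the "user:" key
      print substr($0, 1, RLENGTH) "  token: " tok
      done = 1
    }' "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}
```

Usage: `add_kubeconfig_token /opt/kubeconfig "$TOKEN"`. Where kubectl is available, `kubectl config set-credentials kubernetes-admin --token="$TOKEN" --kubeconfig=/opt/kubeconfig` achieves the same without text processing (kubernetes-admin is the user name kubeadm writes into admin.conf).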

17. Upgrade Kubernetes

# Install the target kubeadm version on every master

root@k8s-master1:~# apt-cache madison kubeadm

kubeadm | 1.21.1-00 | https://mirrors.tuna.tsinghua.edu.cn/kubernetes/apt kubernetes-xenial/main amd64 Packages

kubeadm | 1.21.0-00 | https://mirrors.tuna.tsinghua.edu.cn/kubernetes/apt kubernetes-xenial/main amd64 Packages

kubeadm | 1.20.7-00 | https://mirrors.tuna.tsinghua.edu.cn/kubernetes/apt kubernetes-xenial/main amd64 Packages

kubeadm | 1.20.6-00 | https://mirrors.tuna.tsinghua.edu.cn/kubernetes/apt kubernetes-xenial/main amd64 Packages

kubeadm | 1.20.5-00 | https://mirrors.tuna.tsinghua.edu.cn/kubernetes/apt kubernetes-xenial/main amd64 Packages

kubeadm | 1.20.4-00 | https://mirrors.tuna.tsinghua.edu.cn/kubernetes/apt kubernetes-xenial/main amd64 Packages

kubeadm | 1.20.2-00 | https://mirrors.tuna.tsinghua.edu.cn/kubernetes/apt kubernetes-xenial/main amd64 Packages

kubeadm | 1.20.1-00 | https://mirrors.tuna.tsinghua.edu.cn/kubernetes/apt kubernetes-xenial/main amd64 Packages

kubeadm | 1.20.0-00 | https://mirrors.tuna.tsinghua.edu.cn/kubernetes/apt kubernetes-xenial/main amd64 Packages

...
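Picking the newest patch release of a minor series from the `apt-cache madison` listing can be scripted. A minimal sketch, assuming the `package | version | source` layout above and GNU `sort -V`. `latest_patch` is a hypothetical helper name.

```shell
# latest_patch SERIES (hypothetical helper): reads `apt-cache madison` output
# on stdin and prints the highest package version in SERIES, e.g. 1.20.
latest_patch() {
  awk -F'|' -v series="$1" '
    { gsub(/ /, "", $2) }                # version column, e.g. "1.20.7-00"
    index($2, series ".") == 1 { print $2 }' | sort -V | tail -n 1
}
```

For example, `apt -y install kubeadm="$(apt-cache madison kubeadm | latest_patch 1.20)"` on each master selects 1.20.7-00 from the listing above. If the packages were pinned with `apt-mark hold`, run `apt-mark unhold kubeadm` first and hold it again afterwards.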

root@k8s-master1:~# apt -y install kubeadm=1.20.7-00

root@k8s-master2:~# apt -y install kubeadm=1.20.7-00

root@k8s-master3:~# apt -y install kubeadm=1.20.7-00

root@k8s-master1:~# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.7", GitCommit:"132a687512d7fb058d0f5890f07d4121b3f0a2e2", GitTreeState:"clean", BuildDate:"2021-05-12T12:38:16Z", GoVersion:"go1.15.12", Compiler:"gc", Platform:"linux/amd64"}
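The upgrade plan requires kubeadm itself to be at the target version before `kubeadm upgrade apply` is run. A minimal pre-check sketch, assuming GNU `sort -V`. `version_at_least` is a hypothetical helper.

```shell
# version_at_least HAVE WANT (hypothetical helper): succeeds when version
# HAVE >= WANT. A leading "v" is ignored, so "v1.20.7" and "1.20.7" compare equal.
version_at_least() {
  local have=${1#v} want=${2#v}
  [ "$(printf '%s\n%s\n' "$want" "$have" | sort -V | head -n 1)" = "$want" ]
}
```

For example, `version_at_least "$(kubeadm version -o short)" v1.20.7 || echo "install kubeadm 1.20.7 on this master first"` catches a master whose kubeadm is still older than the target.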

# View the upgrade plan

root@k8s-master1:~# kubeadm upgrade plan

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks.

[upgrade] Running cluster health checks

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.20.5

[upgrade/versions] kubeadm version: v1.20.6

I0607 21:20:03.532587 130121 version.go:254] remote version is much newer: v1.21.1; falling back to: stable-1.20

[upgrade/versions] Latest stable version: v1.20.7

[upgrade/versions] Latest stable version: v1.20.7

[upgrade/versions] Latest version in the v1.20 series: v1.20.7

[upgrade/versions] Latest version in the v1.20 series: v1.20.7

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT   CURRENT       AVAILABLE
kubelet     6 x v1.20.5   v1.20.7

Upgrade to the latest version in the v1.20 series:

COMPONENT                 CURRENT    AVAILABLE
kube-apiserver            v1.20.5    v1.20.7
kube-controller-manager   v1.20.5    v1.20.7
kube-scheduler            v1.20.5    v1.20.7
kube-proxy                v1.20.5    v1.20.7
CoreDNS                   1.7.0      1.7.0
etcd                      3.4.13-0   3.4.13-0

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.20.7

Note: Before you can perform this upgrade, you have to update kubeadm to v1.20.7.

_____________________________________________________________________

The table below shows the current state of component configs as understood by this version of kubeadm.

Configs that have a "yes" mark in the "MANUAL UPGRADE REQUIRED" column require manual config upgrade or

resetting to kubeadm defaults before a successful upgrade can be performed. The version to manually

upgrade to is denoted in the "PREFERRED VERSION" column.

API GROUP CURRENT VERSION PREFERRED VERSION MANUAL UPGRADE REQUIRED