Flink CDC 实时同步 MySQL

- Flink CDC 实时同步 MySQL

- Flink CDC 实时同步 Oracle

准备工作

-

MySQL 数据库(version:

5.7.25),注意,MySQL 数据库版本必须大于 5.6,否则不支持。 -

开启 MySQL 的 log-bin:

[mysqld] # Binary Logging. log-bin=mysql-bin server-id=1 -

Flink (version :

1.18.1) -

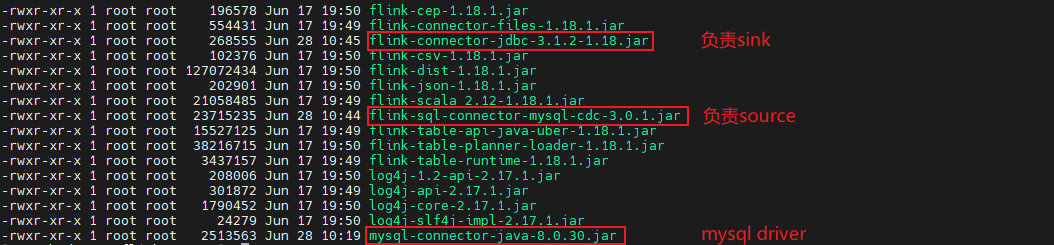

添加以下 jar 包到

flink/lib:flink-sql-connector-mysql-cdc.jar负责 sourceflink-connector-jdbc.jar负责 sinkmysql-connector-java.jarsink 端所需的 jdbc 驱动

-

准备待同步源端表

源端表:

cdc_test_source.player_sourceCREATE TABLE `player_source` ( `id` int(11), `name` varchar(255), PRIMARY KEY (`id`) ); -

准备目标端表

在目标端创建和源端同构的表:

cdc_test_target.player_targetCREATE TABLE `player_target` ( `id` int(11), `name` varchar(255), PRIMARY KEY (`id`) );

SQL-Client 实现数据同步

使用 Flink 的 sql-client 实现数据同步

启动 Flink

./bin/start-cluster.sh

启动 sql-client

sudo ./bin/sql-client.sh

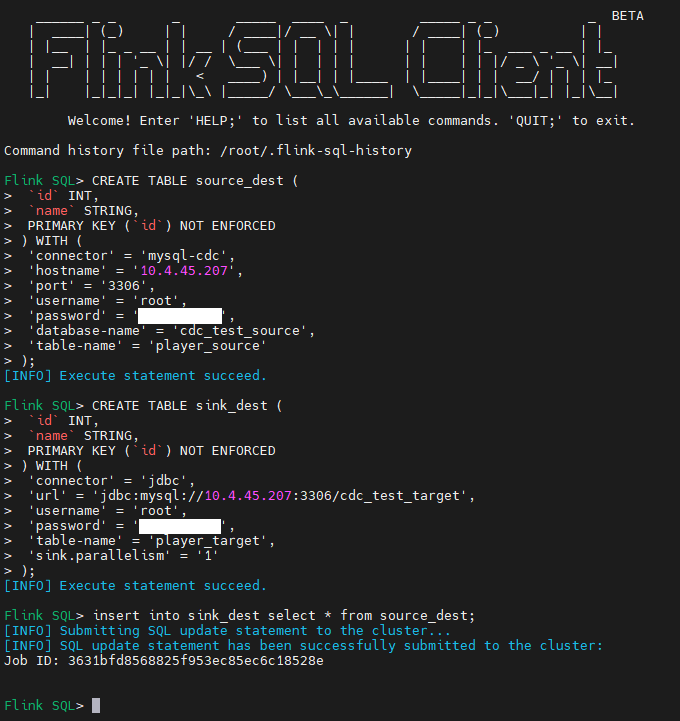

数据同步任务创建

-

创建源端表对应的逻辑表,参照 MySQL 字段类型和 FlinkSQL 字段类型的映射关系

CREATE TABLE source_dest ( `id` INT , `name` STRING, PRIMARY KEY (`id`) NOT ENFORCED ) WITH ( 'connector' = 'mysql-cdc', 'hostname' = '127.0.0.1', 'port' = '3306', 'username' = 'username', 'password' = 'password', 'database-name' = 'cdc_test_source', 'table-name' = 'player_source' ); -

创建目标端表对应的逻辑表

CREATE TABLE sink_dest ( `id` INT, `name` STRING, PRIMARY KEY (`id`) NOT ENFORCED ) WITH ( 'connector' = 'jdbc', 'url' = 'jdbc:mysql://127.0.0.1:3306/cdc_test_target', 'username' = 'username', 'password' = 'password', 'table-name' = 'player_target', 'sink.parallelism' = '1' ); -

建立源端逻辑表和目标端逻辑表的连接

INSERT INTO sink_dest (id, name) SELECT id, name FROM source_dest; -

任务创建成功:

-

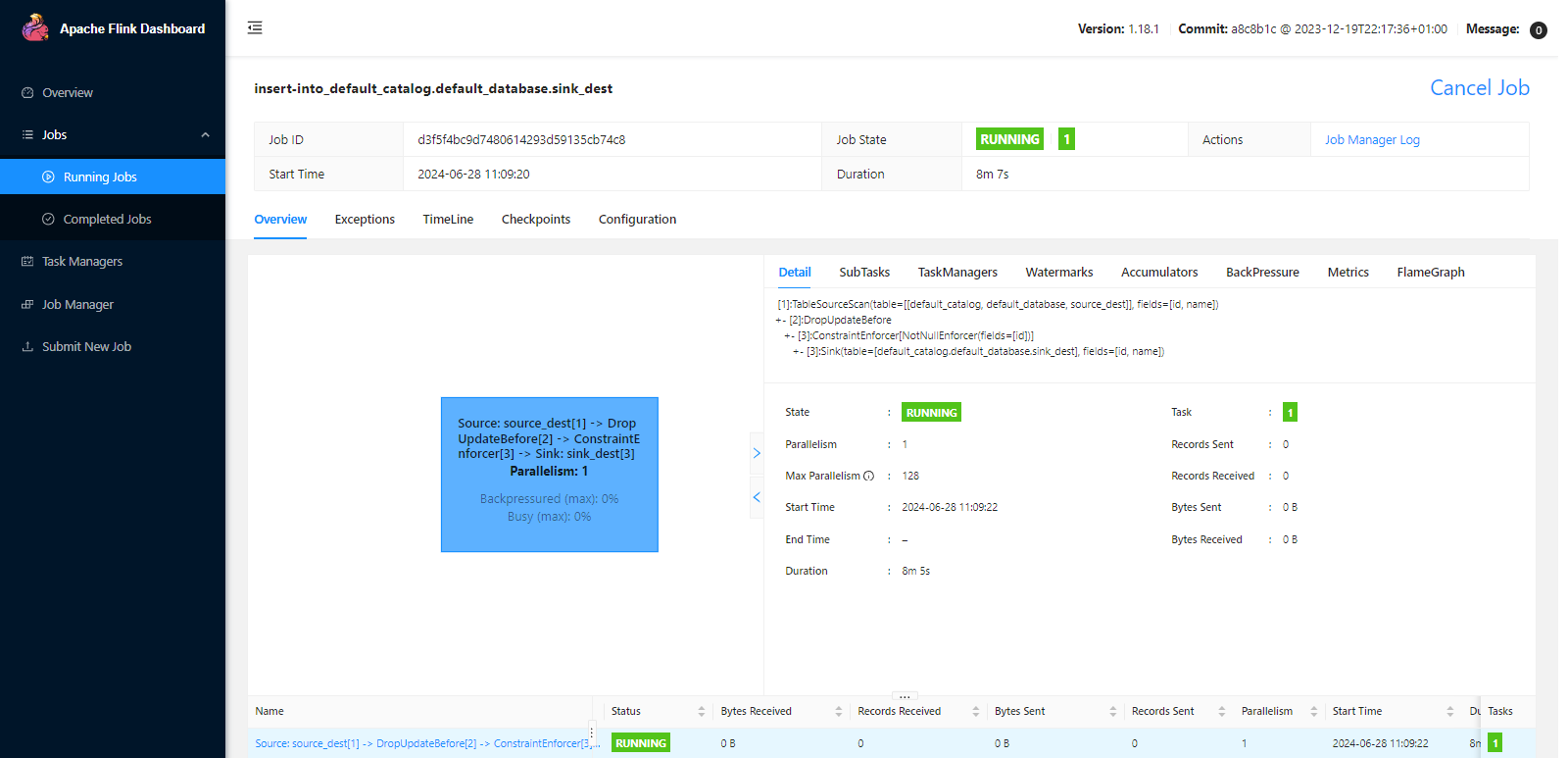

Flink Web 查看提交的任务:

-

源端表中进行更新、删除操作,查看目标端表是否自动完成同步

TableAPI 实现数据同步

引入 maven 依赖:

<properties>

<scala.binary.version>2.12</scala.binary.version>

<flink.version>1.15.4</flink.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-runtime</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner-loader</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-runtime-web</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-base</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-jdbc</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>com.ververica</groupId>

<artifactId>flink-sql-connector-mysql-cdc</artifactId>

<version>2.4.0</version>

</dependency>

<dependencies>

程序实现:

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.StatementSet;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

public class FlinkSQL {

public static void main(String[] args) throws Exception {

Configuration configuration = new Configuration();

configuration.setInteger("rest.port", 9091);

// configuration.setString("execution.checkpointing.interval", "3min");

StreamExecutionEnvironment env = StreamExecutionEnvironment

.createLocalEnvironmentWithWebUI(configuration);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

String createSourceTableSQL = "CREATE TABLE source_dest (" +

"`id` INT," +

"`name` STRING," +

"PRIMARY KEY (`id`) NOT ENFORCED" +

") WITH (" +

"'connector' = 'mysql-cdc'," +

"'hostname' = '127.0.0.1'," +

"'username' = 'username'," +

"'password' = 'password'," +

"'database-name' = 'cdc_test_source'," +

"'table-name' = 'player_source'," +

"'scan.startup.mode' = 'latest-offset'" +

");";

tableEnv.executeSql(createSourceTableSQL);

String createSinkTableSQL = "CREATE TABLE sink_dest (" +

"`id` INT," +

"`name` STRING," +

"PRIMARY KEY (`id`) NOT ENFORCED" +

") WITH (" +

"'connector' = 'jdbc'," +

"'url' = 'jdbc:mysql://127.0.0.1:3306/cdc_test_target'," +

"'username' = 'username'," +

"'password' = 'password'," +

"'table-name' = 'player_target'" +

");";

tableEnv.executeSql(createSinkTableSQL);

String insertSQL = "INSERT INTO sink_dest SELECT * FROM source_dest;";

StatementSet statementSet = tableEnv.createStatementSet();

statementSet.addInsertSql(insertSQL);

statementSet.execute();

}

}

我们在 Configuration 中设置了 rest.port = 9091, 程序启动成功后,可以在浏览器打开 localhost:9091 看到提交运行的任务。

scan.startup.mode 可取值为:

initial:当没有指定 `scan.startup.mode` 时,默认取值为 `initial`,官网对 initial 的 [说明原文](https://nightlies.apache.org/flink/flink-cdc-docs-master/zh/docs/connectors/cdc-connectors/mysql-cdc/#startup-reading-position) 是 `Performs an initial snapshot on the monitored database tables upon first startup, and continue to read the latest binlog.`

earliest-offset:可访问的最早的 binlog 偏移量。

latest-offset:从 binlog 的末尾位置开始读取,这意味着只接收 connector 启动之后的变更事件流。

specific-offset:跳过快照阶段,从特定偏移量开始读取 binlog 事件。该偏移量可以使用二进制日志文件名和位置指定。

timestamp:从一个指定的时间戳位置开始读取 binlog,时间的设定很方便,但是根据 timestamp 定位到具体的 offset 需要经过一点儿时间。

遇到的问题

执行 FlinkSQL 报错

[ERROR] Could not execute SQL statement. Reason:

org.apache.flink.table.api.ValidationException:

Could not find any factory for identifier 'mysql-cdc' that

implements 'org.apache.flink.table.factories.DynamicTableFactory' in the classpath.

Available factory identifiers are:

blackhole

datagen

filesystem

jdbc

oracle-cdc

print

python-input-format

错误原因

不识别源端定义的 mysql-cdc ,缺少 `flink-sql-connector-mysql-cdc.jar。

解决方案

添加 flink-connector-jdbc.jar 和 flink-sql-connector-mysql-cdc.jar 重启后解决。

MySQL 开启 bin-log 报错

[ERROR] You have enabled the binary log, but you haven’t provided the mandatory server-id.

Please refer to the proper server start-up parameters documentation

2016-09-03T03:17:51.815890Z 0 [ERROR] Aborting

报错原因

在设置 bin-log 日志的时候,没有设置 server_id 参数。server-id 参数用于在复制中,为主库和备库提供一个独立的 ID,以区分主库和备库;

开启二进制文件的时候,需要设置这个参数。

解决方案

修改 MySQL 配置文件 my.ini (windows) / my.cnf (linux)

[mysqld]

# Binary Logging.

log-bin=mysql-bin

server-id=1

重启 MySQL 服务。

本文主要介绍了使用 Flink CDC 实现 MySQL 数据的实时同步。

本文主要介绍了使用 Flink CDC 实现 MySQL 数据的实时同步。

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 阿里最新开源QwQ-32B,效果媲美deepseek-r1满血版,部署成本又又又降低了!

· 开源Multi-agent AI智能体框架aevatar.ai,欢迎大家贡献代码

· Manus重磅发布:全球首款通用AI代理技术深度解析与实战指南

· 被坑几百块钱后,我竟然真的恢复了删除的微信聊天记录!

· AI技术革命,工作效率10个最佳AI工具