In-depth crawler book: Scrapy JSON content not written to the file

settings.py configuration

```python
ITEM_PIPELINES = {
    'tets.pipelines.TetsPipeline': 300,
}
```

Spider code

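Fix: append `.extract()` to the xpath call so the field holds plain strings, and have `parse()` hand the item to the pipeline with `return item`.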

```python
import scrapy
from tets.items import TetsItem


class KugouSpider(scrapy.Spider):
    name = 'kugou'
    allowed_domains = ['www.kugou.com']
    start_urls = ['http://www.kugou.com/']

    def parse(self, response):
        item = TetsItem()
        item['title'] = response.xpath("/html/head/title/text()").extract()
        print(item['title'])
        return item
```
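Why both parts of the fix matter: without `.extract()` (also available as `.getall()` in newer Scrapy), `response.xpath(...)` returns a `SelectorList` of `Selector` objects, which `json.dumps` cannot serialize, so `process_item` raises `TypeError` before anything is written. And if `parse()` neither returns nor yields the item, the pipeline is never called at all. The minimal sketch below reproduces the serialization failure with the standard library only; `FakeSelector` and `to_json_line` are hypothetical names standing in for Scrapy's selector and the pipeline's `json.dumps` line, and the title text is made-up sample data.

```python
import json


class FakeSelector:
    """Hypothetical stand-in for a Scrapy Selector: an object json cannot serialize."""

    def __init__(self, text):
        self.text = text


def to_json_line(item):
    """Serialize one item the same way TetsPipeline.process_item does."""
    return json.dumps(dict(item), ensure_ascii=False) + "\n"


# Without .extract(): the field holds selector objects, so json.dumps raises
# TypeError and nothing reaches the output file.
broken_item = {"title": [FakeSelector("酷狗音乐")]}
try:
    to_json_line(broken_item)
except TypeError as exc:
    print("serialization failed:", exc)

# With .extract(): the field is a plain list of str, which serializes fine;
# ensure_ascii=False keeps the Chinese characters readable.
fixed_item = {"title": ["酷狗音乐"]}
print(to_json_line(fixed_item), end="")
```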

Pipelines code

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html
import codecs
import json


class TetsPipeline(object):
    def __init__(self):
        # self.file = codecs.open("D:/git/learn_scray/day11/mydata2.txt", "wb", encoding="utf-8")
        self.file = codecs.open("D:/git/learn_scray/day11/1.json", "wb", encoding="utf-8")

    # Handle plain text (xx.txt)
    # def process_item(self, item, spider):
    #     l = str(item) + "\n"
    #     print(l)
    #     self.file.write(l)
    #     return item

    def process_item(self, item, spider):
        print("entered")
        # print(item)
        # Serialize the item as one JSON object; ensure_ascii=False keeps Chinese text readable
        i = json.dumps(dict(item), ensure_ascii=False)
        # print("entered json")
        # print(i)
        l = i + "\n"
        print(l)
        self.file.write(l)
        return item

    def close_spider(self, spider):
        # Close the file so buffered output is flushed to disk
        self.file.close()
```
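A common variant of the same pipeline, shown here as a sketch rather than the author's code: open the file in `open_spider` and close it in `close_spider` (both hooks Scrapy calls on pipelines listed in `ITEM_PIPELINES`), using the built-in `open` instead of `codecs.open`. The class name `JsonLinesPipeline` and the default path `items.jl` are hypothetical. Because the methods only touch `item` and `spider` as plain arguments, the demo at the bottom drives the pipeline with a dict and `spider=None`, without running Scrapy.

```python
import json
import os
import tempfile


class JsonLinesPipeline:
    """Hypothetical pipeline writing one JSON object per line."""

    def __init__(self, path="items.jl"):
        self.path = path
        self.file = None

    def open_spider(self, spider):
        # Open the output file once, when the spider starts.
        self.file = open(self.path, "w", encoding="utf-8")

    def process_item(self, item, spider):
        # dict(item) works for scrapy.Item and plain dicts alike.
        line = json.dumps(dict(item), ensure_ascii=False) + "\n"
        self.file.write(line)
        return item

    def close_spider(self, spider):
        # Closing flushes buffered writes so the data is actually on disk.
        self.file.close()


# Demo without Scrapy: simulate one spider run by calling the hooks in order.
demo_path = os.path.join(tempfile.mkdtemp(), "items.jl")
pipeline = JsonLinesPipeline(demo_path)
pipeline.open_spider(None)
pipeline.process_item({"title": ["example title"]}, None)
pipeline.close_spider(None)
with open(demo_path, encoding="utf-8") as f:
    print(f.read(), end="")
```

Opening in `open_spider` rather than `__init__` ties the file's lifetime to the crawl, so the open and close happen in matching hooks.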

items.py

```python
# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class TetsItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    title = scrapy.Field()
```

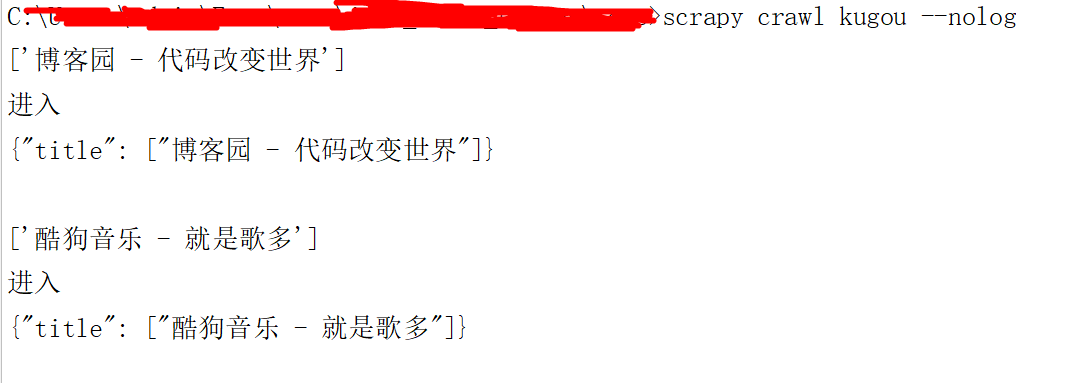

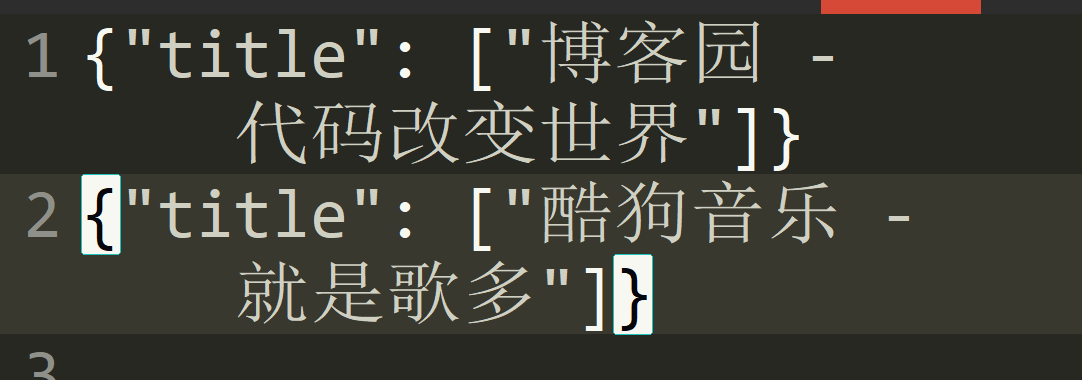

The result is shown in the figures.

Author: 以罗伊

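Copyright of this article is shared by the author and cnblogs (博客园). Reposting is welcome, but unless the author agrees otherwise, a link to the original article must be given on the reposting page; the author reserves the right to pursue legal liability.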