1.DDPG是一个离线的策略方法

rl_utils.train_off_policy_agent(env, agent, num_episodes,

replay_buffer, minimal_size, batch_size)

2.DDPG是一个将状态states和actor的输出作为共同的输入来预测critic的Q值

next_q_values = self.target_critic(

torch.cat(

[next_states, self.target_actor(next_states)], dim=1))

q_targets = rewards + self.gamma * next_q_values * (1 - dones)

# critic_loss 将状态和状态对应的动作进行构造损失

critic_loss = torch.mean(

F.mse_loss(

self.critic(torch.cat([states, actions], dim=1)),

q_targets))

3.动作的损失是使得Q值最大

actor_loss = -torch.mean( self.critic( # 策略网络就是为了使得Q值最大值 torch.cat([states, self.actor(states)], dim=1))) # 为了使得Q值最大

4.软更新策略目标网络 self.tau的数值是0.005

for param_target, param in zip(target_net.parameters(), net.parameters()): param_target.data.copy_(param_target.data*(1.0 - self.tau) + param.data * self.tau)

train.py

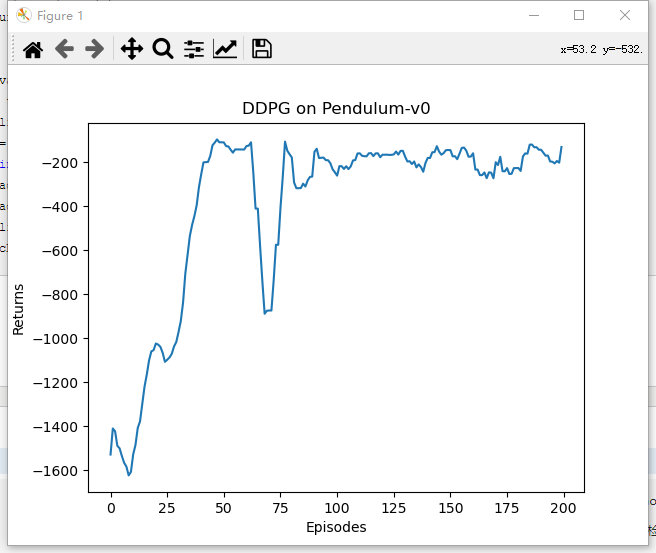

import random import gym import numpy as np from tqdm import tqdm import torch from torch import nn import rl_utils from model import DDPG import matplotlib.pyplot as plt actor_lr = 5e-4 critic_lr = 5e-3 num_episodes = 200 hidden_dim = 64 gamma = 0.98 tau = 0.005 # 软更新参数 buffer_size = 10000 minimal_size = 1000 batch_size = 64 sigma = 0.01 # 高斯噪声标准差 device = torch.device("cuda") if torch.cuda.is_available() else torch.device( "cpu") env_name = "Pendulum-v0" env = gym.make(env_name) random.seed(0) np.random.seed(0) env.seed(0) torch.manual_seed(0) replay_buffer = rl_utils.ReplayBuffer(buffer_size) state_dim = env.observation_space.shape[0] action_dim = env.action_space.shape[0] action_bound = env.action_space.high[0] # 动作最大值 agent = DDPG(state_dim, action_dim, state_dim + action_dim, hidden_dim, False, action_bound, sigma, actor_lr, critic_lr, tau, gamma, device) return_list = rl_utils.train_off_policy_agent(env, agent, num_episodes, replay_buffer, minimal_size, batch_size) episodes_list = list(range(len(return_list))) plt.plot(episodes_list, return_list) plt.xlabel('Episodes') plt.ylabel('Returns') plt.title('DDPG on {}'.format(env_name)) plt.show() mv_return = rl_utils.moving_average(return_list, 9) plt.plot(episodes_list, mv_return) plt.xlabel('Episodes') plt.ylabel('Returns') plt.title('DDPG on {}'.format(env_name)) plt.show()

model.py

import torch import torch.nn.functional as F from torch import nn import random import numpy as np class PolicyNet(torch.nn.Module): def __init__(self, state_dim, hidden_dim, action_dim, action_bound): super(PolicyNet, self).__init__() self.fc1 = torch.nn.Linear(state_dim, hidden_dim) self.fc2 = torch.nn.Linear(hidden_dim, action_dim) self.action_bound = action_bound # action_bound 是环境可以接受的最大动作值 def forward(self, x): x = F.relu(self.fc1(x)) return torch.tanh(self.fc2(x)) * self.action_bound # tanh可以用来使得数值为-1, 1, self.action_bound可以将其变为对应的离散范围 class QValueNet(torch.nn.Module): def __init__(self, state_dim, hidden_dim, action_dim): super(QValueNet, self).__init__() self.fc1 = torch.nn.Linear(state_dim + action_dim, hidden_dim) self.fc2 = torch.nn.Linear(hidden_dim, 1) def forward(self, x, a): cat = torch.cat([x, a], dim=1) # 拼接状态和动作 x = F.relu(self.fc1(cat)) return self.fc2(x) class TwoLayerFC(torch.nn.Module): # 这是一个简单的两层神经网络 def __init__(self, num_in, num_out, hidden_dim, activation=F.relu, out_fn=lambda x: x): super().__init__() self.fc1 = nn.Linear(num_in, hidden_dim) self.fc2 = nn.Linear(hidden_dim, hidden_dim) self.fc3 = nn.Linear(hidden_dim, num_out) self.activation = activation self.out_fn = out_fn def forward(self, x): x = self.activation(self.fc1(x)) x = self.activation(self.fc2(x)) x = self.out_fn(self.fc3(x)) return x class DDPG: """DDPG 算法""" def __init__(self, num_in_actor, num_out_actor, num_in_critic, hidden_dim, discrete, action_bound, sigma, actor_lr, critic_lr, tau, gamma, device): out_fn = (lambda x: x) if discrete else ( # 如果是分离的或者连续的 lambda x : torch.tanh(x) * action_bound) self.actor = TwoLayerFC(num_in_actor, num_out_actor, hidden_dim, activation=F.relu, out_fn=out_fn).to(device) self.target_actor = TwoLayerFC(num_in_actor, num_out_actor, hidden_dim, activation=F.relu, out_fn=out_fn).to(device) self.critic = TwoLayerFC(num_in_critic, 1, hidden_dim).to(device) self.target_critic = TwoLayerFC(num_in_critic, 1, hidden_dim).to(device) # 初始化目标价值网络并设置和价值网络相同的参数 self.target_critic.load_state_dict(self.critic.state_dict()) self.target_actor.load_state_dict(self.actor.state_dict()) self.actor_optimizer = torch.optim.Adam(self.actor.parameters(), lr=actor_lr) self.critic_optimizer = torch.optim.Adam(self.critic.parameters(), lr=critic_lr) self.gamma = gamma self.sigma = sigma # 高斯噪声的标准差, 均值为0 self.action_bound = action_bound # action_bound 是环境可以接受的动作的最大值 self.tau = tau # 目标网络软更新参数 self.action_dim = num_out_actor self.device = device def take_action(self, state): state = torch.tensor([state], dtype=torch.float).to(self.device) action = self.actor(state).item() # 给动作添加噪声, 增加探索 action = action + self.sigma * np.random.randn(self.action_dim) return action def soft_update(self, net, target_net): for param_target, param in zip(target_net.parameters(), net.parameters()): param_target.data.copy_(param_target.data*(1.0 - self.tau) + param.data * self.tau) def update(self, transition_dict): states = torch.tensor(transition_dict['states'], dtype=torch.float).to(self.device) actions = torch.tensor(transition_dict['actions'], dtype=torch.float).view(-1, 1).to(self.device) rewards = torch.tensor(transition_dict['rewards'], dtype=torch.float).view(-1, 1).to(self.device) next_states = torch.tensor(transition_dict['next_states'], dtype=torch.float).to(self.device) dones = torch.tensor(transition_dict['dones'], dtype=torch.float).view(-1, 1).to(self.device) # 将状态和动作的输出组合完输入 next_q_values = self.target_critic( torch.cat( [next_states, self.target_actor(next_states)], dim=1)) q_targets = rewards + self.gamma * next_q_values * (1 - dones) # critic_loss 将状态和状态对应的动作进行构造损失 critic_loss = torch.mean( F.mse_loss( self.critic(torch.cat([states, actions], dim=1)), q_targets)) self.critic_optimizer.zero_grad() critic_loss.backward() self.critic_optimizer.step() actor_loss = -torch.mean( self.critic( # 策略网络就是为了使得Q值最大值 torch.cat([states, self.actor(states)], dim=1))) # 为了使得Q值最大 self.actor_optimizer.zero_grad() actor_loss.backward() self.actor_optimizer.step() self.soft_update(self.actor, self.target_actor) # 软更新策略网络 self.soft_update(self.critic, self.target_critic) # 软更新价值网络

rl_utils.py

from tqdm import tqdm import numpy as np import torch import collections import random class ReplayBuffer: def __init__(self, capacity): self.buffer = collections.deque(maxlen=capacity) def add(self, state, action, reward, next_state, done): self.buffer.append((state, action, reward, next_state, done)) def sample(self, batch_size): transitions = random.sample(self.buffer, batch_size) state, action, reward, next_state, done = zip(*transitions) return np.array(state), action, reward, np.array(next_state), done def size(self): return len(self.buffer) def moving_average(a, window_size): cumulative_sum = np.cumsum(np.insert(a, 0, 0)) middle = (cumulative_sum[window_size:] - cumulative_sum[:-window_size]) / window_size r = np.arange(1, window_size - 1, 2) begin = np.cumsum(a[:window_size - 1])[::2] / r end = (np.cumsum(a[:-window_size:-1])[::2] / r)[::-1] return np.concatenate((begin, middle, end)) def train_on_policy_agent(env, agent, num_episodes): return_list = [] for i in range(10): with tqdm(total=int(num_episodes / 10), desc='Iteration %d' % i) as pbar: for i_episode in range(int(num_episodes / 10)): episode_return = 0 transition_dict = {'states': [], 'actions': [], 'next_states': [], 'rewards': [], 'dones': []} state = env.reset() done = False # 一局放入到一块数据里面 while not done: action = agent.take_action(state) next_state, reward, done, _ = env.step(action) transition_dict['states'].append(state) transition_dict['actions'].append(action) transition_dict['next_states'].append(next_state) transition_dict['rewards'].append(reward) transition_dict['dones'].append(done) state = next_state episode_return += reward return_list.append(episode_return) agent.update(transition_dict) if (i_episode + 1) % 10 == 0: pbar.set_postfix({'episode': '%d' % (num_episodes / 10 * i + i_episode + 1), 'return': '%.3f' % np.mean(return_list[-10:])}) pbar.update(1) return return_list def train_off_policy_agent(env, agent, num_episodes, replay_buffer, minimal_size, batch_size): return_list = [] for i in range(10): with tqdm(total=int(num_episodes / 10), desc='Iteration %d' % i) as pbar: for i_episode in range(int(num_episodes / 10)): episode_return = 0 state = env.reset() done = False while not done: action = agent.take_action(state) next_state, reward, done, _ = env.step(action) replay_buffer.add(state, action, reward, next_state, done) state = next_state episode_return += reward if replay_buffer.size() > minimal_size: b_s, b_a, b_r, b_ns, b_d = replay_buffer.sample(batch_size) transition_dict = {'states': b_s, 'actions': b_a, 'next_states': b_ns, 'rewards': b_r, 'dones': b_d} agent.update(transition_dict) return_list.append(episode_return) if (i_episode + 1) % 10 == 0: pbar.set_postfix({'episode': '%d' % (num_episodes / 10 * i + i_episode + 1), 'return': '%.3f' % np.mean(return_list[-10:])}) pbar.update(1) return return_list def compute_advantage(gamma, lmbda, td_delta): td_delta = td_delta.detach().numpy() advantage_list = [] advantage = 0.0 for delta in td_delta[::-1]: advantage = gamma * lmbda * advantage + delta # 时分差分算法, 表示从头到尾的影响 advantage_list.append(advantage) advantage_list.reverse() # 序列反转 return torch.tensor(advantage_list, dtype=torch.float)

浙公网安备 33010602011771号

浙公网安备 33010602011771号