Junior Year, First Semester Winter Break, 15 Days: Day 4

Continuing from yesterday:

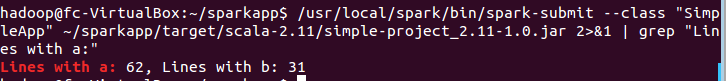

It ran successfully.

Reference: Principles and Applications of Big Data, Chapter 16, Spark Study Guide

Today: writing a standalone Spark application in Java

1. Install Maven

- sudo unzip ~/下载/apache-maven-3.3.9-bin.zip -d /usr/local

- cd /usr/local

- sudo mv apache-maven-3.3.9/ ./maven

- sudo chown -R hadoop ./maven

2. Java application code

- cd ~ # go to the user's home directory

- mkdir -p ./sparkapp2/src/main/java

- vim ./sparkapp2/src/main/java/SimpleApp.java, then insert the following:

        /*** SimpleApp.java ***/
        import org.apache.spark.api.java.*;
        import org.apache.spark.api.java.function.Function;

        public class SimpleApp {
            public static void main(String[] args) {
                String logFile = "file:///usr/local/spark/README.md"; // Should be some file on your system
                JavaSparkContext sc = new JavaSparkContext("local", "Simple App",
                    "file:///usr/local/spark/", new String[]{"target/simple-project-1.0.jar"});
                JavaRDD<String> logData = sc.textFile(logFile).cache();

                long numAs = logData.filter(new Function<String, Boolean>() {
                    public Boolean call(String s) { return s.contains("a"); }
                }).count();

                long numBs = logData.filter(new Function<String, Boolean>() {
                    public Boolean call(String s) { return s.contains("b"); }
                }).count();

                System.out.println("Lines with a: " + numAs + ", lines with b: " + numBs);
            }
        }
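To check what the two filter(...).count() calls in SimpleApp compute without starting Spark, here is a minimal plain-Java sketch of the same logic using streams. The class name LineCountSketch, the helper countContaining, and the three sample lines are all made up for illustration; the real program reads README.md through the JavaSparkContext instead.

```java
import java.util.Arrays;
import java.util.List;

public class LineCountSketch {
    // Count the lines that contain the given substring. This mirrors
    // logData.filter(...).count() in SimpleApp, but on an in-memory list.
    public static long countContaining(List<String> lines, String needle) {
        return lines.stream().filter(s -> s.contains(needle)).count();
    }

    public static void main(String[] args) {
        // Hypothetical sample input standing in for the lines of README.md.
        List<String> lines = Arrays.asList("apache spark", "big data", "hello");

        long numAs = countContaining(lines, "a");
        long numBs = countContaining(lines, "b");

        // Same output format as SimpleApp.
        System.out.println("Lines with a: " + numAs + ", lines with b: " + numBs);
    }
}
```

With the sample list above this prints "Lines with a: 2, lines with b: 1", since "apache spark" and "big data" contain an a, and only "big data" contains a b.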

3. Run vim ./sparkapp2/pom.xml and add the following:

        <project>
          <groupId>edu.berkeley</groupId>
          <artifactId>simple-project</artifactId>
          <modelVersion>4.0.0</modelVersion>
          <name>Simple Project</name>
          <packaging>jar</packaging>
          <version>1.0</version>
          <repositories>
            <repository>
              <id>Akka repository</id>
              <url>http://repo.akka.io/releases</url>
            </repository>
          </repositories>
          <dependencies>
            <dependency> <!-- Spark dependency -->
              <groupId>org.apache.spark</groupId>
              <artifactId>spark-core_2.11</artifactId>
              <version>2.0.0-preview</version>
            </dependency>
          </dependencies>
        </project>

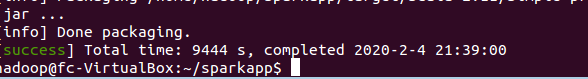

4. Package the Java program with Maven

- cd ~/sparkapp2

- find

- /usr/local/maven/bin/mvn package # and it started downloading again, sigh...