Installing Rancher 2.4.5 (RKE Cluster)

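Architecture and node role requirements recommended by the official documentation:

- The Rancher DNS name should resolve to a layer 4 load balancer.

- The load balancer should forward traffic on TCP/80 and TCP/443 to all 3 nodes in the cluster.

- The Ingress Controller redirects HTTP to HTTPS and terminates SSL/TLS on TCP/443.

- The Ingress Controller forwards traffic to port 80 on the Rancher Server pods.

Rancher installed in a Kubernetes cluster behind a layer 4 load balancer, with SSL terminated at the Ingress Controller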

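Every RKE node needs at least one role, but a node is not restricted to a single role. For clusters that run your business applications, however, we recommend giving each node a single dedicated role, so that workloads on worker nodes cannot interfere with the Kubernetes master components or the cluster data.

Our minimum recommendations for downstream clusters are:

- Three nodes with only the etcd role, for high availability: the cluster keeps working if any one of the three fails.

- Two nodes with only the controlplane role, so that the master components are highly available.

- One or more nodes with only the worker role, to run the Kubernetes node components and the services or applications you deploy.

When Rancher Server is installed on three nodes, it is safe for every node to hold all three roles, because:

- The failure of one etcd node can be tolerated.

- Multiple controlplane nodes keep the master components running as multiple instances.

- Rancher is the only workload running on the cluster.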
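
The fault-tolerance figures above follow from etcd's majority quorum: a cluster of n members needs floor(n/2)+1 of them to agree, so it can lose the rest. A minimal sketch of that arithmetic (the function name `etcd_fault_tolerance` is made up for illustration):

```shell
#!/usr/bin/env bash
# Hypothetical helper: how many etcd members may fail while quorum is kept.
etcd_fault_tolerance() {
  local members=$1
  local quorum=$(( members / 2 + 1 ))   # majority required for writes
  echo $(( members - quorum ))
}

# Usage: etcd_fault_tolerance 3
```

With three members the quorum is two, which is why a three-node cluster survives the loss of one node but not two.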

Server information:

| Server | Public address | Rancher private address | Purpose and roles | Server spec |
| --- | --- | --- | --- | --- |
| nginx | 192.168.198.130 | | Reverse proxy and load balancing | 1c 2g |
| rancher1 | 192.168.198.150 | 10.10.10.150 | controlplane, worker, etcd | 2c 4g |
| rancher2 | 192.168.198.151 | 10.10.10.151 | controlplane, worker, etcd | 2c 4g |
| rancher3 | 192.168.198.152 | 10.10.10.152 | controlplane, worker, etcd | 2c 4g |

Because my machines are limited, I can only run the minimum three-node Rancher setup, with every node taking all three roles.

Operating system: CentOS Linux release 7.7.1908 (Core)

Kernel version: 5.7.4-1.el7.elrepo.x86_64

Docker version: Docker version 19.03.11, build 42e35e61f3

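1. Upgrade the operating system kernel (I upgraded to the latest version, following this blog post: https://www.cnblogs.com/ding2016/p/10429640.html)

2. Install the latest Docker (on every Rancher node)

2.1 Install the prerequisite packages

yum install -y yum-utils device-mapper-persistent-data lvm2

2.2 Add the package repository

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

2.3 Install Docker (the latest version is installed when no version is specified)

yum -y install docker-ce

Start Docker and enable it at boot

systemctl enable --now docker

Check the installed Docker version

docker version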

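3. Disable the firewall and SELinux (on every Rancher node and on the nginx server)

3.1 Stop the firewall

systemctl stop firewalld.service

3.2 Disable the firewall service at boot

systemctl disable firewalld.service

3.3 Disable SELinux (the config file change applies after a reboot; setenforce 0 switches to permissive mode immediately)

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

setenforce 0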

4. Configure an Alibaba Cloud registry mirror (on every Rancher node)

Replace the mirror address with your own:

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'
{ "registry-mirrors": ["https://hcepoa2b.mirror.aliyuncs.com"] }
EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

5. Create the rancher user and set up SSH trust (on every Rancher node)

Do not use the root user for the Rancher installation.

5.1 Create the rancher user

groupadd docker

useradd rancher -G docker

echo "123456" | passwd --stdin rancher

5.2 Set up SSH trust for the rancher user between all nodes

su - rancher

Generate a key pair on every node

ssh-keygen -t rsa

On rancher1, collect the public keys of all nodes:

ssh 192.168.198.150 cat /home/rancher/.ssh/id_rsa.pub >>/home/rancher/.ssh/authorized_keys

ssh 192.168.198.151 cat /home/rancher/.ssh/id_rsa.pub >>/home/rancher/.ssh/authorized_keys

ssh 192.168.198.152 cat /home/rancher/.ssh/id_rsa.pub >>/home/rancher/.ssh/authorized_keys

chmod 600 /home/rancher/.ssh/authorized_keys

Copy authorized_keys to rancher2 and rancher3

scp /home/rancher/.ssh/authorized_keys rancher@192.168.198.151:/home/rancher/.ssh/

scp /home/rancher/.ssh/authorized_keys rancher@192.168.198.152:/home/rancher/.ssh/
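
Before moving on, it may be worth confirming that the trust setup actually works, since RKE logs in to every node over SSH. The sketch below is one way to do that; the function name `check_ssh_trust` is hypothetical and the default node list is taken from the server table above.

```shell
#!/usr/bin/env bash
# Sketch: verify passwordless SSH from this node to every cluster node.
# Pass node addresses as arguments, or rely on the defaults from the server table.
check_ssh_trust() {
  local nodes=("$@")
  if [ ${#nodes[@]} -eq 0 ]; then
    nodes=(192.168.198.150 192.168.198.151 192.168.198.152)
  fi
  local failed=0 node
  for node in "${nodes[@]}"; do
    # BatchMode=yes makes ssh fail instead of prompting for a password.
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "rancher@${node}" true; then
      echo "OK   ${node}"
    else
      echo "FAIL ${node}"
      failed=1
    fi
  done
  return "$failed"
}

# Usage (as the rancher user on rancher1): check_ssh_trust
```

A FAIL line usually points at a missing key in authorized_keys or wrong permissions on the .ssh directory of that node.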

6. Install Kubernetes (RKE cluster installation)

6.1 Install kubectl, the Kubernetes command-line tool

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
EOF

yum install -y kubectl

6.2 Install RKE (Rancher Kubernetes Engine), a Kubernetes distribution and command-line tool

Download: https://github.com/rancher/rke/releases

Release v1.1.3

I use rke_linux-amd64; upload the binary to /home/rancher

mv rke_linux-amd64 rke

chmod +x rke

6.3 Create the rancher-cluster.yml file

[root@rancher1 rancher]# cat /home/rancher/rancher-cluster.yml

nodes:
  - address: 192.168.198.150
    internal_address: 10.10.10.150
    user: rancher
    role: [controlplane, worker, etcd]
  - address: 192.168.198.151
    internal_address: 10.10.10.151
    user: rancher
    role: [controlplane, worker, etcd]
  - address: 192.168.198.152
    internal_address: 10.10.10.152
    user: rancher
    role: [controlplane, worker, etcd]

services:
  etcd:
    snapshot: true
    creation: 6h
    retention: 24h

# Required when using external TLS termination with ingress-nginx v0.22 or later.
ingress:
  provider: nginx
  options:
    use-forwarded-headers: "true"

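Parameter description:

| Option | Required | Description |
| --- | --- | --- |
| address | yes | Public DNS name or IP address |
| user | yes | A user that can run docker commands |
| role | yes | List of Kubernetes roles assigned to the node |
| internal_address | no | Private DNS name or IP address for internal cluster traffic |
| ssh_key_path | no | Path to the SSH private key used to authenticate to the node (defaults to ~/.ssh/id_rsa) |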
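
Since the three node entries differ only in their addresses, the nodes section can also be generated rather than typed by hand. This is only a sketch: the function name `gen_rke_nodes`, the "public_ip:private_ip" argument format, and the `RKE_SSH_USER` variable are all invented for this example.

```shell
#!/usr/bin/env bash
# Sketch: print the "nodes:" section of rancher-cluster.yml.
# Each argument is a "public_ip:private_ip" pair (format invented for this example).
gen_rke_nodes() {
  local ssh_user=${RKE_SSH_USER:-rancher}   # hypothetical override variable
  local pair
  echo "nodes:"
  for pair in "$@"; do
    echo "  - address: ${pair%%:*}"
    echo "    internal_address: ${pair##*:}"
    echo "    user: ${ssh_user}"
    echo "    role: [controlplane, worker, etcd]"
  done
}

# Usage:
#   gen_rke_nodes 192.168.198.150:10.10.10.150 \
#                 192.168.198.151:10.10.10.151 \
#                 192.168.198.152:10.10.10.152
```

The output covers only the nodes section; the services and ingress sections still have to be appended to the file.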

6.4 Run RKE

cd /home/rancher

./rke up --config rancher-cluster.yml

When it finishes, the output should end with this line: Finished building Kubernetes cluster successfully.
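
For kubectl to reach the new cluster it needs the kubeconfig that RKE writes next to the cluster config file, named kube_config_rancher-cluster.yml for this setup. A minimal sketch of pointing kubectl at it (the function name `use_rke_kubeconfig` is hypothetical, and the default directory assumes RKE was run from /home/rancher as above):

```shell
#!/usr/bin/env bash
# Sketch: point kubectl at the kubeconfig written by "rke up".
# RKE names the file kube_config_<cluster config file name>.
use_rke_kubeconfig() {
  local dir=${1:-/home/rancher}
  local cfg="${dir}/kube_config_rancher-cluster.yml"
  if [ ! -f "$cfg" ]; then
    echo "kubeconfig not found: $cfg" >&2
    return 1
  fi
  export KUBECONFIG="$cfg"
  echo "KUBECONFIG=$KUBECONFIG"
}

# Usage: use_rke_kubeconfig    (or: use_rke_kubeconfig /some/other/dir)
```

Copying the file to ~/.kube/config is an alternative that survives new shell sessions without exporting the variable each time.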

6.5 If the installation fails and you need to reinstall, clean up the nodes manually

# Disable and stop services

systemctl disable kubelet.service

systemctl disable kube-scheduler.service

systemctl disable kube-proxy.service

systemctl disable kube-controller-manager.service

systemctl disable kube-apiserver.service

systemctl stop kubelet.service

systemctl stop kube-scheduler.service

systemctl stop kube-proxy.service

systemctl stop kube-controller-manager.service

systemctl stop kube-apiserver.service

# Remove all containers

docker rm -f $(docker ps -qa)

# Remove all container volumes

docker volume rm $(docker volume ls -q)

# Unmount mount points

for mount in $(mount | grep tmpfs | grep '/var/lib/kubelet' | awk '{ print $3 }') /var/lib/kubelet /var/lib/rancher; do umount $mount; done

# Back up directories

mv /etc/kubernetes /etc/kubernetes-bak-$(date +"%Y%m%d%H%M")

mv /var/lib/etcd /var/lib/etcd-bak-$(date +"%Y%m%d%H%M")

mv /var/lib/rancher /var/lib/rancher-bak-$(date +"%Y%m%d%H%M")

mv /opt/rke /opt/rke-bak-$(date +"%Y%m%d%H%M")

# Remove leftover paths

rm -rf /etc/ceph \
  /etc/cni \
  /opt/cni \
  /run/secrets/kubernetes.io \
  /run/calico \
  /run/flannel \
  /var/lib/calico \
  /var/lib/cni \
  /var/lib/kubelet \
  /var/log/containers \
  /var/log/pods \
  /var/run/calico

# Clean up network interfaces

network_interface=`ls /sys/class/net`

for net_inter in $network_interface; do
  if ! echo $net_inter | grep -qiE 'lo|docker0|eth*|ens*'; then
    ip link delete $net_inter
  fi
done

# Kill leftover processes

port_list="80 443 6443 2376 2379 2380 8472 9099 10250 10254"

for port in $port_list; do
  pid=`netstat -atlnup | grep $port | awk '{print $7}' | awk -F '/' '{print $1}' | grep -v - | sort -rnk2 | uniq`
  if [[ -n $pid ]]; then
    kill -9 $pid
  fi
done

pro_pid=`ps -ef | grep -v grep | grep kube | awk '{print $2}'`

if [[ -n $pro_pid ]]; then
  kill -9 $pro_pid
fi

# Flush the iptables tables

## Note: if the node has custom iptables rules, run the following commands with caution

sudo iptables --flush

sudo iptables --flush --table nat

sudo iptables --flush --table filter

sudo iptables --table nat --delete-chain

sudo iptables --table filter --delete-chain

systemctl restart docker

6.6 Test the cluster: use kubectl to check connectivity and confirm that all nodes are in the Ready state

[rancher@rancher1 ~]$ kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.198.150 Ready controlplane,etcd,worker 30h v1.18.3

192.168.198.151 Ready controlplane,etcd,worker 30h v1.18.3

192.168.198.152 Ready controlplane,etcd,worker 30h v1.18.3
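
When this check is scripted rather than read by eye, the STATUS column can be filtered directly. A small sketch, assuming the default column layout of `kubectl get nodes` shown above (the function name `not_ready_nodes` is made up for illustration):

```shell
#!/usr/bin/env bash
# Sketch: read "kubectl get nodes --no-headers" output on stdin and print
# the names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Usage (needs a working kubeconfig):
#   kubectl get nodes --no-headers | not_ready_nodes
```

Empty output means every node reports Ready; a cordoned node (Ready,SchedulingDisabled) is listed as well, which is intentional in this sketch.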

6.7 Check that the pods are running

[rancher@rancher1 ~]$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

cattle-system cattle-cluster-agent-57d9454cb6-sn2qk 1/1 Running 0 2m54s

cattle-system cattle-node-agent-4rh9f 1/1 Running 0 104s

cattle-system cattle-node-agent-jfvzr 1/1 Running 0 117s

cattle-system cattle-node-agent-k47vb 1/1 Running 0 2m

cattle-system rancher-64b9795c65-cdmkg 1/1 Running 0 4m22s

cattle-system rancher-64b9795c65-gr6ld 1/1 Running 0 4m22s

cattle-system rancher-64b9795c65-stnfv 1/1 Running 0 4m22s

ingress-nginx default-http-backend-598b7d7dbd-lw97l 1/1 Running 2 35h

ingress-nginx nginx-ingress-controller-424pp 1/1 Running 2 35h

ingress-nginx nginx-ingress-controller-k664p 1/1 Running 2 35h

ingress-nginx nginx-ingress-controller-twlql 1/1 Running 2 35h

kube-system canal-8m7xt 2/2 Running 4 35h

kube-system canal-fr5kf 2/2 Running 4 35h

kube-system canal-gj9sb 2/2 Running 4 35h

kube-system coredns-849545576b-dknjf 1/1 Running 2 35h

kube-system coredns-849545576b-dr4hk 1/1 Running 2 35h

kube-system coredns-autoscaler-5dcd676cbd-dtzjg 1/1 Running 2 35h

kube-system metrics-server-697746ff48-j6hll 1/1 Running 2 35h

kube-system rke-coredns-addon-deploy-job-lg99g 0/1 Completed 0 35h

kube-system rke-ingress-controller-deploy-job-d9nlc 0/1 Completed 0 35h

kube-system rke-metrics-addon-deploy-job-9zcmb 0/1 Completed 0 35h

kube-system rke-network-plugin-deploy-job-rvbdr 0/1 Completed 0 35h

7.安装rancher

7.1安装cli工具

kubectl - Kubernetes 命令行工具。

helm - Kubernetes 的软件包管理工具。请参阅 Helm 版本要求以选择要安装 Rancher 的 Helm 版本。

kubectl之前已经安装。

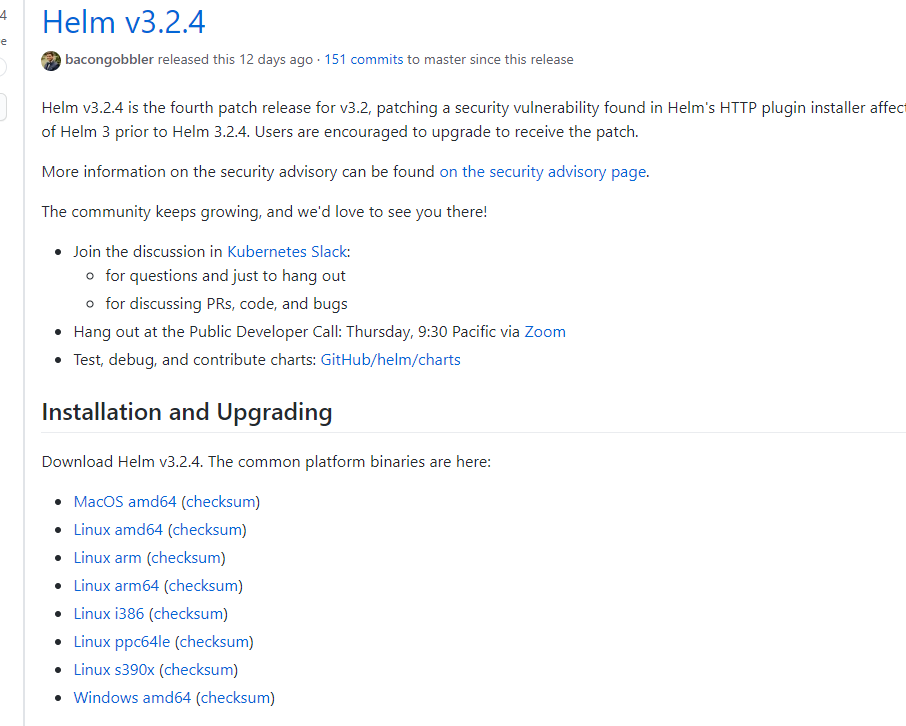

需要安装helm ,下载地址(https://github.com/helm/helm/releases)

下载helm-v3.2.4-linux-amd64.tar,上传到/home/rancher目录。
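
The uploaded archive still has to be unpacked and the helm binary placed on the PATH. The following is a sketch of one way to do it; the function name `install_helm` and the default target directory /usr/local/bin are assumptions, not something this walkthrough prescribes.

```shell
#!/usr/bin/env bash
# Sketch: unpack a Helm release archive and copy the binary to a bin directory.
# Helm's linux-amd64 release archives contain the binary at linux-amd64/helm.
install_helm() {
  local tarball=${1:-/home/rancher/helm-v3.2.4-linux-amd64.tar.gz}
  local bin_dir=${2:-/usr/local/bin}    # writing here typically needs root
  local work_dir
  work_dir=$(mktemp -d) || return 1
  tar -xzf "$tarball" -C "$work_dir" || { rm -rf "$work_dir"; return 1; }
  install -m 0755 "$work_dir/linux-amd64/helm" "$bin_dir/helm"
  local rc=$?
  rm -rf "$work_dir"
  return "$rc"
}

# Usage (as root): install_helm
# Then verify with: helm version
```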

7.2 Add the Helm chart repository

helm repo add rancher-stable http://rancher-mirror.oss-cn-beijing.aliyuncs.com/server-charts/stable

7.3 Create a namespace for Rancher

kubectl create namespace cattle-system

7.4 Install Rancher Server with a self-signed SSL certificate

[rancher@rancher1 ~]$ mkdir ssl

[rancher@rancher1 ~]$ cd ssl/

# First save the one-step SSL certificate generation script as create_self-signed-cert.sh (script source: https://rancher2.docs.rancher.cn/docs/installation/options/self-signed-ssl/_index/)

# Then use the script to generate the SSL certificates

[rancher@rancher1 ssl]$ ./create_self-signed-cert.sh --ssl-domain=rancher.my.org --ssl-trusted-ip=192.168.198.130 --ssl-size=2048 --ssl-date=3650

[rancher@rancher1 ssl]$ ls

cacerts.pem cacerts.srl cakey.pem openssl.cnf rancher.my.org.crt rancher.my.org.csr rancher.my.org.key ssl.sh tls.crt tls.key

# Secret for the server certificate and private key

kubectl -n cattle-system create \
  secret tls tls-rancher-ingress \
  --cert=/home/rancher/ssl/tls.crt \
  --key=/home/rancher/ssl/tls.key

# Secret for the CA certificate

kubectl -n cattle-system create secret \
  generic tls-ca \
  --from-file=/home/rancher/ssl/cacerts.pem

Install Rancher, then wait for the rollout to finish:
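
The Helm command for this step is not shown in these notes. Given the tls-rancher-ingress and tls-ca secrets created above, it was presumably along the following lines. This is a sketch, not the author's exact command: `ingress.tls.source=secret` and `privateCA=true` are the Rancher chart options for a certificate supplied as a secret and signed by a private CA, while the `install_rancher` function and the `HELM` override variable are made up for this example.

```shell
#!/usr/bin/env bash
# Sketch: install the Rancher chart using the certificate secrets created above.
# HELM is a hypothetical override (e.g. HELM=echo for a dry run); defaults to "helm".
install_rancher() {
  local hostname=${1:-rancher.my.org}
  "${HELM:-helm}" install rancher rancher-stable/rancher \
    --namespace cattle-system \
    --set hostname="$hostname" \
    --set ingress.tls.source=secret \
    --set privateCA=true
}

# Dry run:  HELM=echo install_rancher rancher.my.org
# Real run: install_rancher rancher.my.org
```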

kubectl -n cattle-system rollout status deploy/rancher

Waiting for deployment "rancher" rollout to finish: 0 of 3 updated replicas are available...

deployment "rancher" successfully rolled out

8. Install nginx

Install nginx on 192.168.198.130

The configuration file is as follows (reference: https://rancher2.docs.rancher.cn/docs/installation/options/nginx/_index):

[root@localhost nginx]# cat /etc/nginx/nginx.conf

worker_processes 4;
worker_rlimit_nofile 40000;

events {
    worker_connections 8192;
}

stream {
    upstream rancher_servers_http {
        least_conn;
        server 192.168.198.150:80 max_fails=3 fail_timeout=5s;
        server 192.168.198.151:80 max_fails=3 fail_timeout=5s;
        server 192.168.198.152:80 max_fails=3 fail_timeout=5s;
    }

    server {
        listen 80;
        proxy_pass rancher_servers_http;
    }

    upstream rancher_servers_https {
        least_conn;
        server 192.168.198.150:443 max_fails=3 fail_timeout=5s;
        server 192.168.198.151:443 max_fails=3 fail_timeout=5s;
        server 192.168.198.152:443 max_fails=3 fail_timeout=5s;
    }

    server {
        listen 443;
        proxy_pass rancher_servers_https;
    }
}

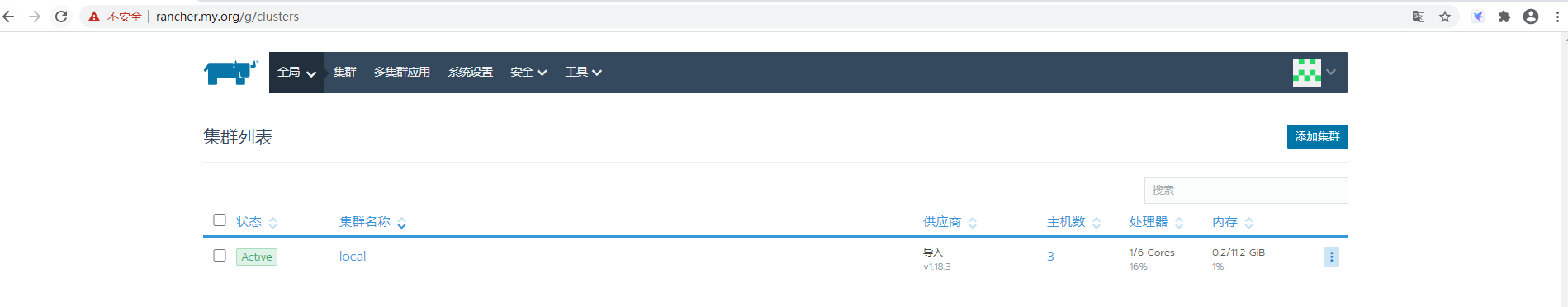

9.访问地址rancher.my.org

进入system

查看红框deployment是否正常

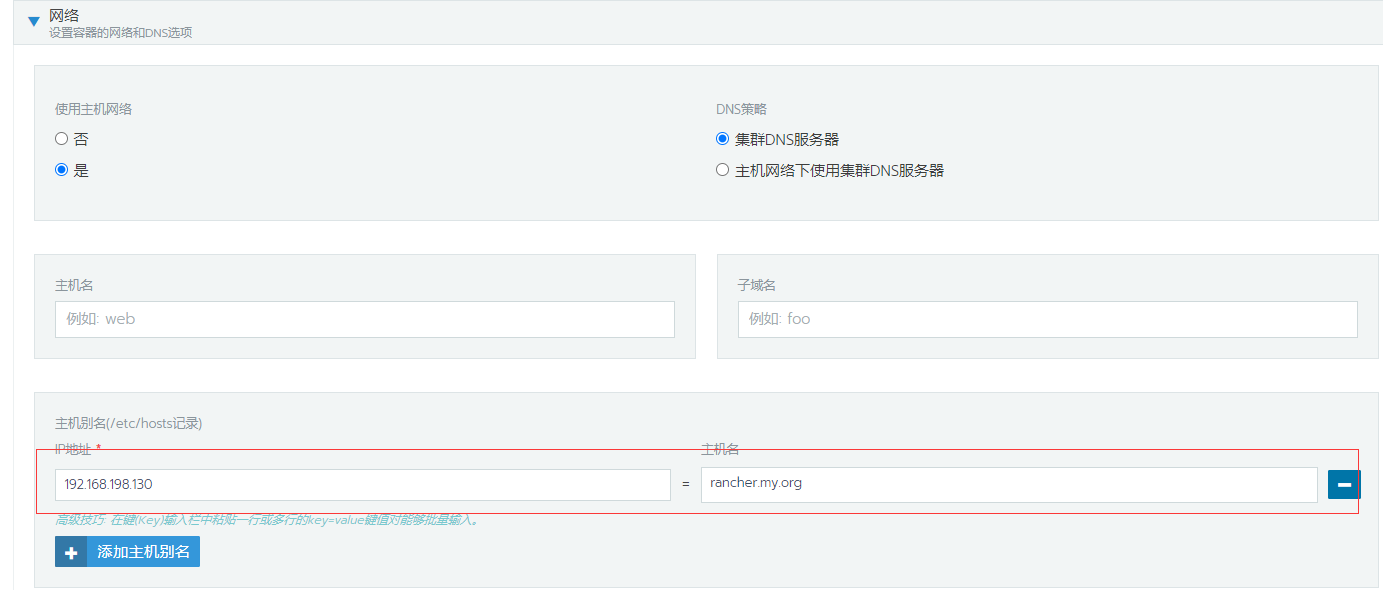

如果不正常查看容器日志,如果日志中报域名解析相关的问题。

编辑deployment,网络添加主机别名
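
The same host alias can be added from the command line by patching the deployment's pod template with a hostAliases entry. A sketch under stated assumptions: the function name `host_alias_patch` is made up, the IP is the nginx load balancer from the server table, and the deployment name in the usage comment is only a guess, so substitute whichever deployment is failing.

```shell
#!/usr/bin/env bash
# Sketch: build a patch that adds a hostAliases entry to a Deployment's pod
# template, so its pods resolve the Rancher hostname without DNS.
host_alias_patch() {
  local ip=$1 hostname=$2
  printf '{"spec":{"template":{"spec":{"hostAliases":[{"ip":"%s","hostnames":["%s"]}]}}}}\n' \
    "$ip" "$hostname"
}

# Usage (deployment name is an assumption):
#   kubectl -n cattle-system patch deployment cattle-cluster-agent \
#     --patch "$(host_alias_patch 192.168.198.130 rancher.my.org)"
```

Patching the pod template triggers a rolling restart of the deployment, after which the new pods carry the alias in their /etc/hosts.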

References:

https://rancher2.docs.rancher.cn/docs/installation/k8s-install/_index/

https://www.bookstack.cn/read/rancher-v2.x/74435b0bf28d8990.md

https://blog.csdn.net/wc1695040842/article/details/105253706/