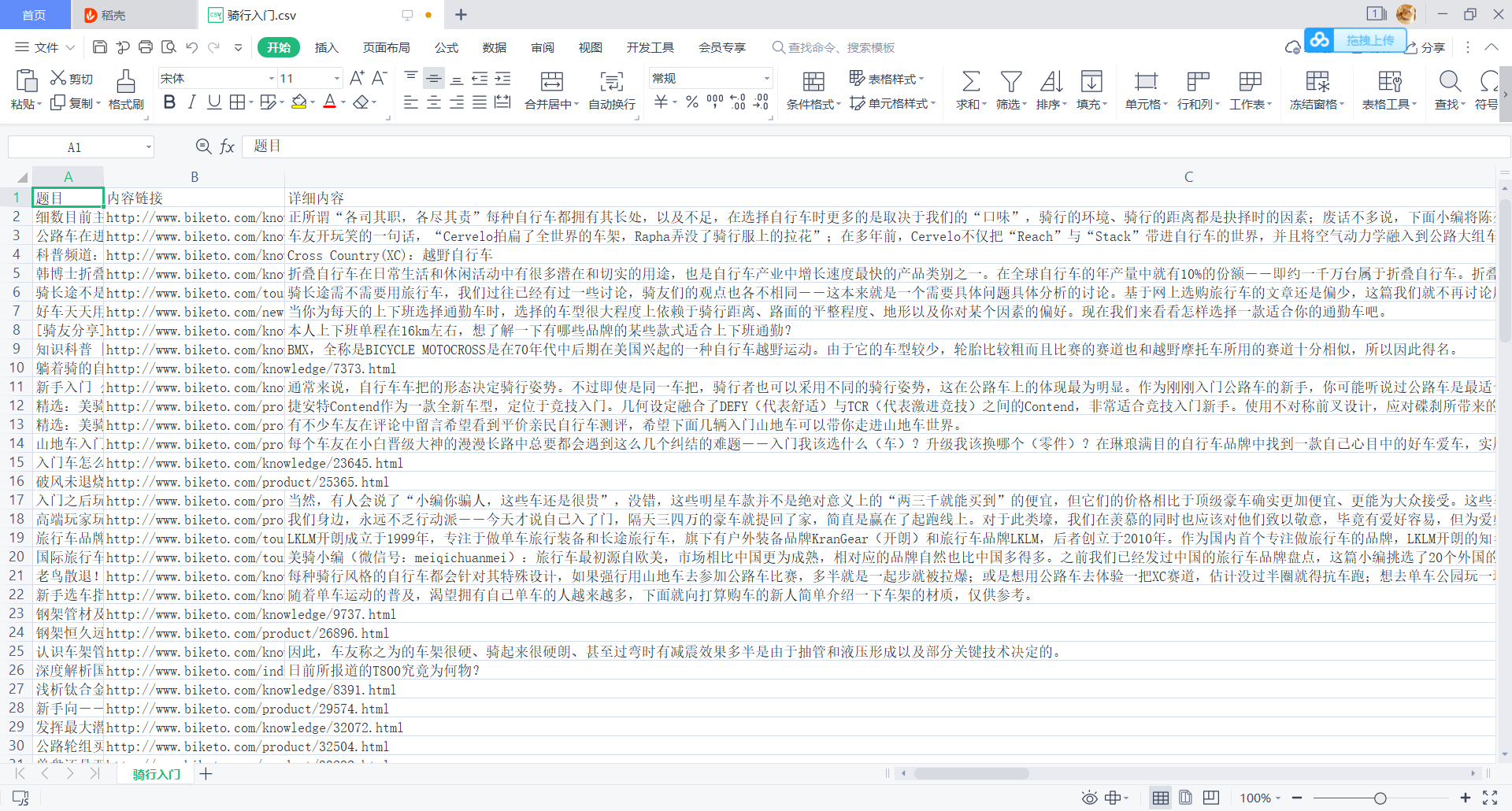

骑行入门

对每一个骑者的入门进行推荐,爬虫:

import re import requests from bs4 import BeautifulSoup import csv def paqu(): f = open('../../Documents/Tencent Files/631836111/FileRecv/骑行入门.csv', 'a+', encoding='utf-8', newline='') csv_writer = csv.writer(f) csv_writer.writerow(["题目", "内容链接", "详细内容"]) headers = { 'user-agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.82 Safari/537.36', } link = 'http://www.biketo.com/beginnerguide/' r = requests.get(link, headers=headers, timeout=10) soup = BeautifulSoup(r.text, "lxml") li_list = soup.find_all('li')[4:140] for each in li_list: txt=each.find('a').string href=each.find('a')['href'] print(txt + " " + href) Response = requests.get(href, headers=headers, timeout=10) soup1 = BeautifulSoup(Response.text, "lxml") div_list = soup1.find_all('p', style='text-indent:2em;') #div_list= soup1.find_all('div',class_='article-content') txt1=[] for each1 in div_list: if(each1.string is not None): txt1.append(each1.string) #print(*txt1) csv_writer.writerow([txt, href,*txt1]) if __name__ == '__main__': paqu()

获得的数据:

是多疑还是去相信

谎言背后的忠心

或许是自己太执迷

命题游戏

沿着他的脚步 呼吸开始变得急促

就算看清了面目 设下埋伏

真相却居无定处

I swear I'll never be with the devil

用尽一生孤独 没有退路的路

你看不到我

眉眼焦灼却不明下落

命运的轮轴

伺机而动 来不及闪躲

沿着他的脚步 呼吸开始变得急促

就算看清了面目 设下埋伏

真相却居无定处

I swear I'll never be with the devil

用尽一生孤独 没有退路的路

你看不到我

眉眼焦灼却不明下落

命运的轮轴

伺机而动 来不及闪躲

你看不到我

眉眼焦灼却不明下落

命运的轮轴

伺机而动 来不及闪躲

黑夜和白昼

你争我夺 真相被蛊惑

心从不退缩

这天堂荒漠 留给孤独的猎手