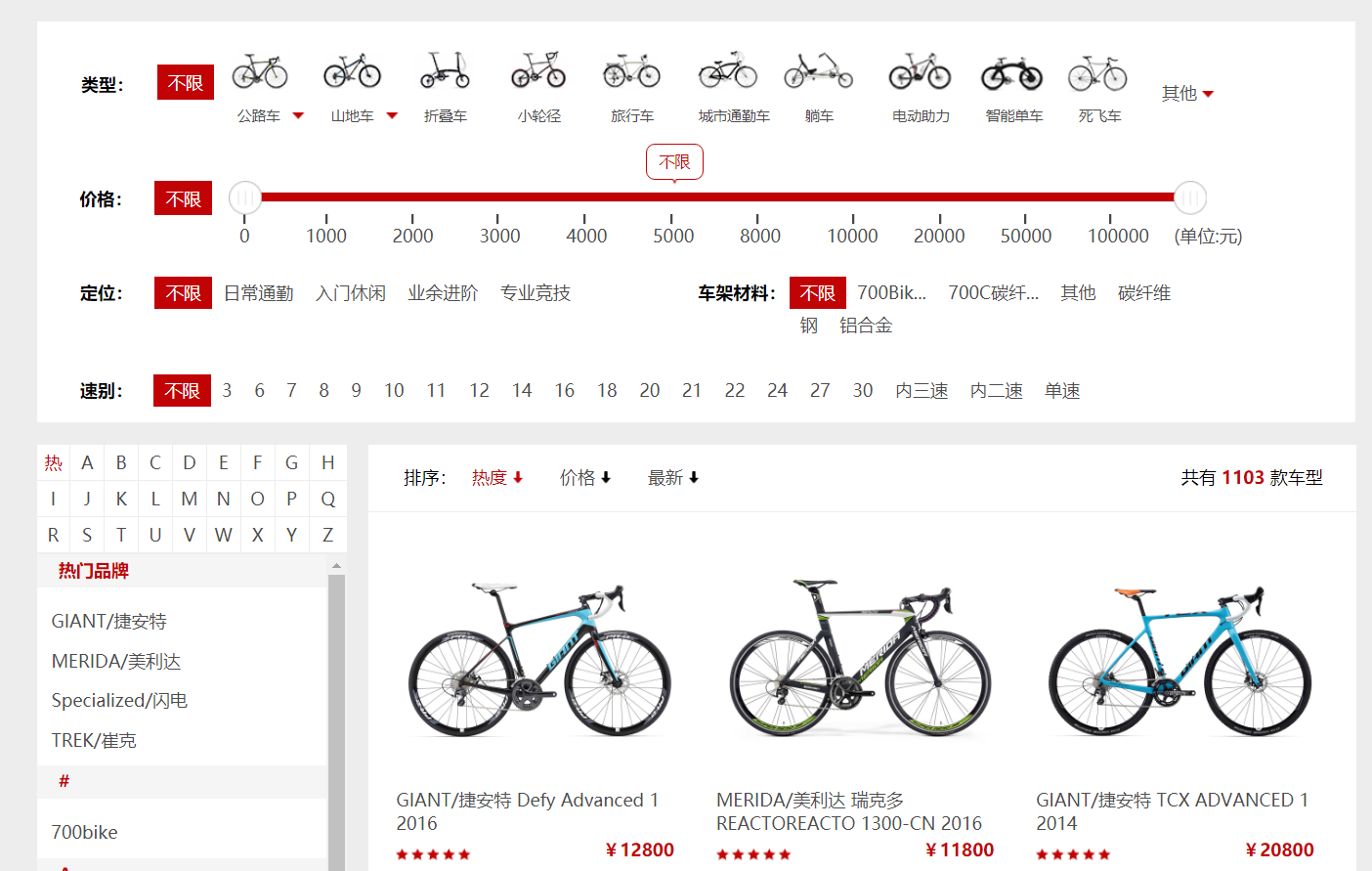

A crawler for chinabike and 美骑网 (Biketo)

Sites crawled: http://www.chinabike.net/ and http://www.biketo.com/

    import csv
    import json
    import random

    import requests

    # Pool of User-Agent strings, so each request can present a different browser.
    user_agent_list = [
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.103 Safari/537.36",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.6; rv:2.0.1) Gecko/20100101 Firefox/4.0.1",
        "Mozilla/5.0 (Macintosh; U; Intel Mac OS X 10_6_8; en-us) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50",
        "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)",
    ]


    def paqu(i):
        """Fetch page i of the product list and append its rows to the CSV file."""
        url = ('http://p.biketo.com/ajax/filterList?cate_id=0&brand_id=0&low=0&high=0'
               '&niche=&material=&transmission=&wheel_diameter=&tags_id=&page='
               + str(i) + '&sort=hot&sort_up=1')
        # Pick a random User-Agent for every request.
        headers = {'user-agent': random.choice(user_agent_list)}
        response = requests.get(url, headers=headers)
        jd = json.loads(response.text)
        data_list = jd['data']['data']

        # Open in append mode and close the file automatically when done.
        with open('自行车.csv', 'a+', encoding='utf-8', newline='') as f:
            csv_writer = csv.writer(f)
            if i == 1:
                # Header row: name, price, category, image URL.
                csv_writer.writerow(["名字", "价格", "种类", "图片地址"])
            for a in data_list:
                price = a['price'].replace("¥", "")  # drop the currency symbol
                csv_writer.writerow([a['name'], price, a['category'], a['photo']])
                print(a['name'] + " " + price + " " + a['category'] + " " + a['photo'])


    if __name__ == '__main__':
        # Crawl pages 1 through 46.
        for i in range(1, 47):
            paqu(i)
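
The script mixes URL construction, the HTTP request, and CSV writing in one function, which makes it hard to check without hitting the site. A minimal sketch of one way to separate the pure parts is shown here. The helper names `build_url` and `extract_rows` are hypothetical (not in the original), the response shape `{'data': {'data': [...]}}` is assumed from the keys the script reads, and the sample payload is invented purely for illustration.

```python
from urllib.parse import urlencode

# Endpoint taken from the script; the helper names below are hypothetical.
BASE_URL = "http://p.biketo.com/ajax/filterList"


def build_url(page):
    """Build the list URL for one page, with the same query string as the script."""
    params = {
        "cate_id": 0, "brand_id": 0, "low": 0, "high": 0,
        "niche": "", "material": "", "transmission": "",
        "wheel_diameter": "", "tags_id": "",
        "page": page, "sort": "hot", "sort_up": 1,
    }
    return BASE_URL + "?" + urlencode(params)


def extract_rows(payload):
    """Turn a decoded JSON response into CSV rows: name, price, category, photo."""
    rows = []
    # Assumption: products live under payload['data']['data'], as in the script.
    for item in payload["data"]["data"]:
        price = item["price"].replace("¥", "")  # drop the currency symbol
        rows.append([item["name"], price, item["category"], item["photo"]])
    return rows


if __name__ == "__main__":
    # Invented sample data, used only to exercise the helpers offline.
    sample = {"data": {"data": [
        {"name": "Example Bike", "price": "¥1999",
         "category": "road", "photo": "http://example.com/a.jpg"},
    ]}}
    print(build_url(1))
    print(extract_rows(sample))
```

With helpers like these, the function that performs `requests.get` only needs to pass `response.json()` to `extract_rows` and write the returned rows with `csv.writer`.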
