block: cfq 学习01

CFQ,即Completely Fair Queueing绝对公平调度器,力图为竞争块设备使用权的所有进程分配一个等同的时间片,在调度器分配给进程的时间片内,进程可以将其读写请求发送给底层块设备,当进程的时间片消耗完,进程的请求队列将被挂起,等待调度。相对于Noop和Deadline调度器,CFQ要复杂得多,因此可能要分几次才能将其分析完。

优先级

每个进程都会有一个IO优先级,CFQ调度器将会将其作为考虑的因素之一,来确定该进程的请求队列何时可以获取块设备的使用权。IO优先级从高到低可以分为三大类:RT(real time),BE(best try),IDLE(idle),其中RT和BE又可以再划分为8个子优先级。实际上,我们已经知道CFQ调度器的公平是针对于进程而言的,而只有同步请求(read或syn write)才是针对进程而存在的,他们会放入进程自身的请求队列,而所有同优先级的异步请求,无论来自于哪个进程,都会被放入公共的队列,异步请求的队列总共有8(RT)+8(BE)+1(IDLE)=17个。

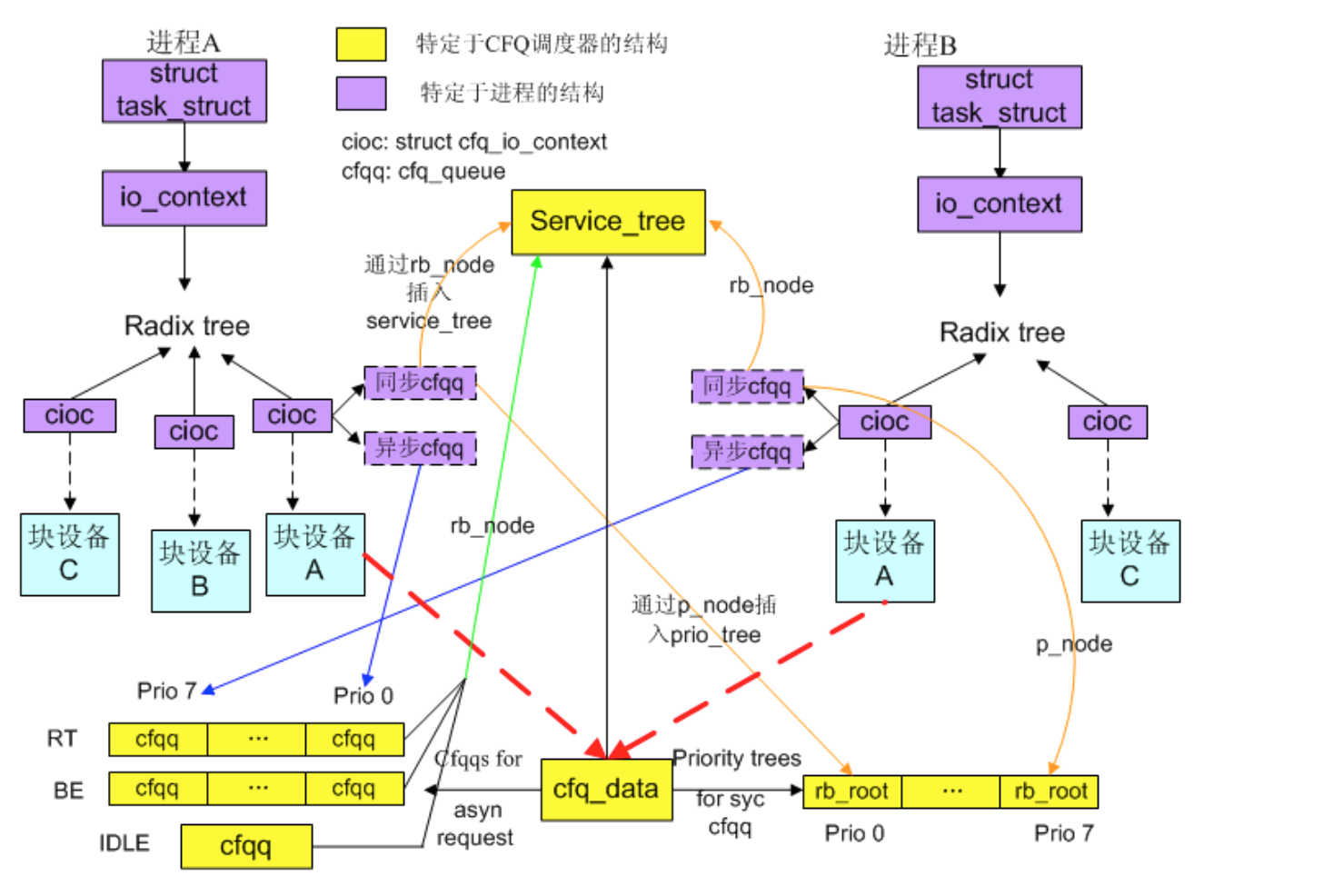

调度器的结构

CFQ调度器在整个工作过程中所涉及到的结构比较多,我们可以把这些结构分为两类,一类是用来描述调度器本身相关的结构,由于CFQ将进程作为考虑对象,因此另一类结构就是特定于进程的结构,对于这些结构,我们只选择其内部的重要元素进行分析。和调度器相关的数据结构主要有两个,

一个是描述调度器的struct cfq_data

一个是描述队列的struct cfq_queue。

struct cfq_data {

struct request_queue *queue;

/*

* rr list of queues with requests and the count of them

*/

struct cfq_rb_root service_tree;

/*

* Each priority tree is sorted by next_request position. These

* trees are used when determining if two or more queues are

* interleaving requests (see cfq_close_cooperator).

*/

struct rb_root prio_trees[CFQ_PRIO_LISTS];

unsigned int busy_queues;

int rq_in_driver[2];

int sync_flight;

/*

* queue-depth detection

*/

int rq_queued;

int hw_tag;

int hw_tag_samples;

int rq_in_driver_peak;

/*

* idle window management

*/

struct timer_list idle_slice_timer;

struct work_struct unplug_work;

struct cfq_queue *active_queue;

struct cfq_io_context *active_cic;

/*

* async queue for each priority case

*/

struct cfq_queue *async_cfqq[2][IOPRIO_BE_NR];

struct cfq_queue *async_idle_cfqq;

sector_t last_position;

/*

* tunables, see top of file

*/

unsigned int cfq_quantum;

unsigned int cfq_fifo_expire[2];

unsigned int cfq_back_penalty;

unsigned int cfq_back_max;

unsigned int cfq_slice[2];

unsigned int cfq_slice_async_rq;

unsigned int cfq_slice_idle;

unsigned int cfq_latency;

struct list_head cic_list;

/*

* Fallback dummy cfqq for extreme OOM conditions

*/

struct cfq_queue oom_cfqq;

unsigned long last_end_sync_rq;

};

queue:指向块设备对应的request_queue

service_tree:所有待调度的队列都被添加进该红黑树,等待调度获取时间片

prio_trees[CFQ_PRIO_LISTS]:对应8个优先级的红黑树,所有优先级类别为RT或BE的进程的同步请求队列,都会根据优先级添加至相应的红黑树

busy_queues:用于计算service_tree中有多少个队列在等待调度

active_queue:指向当前占有块设备的队列

async_cfqq[2][IOPRIO_BE_NR]:对应RT和BE优先级类的16个异步请求队列

async_idle_cfqq:对应优先级类别为IDLE的异步请求队列

cfq_quantum:用于计算在一个队列的时间片内,最多发放多少个请求到底层的块设备

cfq_fifo_expire[2]:同步、异步请求的响应期限时间

cfq_slice[2]:同步、异步请求队列的时间片长度

struct cfq_queue {

/* reference count */

atomic_t ref;

/* various state flags, see below */

unsigned int flags;

/* parent cfq_data */

struct cfq_data *cfqd;

/* service_tree member */

struct rb_node rb_node;

/* service_tree key */

unsigned long rb_key;

/* prio tree member */

struct rb_node p_node;

/* prio tree root we belong to, if any */

struct rb_root *p_root;

/* sorted list of pending requests */

struct rb_root sort_list;

/* if fifo isn't expired, next request to serve */

struct request *next_rq;

/* requests queued in sort_list */

int queued[2];

/* currently allocated requests */

int allocated[2];

/* fifo list of requests in sort_list */

struct list_head fifo;

unsigned long slice_end;

long slice_resid;

unsigned int slice_dispatch;

/* pending metadata requests */

int meta_pending;

/* number of requests that are on the dispatch list or inside driver */

int dispatched;

/* io prio of this group */

unsigned short ioprio, org_ioprio;

unsigned short ioprio_class, org_ioprio_class;

unsigned int seek_samples;

u64 seek_total;

sector_t seek_mean;

sector_t last_request_pos;

unsigned long seeky_start;

pid_t pid;

struct cfq_queue *new_cfqq;

};

cfqd:指向队列所属的cfq_data

rb_node:用于将队列插入service_tree

rb_key:红黑树节点关键值,用于确定队列在service_tree中的位置,该值要综合jiffies,进程的IO优先级等因素进行计算

p_node:用于将队列插入对应优先级的prio_tree

p_root:对应的prio_tree树根

sort_list:组织队列内的请求用的红黑树,按请求的起始扇区进行排序

fifo:组织队列内的请求用的链表头,按请求的响应期限排序

slice_end:指明时间片何时消耗完

slice_dispatch:在时间片内发送的请求数

ioprio:进程的当前IO优先级

相对于进程的结构有struct io_context和struct cfq_io_context。io_context的核心结构是一个基数树,里面组织了进程所访问的所有块设备所对应的cfq_io_context。cfq_io_context中的核心结构是两个队列,也就是进程在一个CFQ调度器所关系到的队列,一个是同步的,一个是异步的,下面是我根据自己的理解画的一张关系图:

REF

cfq参数: https://www.kernel.org/doc/Documentation/block/cfq-iosched.txt