Measuring the IOPS and throughput of an Alibaba Cloud ECS cloud disk with sysbench

Testing Alibaba Cloud ECS

Target: a cloud disk attached to an ECS instance purchased on aliyun. sysbench is used to measure the disk's IOPS and throughput.

sysbench prepare

Prepare the test files: 10 files of 1G each.

[root@iZwz9fy718twfih4bjs551Z ~]# sysbench --test=fileio --file-total-size=10G --file-num=10 prepare

sysbench 0.4.12: multi-threaded system evaluation benchmark

10 files, 1048576Kb each, 10240Mb total

Creating files for the test...

As the iostat samples below show, the prepare phase already writes to the disk.

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 1.04 0.00 72.92 0.00 34016.67 933.03 85.64 344.51 0.00 344.51 9.50 69.27

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 0.00 0.00 116.67 0.00 56058.33 961.00 124.54 946.51 0.00 946.51 8.93 104.17

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 0.00 1.03 130.93 4.12 62886.60 953.19 129.84 1042.84 402.00 1047.88 7.81 103.09

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 0.00 0.00 117.02 0.00 56578.72 966.98 136.09 1116.11 0.00 1116.11 9.09 106.38

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 0.00 0.00 117.89 0.00 56648.42 961.00 134.70 1090.65 0.00 1090.65 8.93 105.26

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 8.16 0.00 114.29 0.00 55297.96 967.71 103.06 1101.99 0.00 1101.99 8.93 102.04
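The columns in these samples can be cross-checked against each other. iostat reports avgrq-sz in 512-byte sectors, so it should come out close to (rkB/s + wkB/s) * 2 / (r/s + w/s). The sketch below is only a sanity check; the function name avg_request_sectors is made up for illustration, and it assumes the 14-column layout shown in the headers above.

```shell
# avg_request_sectors R_PER_S W_PER_S RKB_PER_S WKB_PER_S
# Recompute iostat's avgrq-sz (in 512-byte sectors) from the request-rate
# and throughput columns of the same sample.
avg_request_sectors() {
    awk -v r="$1" -v w="$2" -v rkb="$3" -v wkb="$4" 'BEGIN {
        if (r + w == 0) { print "0.00"; exit }
        # 1 kB equals 2 sectors of 512 bytes
        printf "%.2f\n", (rkb + wkb) * 2 / (r + w)
    }'
}

# Third sample above: r/s=0.00 w/s=116.67 rkB/s=0.00 wkB/s=56058.33
avg_request_sectors 0.00 116.67 0.00 56058.33
```

For that sample the result is 960.97, against the 961.00 that iostat printed, so each write request during prepare is roughly 480 kB.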

[root@iZwz9fy718twfih4bjs551Z ~]# ls

test_file.0 test_file.2 test_file.4 test_file.6 test_file.8

test_file.1 test_file.3 test_file.5 test_file.7 test_file.9

sysbench run

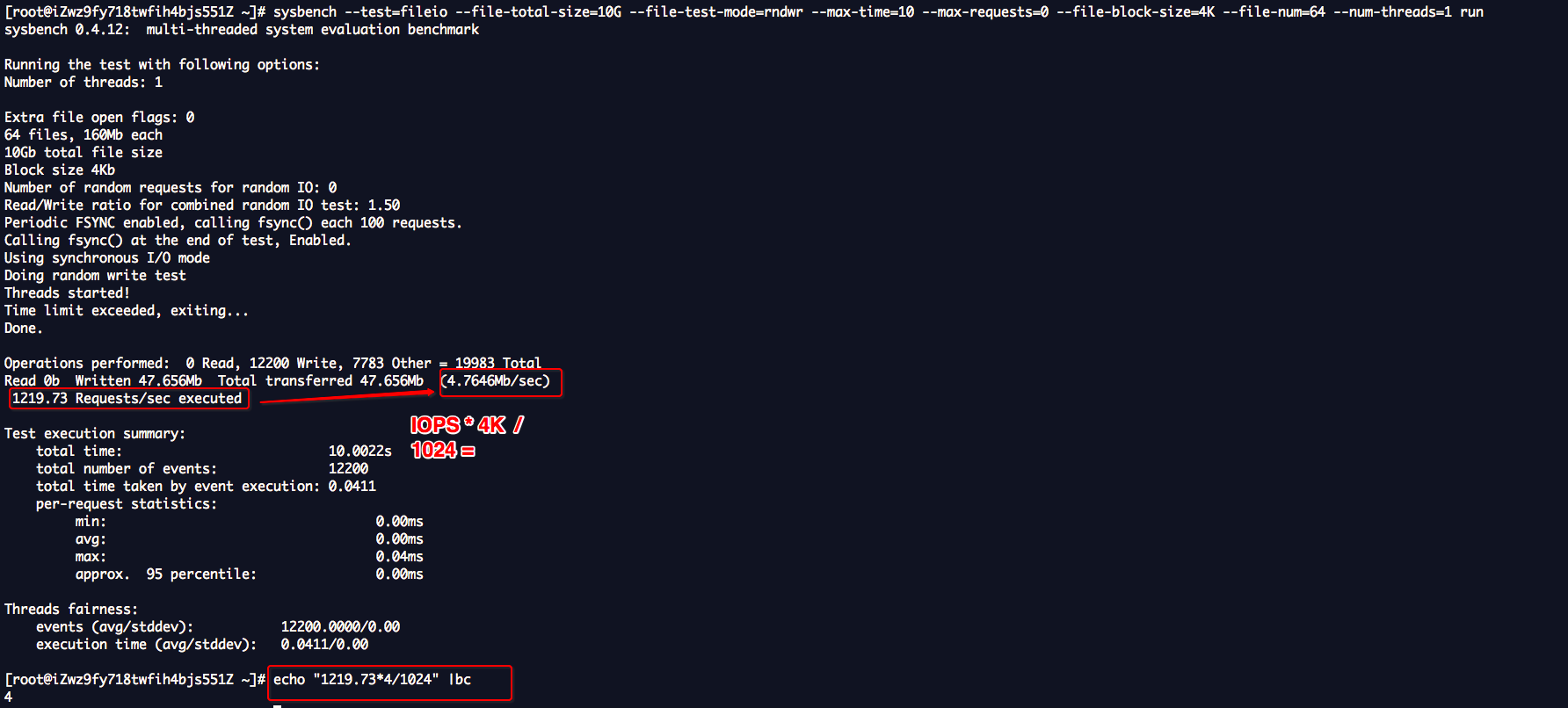

Be sure to set the block size to 4K. Test mode: random write; number of threads: 1.

[root@iZwz9fy718twfih4bjs551Z ~]# sysbench --test=fileio --file-total-size=10G --file-test-mode=rndwr --max-time=60 --max-requests=0 --file-block-size=4K --file-num=64 --num-threads=1 run

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 912.00 0.00 1111.00 0.00 7616.00 13.71 1.36 1.23 0.00 1.23 0.68 75.80

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 1389.90 3.03 1403.03 12.12 10557.58 15.03 1.71 1.20 0.67 1.20 0.70 98.59

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 1457.58 1.01 1381.82 4.04 10820.20 15.66 1.66 1.21 1.00 1.21 0.71 97.98

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 1451.49 0.99 1352.48 3.96 10712.87 15.84 1.32 0.98 1.00 0.98 0.71 95.54

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

vda 0.00 1533.33 0.00 1383.84 0.00 11159.60 16.13 1.47 1.06 0.00 1.06 0.71 98.69

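Analysis of the results:

- The most important figure is IOPS: 664.62 Requests/sec executed. An ordinary HDD typically delivers only a few hundred IOPS.

- Whenever IOPS is quoted, the block size it was measured at must be stated as well. IOPS at a 4K block size and IOPS at a 16K block size are not comparable numbers. In general, the smaller the block, the higher the IOPS.

- The IOPS value also depends on --num-threads. However, once a single thread is enough to reach the IOPS ceiling, adding more threads does not raise IOPS any further; instead the ratio of w_await to svctm keeps growing. A test of this follows later. w_await is the time a write request spends waiting, queueing time included.

- Another reference figure is throughput: Total transferred 155.86Mb (2.5962Mb/sec)

- The total amount transferred, 155.86Mb, means little by itself, because it simply grows with the run time. The rate, 2.5962Mb/sec, is the meaningful number; throughput is normally quoted in MB/s.

  Throughput = IOPS * 4K / 1024 = 664.62 Requests/sec executed * 4K / 1024 = 2.5962Mb/sec

- approx. 95 percentile: 0.01ms

  This is another important metric: 95% of the requests completed within this time. It is a percentile, not an average.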
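The throughput arithmetic above is easy to repeat for other runs. A minimal sketch, assuming the block size is given in KB and the result is wanted in the same "Mb" (1024 KB) unit that sysbench prints; the function name throughput_mb is only illustrative:

```shell
# throughput_mb IOPS BLOCK_KB
# Throughput in MB/s = requests per second * block size in KB / 1024.
throughput_mb() {
    awk -v iops="$1" -v kb="$2" 'BEGIN { printf "%.4f\n", iops * kb / 1024 }'
}

# The run analysed above: 664.62 requests/sec at a 4K block size
throughput_mb 664.62 4
```

This prints 2.5962, the same figure sysbench reports in its "Total transferred" line.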

[root@iZwz9fy718twfih4bjs551Z ~]# sysbench --test=fileio --file-total-size=10G --file-test-mode=rndwr --max-time=60 --max-requests=0 --file-block-size=4K --file-num=64 --num-threads=1 run

sysbench 0.4.12: multi-threaded system evaluation benchmark

Running the test with following options:

Number of threads: 1

Extra file open flags: 0

64 files, 160Mb each

10Gb total file size

Block size 4Kb

Number of random requests for random IO: 0

Read/Write ratio for combined random IO test: 1.50

Periodic FSYNC enabled, calling fsync() each 100 requests.

Calling fsync() at the end of test, Enabled.

Using synchronous I/O mode

Doing random write test

Threads started!

Time limit exceeded, exiting...

Done.

Operations performed: 0 Read, 39900 Write, 25493 Other = 65393 Total

Read 0b Written 155.86Mb Total transferred 155.86Mb (2.5962Mb/sec)

664.62 Requests/sec executed

Test execution summary:

total time: 60.0344s

total number of events: 39900

total time taken by event execution: 0.2442

per-request statistics:

min: 0.00ms

avg: 0.01ms

max: 1.91ms

approx. 95 percentile: 0.01ms

Threads fairness:

events (avg/stddev): 39900.0000/0.00

execution time (avg/stddev): 0.2442/0.00
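To make the difference between a 95th percentile and an average concrete, here is a small nearest-rank percentile sketch. It is not how sysbench computes its "approx." figure, and the latency values fed to it are invented for illustration (nineteen requests at 0.01 ms and one at 1.91 ms); the function name percentile is likewise hypothetical.

```shell
# percentile P
# Read one latency value per line on stdin and print the nearest-rank
# P-th percentile (P is an integer from 1 to 100).
percentile() {
    sort -n | awk -v p="$1" '
        { v[NR] = $1 }
        END {
            if (NR == 0) exit 1
            rank = int((p * NR + 99) / 100)   # ceil(p * NR / 100) for integer p
            if (rank < 1) rank = 1
            print v[rank]
        }'
}

# Invented sample: 19 fast requests and one slow outlier
{
    i=0
    while [ "$i" -lt 19 ]; do echo 0.01; i=$((i + 1)); done
    echo 1.91
} | percentile 95
```

With this sample the 95th percentile is 0.01, while the mean would be about 0.1: a single slow request moves the average but not the percentile.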

Actual number of writes (IOPS multiplied by total time): 39900.0629

[root@iZwz9fy718twfih4bjs551Z ~]# echo "664.62*60.0344" | bc

39900.0629

Alibaba Cloud disk documentation

https://help.aliyun.com/document_detail/25382.html?spm=5176.doc25383.6.549.13pyGB

https://help.aliyun.com/document_detail/25383.html?spm=5176.doc25382.2.2.FeSCI4

Clean up the prepared files

sysbench --test=fileio --file-total-size=10G --file-num=10 cleanup

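With the thread count as the variable, the main effect is on the w_await:svctm ratio

Why does w_await rise as the thread count grows? Too many I/O requests arrive in one burst, IOPS hits its ceiling immediately, and the requests have to queue. The service time svctm does not rise, which shows that the device handles each request about as efficiently as before. Both values are reported in milliseconds.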

[root@jiangyi01.sqa.zmf /home/ahao.mah]

#for each in 1 4 8 16 32 64; do sysbench --test=fileio --file-total-size=20G --file-test-mode=rndwr --max-time=10 --max-requests=0 --file-block-size=4K --file-num=5 --num-threads=$each run; sleep 3; done | tee log1

[root@jiangyi01.sqa.zmf /home/ahao.mah]

#cat log1 | grep "Requests/sec executed" -B1

Read 0b Written 7.8125Mb Total transferred 7.8125Mb (797.98Kb/sec)

199.49 Requests/sec executed

--

Read 0b Written 8.2031Mb Total transferred 8.2031Mb (818.76Kb/sec)

204.69 Requests/sec executed

--

Read 0b Written 9.375Mb Total transferred 9.375Mb (907.82Kb/sec)

226.96 Requests/sec executed

--

Read 0b Written 10.156Mb Total transferred 10.156Mb (998.38Kb/sec)

249.59 Requests/sec executed

--

Read 0b Written 10.938Mb Total transferred 10.938Mb (1.0035Mb/sec)

256.90 Requests/sec executed

--

Read 0b Written 13.281Mb Total transferred 13.281Mb (1.156Mb/sec)

295.94 Requests/sec executed
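The grep above loses track of which result belongs to which thread count. A small awk sketch can pair them up, assuming each run in the log prints a "Number of threads:" line before its "Requests/sec executed" line, as the sysbench 0.4.12 output earlier in this post does. The function name summarize_iops is illustrative only.

```shell
# summarize_iops
# Read sysbench fileio output on stdin and print one "threads IOPS"
# pair per run.
summarize_iops() {
    awk '
        /Number of threads:/     { threads = $4 }
        /Requests\/sec executed/ { print threads, $1 }
    '
}

# Abbreviated sample built from the first two runs above; the real input
# would be the full log, e.g.  summarize_iops < log1
summarize_iops <<'EOF'
Number of threads: 1
Read 0b Written 7.8125Mb Total transferred 7.8125Mb (797.98Kb/sec)
199.49 Requests/sec executed
Number of threads: 4
Read 0b Written 8.2031Mb Total transferred 8.2031Mb (818.76Kb/sec)
204.69 Requests/sec executed
EOF
```

On the sample this prints "1 199.49" and "4 204.69", one line per run.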

[root@jiangyi01.sqa.zmf /home/ahao.mah]

#cat log2 | grep sda

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

sda 0.08 2.84 0.09 3.47 1.56 203.27 115.22 0.20 55.90 5.45 57.16 3.33 1.18

sda 0.00 9.00 0.00 2.00 0.00 44.00 44.00 0.03 16.00 0.00 16.00 16.00 3.20

sda 0.00 10.00 0.00 118.00 0.00 504.00 8.54 1.37 11.06 0.00 11.06 4.62 54.50

sda 0.00 5.00 0.00 141.00 0.00 564.00 8.00 1.56 11.15 0.00 11.15 7.09 100.00

sda 0.00 15.00 0.00 213.00 0.00 884.00 8.30 1.77 8.52 0.00 8.52 4.69 100.00

sda 0.00 9.00 0.00 197.00 0.00 796.00 8.08 1.66 8.19 0.00 8.19 5.07 99.80

sda 0.00 9.00 0.00 215.00 0.00 860.00 8.00 1.74 8.08 0.00 8.08 4.66 100.10

sda 0.00 11.00 0.00 224.00 0.00 908.00 8.11 1.66 7.52 0.00 7.52 4.45 99.60

sda 0.00 12.00 0.00 232.00 0.00 940.00 8.10 1.82 7.88 0.00 7.88 4.30 99.80

sda 0.00 6.00 0.00 192.00 0.00 764.00 7.96 3.28 16.90 0.00 16.90 5.21 100.00

sda 0.00 11.00 0.00 212.00 0.00 860.00 8.11 1.66 7.68 0.00 7.68 4.71 99.90

sda 0.00 12.00 0.00 232.00 0.00 940.00 8.10 1.71 7.37 0.00 7.37 4.29 99.60

sda 0.00 6.00 0.00 110.00 0.00 448.00 8.15 0.83 8.37 0.00 8.37 4.34 47.70

sda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sda 0.00 0.00 0.00 5.00 0.00 68.00 27.20 0.00 0.80 0.00 0.80 0.20 0.10

sda 0.00 14.00 0.00 150.00 0.00 656.00 8.75 11.80 77.16 0.00 77.16 3.43 51.50

sda 0.00 10.00 0.00 182.00 0.00 764.00 8.40 4.72 26.50 0.00 26.50 5.49 100.00

sda 0.00 5.00 0.00 132.00 0.00 548.00 8.30 7.64 23.89 0.00 23.89 7.57 99.90

sda 0.00 13.00 0.00 257.00 0.00 1056.00 8.22 16.09 80.45 0.00 80.45 3.89 100.00

sda 0.00 13.00 0.00 250.00 0.00 1040.00 8.32 6.77 27.18 0.00 27.18 4.00 99.90

sda 0.00 9.00 0.00 177.00 0.00 744.00 8.41 4.98 26.83 0.00 26.83 5.65 100.00

sda 0.00 9.00 0.00 238.00 0.00 976.00 8.20 10.79 39.32 0.00 39.32 4.20 100.00

sda 0.00 19.00 0.00 222.00 0.00 944.00 8.50 12.05 61.05 0.00 61.05 4.50 100.00

sda 0.00 12.00 0.00 245.00 0.00 1028.00 8.39 5.67 23.58 0.00 23.58 4.08 100.00

sda 0.00 10.00 0.00 199.00 0.00 820.00 8.24 25.78 128.58 0.00 128.58 5.03 100.00

sda 0.00 10.00 0.00 143.00 0.00 608.00 8.50 3.12 23.65 0.00 23.65 5.20 74.40

sda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sda 0.00 9.00 0.00 138.00 0.00 552.00 8.00 31.23 59.28 0.00 59.28 1.81 25.00

sda 0.00 0.00 0.00 104.00 0.00 452.00 8.69 49.96 656.34 0.00 656.34 9.62 100.00

sda 0.00 7.00 0.00 215.00 0.00 860.00 8.00 44.06 199.61 0.00 199.61 4.65 100.00

sda 0.00 12.00 0.00 268.00 0.00 1096.00 8.18 40.68 126.00 0.00 126.00 3.73 100.00

sda 0.00 12.00 0.00 267.00 0.00 1068.00 8.00 55.23 243.07 0.00 243.07 3.75 100.00

sda 0.00 5.00 0.00 199.00 0.00 844.00 8.48 15.97 73.91 0.00 73.91 5.03 100.00

sda 0.00 14.00 0.00 313.00 0.00 1348.00 8.61 36.61 121.29 0.00 121.29 3.19 100.00

sda 0.00 6.00 0.00 181.00 0.00 732.00 8.09 17.14 110.00 0.00 110.00 5.52 100.00

sda 0.00 9.00 0.00 306.00 0.00 1232.00 8.05 30.18 85.80 0.00 85.80 3.27 100.00

sda 0.00 7.00 0.00 191.00 0.00 796.00 8.34 22.58 138.93 0.00 138.93 5.23 99.90

sda 0.00 9.00 0.00 268.00 0.00 1104.00 8.24 17.60 47.21 0.00 47.21 3.74 100.10

sda 0.00 0.00 0.00 21.00 0.00 76.00 7.24 4.83 477.67 0.00 477.67 15.43 32.40

sda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sda 0.00 0.00 0.00 251.00 0.00 1004.00 8.00 92.04 206.95 0.00 206.95 2.67 67.00

sda 0.00 7.00 0.00 158.00 0.00 660.00 8.35 51.55 578.28 0.00 578.28 6.33 100.00

sda 0.00 9.00 0.00 219.00 0.00 896.00 8.18 88.27 174.04 0.00 174.04 4.57 100.00

sda 0.00 10.00 0.00 225.00 0.00 940.00 8.36 72.32 383.53 0.00 383.53 4.44 99.90

sda 0.00 4.00 0.00 282.00 0.00 1144.00 8.11 98.93 426.34 0.00 426.34 3.55 100.00

sda 0.00 13.00 0.00 264.00 0.00 1096.00 8.30 102.65 382.55 0.00 382.55 3.79 100.00

sda 0.00 6.00 0.00 312.00 0.00 1272.00 8.15 105.84 370.33 0.00 370.33 3.21 100.00

sda 0.00 13.00 0.00 260.00 0.00 1024.00 7.88 84.60 235.59 0.00 235.59 3.85 100.00

sda 0.00 6.00 0.00 306.00 0.00 1324.00 8.65 69.52 242.36 0.00 242.36 3.27 100.00

sda 0.00 6.00 0.00 266.00 0.00 1056.00 7.94 96.71 393.21 0.00 393.21 3.76 100.00

sda 0.00 0.00 0.00 106.00 0.00 436.00 8.23 20.53 362.85 0.00 362.85 7.05 74.70

sda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sda 0.00 0.00 0.00 66.00 0.00 280.00 8.48 0.88 13.33 0.00 13.33 0.30 2.00

sda 0.00 0.00 0.00 144.00 0.00 576.00 8.00 33.84 62.20 0.00 62.20 1.72 24.70

sda 0.00 0.00 0.00 251.00 0.00 1004.00 8.00 139.01 437.20 0.00 437.20 3.98 100.00

sda 0.00 14.00 0.00 294.00 0.00 1176.00 8.00 119.51 528.34 0.00 528.34 3.40 100.00

sda 0.00 0.00 0.00 216.00 0.00 868.00 8.04 46.87 192.15 0.00 192.15 4.63 100.00

sda 0.00 1.00 0.00 305.00 0.00 1220.00 8.00 146.43 409.43 0.00 409.43 3.28 100.00

sda 0.00 7.00 0.00 188.00 0.00 784.00 8.34 52.51 517.56 0.00 517.56 5.32 100.00

sda 0.00 0.00 0.00 336.00 0.00 1276.00 7.60 118.96 267.04 0.00 267.04 2.98 100.00

sda 0.00 6.93 0.00 296.04 0.00 1211.88 8.19 100.26 431.62 0.00 431.62 3.34 99.01

sda 0.00 7.00 0.00 288.00 0.00 1180.00 8.19 108.21 267.76 0.00 267.76 3.47 100.00

sda 0.00 4.00 0.00 307.00 0.00 1228.00 8.00 126.08 406.97 0.00 406.97 3.26 100.00

sda 0.00 9.00 0.00 204.00 0.00 792.00 7.76 67.40 349.34 0.00 349.34 4.90 100.00

sda 0.00 0.00 0.00 143.00 0.00 572.00 8.00 20.40 354.34 0.00 354.34 4.56 65.20

sda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sda 0.00 0.00 0.00 159.00 0.00 636.00 8.00 48.54 94.02 0.00 94.02 2.14 34.10

sda 0.00 0.00 0.00 260.00 0.00 1040.00 8.00 141.14 515.23 0.00 515.23 3.85 100.00

sda 0.00 0.00 0.00 298.00 0.00 1192.00 8.00 142.06 479.01 0.00 479.01 3.36 100.00

sda 0.00 0.00 0.00 320.00 0.00 1280.00 8.00 139.28 422.16 0.00 422.16 3.12 100.00

sda 0.00 7.00 0.00 250.00 0.00 1032.00 8.26 79.77 495.41 0.00 495.41 4.00 100.00

sda 0.00 0.00 0.00 297.00 0.00 1188.00 8.00 100.28 192.63 0.00 192.63 3.37 100.00

sda 0.00 0.00 0.00 347.00 0.00 1388.00 8.00 139.39 431.21 0.00 431.21 2.88 100.00

sda 0.00 0.00 0.00 310.00 0.00 1240.00 8.00 140.17 452.45 0.00 452.45 3.23 100.00

sda 0.00 14.00 0.00 299.00 0.00 1252.00 8.37 106.89 426.34 0.00 426.34 3.34 100.00

sda 0.00 0.00 0.00 273.00 0.00 1092.00 8.00 92.81 247.36 0.00 247.36 3.66 100.00

sda 0.00 0.00 0.00 344.00 0.00 1376.00 8.00 143.44 430.23 0.00 430.23 2.91 100.00

sda 0.00 8.00 0.00 197.00 0.00 820.00 8.32 76.40 544.16 0.00 544.16 5.08 100.00

sda 0.00 0.00 0.00 26.00 0.00 0.00 0.00 3.82 234.27 0.00 234.27 5.65 14.70

sda 0.00 0.00 9.00 0.00 80.00 0.00 17.78 0.33 36.56 36.56 0.00 11.44 10.30

sda 0.00 0.00 0.00 5.00 0.00 76.00 30.40 0.00 0.80 0.00 0.80 0.20 0.10

sda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sda 0.00 22.00 0.00 2.00 0.00 96.00 96.00 0.02 12.00 0.00 12.00 12.00 2.40

sda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

sda 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
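The w_await:svctm ratio can be pulled straight out of these samples. The sketch below assumes the 14-column iostat layout shown in the header above (w/s in column 5, w_await in column 12, svctm in column 13); other sysstat versions order the columns differently. The function name await_ratio is made up for illustration.

```shell
# await_ratio DEVICE
# Read iostat -x style lines on stdin and print w_await / svctm for every
# sample of DEVICE that has a non-zero service time.
await_ratio() {
    awk -v dev="$1" '
        $1 == dev && $13 > 0 {
            printf "w/s=%s w_await=%s svctm=%s ratio=%.1f\n", $5, $12, $13, $12 / $13
        }
    '
}

# Two lines copied from the log above: one from the first burst and one
# from the last burst. The real input would be e.g.  await_ratio sda < log2
await_ratio sda <<'EOF'
sda 0.00 5.00 0.00 141.00 0.00 564.00 8.00 1.56 11.15 0.00 11.15 7.09 100.00
sda 0.00 0.00 0.00 260.00 0.00 1040.00 8.00 141.14 515.23 0.00 515.23 3.85 100.00
EOF
```

The two sample lines give ratios of 1.6 and 133.8: svctm stays at a few milliseconds while w_await grows by well over an order of magnitude.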

[root@iZwz9fy718twfih4bjs551Z ~]# for each in 1 4 8 16 32 ;do sysbench --test=fileio --file-total-size=10G --file-test-mode=rndwr --max-time=10 --max-requests=0 --file-block-size=4K --file-num=64 --num-threads=$each run ;sleep 3;done

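In practice, the thread count turned out to make no difference here.

Conclusion

The test results are broadly consistent with the figures Alibaba Cloud publishes.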

sysbench fileio options

Sysbench Fileio file-extra-flags

This option allows you to change the flags used when sysbench performs the open syscall. This option can be helpful if you want to test the difference between using caching / buffering as much as possible, or avoiding caching / buffers as much as possible.

--file-extra-flags=$VALUE

O_DIRECT minimizes the cache effects of I/O to and from the file. This can sometimes lower performance, but it can improve performance when the application has its own cache. File I/O is done directly to and from user-space buffers. On its own, O_DIRECT tries to transfer data synchronously, but unlike O_SYNC it does not guarantee that the data and its metadata have been transferred. Use O_SYNC in addition to O_DIRECT if you need to be sure data is always safely written.

O_DSYNC ensures that all data is written to disk before the write is considered complete; each write behaves as if it were followed by fdatasync. This is slightly less safe than O_SYNC, which behaves like a write followed by fsync and so ensures that the metadata is written as well as the data.

O_SYNC is similar to O_DSYNC, which ensures data integrity, but it provides file integrity as well: both the file data and its metadata are guaranteed to be written to disk before the write completes.

Add this argument at the end of the sysbench command line, just before the final "run". sysbench takes the flags by their short names (direct, dsync, sync), which correspond to O_DIRECT, O_DSYNC and O_SYNC:

--file-extra-flags=direct

--file-extra-flags=dsync

--file-extra-flags=sync

For example, you can run a random write test with "--file-extra-flags=direct" by running the command below

sysbench --test=fileio --file-total-size=8G --file-test-mode=rndwr --max-time=240 --max-requests=0 --file-block-size=4K --num-threads=$each --file-extra-flags=direct run
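To compare the open flags side by side, the same command line can be generated once per flag. The sketch below only prints the commands rather than running them, so it can be reviewed first. It assumes the flag values direct, dsync and sync accepted by sysbench's --file-extra-flags option, fixes --num-threads at 1 as an arbitrary choice, and uses a made-up helper name, fileio_cmd.

```shell
# fileio_cmd [FLAG]
# Print (do not run) a 4K random-write sysbench command line, optionally
# with --file-extra-flags=FLAG. An empty FLAG gives the buffered baseline.
fileio_cmd() {
    flag="$1"
    cmd="sysbench --test=fileio --file-total-size=8G --file-test-mode=rndwr"
    cmd="$cmd --max-time=240 --max-requests=0 --file-block-size=4K --num-threads=1"
    if [ -n "$flag" ]; then
        cmd="$cmd --file-extra-flags=$flag"
    fi
    echo "$cmd run"
}

# One command line per variant, baseline first
for f in "" direct dsync sync; do
    fileio_cmd "$f"
done
```

Each printed line can be pasted into a shell once the test files have been prepared with a matching --file-total-size.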

http://man7.org/linux/man-pages/man2/open.2.html

Sysbench Fileio file-io-mode

This option selects the I/O mode sysbench uses for the tests. The default is sync; depending on the OS you can change it to async. This is useful for comparing sync and async performance. Async is typically faster where your version of Linux supports it.

--file-io-mode=$value

Possible values are

--file-io-mode=sync

--file-io-mode=async

--file-io-mode=fastmmap

--file-io-mode=slowmmap

Sysbench Fileio file-block-size

This value defines the block size used for the I/O tests. The sysbench default is 16K (KB). For random I/O tests 4K is the usual choice; for sequential I/O, larger block sizes such as 1M should be used.

--file-block-size=$Number

You can set the block size to any value; the most common sizes are listed below. With smaller blocks, throughput (MB/s) is lower even though IOPS is higher. With larger blocks, throughput goes up.

--file-block-size=4K

--file-block-size=16K

--file-block-size=1M

Sysbench Fileio file-num

This option specifies the number of files to use for the I/O test. The default is 128 files, so with a total test size of 128GB each of the 128 files would be 1GB in size. Adjust it to suit your workload: if your application typically writes to, say, 100 files, set the number to 100.

--file-num=$number

Valid options for this flag are positive numbers

--file-num=1

--file-num=64

--file-num=128

and so on
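The per-file size follows directly from the total size and the file count. A minimal sketch, assuming the total is given in GB and the answer is wanted in MB; the function name per_file_mb is only illustrative:

```shell
# per_file_mb TOTAL_GB FILE_NUM
# Size of each test file in MB when TOTAL_GB is split across FILE_NUM files.
per_file_mb() {
    awk -v gb="$1" -v n="$2" 'BEGIN { printf "%g\n", gb * 1024 / n }'
}

per_file_mb 10 64     # the 4K random-write run: "64 files, 160Mb each"
per_file_mb 10 10     # the prepare step: 10 files of 1G (1024 MB) each
per_file_mb 128 128   # the default file count with a 128GB total
```

The three calls print 160, 1024 and 1024.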

Sysbench Fileio file-fsync-all

This option takes no value. If you add it to a random write test, each write is flushed to disk with fsync before the next write begins. It shows how I/O performs in the most "extreme" case: an application that must ensure every single transaction or write is safely on disk instead of sitting in a buffer to be written out later. Performance will be much lower with this option.

--file-fsync-all

These are the valid options for file-fsync-all

--file-fsync-all

If you omit this option, sysbench uses its default fsync behaviour, which calls fsync after every 100 requests.

Sysbench Fileio file-test-mode

You can select which test sysbench will run by changing the value for file-test-mode

--file-test-mode=$MODE

These are the valid options for file-test-mode

--file-test-mode=seqwr (sequential write test)

--file-test-mode=seqrd (sequential read test)

--file-test-mode=rndwr (random write test)

--file-test-mode=rndrd (random read test)

--file-test-mode=rndrw (random read and write test)