Fixing a Hadoop DataNode That Fails to Start

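This is usually caused by formatting the NameNode more than once. Each format gives the NameNode a new clusterID, but the DataNode's `VERSION` file still holds the clusterID recorded at the first format. The DataNode's ID then no longer matches the NameNode's, and the DataNode refuses to start.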
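To see the mismatch directly, you can compare the `clusterID` entry in the NameNode's `VERSION` file with the one in the DataNode's. Below is a minimal Python sketch. It assumes both files are simple `key=value` lines, as Hadoop writes them. The helper names `read_version` and `cluster_ids_match` are hypothetical.

```python
def read_version(text: str) -> dict:
    """Parse the key=value lines of a Hadoop VERSION file into a dict."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and the '#' timestamp comment Hadoop writes at the top.
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def cluster_ids_match(namenode_version: str, datanode_version: str) -> bool:
    """True only if both VERSION texts carry the same, non-missing clusterID."""
    nn = read_version(namenode_version).get("clusterID")
    dn = read_version(datanode_version).get("clusterID")
    return nn is not None and nn == dn


if __name__ == "__main__":
    # clusterIDs taken from the DataNode log below; other VERSION fields omitted.
    nn = "clusterID=CID-a9130806-4b28-4a93-92b0-41f04d17ca07\n"
    dn = "clusterID=CID-1435a0cf-33c1-4c5e-9473-b88769f60dcd\n"
    print(cluster_ids_match(nn, dn))  # False: the IDs differ
```

In practice you would read the two texts from `current/VERSION` under your NameNode name directory and your DataNode data directory.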

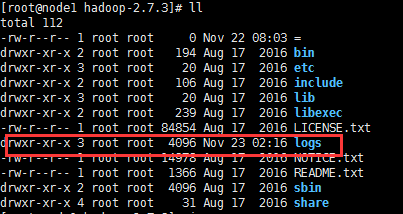

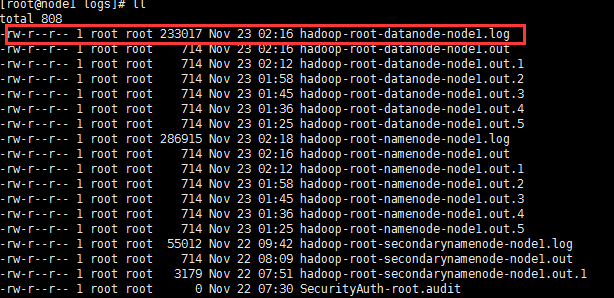

On the worker node, open the DataNode log file (by default under `$HADOOP_HOME/logs`) and scroll to the end. The error looks like this:

```
2018-11-23 02:16:33,807 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: registered UNIX signal handlers for [TERM, HUP, INT]
2018-11-23 02:16:36,416 INFO org.apache.hadoop.metrics2.impl.MetricsConfig: loaded properties from hadoop-metrics2.properties
2018-11-23 02:16:36,881 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Scheduled snapshot period at 10 second(s).
2018-11-23 02:16:36,881 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: DataNode metrics system started
2018-11-23 02:16:36,935 INFO org.apache.hadoop.hdfs.server.datanode.BlockScanner: Initialized block scanner with targetBytesPerSec 1048576
2018-11-23 02:16:36,974 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Configured hostname is node1
2018-11-23 02:16:37,003 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Starting DataNode with maxLockedMemory = 0
2018-11-23 02:16:37,171 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Opened streaming server at /0.0.0.0:50010
2018-11-23 02:16:37,174 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Balancing bandwith is 1048576 bytes/s
2018-11-23 02:16:37,175 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Number threads for balancing is 5
2018-11-23 02:16:37,790 INFO org.mortbay.log: Logging to org.slf4j.impl.Log4jLoggerAdapter(org.mortbay.log) via org.mortbay.log.Slf4jLog
2018-11-23 02:16:37,834 INFO org.apache.hadoop.security.authentication.server.AuthenticationFilter: Unable to initialize FileSignerSecretProvider, falling back to use random secrets.
2018-11-23 02:16:37,871 INFO org.apache.hadoop.http.HttpRequestLog: Http request log for http.requests.datanode is not defined
2018-11-23 02:16:37,914 INFO org.apache.hadoop.http.HttpServer2: Added global filter 'safety' (class=org.apache.hadoop.http.HttpServer2$QuotingInputFilter)
2018-11-23 02:16:37,917 INFO org.apache.hadoop.http.HttpServer2: Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context datanode
2018-11-23 02:16:37,917 INFO org.apache.hadoop.http.HttpServer2: Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context static
2018-11-23 02:16:37,918 INFO org.apache.hadoop.http.HttpServer2: Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context logs
2018-11-23 02:16:38,084 INFO org.apache.hadoop.http.HttpServer2: Jetty bound to port 43865
2018-11-23 02:16:38,084 INFO org.mortbay.log: jetty-6.1.26
2018-11-23 02:16:39,757 INFO org.mortbay.log: Started HttpServer2$SelectChannelConnectorWithSafeStartup@localhost:43865
2018-11-23 02:16:40,913 INFO org.apache.hadoop.hdfs.server.datanode.web.DatanodeHttpServer: Listening HTTP traffic on /0.0.0.0:50075
2018-11-23 02:16:42,063 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: dnUserName = root
2018-11-23 02:16:42,063 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: supergroup = supergroup
2018-11-23 02:16:42,374 INFO org.apache.hadoop.ipc.CallQueueManager: Using callQueue class java.util.concurrent.LinkedBlockingQueue
2018-11-23 02:16:42,494 INFO org.apache.hadoop.ipc.Server: Starting Socket Reader #1 for port 50020
2018-11-23 02:16:42,554 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Opened IPC server at /0.0.0.0:50020
2018-11-23 02:16:42,579 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Refresh request received for nameservices: null
2018-11-23 02:16:42,613 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Starting BPOfferServices for nameservices: <default>
2018-11-23 02:16:42,645 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Block pool <registering> (Datanode Uuid unassigned) service to node1/127.0.0.1:9000 starting to offer service
2018-11-23 02:16:42,666 INFO org.apache.hadoop.ipc.Server: IPC Server Responder: starting
2018-11-23 02:16:42,668 INFO org.apache.hadoop.ipc.Server: IPC Server listener on 50020: starting
2018-11-23 02:16:43,745 INFO org.apache.hadoop.hdfs.server.common.Storage: Using 1 threads to upgrade data directories (dfs.datanode.parallel.volumes.load.threads.num=1, dataDirs=1)
2018-11-23 02:16:43,776 INFO org.apache.hadoop.hdfs.server.common.Storage: Lock on /var/data/hadoop/dfs/data/in_use.lock acquired by nodename 3212@node1
```

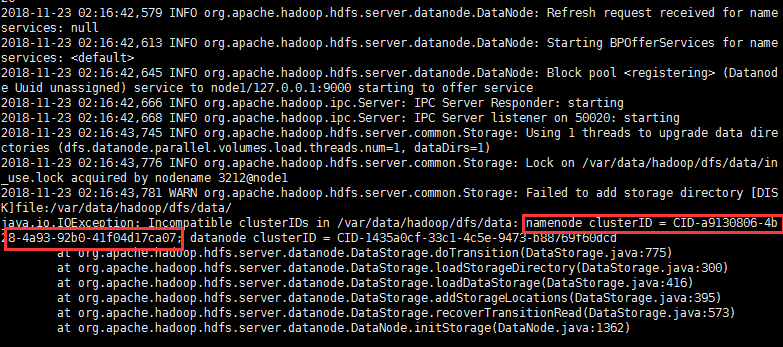

```
2018-11-23 02:16:43,781 WARN org.apache.hadoop.hdfs.server.common.Storage: Failed to add storage directory [DISK]file:/var/data/hadoop/dfs/data/
java.io.IOException: Incompatible clusterIDs in /var/data/hadoop/dfs/data: namenode clusterID = CID-a9130806-4b28-4a93-92b0-41f04d17ca07; datanode clusterID = CID-1435a0cf-33c1-4c5e-9473-b88769f60dcd
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.doTransition(DataStorage.java:775)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.loadStorageDirectory(DataStorage.java:300)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.loadDataStorage(DataStorage.java:416)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.addStorageLocations(DataStorage.java:395)
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:573)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:1362)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1327)
    at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:317)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:223)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:802)
    at java.lang.Thread.run(Thread.java:745)
2018-11-23 02:16:43,789 FATAL org.apache.hadoop.hdfs.server.datanode.DataNode: Initialization failed for Block pool <registering> (Datanode Uuid unassigned) service to node1/127.0.0.1:9000. Exiting.
java.io.IOException: All specified directories are failed to load.
    at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:574)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:1362)
    at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1327)
    at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:317)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:223)
    at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:802)
    at java.lang.Thread.run(Thread.java:745)
2018-11-23 02:16:43,790 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Ending block pool service for: Block pool <registering> (Datanode Uuid unassigned) service to node1/127.0.0.1:9000
2018-11-23 02:16:43,898 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Removed Block pool <registering> (Datanode Uuid unassigned)
2018-11-23 02:16:45,899 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Exiting Datanode
2018-11-23 02:16:45,901 INFO org.apache.hadoop.util.ExitUtil: Exiting with status 0
2018-11-23 02:16:45,904 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down DataNode at node1/127.0.0.1
************************************************************/
```

As shown in the figure, copy the namenode clusterID from this error (here `CID-a9130806-4b28-4a93-92b0-41f04d17ca07`).
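Instead of copying the ID by hand, you can pull it out of the `Incompatible clusterIDs` line with a regular expression. The following is a minimal sketch, assuming the message keeps exactly the wording shown in the log above. The function name `find_cluster_ids` is hypothetical.

```python
import re

# Matches e.g. "Incompatible clusterIDs in /dir: namenode clusterID = CID-...; datanode clusterID = CID-..."
_PATTERN = re.compile(
    r"Incompatible clusterIDs in (?P<dir>\S+): "
    r"namenode clusterID = (?P<nn>\S+); "
    r"datanode clusterID = (?P<dn>\S+)"
)


def find_cluster_ids(log_text: str):
    """Return (data_dir, namenode_id, datanode_id) from the last matching line, or None."""
    matches = list(_PATTERN.finditer(log_text))
    if not matches:
        return None
    # The end of the log reflects the most recent start attempt.
    m = matches[-1]
    return m.group("dir"), m.group("nn"), m.group("dn")


if __name__ == "__main__":
    line = (
        "java.io.IOException: Incompatible clusterIDs in /var/data/hadoop/dfs/data: "
        "namenode clusterID = CID-a9130806-4b28-4a93-92b0-41f04d17ca07; "
        "datanode clusterID = CID-1435a0cf-33c1-4c5e-9473-b88769f60dcd"
    )
    print(find_cluster_ids(line))
```

Taking the last match, rather than the first, reflects the fact that the article reads the log from the end.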

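Next, go to the DataNode's data directory. Its `current` subdirectory contains a `VERSION` file; in this setup the path is:

`/var/data/hadoop/dfs/data/current`

Open `VERSION`, replace the value of `clusterID` with the namenode clusterID copied above, and save the file.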
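The edit can also be scripted. Below is a minimal Python sketch that rewrites only the `clusterID=` line and keeps a `.bak` copy of the original file. It assumes the DataNode is stopped while the file is changed. The function names and the backup naming are hypothetical choices, not part of Hadoop.

```python
import re
from pathlib import Path


def set_cluster_id(version_text: str, new_id: str) -> str:
    """Return VERSION text with its single clusterID line set to new_id."""
    # A lambda replacement avoids backslash-escape processing of new_id.
    updated, count = re.subn(
        r"^clusterID=.*$",
        lambda _m: f"clusterID={new_id}",
        version_text,
        flags=re.MULTILINE,
    )
    if count != 1:
        raise ValueError(f"expected exactly one clusterID line, found {count}")
    return updated


def patch_version_file(path: Path, new_id: str) -> None:
    """Back up VERSION to VERSION.bak, then write it back with the new clusterID."""
    original = path.read_text()
    path.with_name(path.name + ".bak").write_text(original)
    path.write_text(set_cluster_id(original, new_id))


if __name__ == "__main__":
    # Path from this article's setup; adjust to your dfs.datanode.data.dir.
    version = Path("/var/data/hadoop/dfs/data/current/VERSION")
    if version.exists():
        patch_version_file(version, "CID-a9130806-4b28-4a93-92b0-41f04d17ca07")
```

Requiring exactly one match makes the script fail loudly on an unexpected file instead of silently writing nothing.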

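Restart the HDFS services (for example with `stop-dfs.sh` followed by `start-dfs.sh`).

The DataNode now starts and shows up.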
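A common way to confirm that the DataNode is up is the JDK's `jps` tool, which prints one running Java process per line as `<pid> <main class>`. The sketch below checks that output for `DataNode`. The parsing helpers are hypothetical, and the sketch assumes `jps` is on the `PATH`.

```python
import shutil
import subprocess


def running_java_processes(jps_output: str) -> set:
    """Collect main-class names from `jps` output lines of the form '<pid> <name>'."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0].isdigit():
            names.add(parts[1])
    return names


def datanode_is_running(jps_output: str) -> bool:
    """True if a process whose main class is DataNode appears in the output."""
    return "DataNode" in running_java_processes(jps_output)


if __name__ == "__main__":
    if shutil.which("jps"):
        out = subprocess.run(["jps"], capture_output=True, text=True).stdout
        print(datanode_is_running(out))
```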
