Scraping house listings from 58.com (五八同城) and saving them to Excel

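We scrape listings from 58.com so that houses can be compared side by side on unit price, total price, floor, area and other attributes.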
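For that comparison to work, the scraped price and area fields have to be turned into numbers, because they arrive as text. The sketch below assumes values shaped like `16854元/㎡` for unit price, `89㎡` for area and a bare number for total price; these formats and the sample rows are hypothetical, and `to_number` and `unit_price_key` are illustrative helpers, not part of the project. Rows from the real `web1.xlsx` could be read with openpyxl's `load_workbook('web1.xlsx')` and `ws.iter_rows(min_row=2, values_only=True)`.

```python
import re


def to_number(text):
    """Return the first number in a scraped string such as '16854元/㎡', or None.

    Thousands separators (commas) are removed before matching.
    """
    match = re.search(r"\d+(?:\.\d+)?", (text or "").replace(",", ""))
    return float(match.group()) if match else None


def unit_price_key(row):
    """Sort key: cheapest per square metre first, unreadable prices last."""
    price = to_number(row[3])
    return (price is None, price or 0.0)


# Hypothetical rows: (title, area, total price, unit price), all as text.
rows = [
    ("Listing A", "89㎡", "150", "16854元/㎡"),
    ("Listing B", "120.5㎡", "180", "14938元/㎡"),
]

by_unit_price = sorted(rows, key=unit_price_key)
for title, area, total, unit in by_unit_price:
    print(title, to_number(area), to_number(total), to_number(unit))
```

Sorting on a `(missing, value)` tuple keeps listings whose price could not be parsed at the end instead of raising a `TypeError` when comparing `None` with a float.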

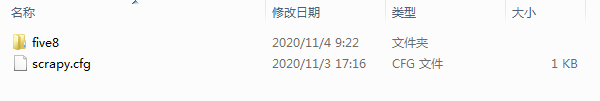

0. First, open a CMD window, change to your working directory and run `scrapy startproject five8`.

In that directory you will see the generated five8 folder and the scrapy.cfg file.

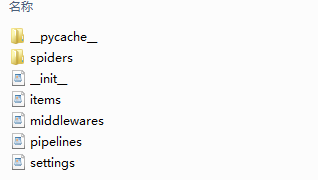

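Inside the five8 folder there is a spiders folder plus five other .py files (`__init__.py`, `items.py`, `middlewares.py`, `pipelines.py`, `settings.py`), all generated automatically. The `__pycache__` folder is a cache created when the program runs and can be ignored.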

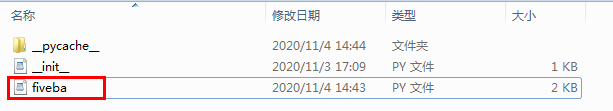

Open the spiders folder and you will find fiveba.py. We added this file ourselves; it holds the crawling rules, which are covered in a later section.

Next, the configuration files.

1 items.py

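```python
import scrapy


class Five8Item(scrapy.Item):
    # One field per attribute scraped from a 58.com second-hand housing listing
    biaoti = scrapy.Field()     # title
    huxing = scrapy.Field()     # layout (rooms/halls)
    mianji = scrapy.Field()     # floor area
    chaoxiang = scrapy.Field()  # orientation
    cenggao = scrapy.Field()    # floor level
    quyu = scrapy.Field()       # district
    zongjia = scrapy.Field()    # total price
    danjia = scrapy.Field()     # unit price
    adtime = scrapy.Field()     # posting time (not filled in by the spider below)
```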
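A `scrapy.Item` behaves like a dict, but only for fields that have actually been assigned: `item['adtime']` raises `KeyError` because the spider never sets it, while `item.get('adtime')` returns `None`. The sketch below is a hypothetical helper, `item_to_row`, that turns any such mapping into a spreadsheet row in a fixed field order; a plain dict stands in for `Five8Item` here so the example runs without Scrapy.

```python
# Field names of Five8Item, in the order a spreadsheet row should use them.
FIELDS = ["biaoti", "huxing", "mianji", "chaoxiang", "cenggao",
          "quyu", "zongjia", "danjia", "adtime"]


def item_to_row(item, fields=FIELDS, default=""):
    """Turn an item (any mapping, including scrapy.Item) into a list of cells.

    Fields that were never assigned become `default` instead of raising KeyError.
    """
    return [item.get(name, default) for name in fields]


row = item_to_row({"biaoti": "Sample listing", "zongjia": "150"})
print(row)  # -> ['Sample listing', '', '', '', '', '', '150', '', '']
```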

2 settings.py

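```python
BOT_NAME = 'five8'

SPIDER_MODULES = ['five8.spiders']
NEWSPIDER_MODULE = 'five8.spiders'

# Obey robots.txt rules
ROBOTSTXT_OBEY = True

# Enable the pipeline in five8/pipelines.py that writes the Excel file
ITEM_PIPELINES = {
    'five8.pipelines.Five8Pipeline': 300,
}

# Encoding for Scrapy's built-in feed exports (scrapy crawl ... -o file);
# it does not affect the openpyxl pipeline
FEED_EXPORT_ENCODING = 'utf-8'
```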

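Note: because the data is exported to Excel through our own pipeline, ITEM_PIPELINES must be set; it registers `Five8Pipeline` from the project's local pipelines.py file.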
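The `300` in ITEM_PIPELINES is a priority: Scrapy passes each item through the enabled pipelines in ascending order of this value (customarily in the 0 to 1000 range), and, as with Scrapy's other component settings, a value of `None` disables an entry. The sketch below is only a plain-Python model of that ordering rule, not Scrapy's own code; the second pipeline, `DropEmptyPipeline`, is hypothetical and added purely to show the ordering.

```python
# Hypothetical settings: a second pipeline is added only to illustrate ordering.
ITEM_PIPELINES = {
    "five8.pipelines.Five8Pipeline": 300,
    "five8.pipelines.DropEmptyPipeline": 100,  # hypothetical
}


def pipeline_order(pipelines):
    """Return enabled pipeline paths in the order items pass through them.

    Lower values run first; entries set to None are treated as disabled.
    """
    enabled = {path: prio for path, prio in pipelines.items() if prio is not None}
    return sorted(enabled, key=enabled.get)


print(pipeline_order(ITEM_PIPELINES))
```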

3 fiveba.py

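This is the spider rule file. You add it yourself under the spiders directory. The spider name defined in it matters most (here it is fiveqq88), because that is the name you pass when launching the crawl.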

```python
# -*- coding: utf-8 -*-
import scrapy

from five8.items import Five8Item


class FivebaSpider(scrapy.Spider):
    name = 'fiveqq88'  # used on the command line: scrapy crawl fiveqq88
    allowed_domains = ['fz.58.com']
    start_urls = ['https://fz.58.com/ershoufang/pn66']

    def parse(self, response):
        # Each <li> under the listing <ul> is one house
        for fang in response.xpath('//ul[@class="house-list-wrap"]/li'):
            item = Five8Item()  # a new item per listing, not one shared object
            info = fang.xpath('./div[@class="list-info"]')
            # .get(default='') avoids the IndexError that .extract()[0] raises on a missing node
            item['biaoti'] = info.xpath('./h2/a/text()').get(default='').strip()
            item['huxing'] = info.xpath('./p[1]/span[1]/text()').get(default='').strip()
            item['mianji'] = info.xpath('./p[1]/span[2]/text()').get(default='').strip()
            item['chaoxiang'] = info.xpath('./p[1]/span[3]/text()').get(default='').strip()
            item['cenggao'] = info.xpath('./p[1]/span[4]/text()').get(default='').strip()
            item['quyu'] = info.xpath('./p[2]/span/a[1]/text()').get(default='').strip()
            item['zongjia'] = fang.xpath('./div[@class="price"]/p[@class="sum"]/b/text()').get(default='').strip()
            item['danjia'] = fang.xpath('./div[@class="price"]/p[@class="unit"]/text()').get(default='').strip()
            # item['adtime'] = fang.xpath('./div[@class="time"]/text()').get(default='').strip()
            yield item

        # Select the href attribute, not the whole <a> element's HTML
        next_url = response.xpath('//div[@class="pager"]/a[@class="next"]/@href').get()
        if next_url:
            # response.follow resolves a relative URL against response.url
            yield response.follow(next_url, callback=self.parse)
```
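The spider's XPaths are all relative to one listing (`./div[...]`), and pagination needs the link's `href` attribute rather than the `<a>` element itself. To try those paths offline, the sketch below runs the same expressions with the standard library's `xml.etree.ElementTree` against a small, hypothetical, well-formed page shaped to match them. The markup, sample values and the `first_text` helper are assumptions for illustration; the real 58.com page differs and is not valid XML, so the spider itself should keep using Scrapy selectors.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urljoin

# Hypothetical, simplified markup shaped to match the XPaths in fiveba.py.
PAGE = """<html><body>
<ul class="house-list-wrap">
  <li>
    <div class="list-info">
      <h2><a>Listing A</a></h2>
      <p><span>Layout A</span><span>89㎡</span><span>South</span><span>Middle floor</span></p>
      <p><span><a>District A</a></span></p>
    </div>
    <div class="price">
      <p class="sum"><b>150</b></p>
      <p class="unit">16854元/㎡</p>
    </div>
  </li>
</ul>
<div class="pager"><a class="next" href="/ershoufang/pn67/">next</a></div>
</body></html>"""


def first_text(node, path, default=""):
    """Text of the first element matching path, or default (like .get(default=''))."""
    found = node.find(path)
    if found is None or found.text is None:
        return default
    return found.text.strip()


root = ET.fromstring(PAGE)
listings = []
for li in root.findall(".//ul[@class='house-list-wrap']/li"):
    info = li.find("./div[@class='list-info']")  # one listing's info block
    listings.append({
        "biaoti": first_text(info, "./h2/a"),
        "huxing": first_text(info, "./p[1]/span[1]"),
        "mianji": first_text(info, "./p[1]/span[2]"),
        "quyu": first_text(info, "./p[2]/span/a[1]"),
        "zongjia": first_text(li, "./div[@class='price']/p[@class='sum']/b"),
        "danjia": first_text(li, "./div[@class='price']/p[@class='unit']"),
    })

# Pagination: read the href attribute of the "next" link, then resolve it
# against the page URL, which is what response.follow does in the spider.
next_link = root.find(".//div[@class='pager']/a[@class='next']")
next_url = None
if next_link is not None and next_link.get("href"):
    next_url = urljoin("https://fz.58.com/ershoufang/pn66", next_link.get("href"))

print(listings)
print(next_url)
```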

4 pipelines.py

pipelines.py controls how the scraped items are exported. Many output formats are possible; the version below uses the openpyxl library to write an .xlsx file.

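```python
from openpyxl import Workbook


class Five8Pipeline:
    def __init__(self):
        # Our own initializer: create the workbook up front and write the header row
        self.wb = Workbook()
        self.ws = self.wb.active
        self.ws.append(['Title', 'Layout', 'Area', 'Orientation', 'Floor',
                        'District', 'Total price', 'Unit price'])

    def process_item(self, item, spider):
        # Collect one listing's fields into a row, in header order
        line = [item['biaoti'], item['huxing'], item['mianji'], item['chaoxiang'],
                item['cenggao'], item['quyu'], item['zongjia'], item['danjia']]
        self.ws.append(line)  # append the row to the worksheet
        return item

    def close_spider(self, spider):
        # Save once when the crawl ends instead of rewriting the file for every item
        self.wb.save('web1.xlsx')
```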
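Since other export formats work too, here is a sketch of a CSV variant that needs only the standard library. `Five8CsvPipeline` and the file name `web1.csv` are hypothetical; it would be enabled by adding `'five8.pipelines.Five8CsvPipeline'` to ITEM_PIPELINES. Scrapy calls `open_spider`, `process_item` and `close_spider` on pipeline objects, so the class needs no Scrapy import; the usage lines drive it by hand the same way. Writing with `utf-8-sig` adds a byte-order mark so Excel recognises the file as UTF-8 and displays Chinese text correctly. For a quick dump without any pipeline, Scrapy's built-in feed export (`scrapy crawl fiveqq88 -o web1.csv`) is another option, and that is where FEED_EXPORT_ENCODING applies.

```python
import csv
import os
import tempfile

FIELDS = ["biaoti", "huxing", "mianji", "chaoxiang", "cenggao",
          "quyu", "zongjia", "danjia"]
HEADER = ["Title", "Layout", "Area", "Orientation", "Floor",
          "District", "Total price", "Unit price"]


class Five8CsvPipeline:
    """Hypothetical CSV alternative to Five8Pipeline (standard library only)."""

    def __init__(self, path="web1.csv"):
        self.path = path

    def open_spider(self, spider):
        # utf-8-sig writes a BOM so Excel detects UTF-8 and shows Chinese correctly
        self.file = open(self.path, "w", newline="", encoding="utf-8-sig")
        self.writer = csv.writer(self.file)
        self.writer.writerow(HEADER)

    def process_item(self, item, spider):
        # Missing fields become empty cells instead of raising KeyError
        self.writer.writerow([item.get(name, "") for name in FIELDS])
        return item

    def close_spider(self, spider):
        self.file.close()


# Usage: drive the pipeline by hand, as Scrapy would, writing into a temp folder.
demo_path = os.path.join(tempfile.mkdtemp(), "web1.csv")
pipeline = Five8CsvPipeline(demo_path)
pipeline.open_spider(spider=None)
pipeline.process_item({"biaoti": "Listing A", "danjia": "16854元/㎡"}, spider=None)
pipeline.close_spider(spider=None)

with open(demo_path, newline="", encoding="utf-8-sig") as f:
    rows = list(csv.reader(f))
print(rows)
```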

5. Run the spider

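Open CMD (if you installed Anaconda, use the Anaconda Prompt instead; a regular CMD needs the Python directory added to the PATH environment variable) and change to the directory that contains scrapy.cfg.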

Then enter `scrapy crawl fiveqq88` to start the crawl.

The exported result is as follows:

Zhejiang Public Security Filing (浙公网安备) No. 33010602011771
