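Setting Up a Kubernetes Debugging Environment

Setting Up a Local K8s Debug Environment on a MacBook

1. Deploying the K8s cluster

A two-node K8s debugging cluster can be built locally with VMware Fusion virtual machines and kubeadm.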

| IP | OS | Role |
| --- | --- | --- |
| 192.168.1.121 | CentOS 7 | kube-apiserver, kube-scheduler, kube-controller-manager, etcd |
| 192.168.1.122 | CentOS 7 | kubelet |

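1.1 After installing the OS on the virtual machine, apply the basic environment settings (you can configure one VM with the common settings and then clone it)

Disable the firewall and SELinux

    systemctl stop firewalld && systemctl disable firewalld
    sed -i '/^SELINUX=/c SELINUX=disabled' /etc/selinux/config
    setenforce 0

Disable swap (newer versions report an error when installed on a machine with swap enabled)

    swapoff -a
    sed -i 's/^.*centos-swap/#&/g' /etc/fstab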
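The sed pattern above only matches when the swap volume is named centos-swap. As a hedged sketch for VMs installed with a different volume name, the helper below comments out any fstab entry whose filesystem type is swap. The function name comment_out_swap is made up for this illustration.

```shell
#!/bin/sh
# comment_out_swap FSTAB
# Hypothetical helper: prefixes every active fstab line whose third field
# (the filesystem type) is "swap" with '#', whatever the device is called.
comment_out_swap() {
    fstab=$1
    tmp=$(mktemp) || return 1
    # Lines that are already commented out are passed through unchanged.
    awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp" &&
        cat "$tmp" > "$fstab"   # overwrite in place so the file keeps its owner and mode
    rc=$?
    rm -f "$tmp"
    return $rc
}
```

On the VM this would be called as `comment_out_swap /etc/fstab` after `swapoff -a`.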

Host entries

    cat << EOF >> /etc/hosts
    192.168.1.121 master
    192.168.1.122 node1
    EOF
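Because the heredoc appends with `>>`, running it again on a cloned VM leaves duplicate lines in /etc/hosts. A minimal sketch of an idempotent variant follows. The helper name add_host_entry is an assumption for illustration and does not come from any tool.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME
# Hypothetical helper: appends "IP NAME" to FILE only when no active line
# already lists NAME as a hostname, so the step can be re-run safely.
add_host_entry() {
    file=$1 ip=$2 name=$3
    # awk exits 0 when NAME appears as a hostname field on a non-comment line.
    if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                         END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Usage would be `add_host_entry /etc/hosts 192.168.1.121 master` followed by `add_host_entry /etc/hosts 192.168.1.122 node1`.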

Kernel parameters and modules

    # Load the br_netfilter module
    modprobe br_netfilter
    cat << EOF > /etc/modules-load.d/k8s.conf
    br_netfilter
    EOF
    # Kernel parameters: enable IP forwarding and let iptables process bridged traffic
    cat << EOF > /etc/sysctl.d/k8s.conf
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    EOF
    # Apply immediately
    sysctl --system
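To confirm the settings actually took effect, one option is to compare every key in the conf file with the live kernel value. The sketch below assumes the simple `key = value` layout used above. The function name verify_sysctl_conf is hypothetical.

```shell
#!/bin/sh
# verify_sysctl_conf CONF
# Hypothetical helper: for each "key = value" line in CONF, compare the
# expected value with what `sysctl -n key` reports right now.
verify_sysctl_conf() {
    conf=$1
    status=0
    while IFS='=' read -r key value; do
        key=$(printf '%s' "$key" | tr -d '[:space:]')
        value=$(printf '%s' "$value" | tr -d '[:space:]')
        # Skip blank lines and comments.
        case $key in ''|'#'*) continue ;; esac
        actual=$(sysctl -n "$key" 2>/dev/null)
        if [ "$actual" != "$value" ]; then
            echo "MISMATCH $key: expected $value, got ${actual:-<unset>}"
            status=1
        fi
    done < "$conf"
    return $status
}
```

Running `verify_sysctl_conf /etc/sysctl.d/k8s.conf` returns non-zero and prints the offending keys if, for example, br_netfilter is not loaded.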

1.2 Installing Docker

    yum install -y yum-utils device-mapper-persistent-data lvm2
    yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
    yum install -y docker-ce
    systemctl enable docker && systemctl start docker

Configure Docker's registry mirror, cgroup driver, and storage driver. Only the cgroup driver is set here.

Kubernetes recommends systemd over cgroupfs. Docker's default cgroup driver is cgroupfs, while kubelet defaults to systemd, so for a more stable system both the container runtime and kubelet should use systemd as the cgroup driver.

    cat << EOF > /etc/docker/daemon.json
    {
      "exec-opts": ["native.cgroupdriver=systemd"]
    }
    EOF
    systemctl daemon-reload
    systemctl restart docker
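After the restart it is worth checking that Docker really picked up the new driver. A small sketch using `docker info` with a Go template follows. The wrapper name check_cgroup_driver is made up for this example.

```shell
#!/bin/sh
# check_cgroup_driver
# Hypothetical helper: succeeds only when the running Docker daemon
# reports "systemd" as its cgroup driver.
check_cgroup_driver() {
    driver=$(docker info --format '{{.CgroupDriver}}') || return 2
    echo "docker cgroup driver: $driver"
    [ "$driver" = "systemd" ]
}
```

If this prints cgroupfs, daemon.json was probably not parsed (for instance because of a JSON syntax error), and a mismatch with the kubelet's driver can surface later.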

1.3 Installing kubeadm and related tools

    cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
    exclude=kubelet kubeadm kubectl
    EOF

    # Set SELinux in permissive mode (effectively disabling it)
    sudo setenforce 0
    sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

    sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

    sudo systemctl enable --now kubelet

1.4 Installing the K8s test cluster

Run on the master machine (192.168.1.121):

    kubeadm init --kubernetes-version=v1.23.1 --service-cidr=10.1.0.0/16 --pod-network-cidr=10.244.0.0/16

--kubernetes-version: selects a specific Kubernetes version (default "stable-1")

--service-cidr: the IP address range used for service VIPs (default "10.96.0.0/12")

--pod-network-cidr: the IP address range for the Pod network. If set, a CIDR is allocated to every node automatically

After the command runs, images are pulled from the official registry k8s.gcr.io. Users on an internal network need to point kubeadm at an internal registry instead (--image-repository).
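The same settings can also be kept in a kubeadm configuration file instead of command-line flags, which is easier to version and reuse. The sketch below writes a kubeadm.k8s.io/v1beta3 ClusterConfiguration with the values used above. The helper name write_kubeadm_config and the registry registry.example.internal/k8s are placeholders, not real endpoints.

```shell
#!/bin/sh
# write_kubeadm_config OUT REPO
# Hypothetical helper: writes a ClusterConfiguration equivalent to the
# --kubernetes-version / --service-cidr / --pod-network-cidr /
# --image-repository flags.
write_kubeadm_config() {
    out=$1 repo=$2
    cat > "$out" <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.23.1
imageRepository: ${repo}
networking:
  serviceSubnet: 10.1.0.0/16
  podSubnet: 10.244.0.0/16
EOF
}
```

A possible usage is `write_kubeadm_config kubeadm.yaml registry.example.internal/k8s` followed by `kubeadm init --config kubeadm.yaml`.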

After the master node has been initialized, it prints the following:

    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Alternatively, if you are the root user, you can run:

      export KUBECONFIG=/etc/kubernetes/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 192.168.1.121:6443 --token xeqz0h.w0vp4e1vjnt2zs1v \
        --discovery-token-ca-cert-hash sha256:77363daea05baa04513f4317d69d783c785f6c58a8212c3238cad52040ca5dbe

Run the join command on the worker node (192.168.1.122):

    kubeadm join 192.168.1.121:6443 --token xeqz0h.w0vp4e1vjnt2zs1v \
        --discovery-token-ca-cert-hash sha256:77363daea05baa04513f4317d69d783c785f6c58a8212c3238cad52040ca5dbe

Install a network plugin:

This example installs flannel: https://github.com/flannel-io/flannel

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Check the status of the system pods

kubectl get pods -n kube-system
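Instead of re-running the command until everything shows Running, `kubectl wait` can block until the nodes and system pods report Ready. This is only a sketch. The wrapper name wait_for_cluster and the 300s default timeout are assumptions for illustration.

```shell
#!/bin/sh
# wait_for_cluster [TIMEOUT]
# Hypothetical helper: blocks until all nodes, then all kube-system pods,
# have the Ready condition, or until TIMEOUT (default 300s) expires.
wait_for_cluster() {
    timeout=${1:-300s}
    kubectl wait --for=condition=Ready node --all --timeout="$timeout" &&
        kubectl wait --for=condition=Ready pod --all -n kube-system --timeout="$timeout"
}
```

Nodes stay NotReady until a network plugin is installed, so this is meant to be run after the flannel manifest has been applied.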

Deploy a test service to verify the cluster state

    [root@k8s-master kubernetes]# kubectl create deployment nginx --image=nginx
    deployment.apps/nginx created

    [root@k8s-master kubernetes]# kubectl expose deployment nginx --port=80 --type=NodePort
    service/nginx exposed

    [root@k8s-master kubernetes]# kubectl get svc
    NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
    kubernetes   ClusterIP   10.1.0.1       <none>        443/TCP        25h
    nginx        NodePort    10.1.167.250   <none>        80:30700/TCP   25h
    [root@k8s-master kubernetes]# curl 10.1.167.250
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
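The curl above goes through the ClusterIP, which only works from inside the cluster. Since the service is of type NodePort, it can also be reached from the MacBook through a node IP. A hedged sketch that looks up the allocated port with a jsonpath query is shown below. The helper name nodeport_url is hypothetical.

```shell
#!/bin/sh
# nodeport_url SERVICE NODE_IP
# Hypothetical helper: prints the http URL at which SERVICE is reachable
# through its first NodePort on NODE_IP.
nodeport_url() {
    svc=$1 node_ip=$2
    port=$(kubectl get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}') || return 1
    [ -n "$port" ] || return 1
    echo "http://${node_ip}:${port}"
}
```

For the service listed above, `curl "$(nodeport_url nginx 192.168.1.122)"` would request http://192.168.1.122:30700.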

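Optionally deploy the dashboard. It is of little use for local development and debugging, so installing it is up to you.

Install the dashboard: https://github.com/kubernetes/dashboard

2. Debugging environment (the local development environment is assumed to be installed already)

We use GoLand + delve for remote debugging.

The node that runs the component to be debugged needs a Go environment. Mind the correspondence between the Go version and the Kubernetes version. This walkthrough debugs kube-apiserver from 1.23, the latest version at the time of writing.

1.23 requires Go 1.17+

We install Go 1.17.5 on 192.168.1.121, the node where kube-apiserver runs.

    [root@k8s-master app]# wget https://go.dev/dl/go1.17.5.linux-amd64.tar.gz
    [root@k8s-master app]# tar zxf go1.17.5.linux-amd64.tar.gz
    # add env
    [root@k8s-master app]# vim /etc/profile
    # append the following
    #add go
    export GOROOT=/app/go
    export PATH=$PATH:$GOROOT/bin
    export GOPATH=/root/go
    export PATH=$PATH:$GOPATH/bin
    [root@k8s-master app]# source /etc/profile
    # verify the version
    [root@k8s-master app]# go version
    go version go1.17.5 linux/amd64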
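The version requirement can also be checked in a script rather than by eye. The sketch below parses the output of `go version` and compares it against a minimum using `sort -V`. It assumes a sort that supports `-V` (GNU coreutils does). The function name go_at_least is made up for illustration.

```shell
#!/bin/sh
# go_at_least REQUIRED
# Hypothetical helper: succeeds when the installed Go version is >= REQUIRED.
go_at_least() {
    required=$1
    # "go version" prints e.g. "go version go1.17.5 linux/amd64";
    # the third field without its "go" prefix is the version number.
    current=$(go version | awk '{ sub(/^go/, "", $3); print $3 }')
    # With version sort, the requirement must come first (or be equal).
    lowest=$(printf '%s\n%s\n' "$required" "$current" | sort -V | head -n 1)
    [ "$lowest" = "$required" ]
}
```

For example, `go_at_least 1.17 || echo "Go is too old for Kubernetes 1.23"`.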

Install delve

Reference: https://github.com/go-delve/delve/tree/master/Documentation/installation

    # Install the latest release:
    $ go install github.com/go-delve/delve/cmd/dlv@latest

    # Install at tree head:
    $ go install github.com/go-delve/delve/cmd/dlv@master

    # Install at a specific version or pseudo-version:
    $ go install github.com/go-delve/delve/cmd/dlv@v1.7.3
    $ go install github.com/go-delve/delve/cmd/dlv@v1.7.4-0.20211208103735-2f13672765fe

The control-plane components of a kubeadm cluster run as static pods, so moving a manifest out of the manifests directory makes the kubelet tear down the corresponding static pod. We can therefore stop the cluster's kube-apiserver by moving its manifest away.

    # Enter kubeadm's default configuration path
    [root@k8s-master ~]# cd /etc/kubernetes
    # Create a backup directory for the static pod manifest
    [root@k8s-master kubernetes]# mkdir manifests-down
    [root@k8s-master kubernetes]# mv ./manifests/kube-apiserver.yaml ./manifests-down/
    # Check that the apiserver pod has stopped; with the apiserver down, kubectl itself can no longer connect
    [root@k8s-master kubernetes]# kubectl get po --all-namespaces
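Since this step is repeated every time you switch between the stock apiserver and the debug build, it can be wrapped in two small helpers. This is a sketch assuming the same directory layout as above. The function names stop_static_pod and start_static_pod are hypothetical.

```shell
#!/bin/sh
# stop_static_pod NAME [BASE]
# Hypothetical helper: moves BASE/manifests/NAME.yaml into
# BASE/manifests-down/ so the kubelet removes the static pod.
stop_static_pod() {
    name=$1 base=${2:-/etc/kubernetes}
    mkdir -p "$base/manifests-down" &&
        mv "$base/manifests/$name.yaml" "$base/manifests-down/"
}

# start_static_pod NAME [BASE]
# Moves the manifest back so the kubelet recreates the pod.
start_static_pod() {
    name=$1 base=${2:-/etc/kubernetes}
    mv "$base/manifests-down/$name.yaml" "$base/manifests/"
}
```

`stop_static_pod kube-apiserver` before a debug session and `start_static_pod kube-apiserver` afterwards restores the original cluster. The backup directory has to live outside manifests, otherwise the kubelet would still pick the file up.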

Compile the K8s source locally. The local MacBook Pro runs darwin, so the Linux amd64 apiserver binary has to be cross-compiled.

Reference build command:

make all GOGCFLAGS="-N -l" GOLDFLAGS=""

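GOLDFLAGS="" must be added. Otherwise the default linker flags strip the debug information, remote debugging does not work, and delve fails with: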

could not launch process: could not open debug info
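When building on macOS, the target platform has to be stated explicitly. The Kubernetes Makefile accepts KUBE_BUILD_PLATFORMS for that and WHAT to restrict the build to one component. The following is a sketch of such an invocation, not the author's exact command. The wrapper name build_debug_apiserver is made up.

```shell
#!/bin/sh
# build_debug_apiserver
# Hypothetical wrapper; run it from the root of the kubernetes source tree.
# It builds only kube-apiserver, for linux/amd64, with compiler
# optimizations and inlining disabled (-N -l) and with empty linker flags
# so the debug information is kept.
build_debug_apiserver() {
    make all WHAT=cmd/kube-apiserver \
        KUBE_BUILD_PLATFORMS=linux/amd64 \
        GOGCFLAGS="-N -l" GOLDFLAGS=""
}
```

Restricting the build with WHAT keeps the compile time down. Running `file` on the resulting binary should report it as not stripped if the debug information survived.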

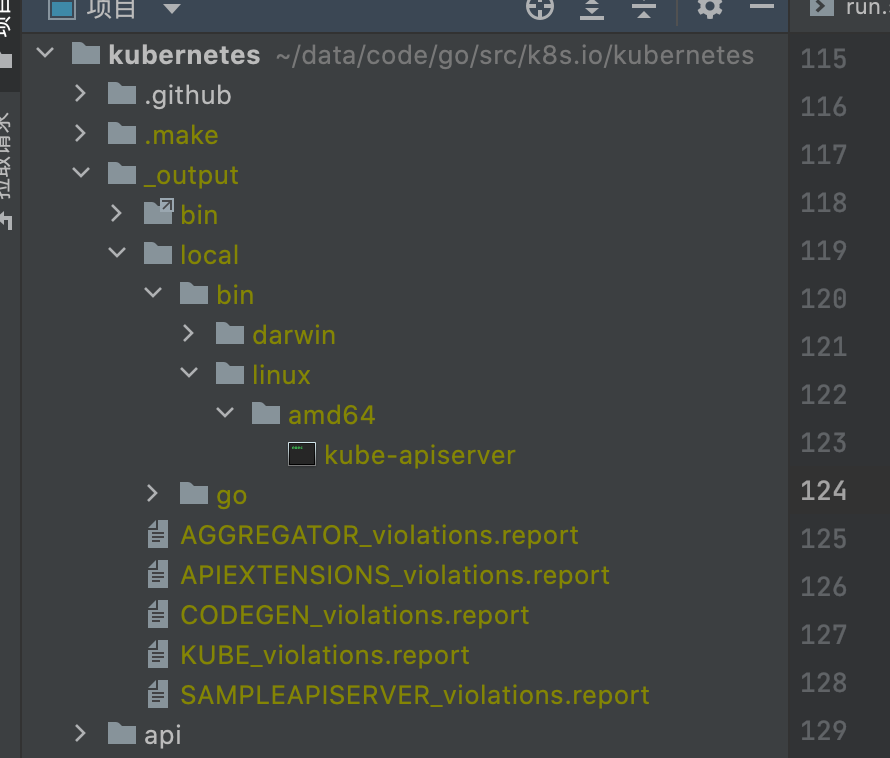

Solution reference: https://github.com/kubernetes/kubernetes/issues/77527

The layout of the build output directory is shown in the figure.

Upload the compiled binary to the master machine 192.168.1.121

scp ./_output/local/bin/linux/amd64/kube-apiserver root@192.168.1.121:/etc/kubernetes/mycode

Get the startup configuration from manifests-down/kube-apiserver.yaml

    cat manifests-down/kube-apiserver.yaml

Example configuration:

    apiVersion: v1
    kind: Pod
    metadata:
      annotations:
        kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 192.168.1.121:6443
      creationTimestamp: null
      labels:
        component: kube-apiserver
        tier: control-plane
      name: kube-apiserver
      namespace: kube-system
    spec:
      containers:
      - command:
        - kube-apiserver
        - --advertise-address=192.168.1.121
        - --allow-privileged=true
        - --authorization-mode=Node,RBAC
        - --client-ca-file=/etc/kubernetes/pki/ca.crt
        - --enable-admission-plugins=NodeRestriction
        - --enable-bootstrap-token-auth=true
        - --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
        - --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt
        - --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key
        - --etcd-servers=https://127.0.0.1:2379
        - --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt
        - --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt
        - --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key
        - --requestheader-allowed-names=front-proxy-client
        - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
        - --requestheader-extra-headers-prefix=X-Remote-Extra-
        - --requestheader-group-headers=X-Remote-Group
        - --requestheader-username-headers=X-Remote-User
        - --secure-port=6443
        - --service-account-issuer=https://kubernetes.default.svc.cluster.local
        - --service-account-key-file=/etc/kubernetes/pki/sa.pub
        - --service-account-signing-key-file=/etc/kubernetes/pki/sa.key
        - --service-cluster-ip-range=10.1.0.0/16
        - --tls-cert-file=/etc/kubernetes/pki/apiserver.crt
        - --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
        image: k8s.gcr.io/kube-apiserver:v1.23.1
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 192.168.1.121
            path: /livez
            port: 6443
            scheme: HTTPS
          initialDelaySeconds: 10
          periodSeconds: 10
          timeoutSeconds: 15
        name: kube-apiserver
        readinessProbe:
          failureThreshold: 3
          httpGet:
            host: 192.168.1.121
            path: /readyz
            port: 6443
            scheme: HTTPS
          periodSeconds: 1
          timeoutSeconds: 15
        resources:
          requests:
            cpu: 250m
        startupProbe:
          failureThreshold: 24
          httpGet:
            host: 192.168.1.121
            path: /livez
            port: 6443
            scheme: HTTPS
          initialDelaySeconds: 10
          periodSeconds: 10
          timeoutSeconds: 15
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certs
          readOnly: true
        - mountPath: /etc/pki
          name: etc-pki
          readOnly: true
        - mountPath: /etc/kubernetes/pki
          name: k8s-certs
          readOnly: true
      hostNetwork: true
      priorityClassName: system-node-critical
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      volumes:
      - hostPath:
          path: /etc/ssl/certs
          type: DirectoryOrCreate
        name: ca-certs
      - hostPath:
          path: /etc/pki
          type: DirectoryOrCreate
        name: etc-pki
      - hostPath:
          path: /etc/kubernetes/pki
          type: DirectoryOrCreate
        name: k8s-certs
    status: {}

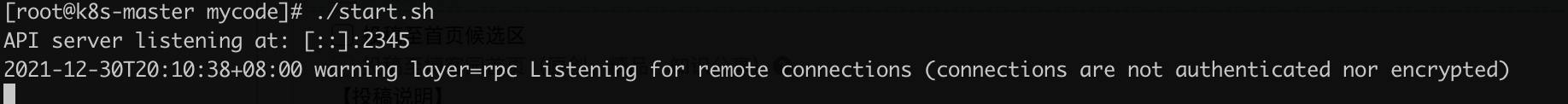

The assembled start command, using the default port 2345:

    dlv exec --listen=:2345 --headless=true --api-version=2 ./kube-apiserver -- \
        --advertise-address=192.168.1.121 \
        --allow-privileged=true \
        --authorization-mode=Node,RBAC \
        --client-ca-file=/etc/kubernetes/pki/ca.crt \
        --enable-admission-plugins=NodeRestriction \
        --enable-bootstrap-token-auth=true \
        --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt \
        --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt \
        --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key \
        --etcd-servers=https://127.0.0.1:2379 \
        --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt \
        --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key \
        --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
        --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt \
        --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key \
        --requestheader-allowed-names=front-proxy-client \
        --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt \
        --requestheader-extra-headers-prefix=X-Remote-Extra- \
        --requestheader-group-headers=X-Remote-Group \
        --requestheader-username-headers=X-Remote-User \
        --secure-port=6443 \
        --service-account-issuer=https://kubernetes.default.svc.cluster.local \
        --service-account-key-file=/etc/kubernetes/pki/sa.pub \
        --service-account-signing-key-file=/etc/kubernetes/pki/sa.key \
        --service-cluster-ip-range=10.1.0.0/16 \
        --tls-cert-file=/etc/kubernetes/pki/apiserver.crt \
        --tls-private-key-file=/etc/kubernetes/pki/apiserver.key

The argument list is long, so it is convenient to save the command in a start script, kube-apiserver-start.sh.

Run the start script:

    chmod +x kube-apiserver-start.sh
    ./kube-apiserver-start.sh
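Copying the flags out of the manifest by hand is error-prone. As a rough sketch, the start script can be generated from the manifest, assuming every apiserver flag appears as a list item of the form `- --flag=value` (as in the kubeadm-generated file). The function name gen_start_script is hypothetical.

```shell
#!/bin/sh
# gen_start_script MANIFEST OUT
# Hypothetical helper: extracts every "- --flag=value" list item from the
# static pod manifest and writes a dlv start script to OUT.
gen_start_script() {
    manifest=$1 out=$2
    {
        echo '#!/bin/sh'
        printf 'exec dlv exec --listen=:2345 --headless=true --api-version=2 ./kube-apiserver --'
        # Keep only list items whose value starts with "--".
        sed -n 's/^[[:space:]]*-[[:space:]]\{1,\}\(--[^[:space:]]*\)[[:space:]]*$/\1/p' "$manifest" |
            while read -r flag; do
                # Continue the command on a new line for each flag.
                printf ' \\\n    %s' "$flag"
            done
        echo
    } > "$out"
    chmod +x "$out"
}
```

For instance, `gen_start_script manifests-down/kube-apiserver.yaml mycode/kube-apiserver-start.sh` writes the script next to the uploaded binary. It should be started from that directory, because the binary is referenced as ./kube-apiserver.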

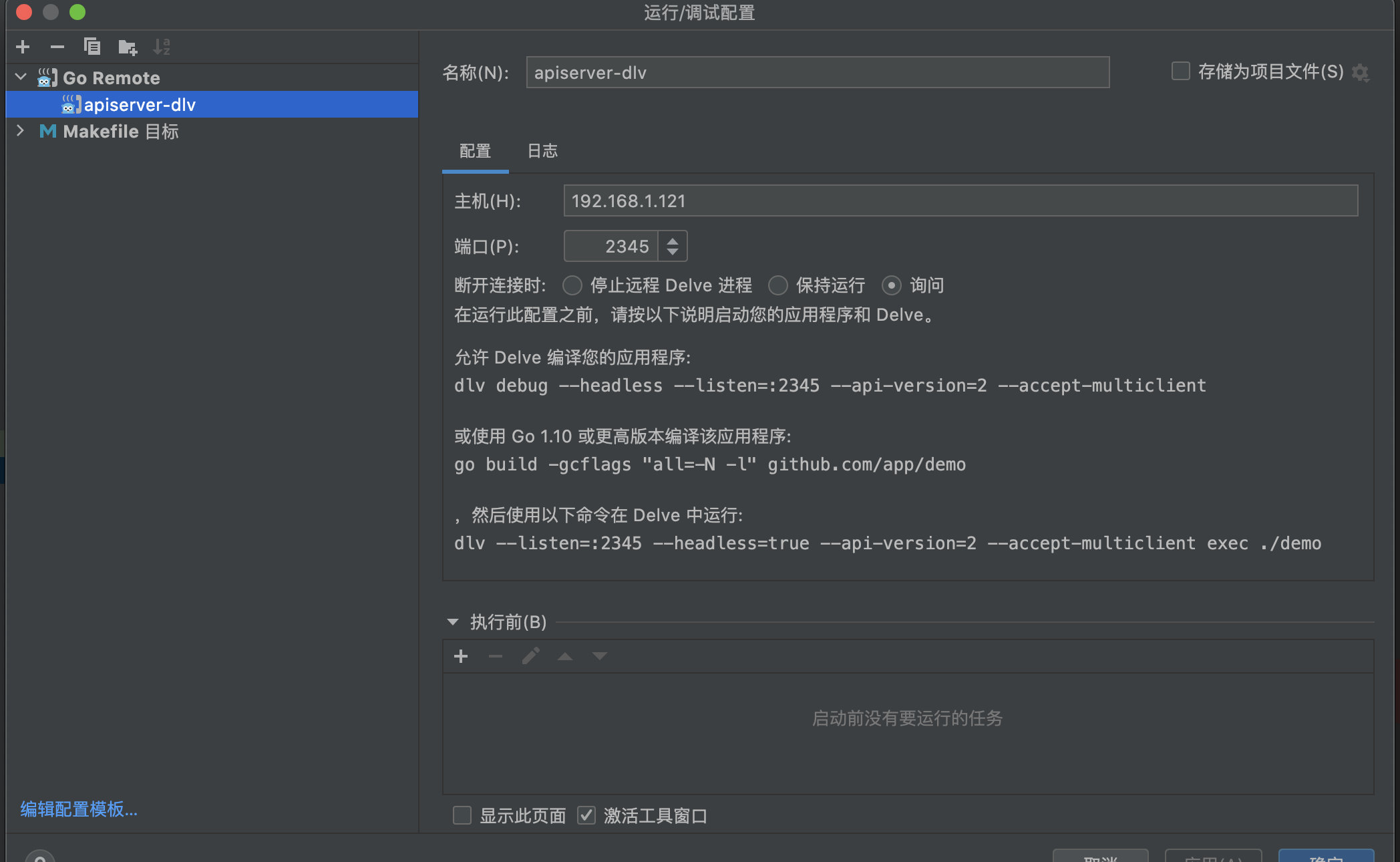

Switch to GoLand on the local machine and add a remote debugging configuration

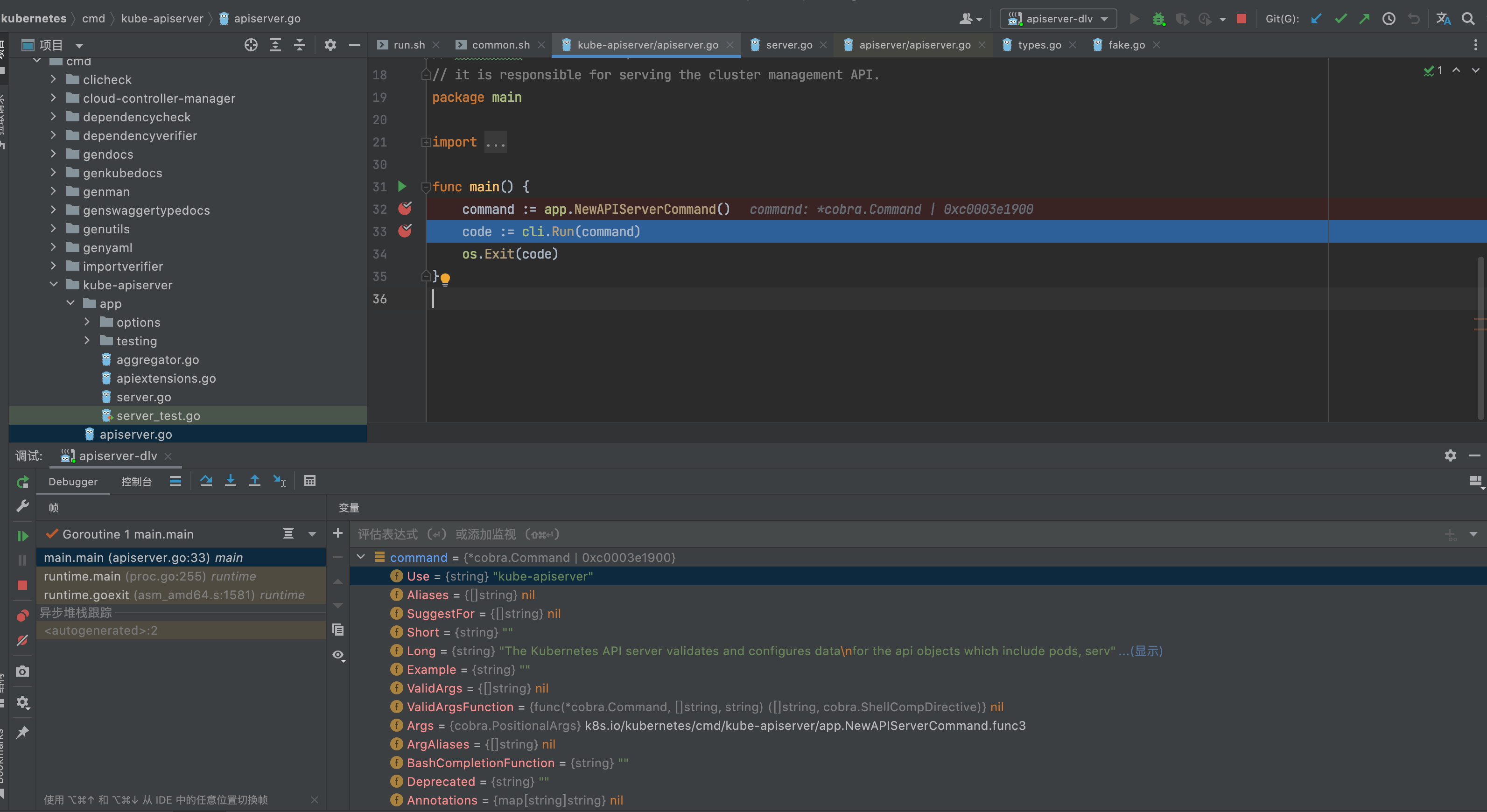

Set a breakpoint in the apiserver's main function and start the debugger. Execution stops at the breakpoint, and remote debugging can begin.